Introduction to ResNet

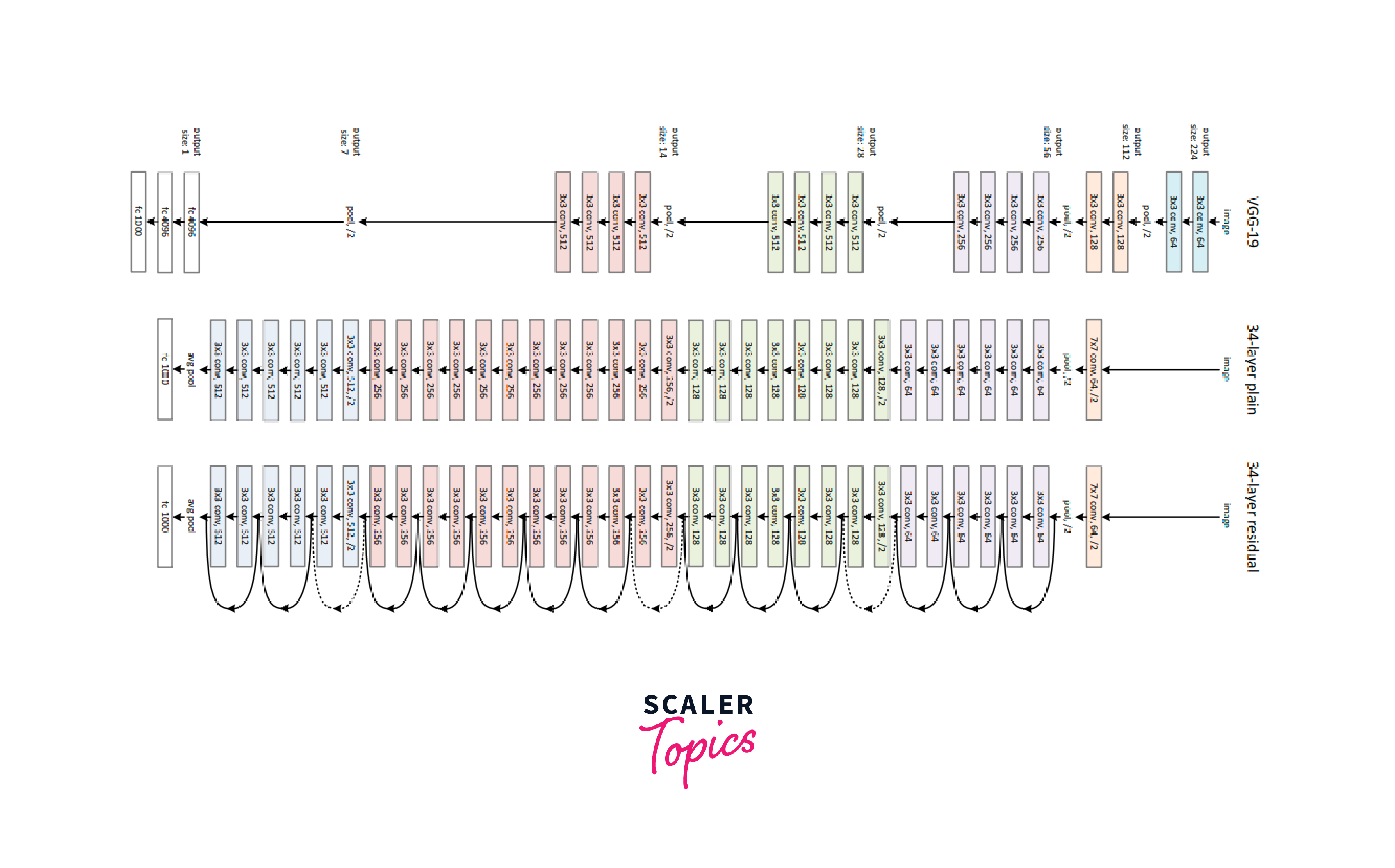

Overview

ResNet (Residual Neural Network) is a popular convolutional neural network (CNN) architecture introduced by Microsoft Research in 2015. It uses residual connections to alleviate the vanishing gradient problem in deep neural networks, which makes it possible to train very deep networks effectively. Instead of asking a stack of layers to learn the desired mapping directly, each block learns the residual function, the difference between the desired output and the block's input, and the input is added back through a "shortcut connection" that bypasses the intermediate layers. Because the shortcut carries the input straight to the block's output, gradients can also flow backward through it without passing through those layers.

ResNet is widely used in computer vision tasks such as image classification, object detection, and semantic segmentation. It achieved state-of-the-art results on several image classification benchmarks when it was introduced and has become one of the most popular architectures in deep learning. The architecture is composed of building blocks called "residual blocks", each containing several convolutional layers and non-linear activations. The network is trained using backpropagation and stochastic gradient descent. ResNet has also served as the basis for other architectures such as ResNeXt and Wide ResNet.

What is ResNet?

Convolutional Neural Networks (CNNs) have become the state-of-the-art models for image classification and other computer vision tasks. One of the most popular and successful architectures is ResNet (short for Residual Network), introduced in 2015 by Microsoft researchers. The key feature of ResNet is the use of residual connections, which allow the network to learn a residual function, that is, the difference between the desired output and the input to the layer.

The motivation for using residual connections is to ease the training of deep neural networks, where problems such as vanishing gradients can occur. If the best mapping for a block is close to the identity, it is easier for the layers to push the residual toward zero than to reproduce the identity function with a stack of non-linear layers. And because each block adds its input back to its output, gradients have a direct path through many layers, which greatly reduces the vanishing gradient problem.
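To make the "learn the residual" idea concrete, the sketch below fits a single linear layer in two ways with NumPy least squares: once to the full target mapping H(x), and once to the residual F(x) = H(x) - x, so that the block's output is x + F(x). The target is a made-up, near-identity mapping H(x) = (I + D)x with a small random matrix D; real residual blocks are non-linear convolutional stacks trained with SGD, so this only illustrates why a near-identity mapping is easier to express as a small residual.

```python
import numpy as np

rng = np.random.default_rng(0)
n_samples, dim = 200, 8

# Hypothetical target mapping that is close to identity: H(x) = (I + D) x, with D small.
D = 0.05 * rng.normal(size=(dim, dim))
X = rng.normal(size=(n_samples, dim))   # one input vector per row
Y = X @ (np.eye(dim) + D).T             # desired outputs H(x), one per row

# Plain layer: fit the full mapping, Y ≈ X @ W_plain.T
W_plain = np.linalg.lstsq(X, Y, rcond=None)[0].T

# Residual layer: fit only F(x) = H(x) - x; the block then outputs x + F(x)
W_res = np.linalg.lstsq(X, Y - X, rcond=None)[0].T

print("||W_plain|| =", round(float(np.linalg.norm(W_plain)), 3))  # near ||I||: must encode the identity
print("||W_res||   =", round(float(np.linalg.norm(W_res)), 3))    # small: only the correction D
```

The plain layer has to encode the whole identity matrix, while the residual layer only needs the small correction, and setting its weights to zero already gives an exact identity block.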

ResNet has been widely used in various applications, including image classification, object detection, and semantic segmentation. It has also been the basis for many other neural network architectures, such as ResNeXt and Wide ResNet.

The Need for ResNet

- ResNet (Residual Neural Network) was introduced to address the difficulty of training very deep neural networks, including the problem of vanishing gradients and the related "degradation" problem, in which adding more layers to a plain network increases its training error.

- Vanishing gradients occur when the gradients of the loss function with respect to the parameters become very small as they are backpropagated through many layers, because the chain rule multiplies together many factors smaller than one.

- This makes learning difficult for the network, as the gradients reaching the early layers may be too small to change their parameters significantly.

- ResNet uses residual connections to alleviate this problem: each block learns the residual function, the difference between the desired output and the input to the block, and the input is added back to the block's output.

- This makes it possible to train very deep neural networks more effectively.

- ResNet is widely used in computer vision tasks such as image classification, object detection, and semantic segmentation.

- ResNet has achieved state-of-the-art results on several image classification benchmarks and has become one of the most popular architectures in deep learning.
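A toy calculation shows why the shortcut helps. Below, each "layer" is a scalar function, and the chain rule multiplies the local derivatives from the output back to the input. In a plain stack each factor is w·(1 − tanh²), which is at most w; in a residual stack the identity shortcut adds 1 to every factor. The scalar tanh layers and the value w = 0.5 are illustrative assumptions, not part of ResNet itself.

```python
import math


def input_gradient(depth, w, residual, x=1.0):
    """d(x_depth)/d(x_0) for a stack of scalar tanh layers, computed with the chain rule.

    Plain layer:    x_next = tanh(w * x)       local derivative w * (1 - tanh^2)
    Residual layer: x_next = x + tanh(w * x)   local derivative 1 + w * (1 - tanh^2)
    """
    grad = 1.0
    for _ in range(depth):
        t = math.tanh(w * x)
        branch = w * (1.0 - t * t)   # derivative of the layer's own transformation
        if residual:
            grad *= 1.0 + branch     # the identity shortcut contributes the "+ 1"
            x = x + t
        else:
            grad *= branch
            x = t
    return grad


for depth in (5, 10, 20):
    plain = input_gradient(depth, w=0.5, residual=False)
    res = input_gradient(depth, w=0.5, residual=True)
    print(f"depth {depth:2d}: plain {plain:.1e}, residual {res:.2f}")
```

The plain gradient shrinks roughly like 0.5 raised to the depth, while the residual gradient cannot fall below 1 here, because every factor is 1 plus a positive term.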

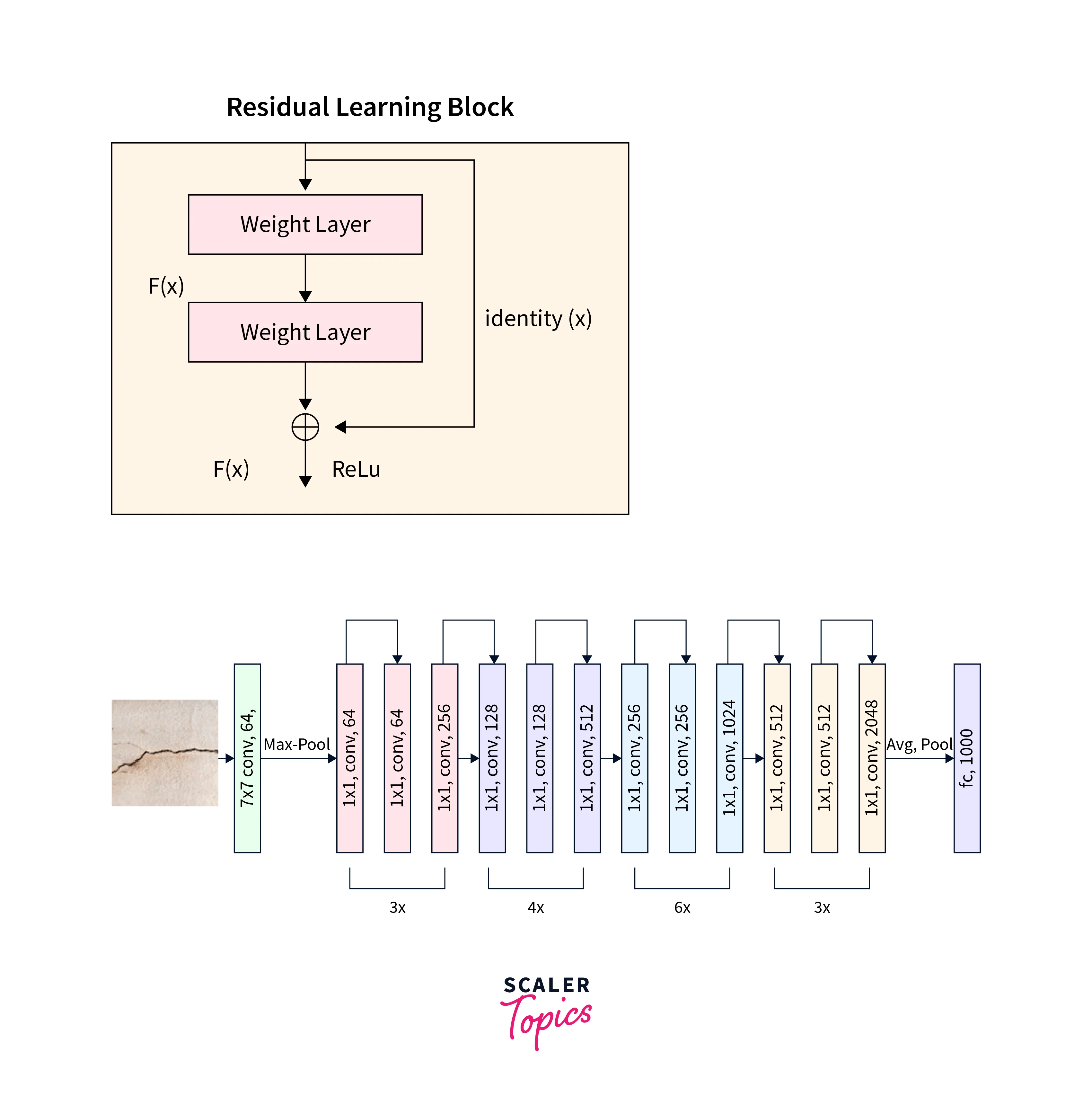

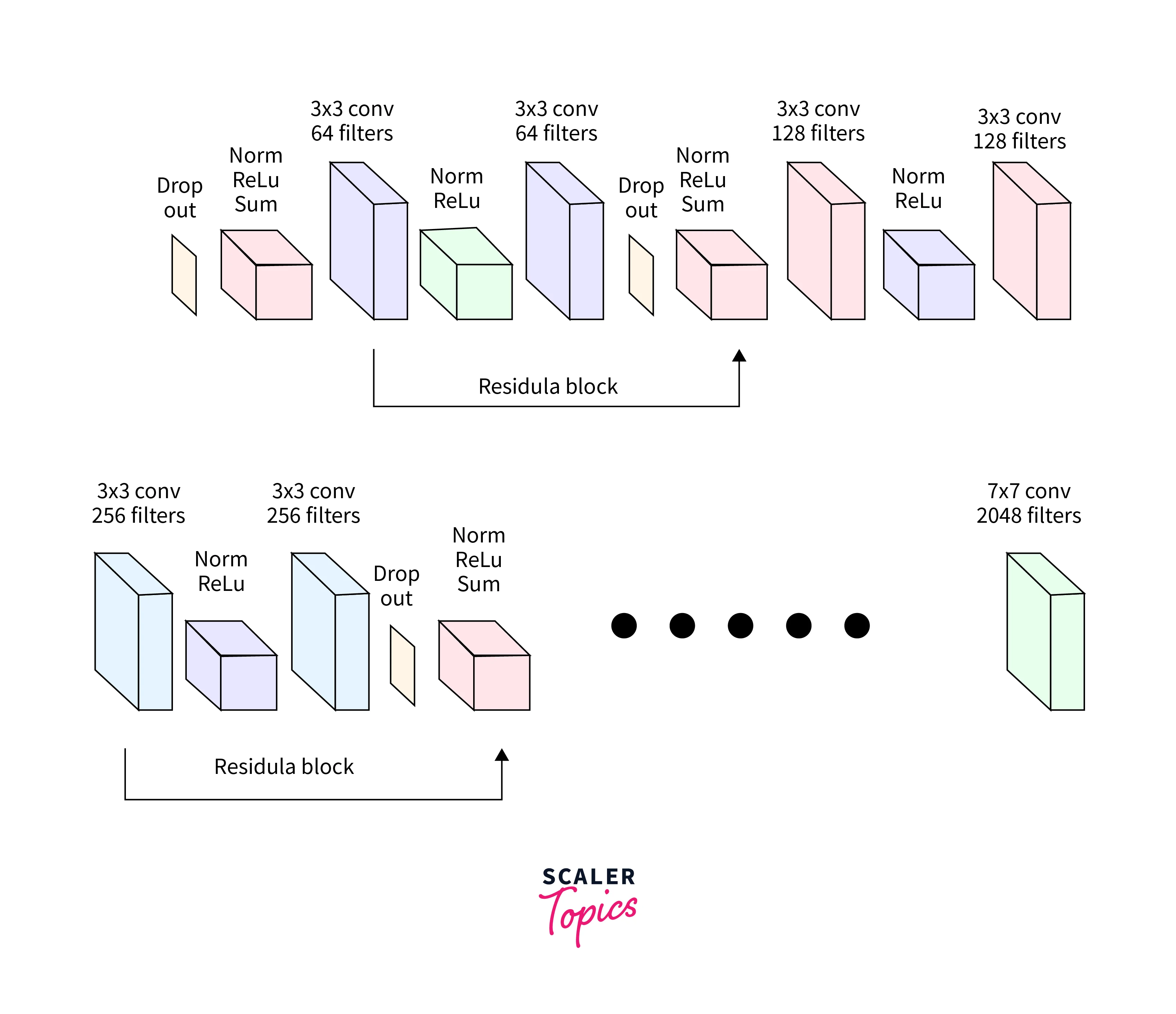

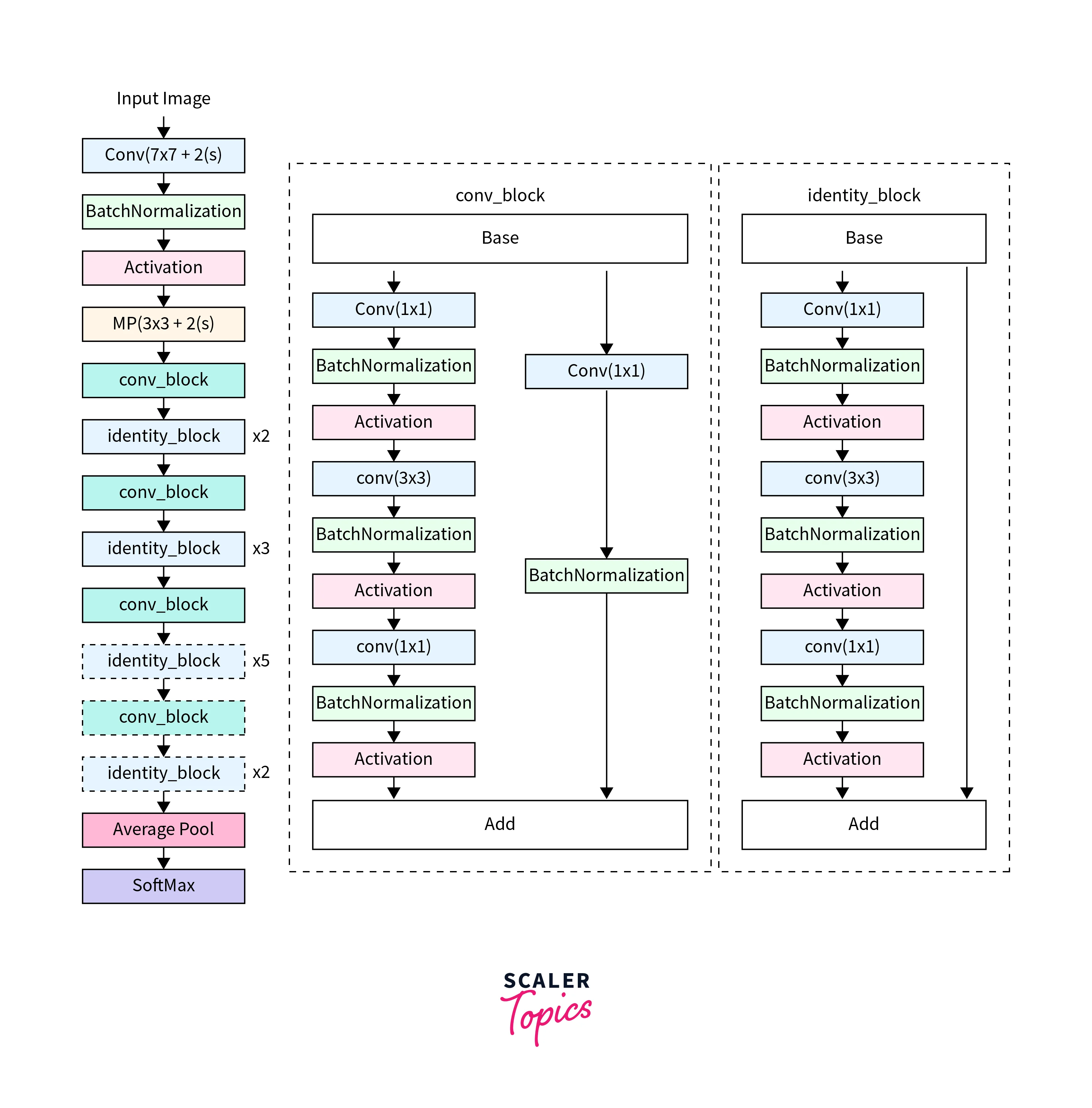

Residual Blocks

In the ResNet (Residual Neural Network) architecture, the building blocks are called "residual blocks". Each block contains several convolutional and non-linear activation layers, and a residual connection adds the block's input to its output. As a result, the layers only need to learn the residual function, the difference between the desired output and the input to the block. This makes the identity function easy to represent and lets gradients pass through many layers with much less attenuation.

A basic residual block contains two convolutional layers, each followed by batch normalization, with a non-linear activation function (such as ReLU) after the first. The first convolution is applied to the input of the block, and the second convolution is applied to the output of the first. The result is then added to the input of the block through the residual connection, and the activation function is applied to the sum. Deeper ResNets such as ResNet-50 use a three-layer "bottleneck" block instead.
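The block described above can be sketched in NumPy for a batch of feature maps in (N, H, W, C) layout. This is a forward pass only, with weights passed in by hand. The batch_norm helper uses per-channel batch statistics and omits the learned scale and shift parameters, and the block assumes the input already has as many channels as the convolutions produce, so the identity shortcut can be added directly.

```python
import numpy as np


def conv3x3(x, w):
    """3x3 convolution with 'same' zero padding and stride 1.

    Implemented as cross-correlation, as deep-learning libraries do.
    x: (N, H, W, C_in) feature maps, w: (3, 3, C_in, C_out) kernel -> (N, H, W, C_out)
    """
    n, height, width, _ = x.shape
    xp = np.pad(x, ((0, 0), (1, 1), (1, 1), (0, 0)))  # zero-pad height and width by 1
    out = np.zeros((n, height, width, w.shape[-1]))
    for i in range(3):
        for j in range(3):
            # Shifted window of the input times one kernel tap (a C_in x C_out matrix)
            out += xp[:, i:i + height, j:j + width, :] @ w[i, j]
    return out


def batch_norm(x, eps=1e-5):
    """Training-mode batch normalization per channel over (N, H, W), without learned scale/shift."""
    mean = x.mean(axis=(0, 1, 2), keepdims=True)
    var = x.var(axis=(0, 1, 2), keepdims=True)
    return (x - mean) / np.sqrt(var + eps)


def relu(x):
    return np.maximum(x, 0.0)


def residual_block(x, w1, w2):
    """conv -> BN -> ReLU -> conv -> BN, add the block input (shortcut), then ReLU."""
    y = relu(batch_norm(conv3x3(x, w1)))
    y = batch_norm(conv3x3(y, w2))
    return relu(y + x)  # residual connection F(x) + x, followed by the activation


rng = np.random.default_rng(0)
x = rng.random((2, 8, 8, 16))  # batch of 2 feature maps, 8x8, 16 channels
w1 = 0.1 * rng.normal(size=(3, 3, 16, 16))
w2 = 0.1 * rng.normal(size=(3, 3, 16, 16))
print(residual_block(x, w1, w2).shape)  # (2, 8, 8, 16): same shape as the input
```

Note that if the second convolution's weights are all zero, the residual branch contributes nothing and the block reduces to ReLU of its input, which is the identity for non-negative feature maps.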

Residual blocks are stacked on top of each other to form the final architecture; deeper networks use more residual blocks. The extra depth lets the network learn more complex features, which can improve its ability to distinguish between different classes. Later variants changed the block itself: ResNeXt splits each block into many parallel paths, and Wide ResNet uses more filters in each layer, both of which increase the representational capacity of the network.

ResNet Architecture

The ResNet (Residual Neural Network) architecture consists of a series of residual blocks, that is, groups of layers joined by a residual connection. Each residual block contains one or more convolutional layers, which extract features from the input data. The output of the convolutional layers is then added to the input of the block via the residual connection before being passed through an activation function.

The general form of a residual block can be represented as follows:

output = activation(input + conv(input))

Here "input" is the input to the block, "conv(input)" is the output of the block's convolutional layers, and "activation" is the activation function applied to the sum of input and conv(input). The output of the activation function is the output of the residual block.

Besides residual blocks, the ResNet architecture also includes pooling layers, which reduce the spatial dimensions of the feature maps, and a fully connected layer, which makes predictions from the final feature maps. The specific design varies between versions, but the original ImageNet models follow a common pattern: a convolutional stem with max pooling, a series of residual blocks, global average pooling, and a single fully connected classification layer.
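For the original ImageNet ResNet-50 with a 224 × 224 input, the strided and pooling layers shrink the feature maps stage by stage. The sketch below traces spatial size and channel count with the standard output-size formula floor((n + 2p − k) / s) + 1. The layer settings (7×7 stride-2 stem, 3×3 stride-2 max pooling, four bottleneck stages named conv2_x to conv5_x with 256/512/1024/2048 output channels) follow the original paper's ResNet-50; the downsampling stride is modeled on the 3×3 convolution for simplicity, and putting it on the first 1×1 convolution instead gives the same sizes.

```python
def conv_output_size(size, kernel, stride, padding):
    """Spatial output size of a convolution or pooling window: floor((n + 2p - k) / s) + 1."""
    return (size + 2 * padding - kernel) // stride + 1


def trace_resnet50_shapes(size=224):
    """Return (stage name, height/width, channels) for a ResNet-50 forward pass on a square image."""
    trace = [("input", size, 3)]
    size = conv_output_size(size, kernel=7, stride=2, padding=3)  # 7x7 stem convolution
    trace.append(("conv1 (7x7, stride 2)", size, 64))
    size = conv_output_size(size, kernel=3, stride=2, padding=1)  # 3x3 max pooling
    trace.append(("max pool (3x3, stride 2)", size, 64))
    # Four stages of bottleneck blocks; every stage after the first halves the resolution.
    for name, channels, stride in [("conv2_x", 256, 1), ("conv3_x", 512, 2),
                                   ("conv4_x", 1024, 2), ("conv5_x", 2048, 2)]:
        size = conv_output_size(size, kernel=3, stride=stride, padding=1)
        trace.append((name, size, channels))
    trace.append(("global average pool", 1, 2048))
    trace.append(("fully connected", 1, 1000))  # 1000 ImageNet classes
    return trace


for name, hw, channels in trace_resnet50_shapes():
    print(f"{name:<26} {hw:>3} x {hw:<3} x {channels}")
```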

Implementing ResNet with Keras

Importing Required Libraries

The code imports the necessary layers and models from the Keras library.

Creating Residual Block

It defines a function residual_block that creates a residual block with two convolutional layers and a residual connection.

Creating Input Tensor

It defines the input tensor for the model, which has shape (32, 32, 3) for 32 × 32 images with 3 channels (RGB).

First Convolutional Layer

It adds a convolutional layer and an activation layer to the model.

Loop for Residual Blocks

It adds three residual blocks to the model by calling the residual_block function in a loop.

Max Pooling, Flattening, and Dense Layer

It adds a max pooling layer to the model, flattens the output of the pooling layer, and adds a fully connected (Dense) layer.

Output Layer

It adds a softmax activation layer to produce the final class probabilities.

Creating the Model

It creates the model from the input and output tensors.

Compiling and Training the Model

It compiles the model with the Adam optimizer, the categorical cross-entropy loss function, and the accuracy metric, then trains it on the training data for ten epochs with a batch size of 64.

Evaluating the Model

It evaluates the model on the test data and calculates the loss and accuracy.

Making Predictions

It makes predictions on new data using the model's predict method.

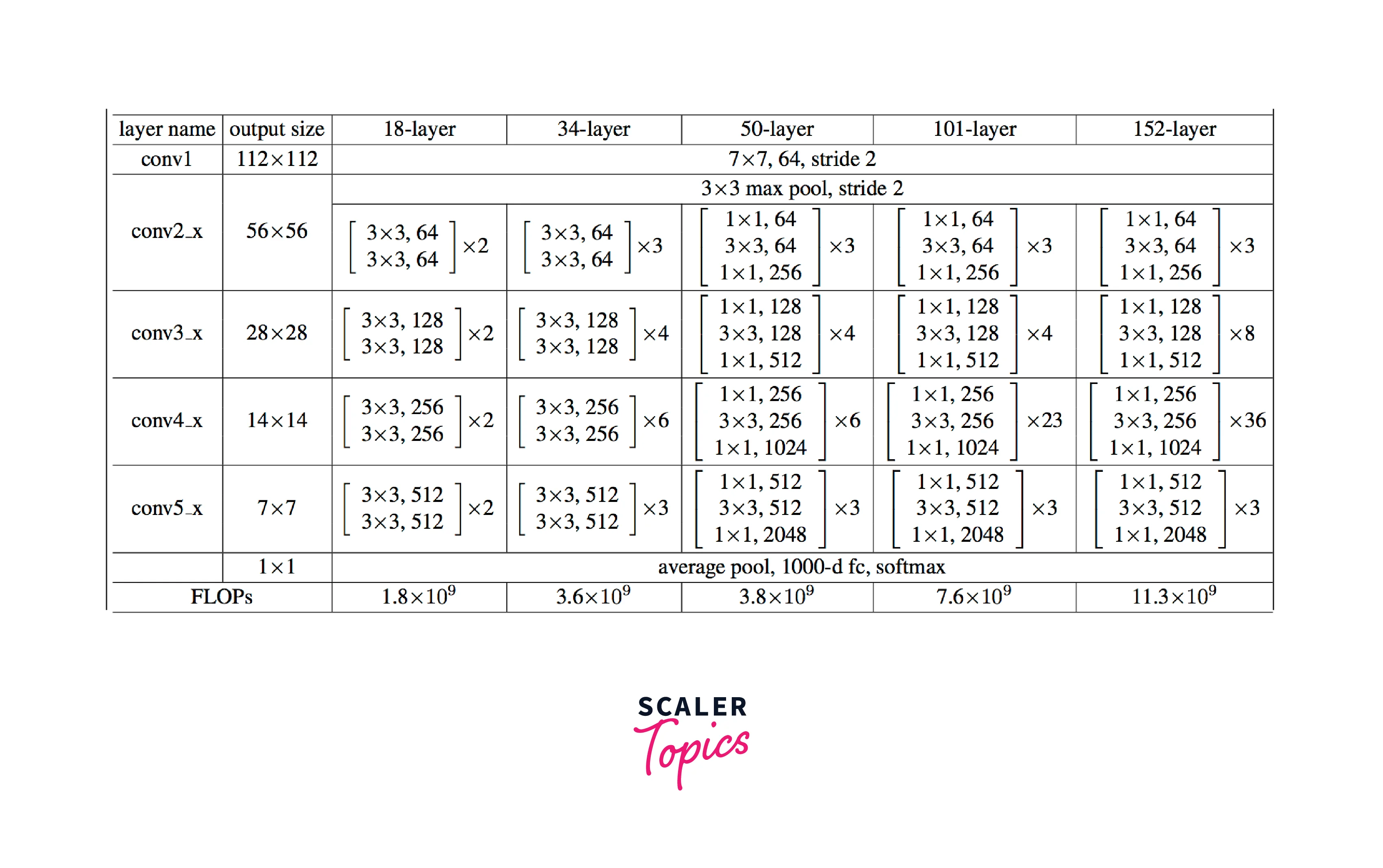
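A minimal Keras sketch of the steps above could look like the following. The original steps only fix the (32, 32, 3) input, three residual blocks, the Adam optimizer, categorical cross-entropy, ten epochs, and a batch size of 64; the filter count (64), kernel size (3), ReLU activations, pool size (2), and 10 output classes are assumptions chosen for illustration, and the helper names build_model, train_and_evaluate, and placeholder_data are hypothetical.

```python
import numpy as np
import keras
from keras import layers


def residual_block(x, filters, kernel_size=3):
    """Two convolutions plus an identity shortcut; assumes x already has `filters` channels."""
    shortcut = x
    y = layers.Conv2D(filters, kernel_size, padding="same")(x)
    y = layers.Activation("relu")(y)
    y = layers.Conv2D(filters, kernel_size, padding="same")(y)
    y = layers.Add()([y, shortcut])  # residual connection: F(x) + x
    return layers.Activation("relu")(y)


def build_model(input_shape=(32, 32, 3), num_classes=10, filters=64, num_blocks=3):
    inputs = keras.Input(shape=input_shape)                # input tensor
    x = layers.Conv2D(filters, 3, padding="same")(inputs)  # first convolutional layer
    x = layers.Activation("relu")(x)
    for _ in range(num_blocks):                            # loop adding residual blocks
        x = residual_block(x, filters)
    x = layers.MaxPooling2D(pool_size=2)(x)                # max pooling
    x = layers.Flatten()(x)                                # flatten
    x = layers.Dense(num_classes)(x)                       # fully connected layer
    outputs = layers.Activation("softmax")(x)              # softmax output layer
    return keras.Model(inputs=inputs, outputs=outputs)     # create the model


def train_and_evaluate(model, x_train, y_train, x_test, y_test, epochs=10, batch_size=64):
    """Compile with Adam + categorical cross-entropy, train, and return (test loss, test accuracy)."""
    model.compile(optimizer="adam", loss="categorical_crossentropy", metrics=["accuracy"])
    model.fit(x_train, y_train, epochs=epochs, batch_size=batch_size, verbose=0)
    loss, accuracy = model.evaluate(x_test, y_test, verbose=0)
    return loss, accuracy


def placeholder_data(n, input_shape=(32, 32, 3), num_classes=10, seed=0):
    """Random images and one-hot labels for a quick smoke test (not a real dataset)."""
    rng = np.random.default_rng(seed)
    x = rng.random((n, *input_shape), dtype=np.float32)
    y = keras.utils.to_categorical(rng.integers(0, num_classes, size=n), num_classes)
    return x, y
```

For example, with real images scaled to [0, 1] and one-hot labels, `model = build_model()` followed by `train_and_evaluate(model, x_train, y_train, x_test, y_test)` uses the ten-epoch, batch-size-64 setting by default, and `model.predict(x_new)` returns one row of class probabilities per image. Because the first convolution already outputs `filters` channels, every residual block can use a plain identity shortcut without a projection layer.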

ResNet Variants

Several variants of the ResNet architecture have been developed over the years. Here are some of the most popular ones:


ResNet-50:

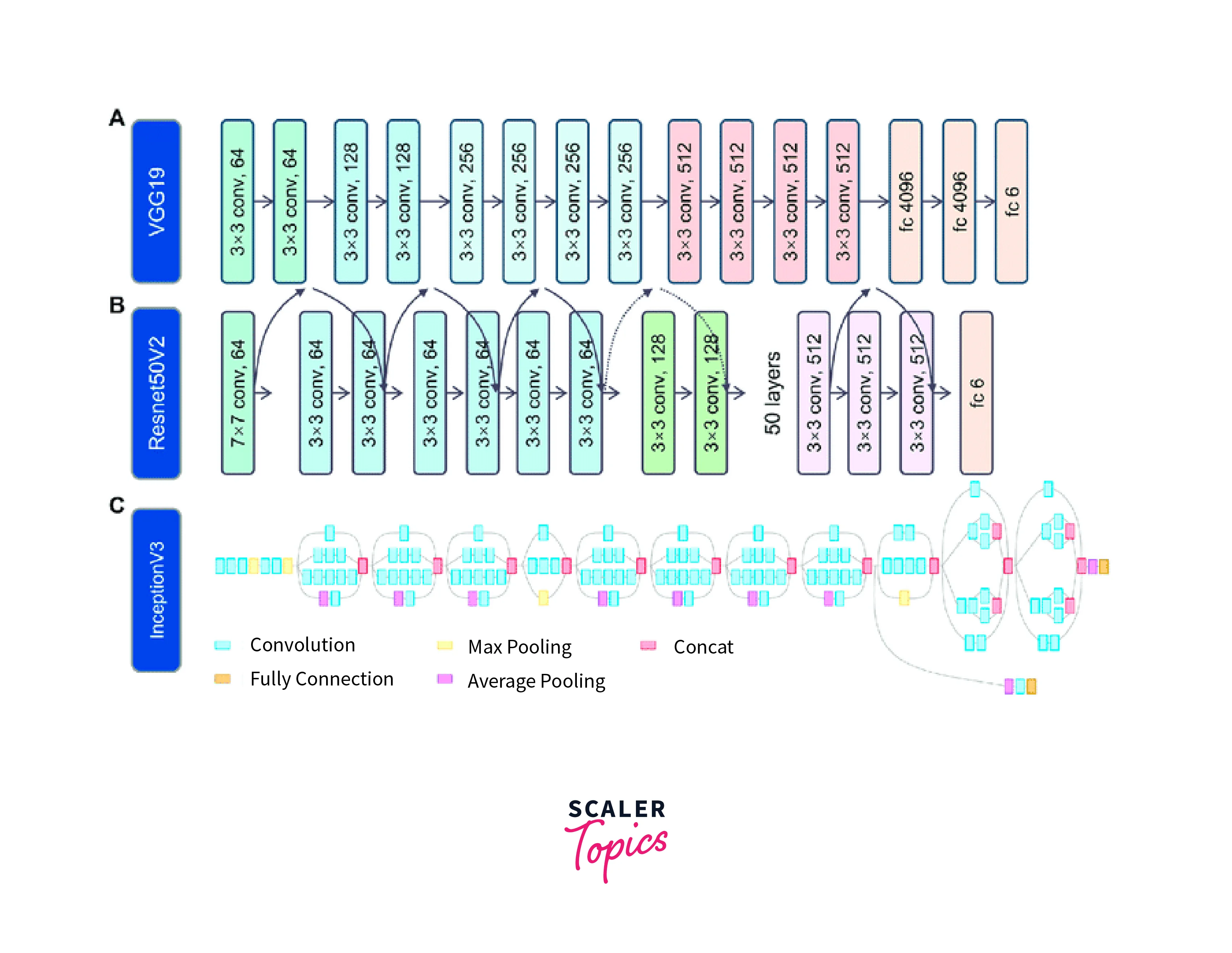

ResNet-50 is a 50-layer deep convolutional neural network from the family of residual networks that won first place in the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) classification task in 2015. It is trained on the ImageNet dataset of more than a million images. The "residual" in the name refers to residual connections, which bypass one or more layers and allow the network to learn identity mappings easily. This architecture is widely used for image classification tasks and as a backbone for many state-of-the-art computer vision models.


ResNet-101:

ResNet-101 is a deeper version of ResNet-50 with 101 layers. It was introduced by Microsoft Research in the same 2015 paper as ResNet-50 and ResNet-152. Like ResNet-50, it uses residual connections, which ease the training of such a deep network by letting information flow more directly between lower and higher layers. ResNet-101 is widely used in computer vision for image classification, object detection, and semantic segmentation.


ResNet-152:

ResNet-152 is a deep convolutional neural network with 152 layers that, like ResNet-50 and ResNet-101, uses residual connections to make training at this depth practical. Trained on the ImageNet dataset, it achieved state-of-the-art results in image classification and other computer vision tasks when it was introduced, and it is widely used as a feature extractor for other computer vision tasks.


ResNeXt:

ResNeXt is a variant of the ResNet architecture developed by Facebook AI Research, proposed in 2016 and published in 2017. Rather than only making the network wider or deeper, it increases the "cardinality" of each block, meaning the number of parallel paths, using a "split-transform-merge" building block. This lets the network learn more expressive feature representations at a similar computational cost, making it well suited to large-scale image classification tasks. It has been used as a backbone in various computer vision tasks such as object detection and semantic segmentation.


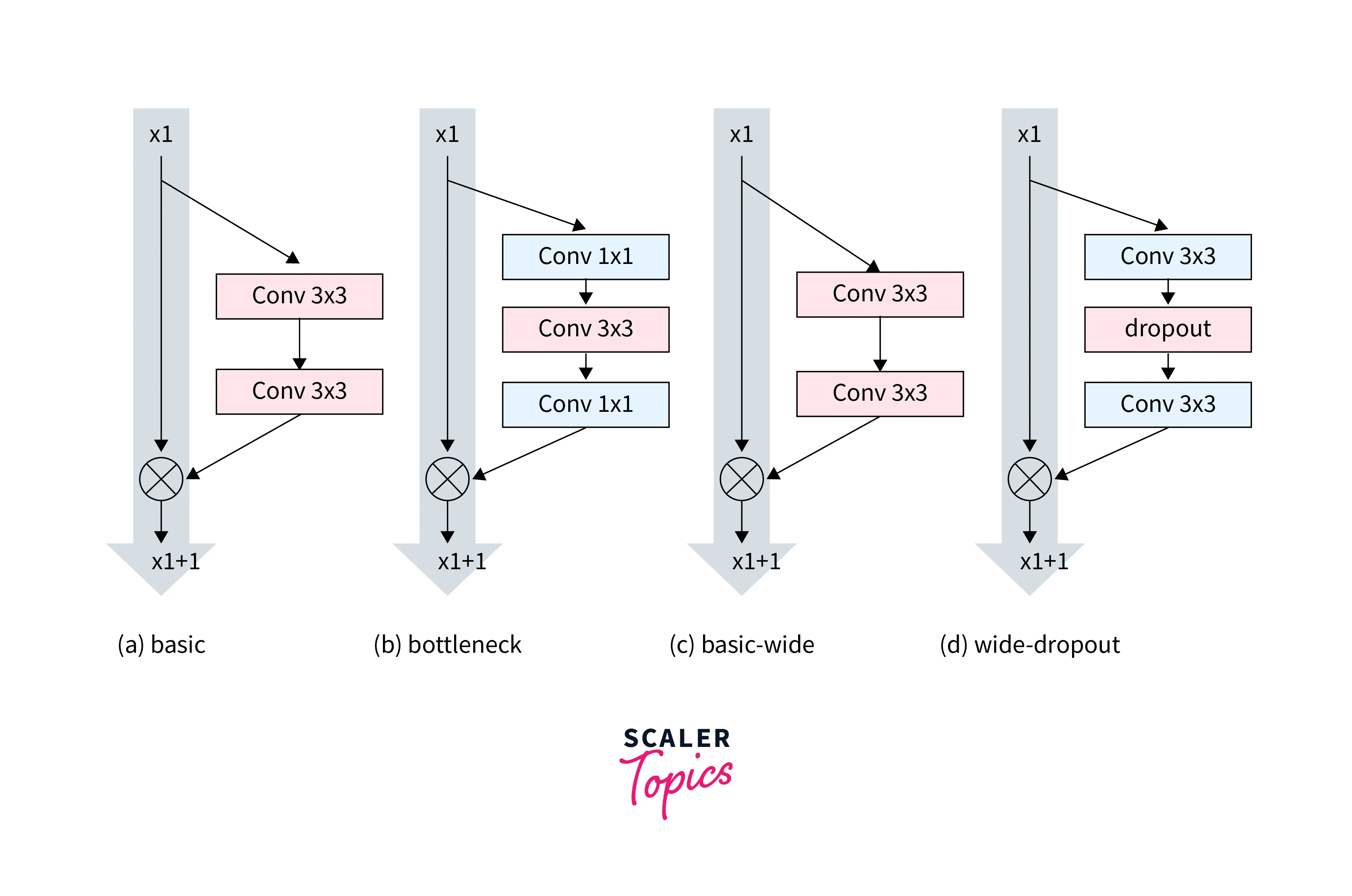

Wide ResNet:

Wide Residual Network (WRN) is a variant of the ResNet architecture that uses wider layers, meaning more filters in the convolutional layers. WRN was introduced by Zagoruyko and Komodakis in 2016; a widening factor k multiplies the number of filters in each convolutional layer. The idea is that widening a network can add learning capacity more effectively than making it deeper: the authors reported that relatively shallow wide networks can match or outperform much deeper, thinner ResNets. A wider network is more computationally expensive per layer, but it can often achieve better performance with the same number of layers.
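Because a convolution's weight count is proportional to (input channels × output channels), multiplying every layer's width by k multiplies a block's weights, and roughly its compute, by k². The sketch below does this arithmetic for a basic block of two 3×3 convolutions; the base width of 16 channels is an illustrative assumption, and biases and batch-normalization parameters are ignored.

```python
def basic_block_weights(base_width, k):
    """Weights in one basic block: two 3x3 convolutions with (base_width * k) channels in and out."""
    channels = base_width * k
    return 2 * (3 * 3 * channels * channels)


for k in (1, 2, 4, 10):
    print(f"k={k:2d}: {basic_block_weights(16, k):>9,d} weights")
```

Going from k = 1 to k = 10 makes the block 100 times larger while keeping the same depth, which is the trade-off Wide ResNet exploits: fewer, wider layers that parallelize well on GPUs.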


ResNet-50 v2:

ResNet-50 v2 is an updated version of the ResNet-50 architecture based on the "pre-activation" residual unit proposed by the original ResNet authors in 2016. Each block applies batch normalization and ReLU before its convolutions rather than after them, and no activation is applied after the addition, so the shortcut path remains a pure identity mapping. This change makes very deep networks easier to optimize and improves the model's accuracy. ResNet-50 v2 is widely used for image classification tasks and as a backbone for many state-of-the-art models in computer vision.


ResNet-101 v2:

ResNet-101 v2 is an updated version of the ResNet-101 architecture with the same pre-activation changes as ResNet-50 v2, which make the network easier to optimize and improve the accuracy of the model. Like ResNet-101, ResNet-101 v2 is used for image classification, object detection, and semantic segmentation tasks in computer vision.
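The difference between the original ("v1", post-activation) and "v2" (pre-activation) orderings can be shown with a short NumPy sketch. To keep it short, fully connected layers (matrix multiplications) stand in for the convolutions, and batch normalization is simplified to batch statistics without learned scale and shift. With the residual branch switched off, the v2 block returns its input unchanged, while the v1 block still applies a final ReLU to the sum.

```python
import numpy as np


def batch_norm(x, eps=1e-5):
    """Per-feature normalization with batch statistics (no learned scale/shift)."""
    return (x - x.mean(axis=0)) / np.sqrt(x.var(axis=0) + eps)


def relu(x):
    return np.maximum(x, 0.0)


def post_activation_block(x, w1, w2):
    """v1 ordering: weight -> BN -> ReLU -> weight -> BN, add the shortcut, then ReLU."""
    y = relu(batch_norm(x @ w1))
    y = batch_norm(y @ w2)
    return relu(x + y)


def pre_activation_block(x, w1, w2):
    """v2 ordering: BN -> ReLU -> weight -> BN -> ReLU -> weight, then add the untouched shortcut."""
    y = relu(batch_norm(x)) @ w1
    y = relu(batch_norm(y)) @ w2
    return x + y


rng = np.random.default_rng(0)
x = rng.normal(size=(4, 8))  # batch of 4 feature vectors, some entries negative
w1 = rng.normal(size=(8, 8))
w2 = np.zeros((8, 8))        # residual branch switched off
print(np.allclose(pre_activation_block(x, w1, w2), x))   # v2: shortcut passes x through unchanged
print(np.allclose(post_activation_block(x, w1, w2), x))  # v1: the final ReLU clips negative values
```

Because nothing sits on the v2 shortcut, stacking such blocks keeps a clean additive path from any block's input to the network's output.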

These are just a few examples of the many variants of the ResNet architecture that have been developed. Many other variations and modifications of the ResNet architecture have been proposed and used in various tasks.

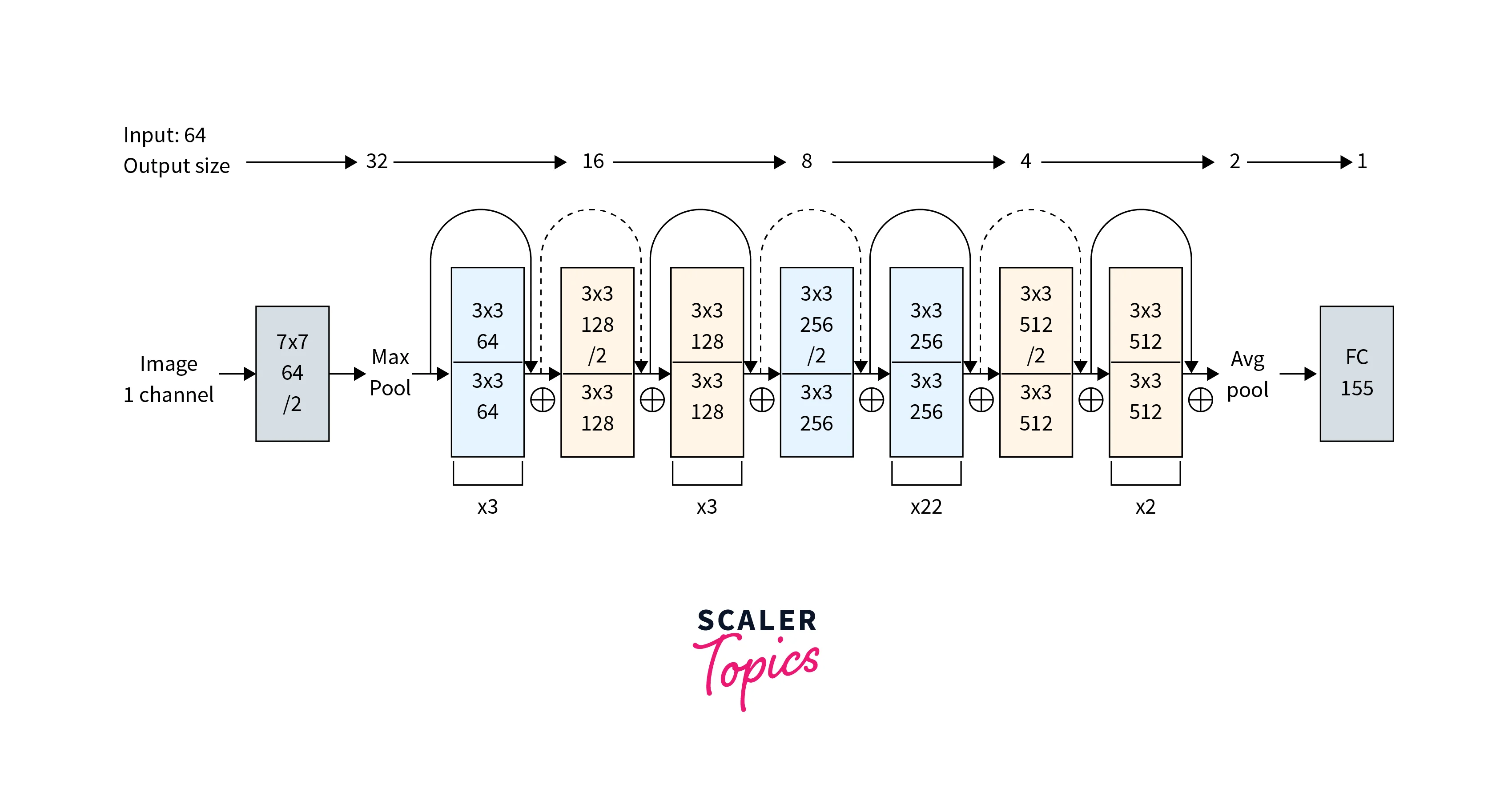

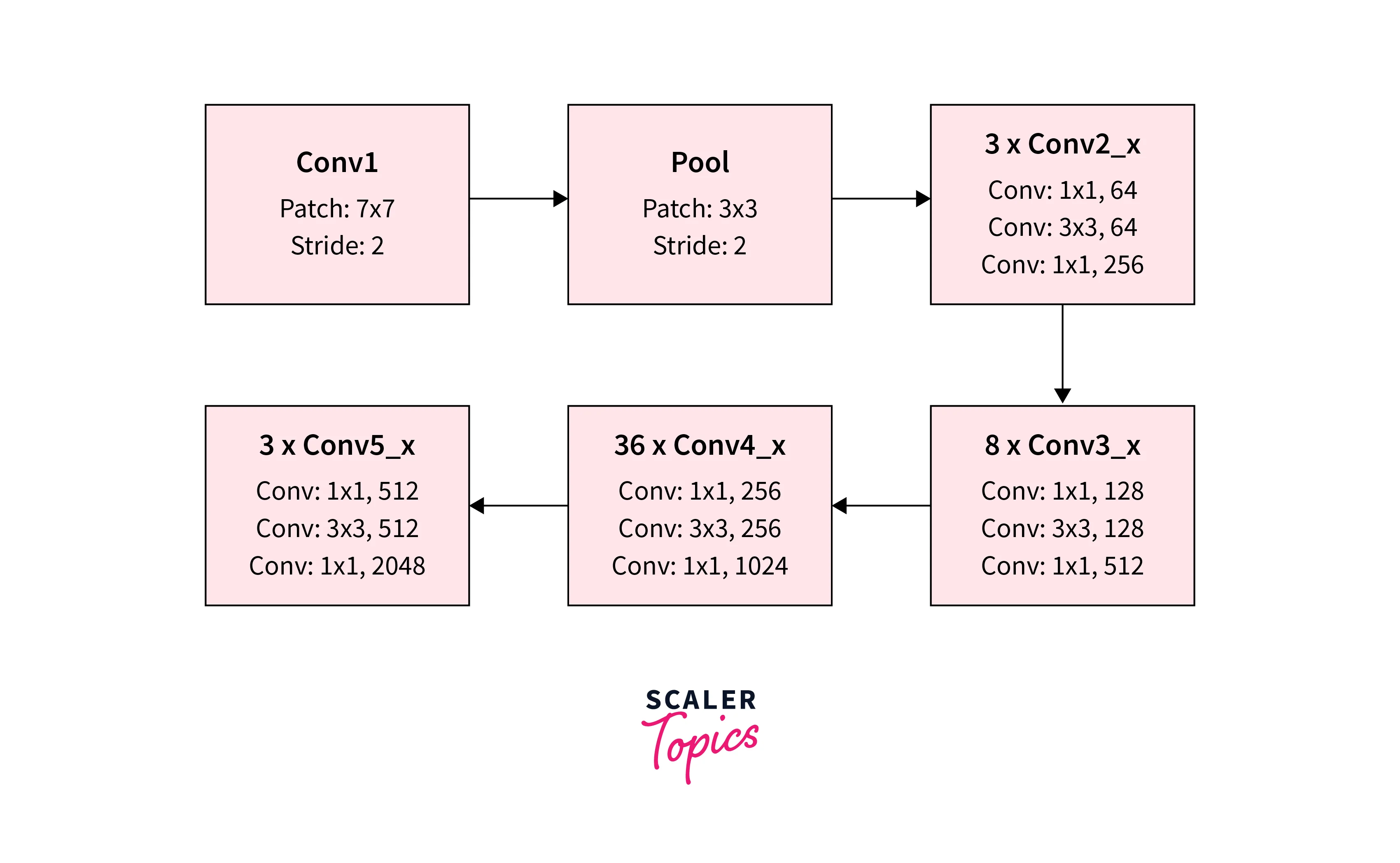

What is ResNet-50?

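The "50" in ResNet-50 counts its convolutional and fully connected layers: an initial 7×7 convolutional layer, 16 residual "bottleneck" blocks of three convolutional layers each (1×1, 3×3, and 1×1), and a final fully connected layer. Activation, batch normalization, and pooling layers are not counted. The residual blocks allow the network to learn residual functions, improving the model's ability to learn and generalize.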
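The layer count can be reproduced from the per-stage block counts in the original ResNet paper: ResNet-50 has 3, 4, 6, and 3 bottleneck blocks in its four stages (conv2_x to conv5_x). The same arithmetic covers ResNet-101 and ResNet-152, and ResNet-34, which uses two-convolution basic blocks. Projection shortcuts are left out of the count, matching how the names are derived.

```python
# Residual blocks per stage (conv2_x, conv3_x, conv4_x, conv5_x) and convolutions per block.
CONFIGS = {
    "ResNet-34": ([3, 4, 6, 3], 2),    # basic blocks: two 3x3 convolutions each
    "ResNet-50": ([3, 4, 6, 3], 3),    # bottleneck blocks: 1x1, 3x3, 1x1
    "ResNet-101": ([3, 4, 23, 3], 3),
    "ResNet-152": ([3, 8, 36, 3], 3),
}


def count_weight_layers(blocks_per_stage, convs_per_block):
    """Stem convolution + convolutions inside the residual blocks + final fully connected layer.

    Projection (1x1) shortcuts, batch normalization, activations, and pooling are not counted.
    """
    return 1 + convs_per_block * sum(blocks_per_stage) + 1


for name, (blocks, convs) in CONFIGS.items():
    print(f"{name}: {count_weight_layers(blocks, convs)} weight layers")
```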

ResNet-50 is typically trained on the ImageNet (ILSVRC 2012) dataset, which contains about 1.28 million training images in 1,000 classes. The trained model can then be fine-tuned on other datasets or used as a base model for other tasks.

ResNet-50 is a variant of the ResNet architecture widely used as a base model for various tasks, including image classification, object detection, and semantic segmentation. It has achieved strong results on several benchmarks, including the ImageNet Large Scale Visual Recognition Challenge (ILSVRC).

How Does ResNet-50 Compare with Shallower ResNets?

- ResNet-50 is a deeper variant of the ResNet architecture. It is generally more accurate, though more computationally expensive, than shallower models such as ResNet-18 and ResNet-34, or the small CIFAR-10 models ResNet-20 and ResNet-32.

- Like every ResNet, ResNet-50 learns residual functions through residual blocks, in which a shortcut connection adds the input of a group of layers to the output of those layers. This helps to mitigate the vanishing gradient problem that can occur when training deep neural networks. Unlike ResNet-18 and ResNet-34, ResNet-50 uses three-layer bottleneck blocks (1×1, 3×3, and 1×1 convolutions), which keep its computational cost manageable at this depth.

- Another benefit of the ResNet-50 architecture is its ability to scale to larger datasets and more complex tasks. It has a larger capacity than shallower models and can learn more intricate patterns and features in the data, which can improve performance on tasks such as image classification and object detection.

- However, the performance of a ResNet-50 model depends on many factors, such as the quality of the training data, the choice of optimizer and loss function, and the hyperparameters of the model. A fair comparison should account for these factors and measure the performance of the models on a specific task or dataset.

Code Examples and Diagrams

Loading ResNet-50 with ImageNet weights in Keras downloads the pre-trained model weights from the internet (on first use) and creates a model object that you can use for prediction.

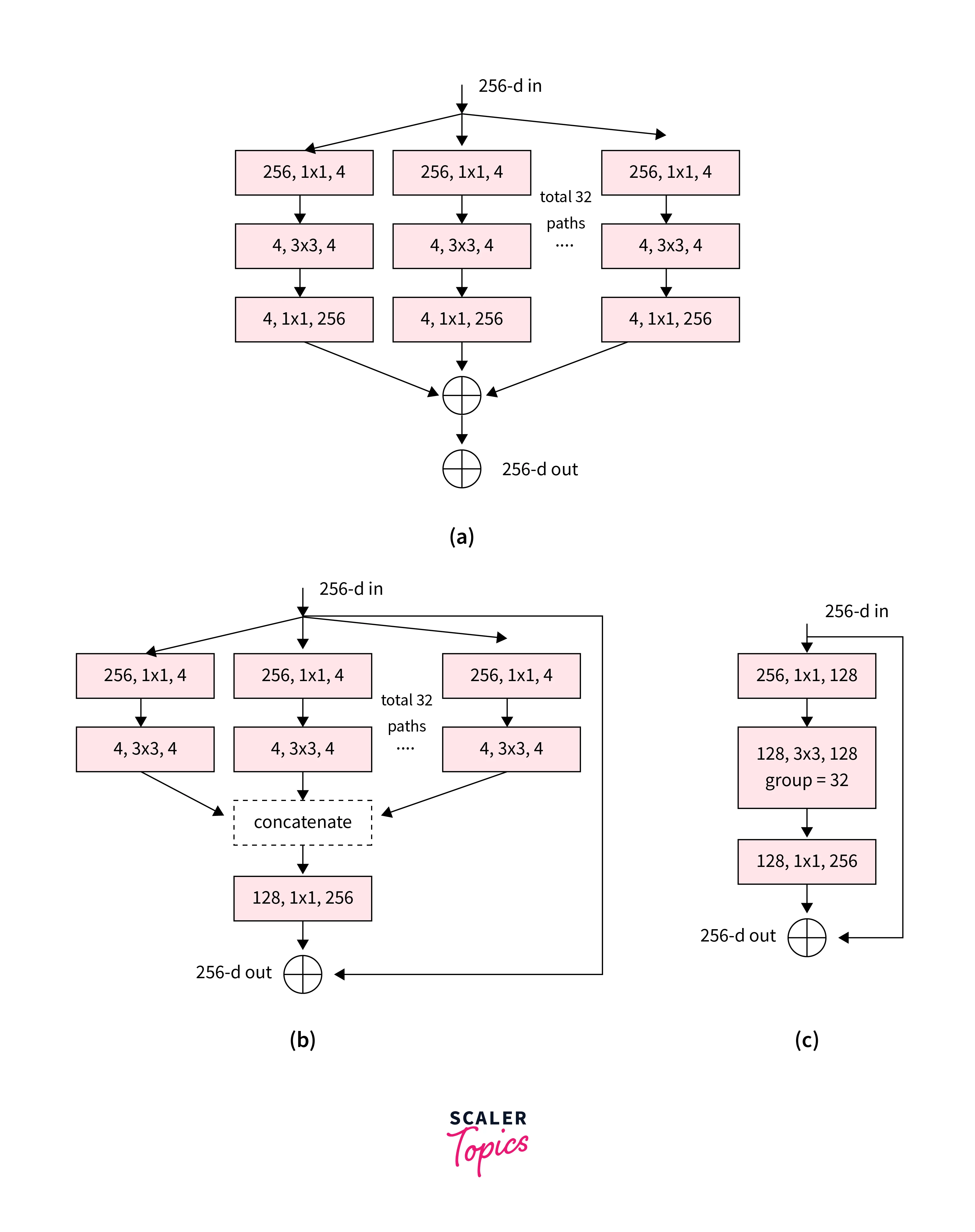

ResNeXt

ResNeXt is a convolutional neural network architecture from researchers at Facebook AI Research, proposed in 2016 and published at CVPR 2017. Its key innovation is the split-transform-aggregate block: the input is split across many parallel paths with identical structure, each path transforms it, and the outputs are summed. The number of paths is called the block's "cardinality". This allows the network to learn more complex and diverse feature representations than the standard residual blocks of the original ResNet architecture at a similar computational cost. ResNeXt achieved state-of-the-art results on several computer vision benchmarks when it was introduced and is widely used in practice.
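The comparison the ResNeXt paper uses to motivate cardinality can be checked with simple arithmetic: a ResNet bottleneck block on a 256-channel input (1×1 down to 64 channels, 3×3, 1×1 back to 256) and a ResNeXt block with 32 paths of width 4 have almost the same number of weights, roughly 70k each. The helper below counts convolution weights only, ignoring biases and batch normalization.

```python
def bottleneck_weights(in_channels, width, cardinality=1):
    """Weights in a bottleneck block with `cardinality` parallel paths of `width` channels each.

    Each path is 1x1 (in -> width), 3x3 (width -> width), 1x1 (width -> in).
    """
    per_path = in_channels * width + 3 * 3 * width * width + width * in_channels
    return cardinality * per_path


resnet_block = bottleneck_weights(256, width=64)                  # ResNet: one 64-channel path
resnext_block = bottleneck_weights(256, width=4, cardinality=32)  # ResNeXt: 32 paths of 4 channels
print(f"ResNet bottleneck: {resnet_block:,} weights")
print(f"ResNeXt (32 x 4d): {resnext_block:,} weights")
```

With the budget held roughly constant, ResNeXt's accuracy gains come from how the capacity is arranged across parallel paths rather than from extra parameters.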

Advantages of using ResNeXt include:

- Improved performance: ResNeXt can achieve better accuracy on image classification tasks than a ResNet of similar complexity.

- Increased capacity: ResNeXt has a larger capacity to learn features than ResNet, making it well suited to large-scale image classification tasks.

- Efficient computation: the parallel paths of the "split-transform-merge" block can be implemented as grouped convolutions, which parallelize well; actual training and inference speed depends on how efficiently the hardware and framework support grouped convolutions.

- Scalability: capacity can be increased by raising the cardinality, the number of parallel paths in the "split-transform-merge" block, instead of only adding depth or width, which the ResNeXt authors found to be a more effective way to improve accuracy.
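The split-transform-merge block can be written either as C separate paths whose outputs are summed or as one wide layer with a block-diagonal (grouped) middle transformation, which is what makes it straightforward to parallelize. The NumPy sketch below checks that the two forms give the same result. It uses fully connected layers in place of the 1×1 and 3×3 convolutions, omits batch normalization and the shortcut addition, and the sizes are arbitrary.

```python
import numpy as np


def relu(x):
    return np.maximum(x, 0.0)


def split_transform_merge(x, A, M, B):
    """Sum over C paths: each path reduces (A[i]), transforms (M[i]), and expands (B[i])."""
    return sum(relu(relu(x @ A[i]) @ M[i]) @ B[i] for i in range(len(A)))


def grouped_form(x, A, M, B):
    """Same computation with concatenated projections and a block-diagonal middle matrix."""
    C, d = M.shape[0], M.shape[1]
    reduce_all = np.concatenate(list(A), axis=1)  # (D, C*d): all paths' first layers side by side
    middle = np.zeros((C * d, C * d))
    for i in range(C):                            # each group only sees its own d channels
        middle[i * d:(i + 1) * d, i * d:(i + 1) * d] = M[i]
    expand_all = np.concatenate(list(B), axis=0)  # (C*d, D): stacking rows sums the paths
    return relu(relu(x @ reduce_all) @ middle) @ expand_all


rng = np.random.default_rng(0)
C, D, d = 8, 16, 4   # cardinality, block width, width of each path
A = rng.normal(size=(C, D, d))
M = rng.normal(size=(C, d, d))
B = rng.normal(size=(C, d, D))
x = rng.normal(size=(5, D))  # batch of 5 input vectors
print(np.allclose(split_transform_merge(x, A, M, B), grouped_form(x, A, M, B)))
```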

Disadvantages:

- High computational cost: large ResNeXt models require substantial computational resources, making them less suitable for devices with limited computational power.

- Complexity: ResNeXt is a more complex architecture than ResNet, which can make it harder to understand and implement.

- Large memory requirements: ResNeXt can require a lot of memory, making it less suitable for devices with limited memory.

- Difficulty in fine-tuning: ResNeXt can be harder to fine-tune than a standard ResNet, which may make it less convenient for tasks that rely on fine-tuning.

Conclusion

- Residual Neural Network (ResNet) is a convolutional neural network architecture that introduced the concept of residual connections.

- ResNet won the ImageNet Large Scale Visual Recognition Challenge in 2015 and is widely used for image classification and other computer vision tasks.

- There are several variants of ResNet, including the deeper ResNet-50, ResNet-101, ResNet-152, and ResNet-200, as well as wider (Wide ResNet) and multi-branch (ResNeXt) versions of the network.

- The choice of which ResNet variant to use will depend on the specific requirements of the task and available computational resources.