Parallel Programming in Scala

Overview

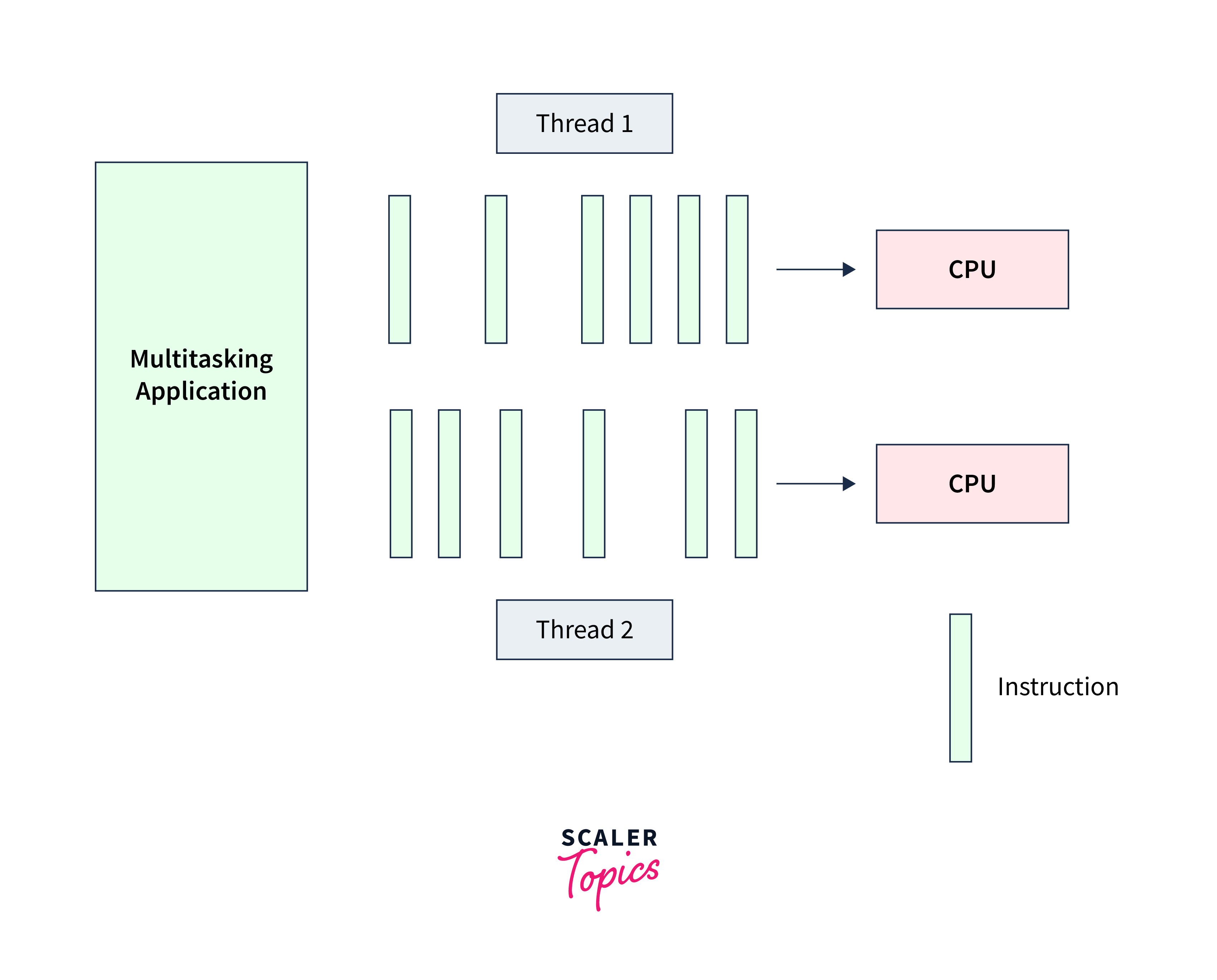

Parallel programming in Scala is a technique for executing multiple tasks simultaneously to improve performance, make better use of multi-core processors, and enhance the responsiveness of software applications. It can be achieved through various mechanisms and libraries. Because Scala runs on the Java Virtual Machine (JVM), it inherits the JVM's concurrency and parallelism features, and it adds higher-level tools on top of them: futures, parallel collections, and actors (provided today by libraries such as Akka).

Introduction

Parallel programming in Scala lets developers make full use of modern computing hardware. By executing multiple tasks simultaneously, it improves the performance and responsiveness of software applications. Scala runs on the Java Virtual Machine (JVM) and offers a rich set of concurrency and parallelism tools, including futures, parallel collections, and actors provided by libraries such as Akka. These tools let developers harness multi-core processors and, with libraries like Akka, distributed computing environments, which makes parallel programming an important skill in modern software development.

Difference between Parallel Programming and Concurrent Programming

Parallel programming and concurrent programming are two related but distinct approaches to handling multiple tasks or processes in a computer program. Here are the key differences between the two:

Definition:

- Parallel Programming: Parallel programming focuses on executing multiple tasks or processes at the same time, typically to improve performance by taking advantage of multi-core processors or distributed systems. Parallel tasks run simultaneously on different processing units.
- Concurrent Programming: Concurrent programming deals with managing multiple tasks whose executions overlap in time but do not necessarily run at the same instant; on a single core, for example, they are simply interleaved. It aims to use resources efficiently, keep programs responsive, and let tasks that overlap in time proceed without blocking each other.

Goal:

- Parallel Programming: The primary goal of parallel programming is faster execution: it distributes the workload across multiple processor cores or machines and executes the pieces simultaneously to improve overall performance.
- Concurrent Programming: The primary goal of concurrent programming is to let multiple tasks make progress independently, allowing for better resource utilization and responsiveness, particularly when tasks overlap in time.

Use Cases:

- Parallel Programming: It is commonly used for computationally intensive tasks, such as scientific simulations, rendering, data processing, and tasks that can be divided into subtasks that run simultaneously.
- Concurrent Programming: It is used in scenarios where multiple tasks need to run independently and possibly interact with one another. Examples include handling multiple user requests in a web server, managing user interfaces, and I/O operations.

Resource Utilization:

- Parallel Programming: Parallel programming aims to maximize the use of available hardware resources, such as multiple processor cores, by executing tasks in parallel.
- Concurrent Programming: Concurrent programming aims to ensure efficient use of resources by allowing tasks to work independently and, if necessary, yield resources when they are not actively working.

Examples:

- Parallel Programming: Examples include parallel processing of large datasets, multi-threaded video encoding, and distributed computing in clusters.
- Concurrent Programming: Examples include handling multiple user connections in a web server, managing concurrent user sessions in a database, and implementing multi-threaded user interfaces.
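To make the distinction concrete, here is a minimal sketch that contrasts the two styles. It assumes Scala 2.13 or later with the `scala-parallel-collections` module (`org.scala-lang.modules`) on the classpath; on Scala 2.12, `.par` is built in and the `CollectionConverters` import should be dropped. The object `ConcurrencyVsParallelism` and the methods `fetchUser` and `fetchOrderCount` are hypothetical placeholders, not part of any library.

```scala
import scala.collection.parallel.CollectionConverters._
import scala.concurrent.{Await, Future}
import scala.concurrent.ExecutionContext.Implicits.global
import scala.concurrent.duration._

object ConcurrencyVsParallelism {
  // Concurrency: two independent tasks (hypothetical stand-ins for I/O calls)
  // are started right away and make progress without waiting for each other.
  def fetchUser(id: Int): Future[String] = Future(s"user-$id")
  def fetchOrderCount(id: Int): Future[Int] = Future(id * 3)

  def summary(id: Int): String = {
    val userF = fetchUser(id)         // both futures are started here,
    val ordersF = fetchOrderCount(id) // before either result is awaited
    val combined = for (u <- userF; n <- ordersF) yield s"$u has $n orders"
    Await.result(combined, 5.seconds)
  }

  // Parallelism: one CPU-bound job is split into chunks that the parallel
  // collection can process on several cores at the same time.
  def doubledSum(n: Int): Int = (1 to n).par.map(_ * 2).sum

  def main(args: Array[String]): Unit = {
    println(summary(7))       // user-7 has 21 orders
    println(doubledSum(1000)) // 1001000
  }
}
```

The futures model concurrency (independent tasks that overlap in time), while `.par` models parallelism (one job divided across cores). The work runs inside methods rather than in the object's initializer because lambdas executed on other threads while an `object` is still being initialized can deadlock.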

How to Create a Parallel Collection in Scala?

Scala provides parallel counterparts of many common collection types, such as Seq, Vector, Array, Range, Map, and Set, which let us parallelize operations on the data they contain.

Here's how we can create a parallel collection and use it in our code:

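1. Import the necessary package:

To use parallel collections, we import the appropriate package. For example, to work with immutable parallel sequences, we can import `scala.collection.parallel.immutable.ParSeq`. On Scala 2.13 and later, parallel collections are provided by the separate scala-parallel-collections module, and the `.par` method additionally requires `import scala.collection.parallel.CollectionConverters._`.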

We can also import other parallel collection types, such as ParVector, ParArray, and ParRange, depending on our needs.

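2. Create a parallel collection:

Once we've imported the package, we can create a parallel collection by calling the `.par` method on an existing collection, for example `val parallelList = originalList.par`.

Here, originalList is converted into a parallel sequence, parallelList. Collections that have a parallel counterpart, such as Vector, Array, and Range, are converted without copying their data; inherently sequential collections such as List are copied into a parallel sequence, which is a ParVector.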

3. Perform parallel operations:

With the parallel collection in hand, we can call operations such as map, filter, reduce, and fold on it; the collection splits the work into chunks that can run on multiple processor cores. For instance, `parallelList.map(_ * 2)` doubles each element of parallelList in parallel.

Here's a complete example:
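A minimal sketch of the three steps, assuming Scala 2.13 or later with the scala-parallel-collections module on the classpath (on Scala 2.12, remove the `CollectionConverters` import). The object name `ParallelCollectionExample` is illustrative:

```scala
import scala.collection.parallel.CollectionConverters._
import scala.collection.parallel.ParSeq

object ParallelCollectionExample {
  def run(): List[Int] = {
    val originalList = List(1, 2, 3, 4, 5)

    // .par converts the sequential List into a parallel sequence.
    // List has no parallel counterpart, so its elements are copied.
    val parallelList: ParSeq[Int] = originalList.par

    // map is applied in parallel; element order is still preserved.
    val doubled: ParSeq[Int] = parallelList.map(_ * 2)

    // Convert back to an ordinary List for use with sequential code.
    doubled.toList
  }

  def main(args: Array[String]): Unit =
    println(run()) // List(2, 4, 6, 8, 10)
}
```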

This code demonstrates creating a parallel collection from a sequential list, applying a parallel map operation, and displaying the results.

Examples of Parallel Collection

Here are some examples of using some common parallel collections in Scala:

Parallel List:

A regular list can be converted with `.par`, and parallel operations can then be performed on the result. There is no dedicated parallel list type, so `myList.par` produces a parallel sequence (parallelList); calling `parallelList.map(_ * 2)` then doubles each element in parallel.

Parallel Vector:

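Vectors are well suited to parallelism because they support efficient random access and can be split into chunks cheaply.

For example, `myVector.par` converts a regular vector (myVector) into a parallel vector (parallelVector), and `parallelVector.reduce(_ + _)` computes the sum of its elements in parallel. This is safe because addition is associative, so the order in which partial sums are combined does not affect the result.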
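A sketch of the vector example, under the same assumption (Scala 2.13+ with scala-parallel-collections; drop the `CollectionConverters` import on 2.12). The element values and the object name are illustrative:

```scala
import scala.collection.parallel.CollectionConverters._

object ParallelVectorExample {
  def sumWithReduce(): Int = {
    val myVector = Vector(10, 20, 30, 40, 50)

    // On a Vector, .par produces a ParVector that wraps the vector's data.
    val parallelVector = myVector.par

    // reduce combines partial sums from different chunks in an unspecified
    // grouping; that is safe here because + is associative.
    parallelVector.reduce(_ + _)
  }

  def main(args: Array[String]): Unit =
    println(sumWithReduce()) // 150
}
```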

Parallel Range:

Ranges are often used for iterating over a sequence of numbers. We can create a parallel range to perform operations on a range of numbers in parallel.

For example, `(1 to 1000).par` creates a parallel range (a ParRange), and calling sum on it adds the numbers 1 through 1000 in parallel.
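A sketch of the range example, again assuming Scala 2.13+ with the scala-parallel-collections module (the object name is illustrative). A sequential sum is printed alongside for comparison:

```scala
import scala.collection.parallel.CollectionConverters._

object ParallelRangeExample {
  def parallelSum(): Int = {
    // (1 to 1000).par yields a ParRange, which can be split into
    // sub-ranges without materializing the numbers in between.
    val parallelRange = (1 to 1000).par
    parallelRange.sum
  }

  def main(args: Array[String]): Unit = {
    println(parallelSum())   // 500500
    println((1 to 1000).sum) // same result, computed sequentially
  }
}
```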

Semantics

In parallel programming in Scala, as in any other programming context, "semantics" refers to the meaning or behavior of a program when executed in a concurrent or parallel environment. Specifically, it addresses how the various concurrent and parallel operations interact, execute, and affect each other.

1. Operational Semantics in Parallel Programming:

- In parallel programming, operational semantics defines how concurrent or parallel operations in a program should be executed and how they interact.

- It deals with aspects like thread creation, synchronization, communication between threads, and the order in which parallel tasks are executed.

2. Denotational Semantics in Parallel Programming:

- Denotational semantics, on the other hand, represents the meaning of parallel programs by mapping them to mathematical structures.

- It can be used to formally reason about the behavior of parallel code, ensuring that concurrent operations meet the desired specifications and constraints.

Side-Effecting Operations

Side-effecting operations in parallel programming refer to operations that modify shared state, perform I/O, or interact with external resources in a way that can affect other parallel or concurrent tasks. Parallel programming often involves multiple threads or processes working on shared data, and side effects can lead to race conditions and synchronization challenges.

Managing Side Effects:

- In Scala, managing side effects in parallel programming is essential for writing safe and predictable concurrent code.

- Developers can use constructs like synchronized blocks to protect shared resources from concurrent access, ensuring proper mutual exclusion.

- Functional programming principles can also be employed to minimize the use of side effects. Immutability and pure functions, which don't have side effects, are encouraged for safer parallel code.
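The sketch below illustrates these points on a parallel range. It is a hypothetical example, assuming Scala 2.13+ with scala-parallel-collections: `racySum` shows the race condition caused by an unsynchronized shared `var`, while `lockedSum` (a synchronized block), `atomicSum` (`java.util.concurrent.atomic.AtomicInteger`), and `pureSum` (no shared state at all) produce the correct total.

```scala
import java.util.concurrent.atomic.AtomicInteger
import scala.collection.parallel.CollectionConverters._

object SideEffectsExample {
  // UNSAFE: many threads read and write the same var without synchronization,
  // so some updates can be lost and the result can vary between runs.
  def racySum(): Int = {
    var sum = 0
    (1 to 1000).par.foreach(x => sum += x)
    sum
  }

  // A synchronized block gives mutual exclusion around the shared update.
  def lockedSum(): Int = {
    var sum = 0
    val lock = new Object
    (1 to 1000).par.foreach(x => lock.synchronized { sum += x })
    sum
  }

  // An atomic counter from java.util.concurrent avoids an explicit lock.
  def atomicSum(): Int = {
    val sum = new AtomicInteger(0)
    (1 to 1000).par.foreach(x => sum.addAndGet(x))
    sum.get
  }

  // No shared mutable state: the collection combines partial results itself.
  def pureSum(): Int = (1 to 1000).par.sum

  def main(args: Array[String]): Unit = {
    println(racySum()) // may print less than 500500, differently on each run
    println(lockedSum())
    println(atomicSum())
    println(pureSum())
  }
}
```

Of the safe variants, `pureSum` is usually preferable: it needs no locking and follows the functional style described above.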

Non-Associative Operations

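Non-associative operations are operations that do not satisfy the associative property, (a ∘ b) ∘ c = a ∘ (b ∘ c), so the way operands are grouped can change the result. Subtraction and division are familiar examples, and floating-point addition is only approximately associative because of rounding. This matters in parallel code because a parallel reduction splits the collection into chunks, reduces each chunk separately, and then combines the partial results; the grouping therefore depends on how the work was split and scheduled, and a non-associative operation can produce different results from run to run. Scala's parallel collections preserve element order, so the operation must be associative but does not need to be commutative.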

Example of Non-Associative Operations:

- Parallel Reduction: Consider summing a list of numbers with a parallel reduction that runs on multiple threads. Integer addition is associative, so the parallel sum always matches the sequential one; but if the operation is not associative, as with subtraction or, strictly speaking, floating-point addition, the way partial results are grouped can change the final value.
- In parallel reduction, the non-associative property therefore makes the result depend on how the work was split and interleaved across threads, so it can differ from the sequential result and from one run to the next.
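A small sketch of both effects, assuming Scala 2.13+ with scala-parallel-collections (object and value names are illustrative). The first values show subtraction and floating-point addition failing associativity directly; the reductions then contrast a non-associative parallel `reduce` with an associative one:

```scala
import scala.collection.parallel.CollectionConverters._

object NonAssociativeExample {
  // Subtraction is not associative: regrouping changes the result.
  val leftGrouped: Int = (10 - 5) - 2  // 3
  val rightGrouped: Int = 10 - (5 - 2) // 7

  // Floating-point addition is not strictly associative because of rounding.
  val floatLeft: Double = (0.1 + 0.2) + 0.3
  val floatRight: Double = 0.1 + (0.2 + 0.3)

  // Sequential reduce groups from the left: ((1 - 2) - 3) - ... - 1000.
  def sequentialDifference(): Int = (1 to 1000).reduce(_ - _)

  // A parallel reduce may group chunk results differently, so with a
  // non-associative operator it can disagree with the sequential result.
  def parallelDifference(): Int = (1 to 1000).par.reduce(_ - _)

  // Int addition is associative, so the parallel result is deterministic.
  def parallelSum(): Int = (1 to 1000).par.reduce(_ + _)

  def main(args: Array[String]): Unit = {
    println(s"$leftGrouped vs $rightGrouped") // 3 vs 7
    println(s"$floatLeft vs $floatRight")     // 0.6000000000000001 vs 0.6
    println(sequentialDifference())           // -500498
    println(parallelDifference())             // may differ from the line above
    println(parallelSum())                    // 500500
  }
}
```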

Why Should We Use Parallel Programming?

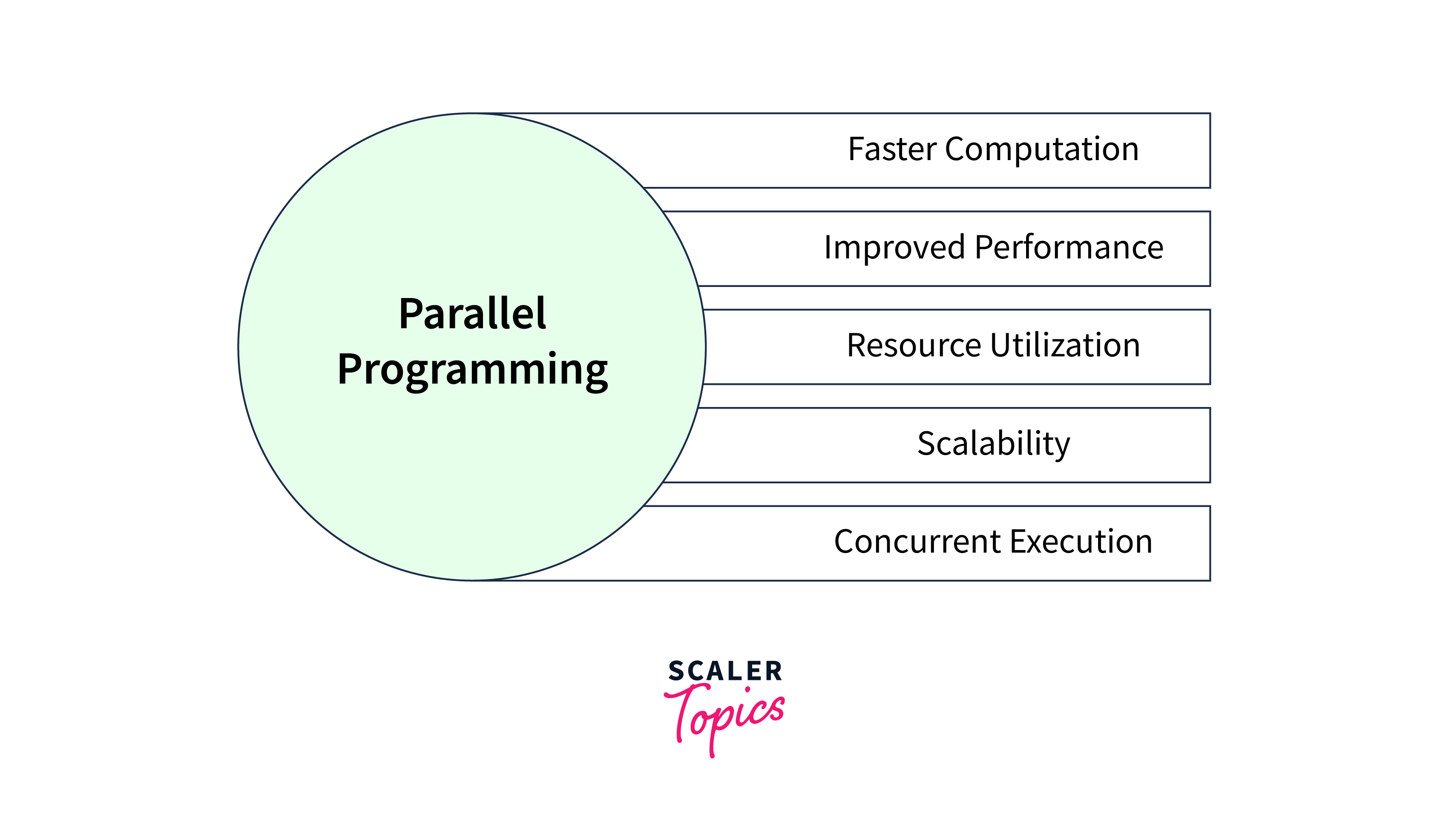

Here are some of the key reasons why parallel programming is important and why we should consider using it:

- Improved Performance: One of the primary reasons to use parallel programming is to enhance the performance of our applications. By executing multiple tasks or processes simultaneously, we can harness the full potential of multi-core processors and distributed computing environments. This results in faster execution and improved responsiveness.

- Resource Utilization: Parallel programming enables efficient utilization of available hardware resources. Without parallelism, modern multi-core processors may remain underutilized. Parallel code allows us to make the most of our hardware, reducing resource wastage.

- Scalability: Parallel programming techniques are essential for building scalable applications. When dealing with large datasets or high-demand systems, parallelism allows us to expand the system's capacity without significant drops in performance. This is crucial for web servers, databases, and other resource-intensive services.

- Concurrent Execution: Many real-world scenarios involve tasks that must run concurrently, such as handling multiple user requests in a web server or managing user interfaces. The tools Scala offers for parallel programming, such as futures and actors, also provide a structured way to handle this kind of concurrency, making such systems easier to develop and maintain.

- Faster Computation: Parallel programming is particularly valuable for computationally intensive tasks. Tasks like scientific simulations, rendering, data processing, and complex mathematical computations can see substantial speedups when parallelized.

Conclusion

- Parallel programming in Scala is the simultaneous execution of tasks on multiple processors or cores to enhance performance and resource utilization.

- Parallel programming offers benefits such as enhanced performance, efficient resource utilization, and scalability, which make it important for real-time systems, big data processing, and more.

- Parallelism and concurrency are related but distinct: parallelism focuses on executing tasks simultaneously, whereas concurrency deals with managing multiple tasks whose executions overlap in time.

- Semantics in parallel programming in Scala refers to the rules governing the meaning and behavior of concurrent or parallel operations, including how they execute, interact, and access shared resources in multi-threaded or distributed environments.