Scripted Pipeline in Jenkins

Overview

Jenkins' Scripted Pipeline lets you automate software delivery workflows with Groovy code. This article covers setup, basic syntax, stages and steps, environment variables and parameters, error handling, conditional execution, custom functions and libraries, integrations with other tools, and real-world use cases.

Setting Up Jenkins for Scripted Pipeline

Definition

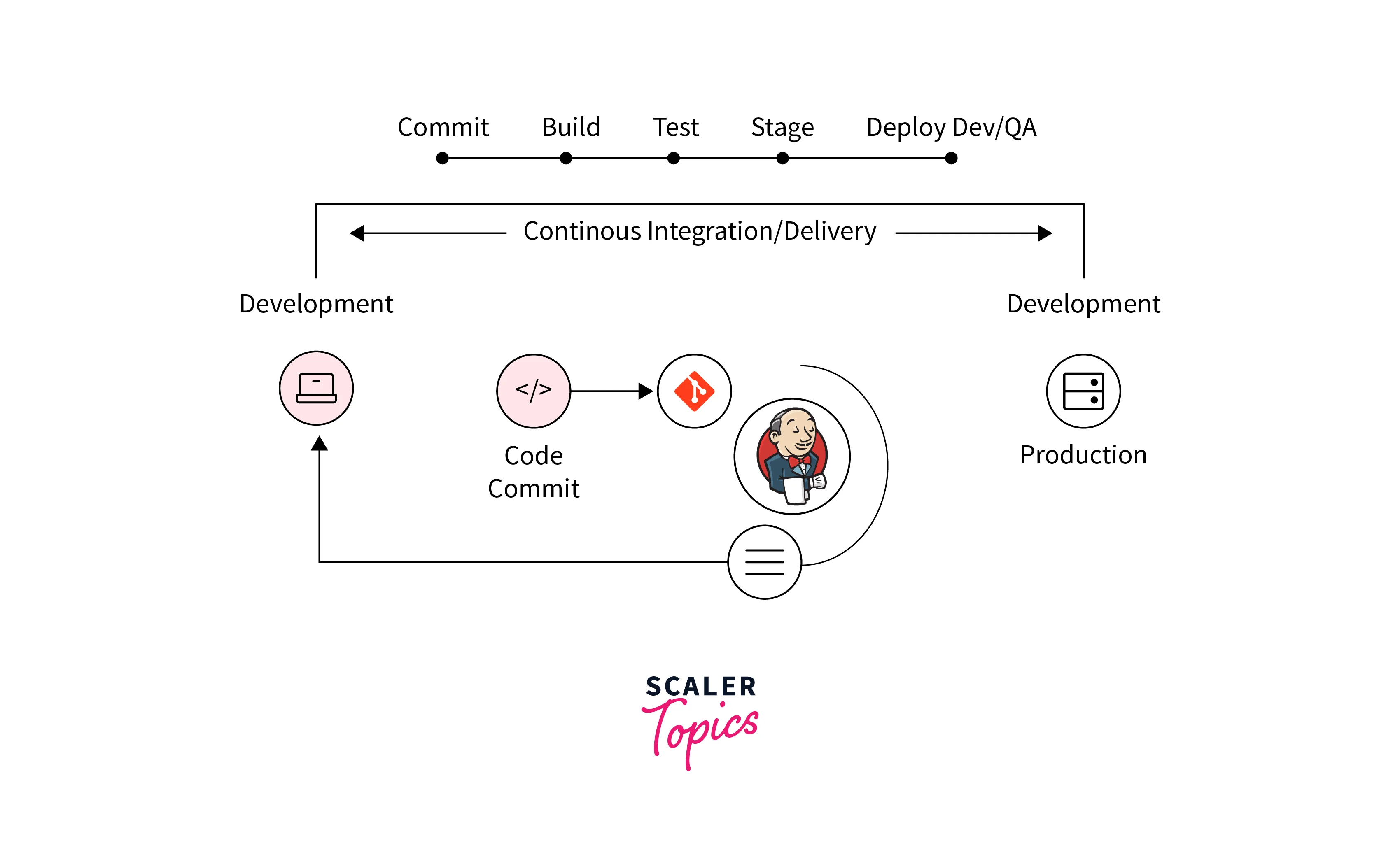

Jenkins Scripted Pipeline is a powerful approach to defining and orchestrating workflows for continuous integration and continuous delivery (CI/CD) within the Jenkins automation server. It allows developers and DevOps teams to express their build and deployment processes in the Groovy language, providing exceptional flexibility and control over complex pipelines.

At its core, a Scripted Pipeline is a Groovy script that describes the stages and steps needed to build, test, and deploy a software application. Declarative Pipelines impose a predefined structure through a more restrictive DSL (Domain-Specific Language); Scripted Pipelines instead give you a general-purpose programming model with variables, loops, conditionals, and functions. This is particularly advantageous when dealing with intricate workflows or scenarios that demand dynamic decision-making.

A Scripted Pipeline is typically stored in a Jenkinsfile within your version control repository, so the pipeline configuration is versioned along with your codebase (it can also be entered directly in the job configuration). This configuration-as-code approach not only enhances reproducibility but also simplifies collaboration, as changes to the pipeline can be reviewed and approved through the same version control processes as code changes.

Key components of a Scripted Pipeline include stages, steps, and various Jenkins plugins that extend its functionality. Stages represent distinct phases in your pipeline, such as building, testing, and deployment, while steps are individual tasks or actions performed within each stage. These steps can range from compiling source code and running tests to publishing artifacts and notifying stakeholders.

While the Scripted Pipeline's flexibility is a remarkable advantage, it's essential to strike a balance between customization and maintainability. Writing intricate scripts can become complex, and it's crucial to follow best practices to ensure readability, scalability, and ease of troubleshooting. The use of meaningful variable names, modularization through functions, and comments within the script can significantly enhance the script's comprehensibility over time.

In summary, Jenkins Scripted Pipeline offers a versatile and expressive way to define CI/CD workflows using Groovy scripting. It empowers teams to handle complex pipeline scenarios, though striking a balance between customization and maintainability is crucial. In the following sections, we'll guide you through the process of setting up Jenkins for Scripted Pipelines, equipping you to optimize your software delivery pipeline effectively.

Configuring Jenkins

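Scripted Pipeline was the original Pipeline syntax in Jenkins. Jenkins executes it on an embedded Groovy engine, which is why the Pipeline DSL (Domain-Specific Language) is written in Groovy. Creating Pipeline jobs requires the Pipeline plugin, which is part of Jenkins' suggested plugins.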

Follow these steps to configure Jenkins and start developing Scripted Pipelines:

-

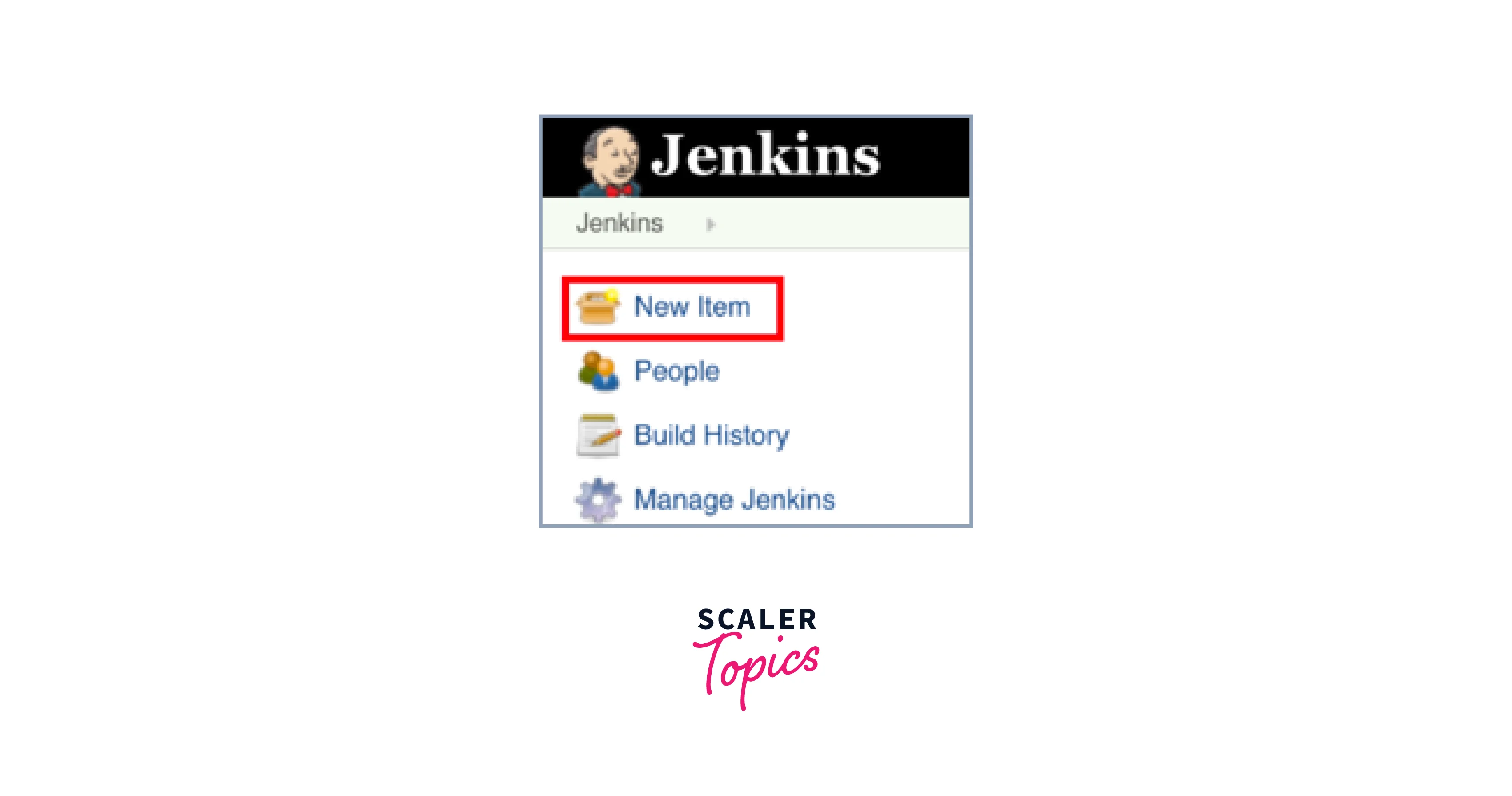

Access Jenkins and Initiate New Item Creation:

Begin by logging into your Jenkins server and navigating to the left panel. Locate and click on the "New Item" option. This initiates the process of creating a new pipeline within Jenkins.

-

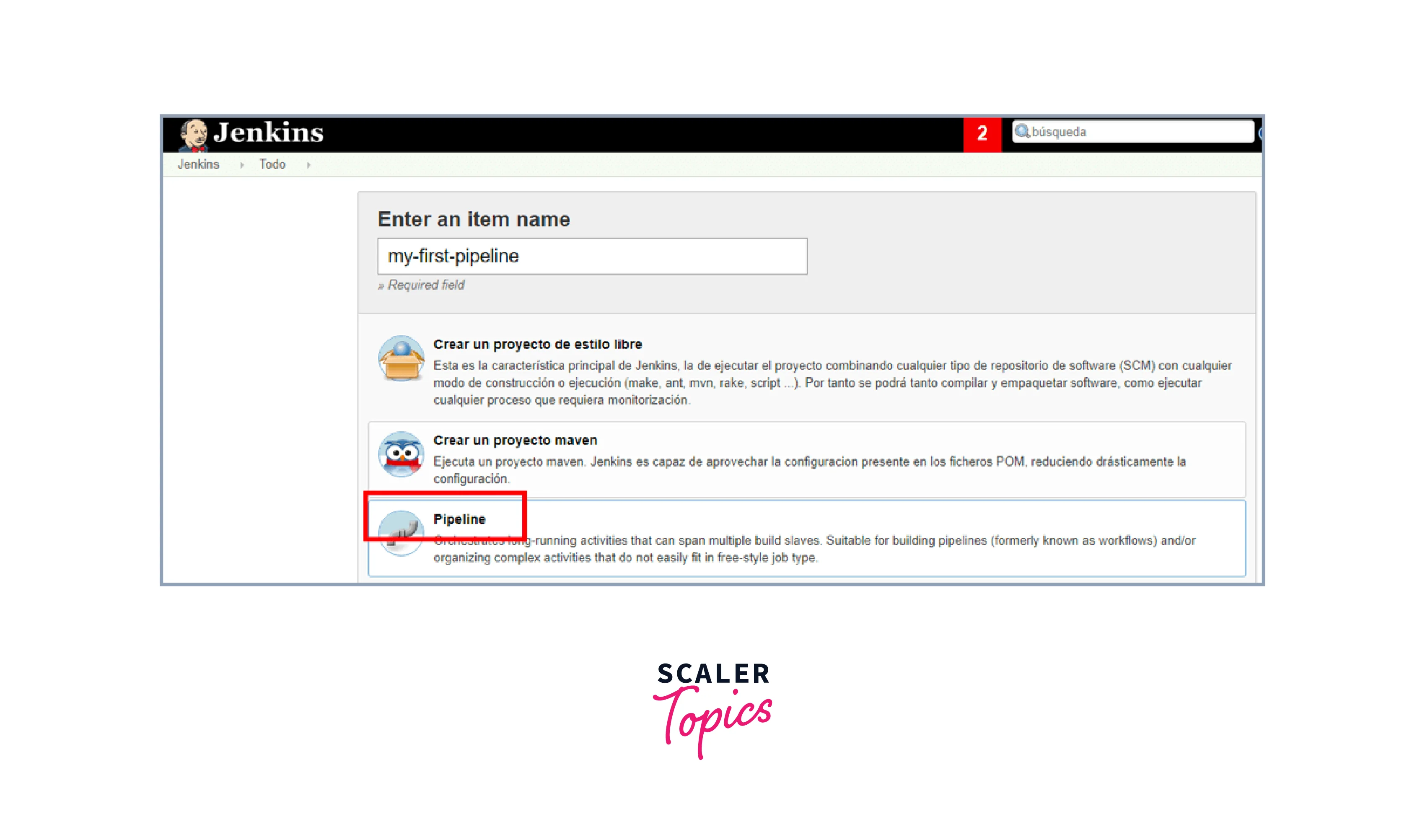

Define Pipeline Name and Select Pipeline Type:

Upon clicking "New Item", you'll be prompted to assign a name to your pipeline. This name should ideally reflect the project or process your pipeline will manage. From the available options, choose "Pipeline". Afterward, click "OK" to proceed to the next configuration phase.

-

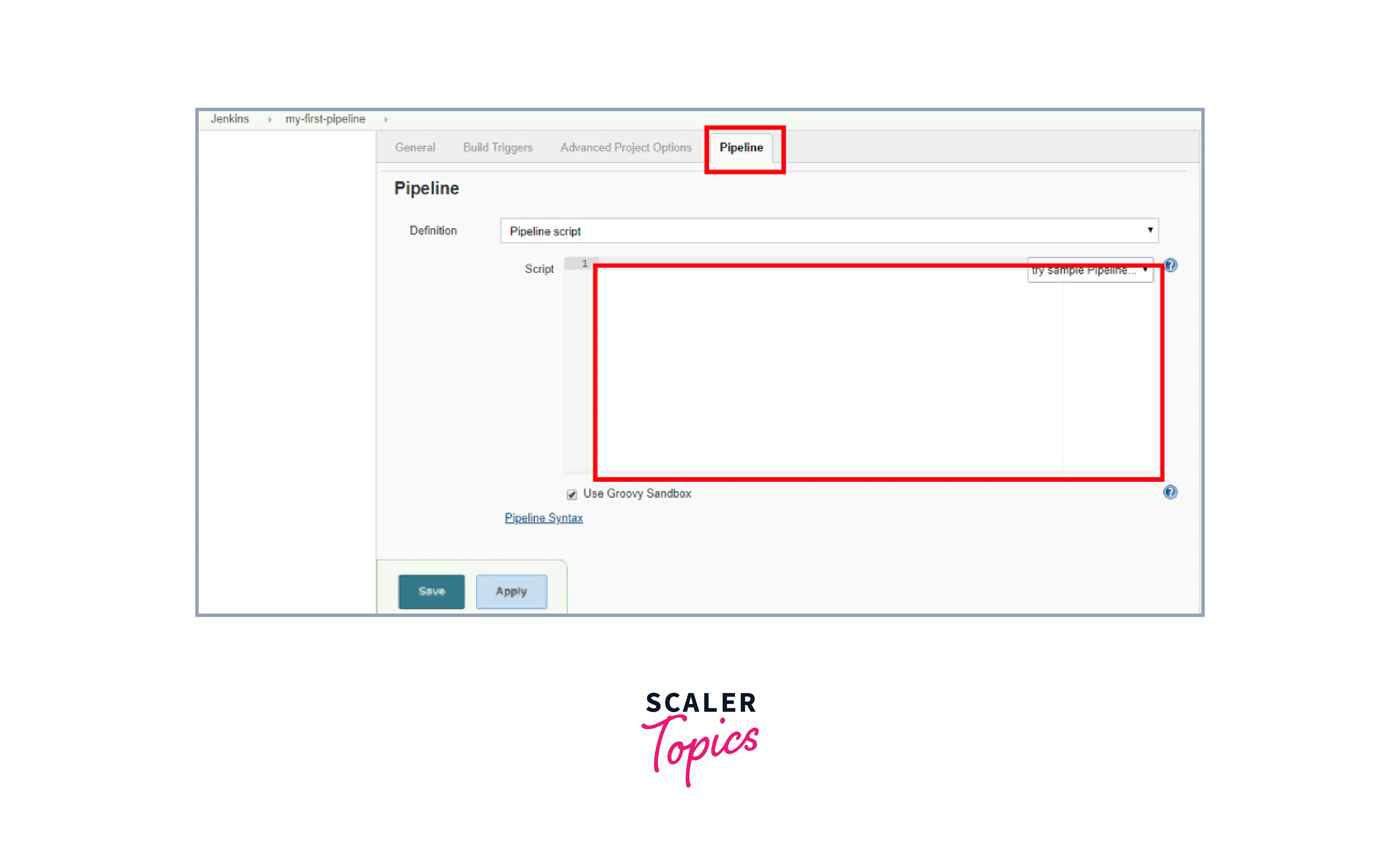

Commence Script Composition:

With the initial setup complete, you are now poised to craft your Scripted Pipeline. The focal point of this phase is the scripting box showcased in the middle of the interface. This is where you'll script the sequence of actions your pipeline will perform.

Basic Syntax of Scripted Pipeline

Understanding the building blocks of a Scripted Pipeline is essential for constructing effective CI/CD workflows. Let's delve into the key elements that compose this structure and their significance.

Node Block:

The node block allocates an executor and a workspace on a Jenkins agent (or the built-in node) and runs the enclosed steps there. Steps that work with files or run commands, such as `sh`, must be placed inside a node block.

Stages:

Stages define logical sections within your pipeline, reflecting distinct phases of your software delivery process, such as building, testing, and deployment.

Stages help you visualize and monitor the progression of your pipeline, aiding in identifying bottlenecks and issues.

Steps:

Steps are individual actions carried out within each stage. They encompass tasks like compiling code, running tests, and deploying applications.

Steps are the concrete actions that transform your code from source to deployment, forming the backbone of your pipeline.

Post-Build Logic:

Scripted Pipeline has no `post` section; that directive belongs to Declarative Pipeline. Instead, you wrap the stages in Groovy's try/catch/finally: a `catch` block reacts to failures, and a `finally` block runs after the work completes, regardless of the outcome.

This lets you perform crucial actions, such as cleaning up resources or notifying stakeholders, based on the pipeline's outcome.

Conditional Statements:

Conditional statements enable your pipeline to adapt based on conditions. This facilitates dynamic decision-making during the pipeline's execution.

Conditional statements accommodate scenarios where certain stages or steps are executed only when specific conditions are met.

Parallel Execution:

Parallel execution permits the simultaneous running of multiple stages, enhancing pipeline efficiency.

Parallel execution optimizes resource utilization and reduces pipeline execution time.
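Putting these building blocks together, a minimal Scripted Pipeline skeleton might look like the following sketch. The `make` targets are placeholders, the agent is assumed to provide a Unix shell for the `sh` step, and `BRANCH_NAME` is only set in Multibranch Pipeline jobs:

```groovy
// Node block: allocate an executor and a workspace on an available agent
node {
    try {
        // Stage: a named, visible phase containing related steps
        stage('Build') {
            sh 'make build'             // step: run a shell command
        }

        // Parallel execution: independent branches run at the same time
        stage('Test') {
            parallel(
                'Unit tests': { sh 'make unit-test' },
                'Lint':       { sh 'make lint' }
            )
        }

        // Conditional statement: a plain Groovy 'if' decides whether a stage runs
        if (env.BRANCH_NAME == 'master') {
            stage('Deploy') {
                sh 'make deploy'
            }
        }
    } finally {
        // Post-build logic: 'finally' runs whether the stages succeeded or failed
        echo 'Pipeline finished (this line runs even if a stage failed)'
    }
}
```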

Defining Stages and Steps

In the case of Scripted Pipelines, stages and steps are the bedrock upon which your CI/CD workflows are constructed. Each stage signifies a significant phase of your software delivery process, while steps encapsulate individual actions within those stages.

Stages

Stages segment your pipeline into logical phases, simplifying the visualization and monitoring of your workflow. Each stage encapsulates a series of related steps, making it easier to identify bottlenecks and debug issues. Stages are particularly helpful for understanding complex pipelines. Let's explore an example:
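A minimal sketch of such a pipeline. The `echo` steps only print messages, so it can be pasted into the job's script box to try out the structure before any real build commands are added:

```groovy
node {
    stage('Build') {
        echo 'Compiling the application...'
    }
    stage('Test') {
        echo 'Running the test suite...'
    }
    stage('Deploy') {
        echo 'Deploying the build artifacts...'
    }
}
```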

In this example, the pipeline is divided into three stages: Build, Test, and Deploy. Each stage houses specific steps tailored to its purpose. Stages enhance the clarity of your pipeline's progression.

Steps

Steps represent the individual actions performed within a stage. They constitute the actual work carried out in your CI/CD process. Each step executes a task, such as compiling code, running tests, or deploying applications. Let's delve into steps with practical examples:
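A sketch with concrete steps, assuming a Node.js project built with npm whose Jenkinsfile is loaded from SCM, and an agent with `npm` and `scp` installed and SSH keys already set up. The `dist/` output directory, host name, user, and target path are hypothetical placeholders:

```groovy
node {
    stage('Build') {
        checkout scm                 // fetch the source this Jenkinsfile came from
        sh 'npm ci'                  // install dependencies pinned in package-lock.json
        sh 'npm run build'           // build the project (assumed to write to dist/)
    }
    stage('Test') {
        sh 'npm test'                // a non-zero exit code fails the stage
    }
    stage('Deploy') {
        // Copy the dist/ directory to a (hypothetical) remote web server
        sh 'scp -r dist/ deploy@app.example.com:/var/www/app'
    }
}
```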

Here, within the Build stage, the pipeline executes steps to install dependencies and build the project. The Test stage runs tests, ensuring code quality. Finally, the Deploy stage utilizes a step to transfer the built artifacts to a remote server.

By using stages and steps effectively, you compartmentalize your pipeline's operations, streamline debugging, and improve collaboration within your team. Here are some benefits of stages and steps in a Scripted Pipeline:

- Clarity and Isolation: Stages offer a visual representation of your pipeline's progression. This visibility aids in diagnosing bottlenecks, failures, or performance issues.

- Parallel Execution: Stages can be executed in parallel, optimizing resource utilization and reducing the overall pipeline runtime.

- Ease of Collaboration: Dividing work into stages enhances collaboration, as different team members can focus on specific areas without interference.

- Focused Debugging: In case of failures, stages pinpoint where the issue occurred, streamlining the debugging process.

- Structural Adaptability: Stages allow the pipeline structure to evolve as the project's requirements change.

- Visual Reports: Jenkins provides visual reports of stage execution, offering insights into performance over time.

Environment Variables and Parameters

Environment variables and parameters play a pivotal role in making your CI/CD workflows modular, flexible, and reusable. Let's look at how these constructs help you build adaptable pipelines.

Environment Variables

Environment variables are values your pipeline script can read during its execution through the global `env` object (for example, `env.BUILD_NUMBER`). They serve as a bridge between your pipeline and the external environment, allowing you to inject configuration values and data without modifying the script itself. This not only enhances adaptability but also promotes modular code development.
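A minimal sketch, assuming a Multibranch Pipeline job (where Jenkins sets `BRANCH_NAME`) and a hypothetical `deploy.sh` script in the repository that reads its target from a `DEPLOY_TARGET` environment variable:

```groovy
node {
    checkout scm

    // Built-in variables are read through the global 'env' object
    echo "Build #${env.BUILD_NUMBER} of branch ${env.BRANCH_NAME}"

    stage('Deploy') {
        // Derive the target from the branch instead of hard-coding it
        def target = (env.BRANCH_NAME == 'master') ? 'production' : 'staging'

        // withEnv exposes DEPLOY_TARGET to every step inside the block
        withEnv(["DEPLOY_TARGET=${target}"]) {
            sh './deploy.sh'   // hypothetical script that reads $DEPLOY_TARGET
        }
    }
}
```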

In this example, the BRANCH_NAME environment variable (which Jenkins sets in Multibranch Pipeline jobs) enables conditional deployment based on the branch being built. Because the deployment target is derived from the environment rather than hard-coded, it can be managed externally without altering the script.

Parameters

Parameters let users customize your pipeline's behavior at runtime: Jenkins prompts for their values when someone starts the job with "Build with Parameters", further enhancing the flexibility of your workflows.
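In a Scripted Pipeline, parameters are declared with the `properties` step. A minimal sketch, assuming deployment environments named `staging` and `production` and a hypothetical `deploy.sh` script that takes the environment name as its argument:

```groovy
// Register the job's parameters. Jenkins applies this when the pipeline runs,
// after which "Build with Parameters" offers the choice to users.
properties([
    parameters([
        choice(name: 'TARGET_ENV',
               choices: ['staging', 'production'],
               description: 'Environment to deploy to')
    ])
])

node {
    checkout scm
    stage('Deploy') {
        // Values are read through 'params'; fall back to staging on the very
        // first run, before the parameter has been registered
        def targetEnv = params.TARGET_ENV ?: 'staging'
        echo "Deploying to ${targetEnv}"
        sh "./deploy.sh ${targetEnv}"
    }
}
```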

In this snippet, the TARGET_ENV parameter allows the user to specify the deployment environment when triggering the pipeline. This fosters collaboration and adaptability by granting users the ability to tailor pipeline behavior to their needs.

Externalizing configuration through environment variables and parameters fits naturally with DevOps practice:

- Promoting Modular Code: Environment variables and parameters encourage a modular approach to pipeline scripting. By decoupling configuration values and interactive input from the script itself, you create pipelines that are easier to manage and maintain.

- Reusability: Modular pipelines can be reused across different projects or stages within the same project. The ability to tweak behavior without modifying the script reduces the risk of errors and simplifies updates.

- Separation of Concerns: Modularity aligns with the DevOps principle of separating concerns. The pipeline script can focus on orchestrating tasks, while configuration and customization are handled externally.

- Version Control Harmony: Changes to environment variables or parameter configurations can be tracked and reviewed separately from changes to pipeline logic.

Handling Errors and Post-build Actions

Running CI/CD with Scripted Pipelines involves more than sequencing the steps of your workflow. You also need to anticipate failures, recover from them where possible, and end every run cleanly through error handling and post-build actions.

Error Handling

Errors can occur at any point in a pipeline: a compilation fails, a test breaks, or a remote server is unreachable. A failing step throws an exception, so Scripted Pipelines handle errors with ordinary Groovy try/catch/finally blocks.

Graceful Error Capture:
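A minimal sketch of the pattern, with placeholder `make` targets. Setting `currentBuild.result` and then rethrowing the exception keeps the build marked as failed after the message is printed:

```groovy
node {
    try {
        stage('Build') {
            sh 'make build'
        }
        stage('Test') {
            sh 'make test'
        }
    } catch (err) {
        // Any failing step throws; record the failure and report it
        currentBuild.result = 'FAILURE'
        echo "Pipeline failed: ${err}"
        throw err   // rethrow so the run stops and the failure is preserved
    }
}
```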

In this snippet, the try-catch block wraps the stages. If any stage encounters an error, the pipeline catches the exception, marks the build as failed, and displays an error message. The failure is reported clearly instead of leaving the build in an unexplained state.

Interactive Debugging:
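A minimal sketch of handling an error inside a single stage, again with placeholder `make` targets:

```groovy
node {
    stage('Test') {
        try {
            sh 'make test'
        } catch (err) {
            echo "Tests failed: ${err}"
            // The 'error' step fails the build with a clear message
            error('Stopping the pipeline because the Test stage failed')
        }
    }
    stage('Deploy') {
        // Reached only if the tests passed
        sh 'make deploy'
    }
}
```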

This snippet shows error handling within a single stage. If the tests fail, the pipeline catches the exception, echoes an error message, and stops the pipeline with the `error` step. This draws immediate attention to issues during the pipeline's execution.

Post-Build Actions

Post-build actions are tasks that should run after the pipeline finishes, whether it succeeded or failed. In a Scripted Pipeline, they are written with Groovy's try/catch/finally blocks.

Holistic Clean-Up:
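A minimal sketch, assuming a hypothetical `cleanup.sh` script in the workspace; `deleteDir()` is a built-in step that recursively deletes the current directory, which here is the workspace:

```groovy
node {
    try {
        stage('Build') {
            sh 'make build'
        }
    } finally {
        // Runs whether the build succeeded or failed,
        // like 'post { always { ... } }' in Declarative syntax
        stage('Cleanup') {
            sh './cleanup.sh'   // hypothetical project cleanup script
            deleteDir()         // remove the workspace contents from the agent
        }
    }
}
```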

The `finally` block in this snippet runs the cleanup script, so resources are tidied up regardless of the pipeline's outcome; it plays the role of the `always` condition in a Declarative `post` section. This practice keeps build agents clean and optimizes resource utilization.

Team Notifications:
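A sketch using the `mail` step, which assumes the Mailer plugin is installed and an SMTP server is configured in Jenkins; the recipient address is a placeholder:

```groovy
node {
    try {
        stage('Build') {
            sh 'make build'
        }
        // Reached only if every step above succeeded
        mail to: 'team@example.com',
             subject: "SUCCESS: ${env.JOB_NAME} #${env.BUILD_NUMBER}",
             body: "Build succeeded: ${env.BUILD_URL}"
    } catch (err) {
        // Reached only if a step failed
        currentBuild.result = 'FAILURE'
        mail to: 'team@example.com',
             subject: "FAILURE: ${env.JOB_NAME} #${env.BUILD_NUMBER}",
             body: "Build failed: ${env.BUILD_URL}\n\n${err}"
        throw err
    }
}
```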

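In this example, email notifications are sent according to the pipeline's result: the message at the end of the try block goes out only on success, and the one in the catch block only on failure, the Scripted equivalent of Declarative's `success` and `failure` conditions. This keeps the team informed about the outcome and enables them to respond promptly.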

Thoughtful error handling makes a pipeline resilient. Errors are inevitable in software development, but with a well-designed strategy your pipeline catches exceptions, reports them, and cleans up after itself instead of stopping in an unknown state, preserving the continuous flow of delivery.

Beyond try/catch/finally, Scripted Pipelines can use built-in steps such as `retry`, `timeout`, and `catchError` to implement error handling in a modular, reusable way.
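A sketch of the built-in `retry`, `timeout`, and `catchError` steps, with placeholder `make` targets:

```groovy
node {
    stage('Fetch dependencies') {
        // Re-run a flaky, network-bound step up to three times before failing
        retry(3) {
            sh 'make deps'
        }
    }
    stage('Integration tests') {
        // Abort the block if it runs longer than 20 minutes
        timeout(time: 20, unit: 'MINUTES') {
            sh 'make integration-test'
        }
    }
    stage('Optional checks') {
        // Mark this stage as failed and the build as UNSTABLE, but keep going
        catchError(buildResult: 'UNSTABLE', stageResult: 'FAILURE') {
            sh 'make lint'
        }
    }
}
```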

Conditional Execution

Conditional execution is the strategic pivot that allows your Scripted Pipelines to dynamically respond to varying circumstances. It empowers your pipeline to make decisions based on conditions, executing specific stages or steps only when certain criteria are met. In this section, we'll delve into the intricacies of conditional execution, exploring how it enhances your pipeline's adaptability and efficiency.

When and Why Conditional Execution?

Conditional execution comes into play when you need to tailor your pipeline's behavior based on specific scenarios. Whether it's running particular steps for a specific branch, skipping stages for certain conditions, or executing custom logic when needed, conditional execution offers the flexibility to adapt your pipeline to diverse situations.

Conditional Stage Execution:
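A minimal sketch, assuming a Multibranch Pipeline job (so `BRANCH_NAME` is set) and placeholder `make` targets:

```groovy
node {
    stage('Build') {
        sh 'make build'
    }
    // The whole stage sits inside the 'if', so other branches skip it entirely
    if (env.BRANCH_NAME == 'master') {
        stage('Deploy') {
            sh 'make deploy'
        }
    } else {
        echo "Skipping deployment for branch ${env.BRANCH_NAME}"
    }
}
```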

In this example, the 'Deploy' stage is executed only when the pipeline is triggered on the 'master' branch. Conditional execution ensures that stages are executed contextually, aligning with your project's requirements.

Skipping Stages Conditionally:
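A minimal sketch using a boolean build parameter declared with the `properties` step; the `make` targets are placeholders:

```groovy
properties([
    parameters([
        booleanParam(name: 'RUN_TESTS', defaultValue: true,
                     description: 'Run the Test stage')
    ])
])

node {
    stage('Build') {
        sh 'make build'
    }
    // params.RUN_TESTS holds a Boolean; treat a missing value (the very
    // first run, before the parameter is registered) as true
    if (params.RUN_TESTS != false) {
        stage('Test') {
            sh 'make test'
        }
    }
}
```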

Here, the 'Test' stage is executed only when the 'RUN_TESTS' parameter is set to 'true'. This dynamic approach allows you to control pipeline behavior based on runtime input.

Using Conditional Blocks:

Conditional execution is facilitated by Groovy's 'if' statements, which evaluate conditions and execute code blocks accordingly. These statements enable you to make decisions within your pipeline script, directing its flow based on predefined conditions.

Conditional Step Execution:
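A sketch of step-level conditions. Which branches get the integration suite is an assumption here (branches prefixed `release/`), as are the `make` targets:

```groovy
node {
    stage('Test') {
        // '?.' guards against BRANCH_NAME being null outside multibranch jobs
        if (env.BRANCH_NAME?.startsWith('release/')) {
            sh 'make integration-test'   // slower, full suite for release branches
        } else {
            sh 'make unit-test'          // fast feedback everywhere else
        }
    }
}
```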

In this snippet, the 'if' statement determines whether to execute integration tests or unit tests based on the branch name. Conditional execution of steps provides an adaptable testing strategy.

Benefits and Best Practices:

- Efficient Resource Utilization: Conditional execution prevents unnecessary steps or stages from running, optimizing resource usage and reducing pipeline runtime.

- Contextual Workflows: Different conditions trigger different actions, allowing your pipeline to adapt to specific scenarios and contexts.

- Dynamic Logic: By embedding conditional statements, you infuse dynamic decision-making into your pipeline, aligning its behavior with real-time requirements.

- Readability and Clarity: Conditional execution enhances the readability of your pipeline script by encapsulating logic based on conditions, making it clear and understandable.

Using Custom Functions and Libraries

Scripted Jenkins Pipelines embrace the spirit of reusability and modularity by allowing you to create custom functions and utilize external libraries. These tools empower your pipeline to be concise, maintainable, and versatile.

Custom Functions

Custom functions encapsulate logic into reusable units, allowing you to abstract complex operations into a single, easily accessible entity. These functions promote code cleanliness and maintainability, enabling you to focus on orchestrating pipeline flow rather than wrestling with intricate details.

Defining a Custom Function:
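A minimal sketch of a function defined directly in the Jenkinsfile and called from inside a stage:

```groovy
// A custom function defined at the top level of the Jenkinsfile
def greet(String name) {
    echo "Hello, ${name}!"
}

node {
    stage('Greeting') {
        greet('Jenkins')   // writes "Hello, Jenkins!" to the console log
    }
}
```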

In this example, the greet function takes a parameter name and echoes a personalized greeting. By using custom functions, you enhance the readability and conciseness of your pipeline script.

External Libraries

External libraries, known in Jenkins as Shared Libraries, extend your pipeline's capabilities beyond its built-in steps. They allow you to reuse pre-built solutions for common tasks across many pipelines, simplifying your scripting process and reducing redundancy.

Using an External Library:
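A minimal sketch, assuming a shared library named `my-shared-library` has already been registered under Global Pipeline Libraries in the Jenkins system configuration and defines a global step called `customStep` (both names are illustrative):

```groovy
// Load the shared library by its registered name. The trailing '_' gives
// the annotation something to attach to when nothing else is imported.
@Library('my-shared-library') _

node {
    stage('Custom step') {
        // Assumed to be defined in the library's vars/customStep.groovy
        customStep()
    }
}
```

On the library side, `customStep` would live in `vars/customStep.groovy` and define a `call()` method, for example `def call() { echo 'Running customStep' }`. To pin a specific version of the library, the annotation accepts a branch or tag, such as `@Library('my-shared-library@v1.0') _`.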

In this snippet, the `my-shared-library` shared library is loaded with the `@Library` annotation, and its `customStep()` function is invoked, demonstrating how libraries add predefined functionality to your pipeline.

Best Practices and Considerations:

- Modular Design: Custom functions and external libraries promote a modular design, enabling you to encapsulate logic and share it across different parts of your pipeline.

- Code Reusability: By creating custom functions, you ensure that code snippets are not duplicated, fostering a more maintainable and efficient pipeline.

- Version Control: External libraries can be versioned, ensuring that changes to shared logic can be tracked, reviewed, and rolled back if necessary.

- Shared Knowledge: Libraries facilitate sharing best practices and standardized solutions across your pipeline development team.

Scripted Pipeline Best Practices

Integrating Jenkins Scripted Pipelines with version control and other tools enhances collaboration, streamlines workflows, and ensures a seamless development journey.

Version Control Integration

Version control systems, such as Git, lie at the heart of collaborative software development. Integrating Scripted Pipelines with version control repositories brings synchronization and automation to your CI/CD workflows.

Pipeline Trigger on Repository Changes:
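In a Scripted Pipeline, triggers are registered with the `properties` step rather than a `triggers` directive. A minimal sketch; the five-minute schedule is an arbitrary example, and the job is assumed to load its Jenkinsfile from SCM so that `checkout scm` works:

```groovy
// Poll the repository on a cron-style schedule; 'H' lets Jenkins pick a
// stable offset so many jobs don't all poll at the same minute
properties([
    pipelineTriggers([
        pollSCM('H/5 * * * *')
    ])
])

node {
    stage('Checkout') {
        checkout scm
    }
    stage('Build') {
        sh 'make build'
    }
}
```

Like parameters, the trigger is registered when the pipeline runs, so it takes effect after the first build.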

In this example, the pipeline is triggered by changes to the repository. The `pollSCM` trigger, registered through the `properties` step, periodically checks the repository for new commits and starts the pipeline when it finds them.

Pulling Code and Running Tests:
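A sketch that checks out an explicit repository with the `git` step and publishes test results with the `junit` step (JUnit plugin). The repository URL, branch, report path, and `make` targets are hypothetical, and the tests are assumed to write JUnit-style XML reports:

```groovy
node {
    stage('Checkout') {
        // Clone a specific repository and branch (placeholders)
        git url: 'https://github.com/example/app.git', branch: 'main'
    }
    stage('Build and Test') {
        sh 'make build'
        sh 'make test'
        // Publish test reports so results appear on the build page
        junit 'build/test-results/**/*.xml'
    }
    stage('Deploy') {
        sh 'make deploy'
    }
}
```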

Here, the pipeline pulls code from the repository, performs the build and tests, and then proceeds to deployment. This integration ensures that your pipeline automatically responds to code changes.

Integrating with External Tools:

External tools augment the capabilities of Scripted Pipelines, extending their reach and enriching their functionality.

Integration with Slack for Notifications:
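A sketch using the `slackSend` step, which assumes the Slack Notification plugin is installed and configured with a workspace and credentials; `good` and `danger` are Slack's green and red message colors:

```groovy
node {
    try {
        stage('Build') {
            sh 'make build'
        }
        // Green message when everything above succeeded
        slackSend(color: 'good',
                  message: "SUCCESS: ${env.JOB_NAME} #${env.BUILD_NUMBER}")
    } catch (err) {
        // Red message with a link to the failed build
        slackSend(color: 'danger',
                  message: "FAILURE: ${env.JOB_NAME} #${env.BUILD_NUMBER} (${env.BUILD_URL})")
        throw err
    }
}
```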

This code integrates the pipeline with Slack, sending notifications about pipeline results. The notification's color reflects the build's status, enhancing visibility.

Docker Integration for Containerization:
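A sketch assuming the Docker Pipeline plugin (which provides the `docker` global variable), a Dockerfile at the repository root, Docker and `kubectl` available on the agent, and an existing Kubernetes Deployment. The registry URL, credentials ID, image name, and the deployment and container name `app` are hypothetical placeholders:

```groovy
node {
    checkout scm
    def image

    stage('Build image') {
        // Runs 'docker build' on the workspace's Dockerfile, tagged with the build number
        image = docker.build("example/app:${env.BUILD_NUMBER}")
    }

    stage('Push image') {
        docker.withRegistry('https://registry.example.com', 'registry-credentials') {
            image.push()           // push the build-number tag
            image.push('latest')   // also update the 'latest' tag
        }
    }

    stage('Deploy') {
        // Assumes kubectl is already configured for the target cluster
        sh "kubectl set image deployment/app app=registry.example.com/example/app:${env.BUILD_NUMBER}"
    }
}
```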

Here, Docker and Kubernetes tools are integrated. The pipeline builds and pushes a Docker image, and then deploys it using Kubernetes. This integration encapsulates the containerization and deployment process.

Comparing Scripted Pipeline and Declarative Pipeline

Jenkins Pipeline offers two approaches: Scripted Pipelines and Declarative Pipelines. Each comes with its own strengths and nuances, catering to different scenarios and preferences. Let's compare the two to better understand their differences and benefits.

Scripted Pipeline

Scripted Pipelines, as explored in earlier sections, provide the canvas for intricate orchestration. They offer unmatched flexibility, enabling you to craft pipelines with fine-grained control over every aspect. You can write pipeline scripts using the Groovy scripting language, leveraging its dynamic nature to accommodate complex logic and conditional execution. This approach is well-suited for projects that demand advanced customization and where a more programmatic approach is preferred.

Pros:

- Unrestricted Flexibility: Scripted Pipelines offer complete control and flexibility, making them ideal for complex workflows and unique project requirements.

- Dynamic Logic: The Groovy scripting language allows you to introduce dynamic decision-making and logic within your pipeline.

- Custom Libraries: You can create and leverage custom functions, libraries, and shared code snippets to enhance reusability.

Cons:

- Learning Curve: Mastering Groovy scripting and managing complex logic can involve a steep learning curve.

- Prone to Errors: With great flexibility comes the potential for complex scripts that introduce errors or are difficult to troubleshoot.

Declarative Pipeline

Declarative Pipelines, on the other hand, focus on structure and simplicity. They allow you to define pipelines using a declarative syntax that abstracts away much of the complexity. With a simplified and human-readable structure, Declarative Pipelines are particularly suitable for projects where ease of use and maintaining a clear overview are crucial. They follow a "convention over configuration" approach, making them a great choice for teams with varying levels of pipeline scripting expertise.
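For comparison, a minimal Declarative sketch of a Build/Test pipeline with post-build actions (the `make` targets are placeholders). Note the fixed `pipeline`, `agent`, `stages`, and `post` sections:

```groovy
// Declarative syntax: a fixed 'pipeline' structure with named sections
pipeline {
    agent any
    stages {
        stage('Build') {
            steps {
                sh 'make build'
            }
        }
        stage('Test') {
            steps {
                sh 'make test'
            }
        }
    }
    post {
        always {
            echo 'Runs after every build, whatever the result'
        }
        failure {
            echo 'Runs only if the build failed'
        }
    }
}
```

In Scripted syntax, `agent any` corresponds roughly to wrapping the work in `node { ... }`, and the `post` conditions are expressed with try/catch/finally.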

Pros:

- Simplicity: Declarative Pipelines offer a straightforward syntax that's easier to learn and understand, making them suitable for teams with diverse skill sets.

- Readability: The human-readable structure enhances collaboration and makes it easier to comprehend the pipeline's flow.

- Ease of Maintenance: Declarative Pipelines follow best practices by default, reducing the chances of errors and enhancing maintainability.

Cons:

- Limited Flexibility: Declarative Pipelines sacrifice some advanced customization in favor of simplicity, which might not be suitable for highly complex workflows.

- Constraints: Certain complex scenarios require workarounds, such as embedding Scripted code in a `script { }` block, or fall outside the scope of Declarative Pipeline's simplified structure.

Declarative Pipelines, despite their limitations, prove to be immensely valuable in scenarios where a balance between simplicity and efficiency is paramount. For instance, consider a software development team working on a frequent release cycle for a web application. In this context, the team needs to ensure that each release follows a consistent and error-free deployment process. Declarative Pipelines can shine here by providing an intuitive and easy-to-understand structure for orchestrating the build, test, and deployment stages. Even though the project might have some complexities, such as running various tests, generating documentation, and deploying to multiple environments, the team benefits from the reduced learning curve and enhanced readability of Declarative Pipelines.

Real-world Examples

Scripted Pipelines are especially useful when a workflow needs logic that a fixed structure cannot express. Here are some practical, real-world use cases:

Dynamic Deployment:

Imagine a project where deployments vary based on the branch being built. Using a Scripted Pipeline, you can dynamically determine the deployment environment based on the branch name. This ensures that features under development are deployed to testing environments while maintaining production deployments for the main branch.

Multi-Platform Testing:

In a cross-platform application, you might require comprehensive testing on various operating systems. A Scripted Pipeline can spin up different agent nodes, each representing a different platform, and execute tests tailored to the respective platform.
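A sketch of how Groovy logic can build the parallel branches dynamically. The agent labels `linux`, `macos`, and `windows` and the test commands (including the `build.bat` script) are hypothetical; each label must match agents configured in your Jenkins instance:

```groovy
def platforms = ['linux', 'macos', 'windows']
def branches = [:]

for (p in platforms) {
    def label = p   // copy the loop value so each closure keeps its own label
    branches[label] = {
        // Request an agent carrying this label and run that platform's tests
        node(label) {
            checkout scm
            if (isUnix()) {
                sh 'make test'
            } else {
                bat 'build.bat test'
            }
        }
    }
}

// Run all platform branches at the same time
parallel branches
```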

External Integrations:

Consider a scenario where your pipeline needs to interact with external tools, like deploying to cloud services or sending notifications to chat platforms. With Scripted Pipelines, you can seamlessly integrate these interactions using custom Groovy scripts.

Custom Logic:

For projects with intricate deployment workflows, you can define custom logic within Scripted Pipelines. This allows you to incorporate approval processes, conditional deployment paths, and other project-specific logic.
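A minimal sketch of a manual approval gate using the built-in `input` step, with placeholder `make` targets:

```groovy
node {
    stage('Build') {
        sh 'make build'
    }
    stage('Approval') {
        // Pause until someone approves; give up after one hour
        timeout(time: 1, unit: 'HOURS') {
            input message: 'Deploy this build to production?', ok: 'Deploy'
        }
    }
    stage('Deploy') {
        sh 'make deploy'
    }
}
```

If nobody approves within the hour, or someone clicks Abort, the build stops before the Deploy stage. Because `input` runs inside `node` here, the agent's executor stays occupied while waiting; for long approvals, a common pattern moves the approval stage outside `node` and passes build outputs between nodes with `stash` and `unstash`.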

Conclusion

- Scripted Jenkins Pipelines empower complex workflows with dynamic Groovy scripting, offering unmatched customization and control.

- Conditional execution adapts pipelines to varying scenarios, making decisions based on conditions and optimizing resource utilization.

- Error handling and post-build actions fortify pipelines, responding to challenges and concluding with finesse.

- Scripted Jenkins and Declarative Jenkins Pipelines offer distinct strengths; Scripted excels in customization, while Declarative simplifies with structure.

- Scripted Jenkins Pipelines shine in dynamic deployments, multi-platform testing, external integrations, and custom logic orchestration.

- Scripted Jenkins Pipelines elevate CI/CD workflows, granting adaptability, control, and the tools to navigate the ever-evolving software development landscape.