Stack organization refers to a computer architecture where memory is managed in a Last-In-First-Out (LIFO) structure. It’s commonly used for function calls and local variables. The stack contains data such as return addresses and values, aiding program flow. This organized approach simplifies memory management and improves efficiency in execution.

What is a Stack Organization?

Stack organization is a fundamental concept in computer architecture and programming that involves the management of memory using a Last-In-First-Out (LIFO) data structure. A stack is a specialized form of data storage that operates on the principle of pushing and popping elements. It is widely used in various computing contexts, including function calls, memory allocation, and expression evaluation.

Stack Operations:

The primary operations associated with a stack are push and pop. When an element is pushed onto the stack, it is added to the top of the stack, becoming the most recent element. When an element is popped from the stack, the most recent element is removed, and the stack’s top pointer is adjusted accordingly.

The push operation involves the following steps:

- Check if the stack is full.

- If it is full, report an overflow condition and stop.

- Otherwise, increment the top pointer.

- Store the new element at the updated top position.

The pop operation involves the following steps:

- Check if the stack is empty.

- If not empty, retrieve the element at the top position.

- Decrement the top pointer.

- Return the retrieved element.

In addition to push and pop, other stack-related operations include peek (to view the top element without removing it) and isEmpty (to check if the stack is empty).
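The push, pop, peek, and isEmpty steps above can be sketched as follows. This is a minimal illustration, not a definitive implementation: the class name `BoundedStack`, the method names, and the choice of exceptions are assumptions made for the example.

```python
class BoundedStack:
    """Fixed-capacity stack backed by a preallocated list (illustrative only)."""

    def __init__(self, capacity):
        self._items = [None] * capacity
        self._top = -1  # index of the most recent element; -1 means empty

    def is_empty(self):
        return self._top == -1

    def is_full(self):
        return self._top == len(self._items) - 1

    def push(self, value):
        # Step 1: check for overflow before touching the top pointer.
        if self.is_full():
            raise OverflowError("stack overflow")
        # Step 2: increment the top pointer, then store the element there.
        self._top += 1
        self._items[self._top] = value

    def pop(self):
        # Step 1: check for underflow.
        if self.is_empty():
            raise IndexError("stack underflow")
        # Step 2: retrieve the top element, then decrement the top pointer.
        value = self._items[self._top]
        self._items[self._top] = None
        self._top -= 1
        return value

    def peek(self):
        # Return the top element without removing it.
        if self.is_empty():
            raise IndexError("peek at empty stack")
        return self._items[self._top]


stack = BoundedStack(capacity=3)
stack.push(10)
stack.push(20)
print(stack.peek())      # 20
print(stack.pop())       # 20
print(stack.pop())       # 10
print(stack.is_empty())  # True
```

Checking for a full or empty stack before moving the top pointer keeps the pointer valid even when an operation fails.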

Stack Implementation:

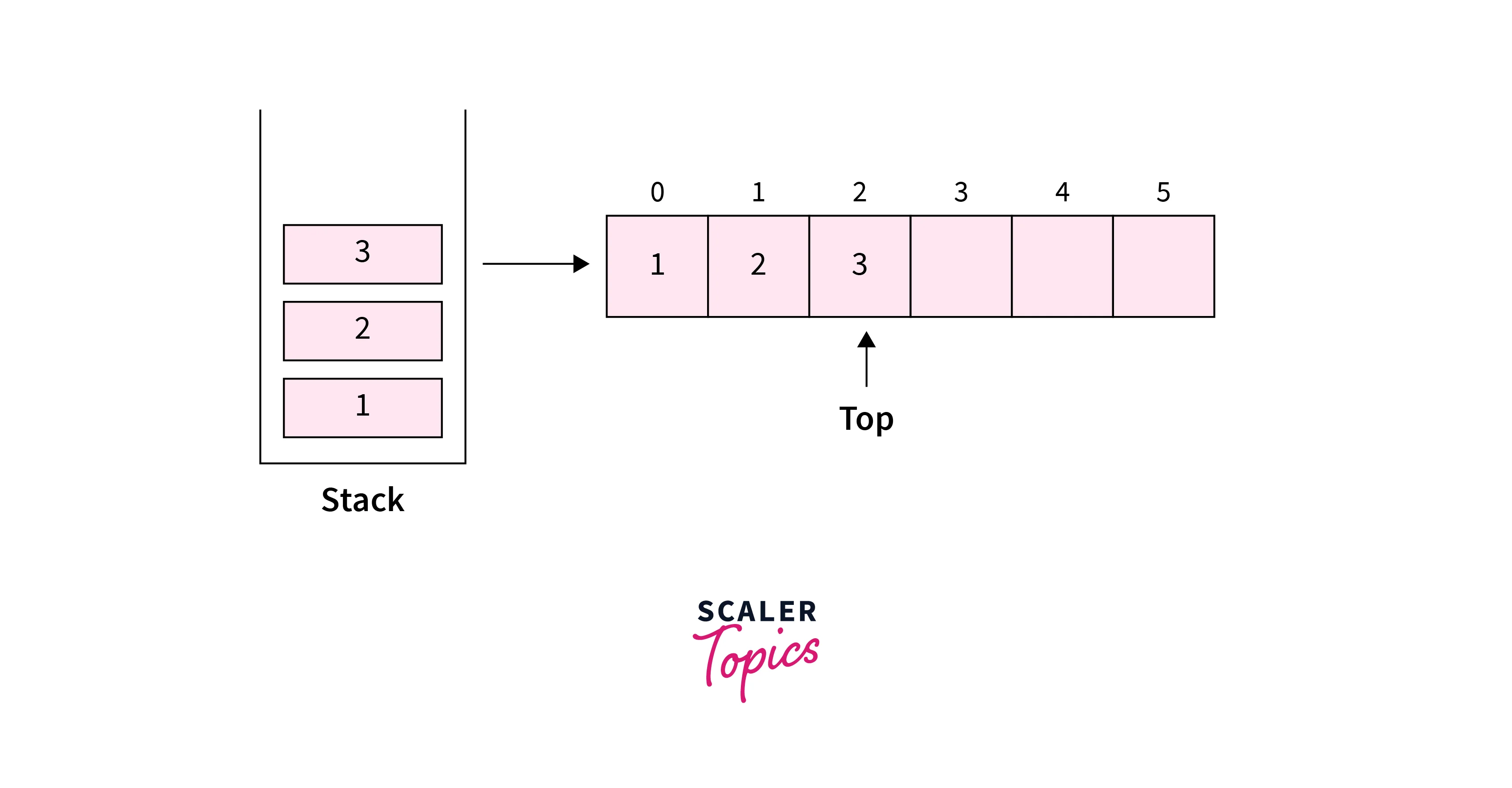

Implementing a stack can be achieved using various programming constructs and data structures. Two common methods are array-based implementation and linked list-based implementation.

1. Array-Based Implementation:

In this approach, an array of fixed size is used to store stack elements. The top pointer indicates the index of the last inserted element.

Advantages:

- Simple implementation.

- Efficient memory usage when the maximum size is known.

Disadvantages:

- Fixed size, which can lead to overflow when a push exceeds the capacity.

- Resizing is costly if the array becomes full, because the existing elements must be copied into a larger array.
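To make the resizing cost concrete, here is a rough sketch of an array-based stack that doubles its capacity when it fills up and counts how many elements it has to copy. The doubling policy, the class name `GrowableStack`, and the `copies` counter are assumptions chosen for illustration. The article does not prescribe a resizing strategy.

```python
class GrowableStack:
    """Array-based stack that doubles its backing array when full (illustrative)."""

    def __init__(self, capacity=2):
        # Assumes capacity >= 1 so that doubling actually grows the array.
        self._items = [None] * capacity
        self._size = 0
        self.copies = 0  # total elements moved during all resizes

    def push(self, value):
        if self._size == len(self._items):
            self._grow()
        self._items[self._size] = value
        self._size += 1

    def _grow(self):
        # Allocate a larger array and copy every existing element into it.
        bigger = [None] * (2 * len(self._items))
        for i in range(self._size):
            bigger[i] = self._items[i]
            self.copies += 1
        self._items = bigger

    def pop(self):
        if self._size == 0:
            raise IndexError("stack underflow")
        self._size -= 1
        value = self._items[self._size]
        self._items[self._size] = None
        return value


stack = GrowableStack(capacity=2)
for n in range(9):
    stack.push(n)
print(stack.copies)  # 14 (2 + 4 + 8 elements copied across three resizes)
print(stack.pop())   # 8
```

Each resize touches every stored element, which is why a single push on a full array is much more expensive than an ordinary push.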

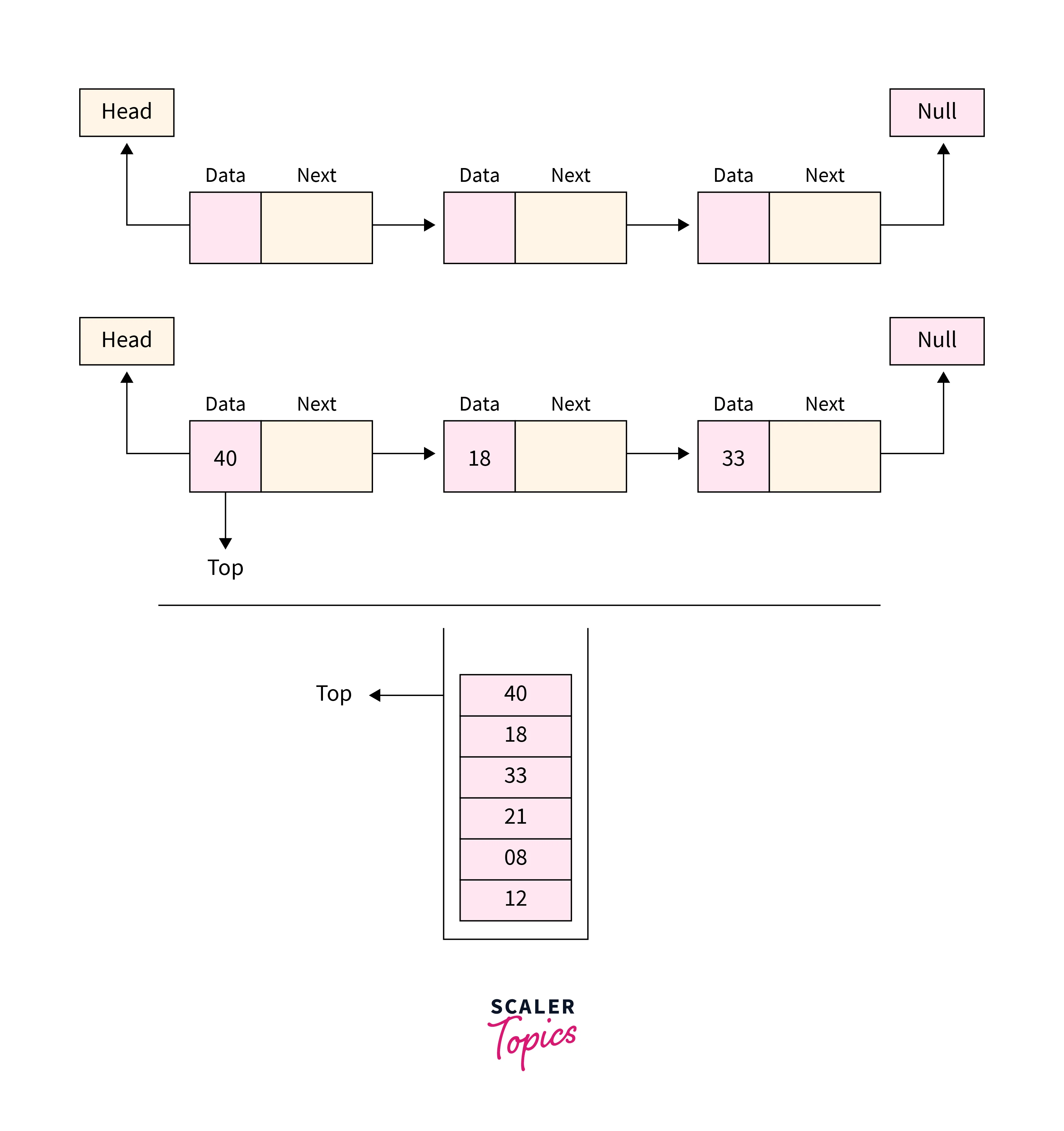

2. Linked List-Based Implementation:

In this approach, a linked list is used to represent the stack. Each node in the linked list holds an element and a reference to the next node.

Advantages:

- Dynamic size: memory usage grows as needed.

- No fixed capacity limitations.

Disadvantages:

- Slightly more complex implementation compared to array-based.

- Slightly more memory overhead due to node references.
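A linked list-based stack along these lines might look like the sketch below. The names `Node` and `LinkedStack` are hypothetical, and the head of the list is assumed to serve as the top of the stack so that push and pop never traverse the list.

```python
class Node:
    """One stack element plus a reference to the node beneath it."""

    def __init__(self, value, next_node=None):
        self.value = value
        self.next = next_node


class LinkedStack:
    """Stack whose top is the head of a singly linked list (illustrative)."""

    def __init__(self):
        self._head = None
        self._size = 0

    def is_empty(self):
        return self._head is None

    def push(self, value):
        # The new node points at the old top and becomes the new top.
        self._head = Node(value, self._head)
        self._size += 1

    def pop(self):
        if self._head is None:
            raise IndexError("stack underflow")
        node = self._head
        self._head = node.next  # the node beneath becomes the new top
        self._size -= 1
        return node.value

    def peek(self):
        if self._head is None:
            raise IndexError("peek at empty stack")
        return self._head.value

    def __len__(self):
        return self._size


stack = LinkedStack()
for word in ["a", "b", "c"]:
    stack.push(word)
print(len(stack))   # 3
print(stack.pop())  # c
print(stack.peek()) # b
```

There is no capacity check in `push`, which reflects the dynamic-size advantage. The extra `next` reference in every node is the memory overhead listed among the disadvantages.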

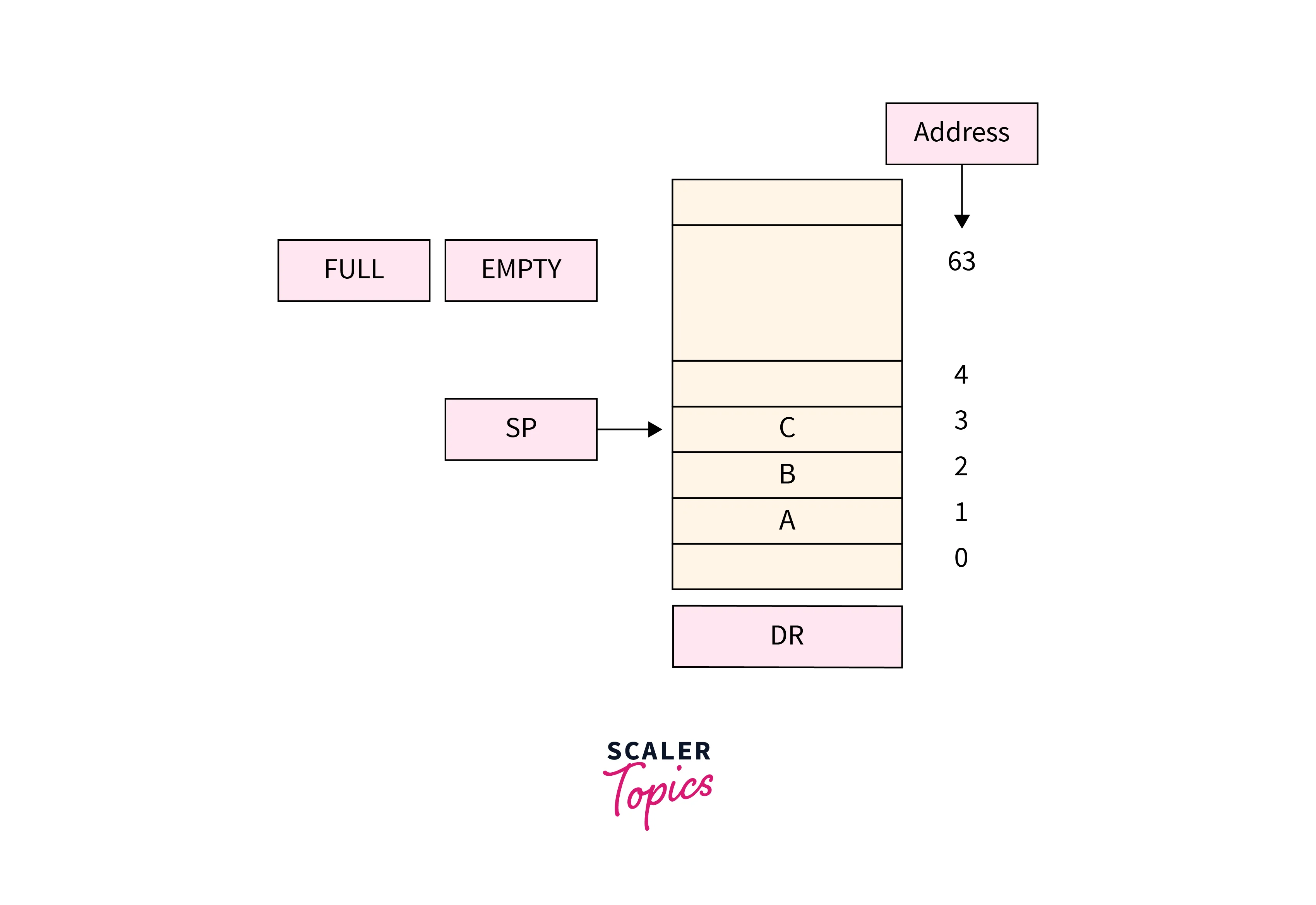

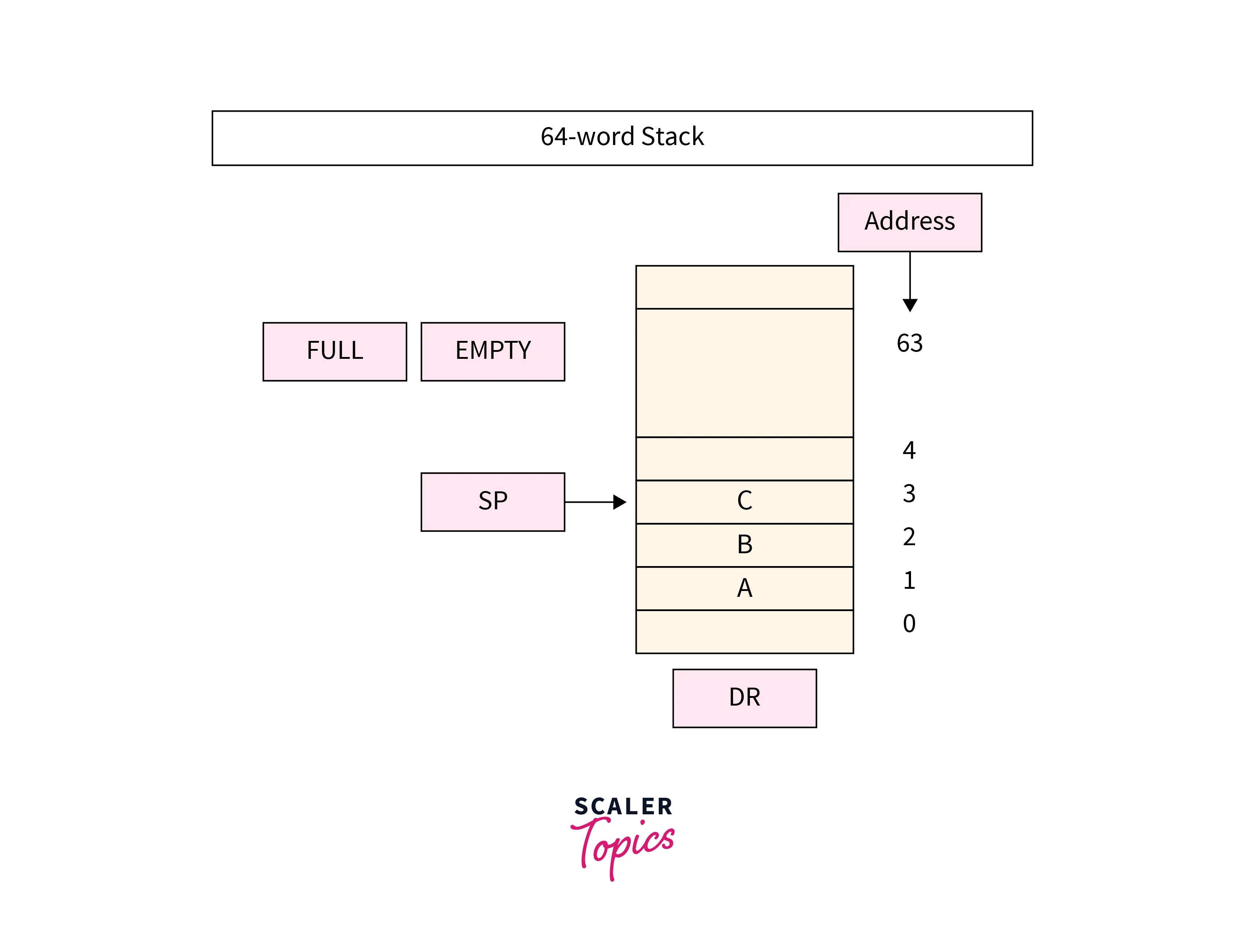

Register Stack

A register stack, also known as a register file stack, is a specialized type of hardware structure within a computer’s central processing unit (CPU) that facilitates efficient register management and context switching. Registers are small, fast memory locations that hold data that the CPU uses for immediate calculations and operations.

Key Features and Functions:

- Context Switching: Register stacks allow for efficient context switching between different tasks or processes. When the CPU switches from one task to another, it can simply swap the entire register stack context, reducing the overhead associated with saving and restoring individual register values.

- Function Calls: During function calls, a register stack can be utilized to save the caller’s context, including return addresses and function arguments. This enables the CPU to seamlessly switch between different functions without losing track of execution.

- Performance Enhancement: Register stacks can improve performance by reducing memory access latency. Registers are much faster to access compared to main memory. By utilizing multiple registers in a stack, the CPU can keep frequently accessed data closer, minimizing the need to fetch data from slower memory locations.

- Parallel Execution: Modern CPUs often have multiple execution units that can perform different tasks simultaneously. Register stacks can help manage the data flow between these units efficiently, allowing for better utilization of the CPU’s parallel processing capabilities.

Implementation:

A register stack is typically implemented using a combination of hardware registers and control logic. The number of registers in the stack and the organization of the stack depend on the CPU’s architecture and design goals.
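As a rough software model of "registers plus control logic", the sketch below simulates one textbook-style organization: a small bank of registers, a stack pointer that wraps around, and two one-bit flags for the full and empty conditions. The register count, the wrap-around behavior, and the names `RegisterStack`, `sp`, `full`, and `empty` are assumptions for illustration. Real CPUs differ in how they organize their register stacks.

```python
class RegisterStack:
    """Software model of a small hardware register stack (illustrative only)."""

    def __init__(self, size=4):
        self.regs = [0] * size  # the register bank
        self.sp = 0             # stack pointer, wraps modulo the bank size
        self.full = False       # flag set when every register is in use
        self.empty = True       # flag set when no register is in use

    def push(self, value):
        if self.full:
            raise OverflowError("register stack is full")
        self.sp = (self.sp + 1) % len(self.regs)  # advance pointer first
        self.regs[self.sp] = value                # then write the register
        self.full = (self.sp == 0)                # wrapping to 0 means full
        self.empty = False

    def pop(self):
        if self.empty:
            raise IndexError("register stack is empty")
        value = self.regs[self.sp]                # read the register first
        self.sp = (self.sp - 1) % len(self.regs)  # then move pointer back
        self.empty = (self.sp == 0)               # back at 0 means empty
        self.full = False
        return value


rs = RegisterStack(size=4)
for value in [1, 2, 3, 4]:
    rs.push(value)
print(rs.full)                       # True
print([rs.pop() for _ in range(4)])  # [4, 3, 2, 1]
print(rs.empty)                      # True
```

In this model the flags, not the pointer value alone, distinguish a full stack from an empty one, since the pointer is 0 in both cases.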

Benefits and Considerations:

The advantages of register stacks include faster context switching, reduced memory access latency, improved performance, and enhanced support for parallel execution. However, implementing and managing register stacks can be complex and requires careful hardware design to ensure efficient usage and minimize potential bottlenecks.

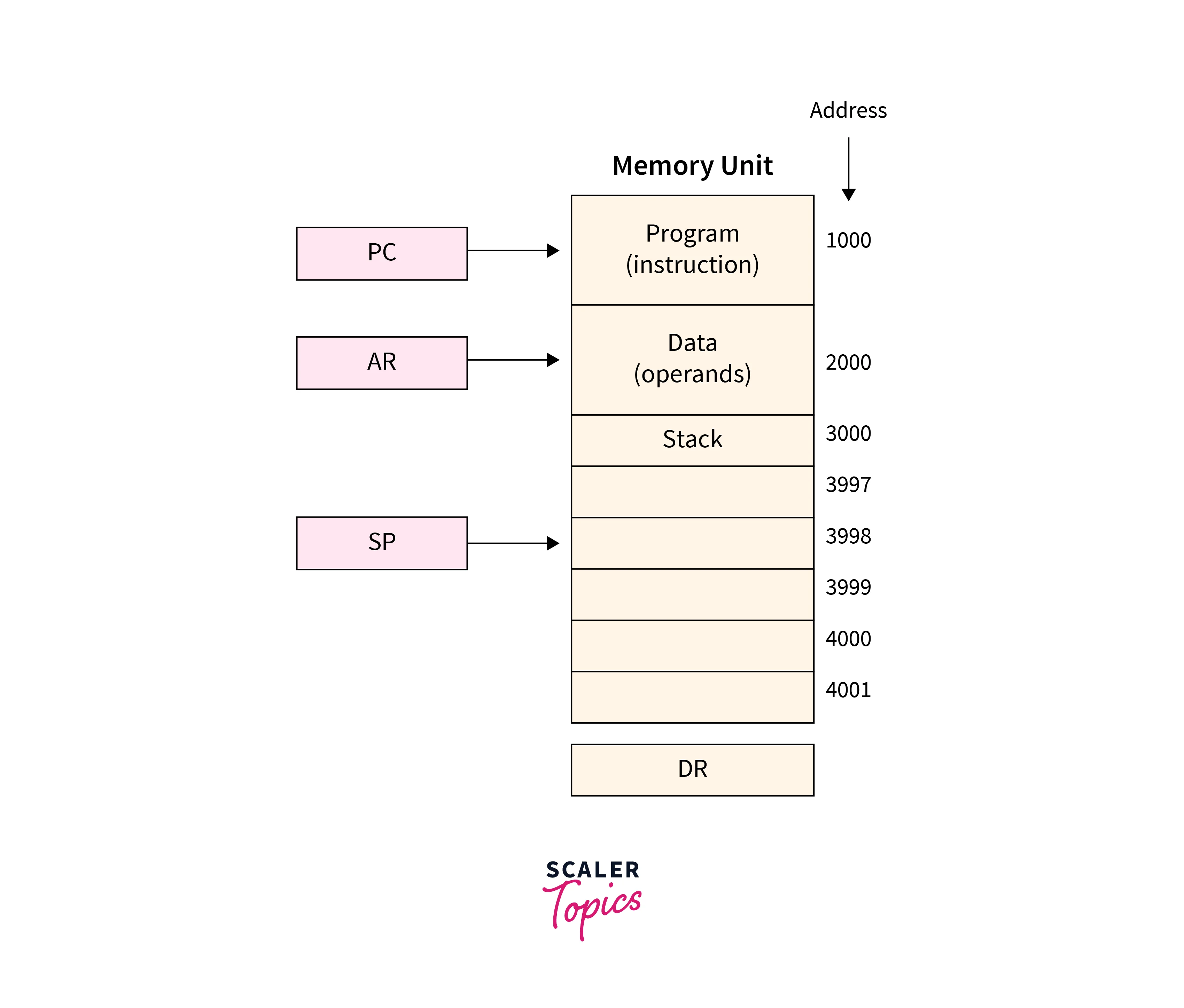

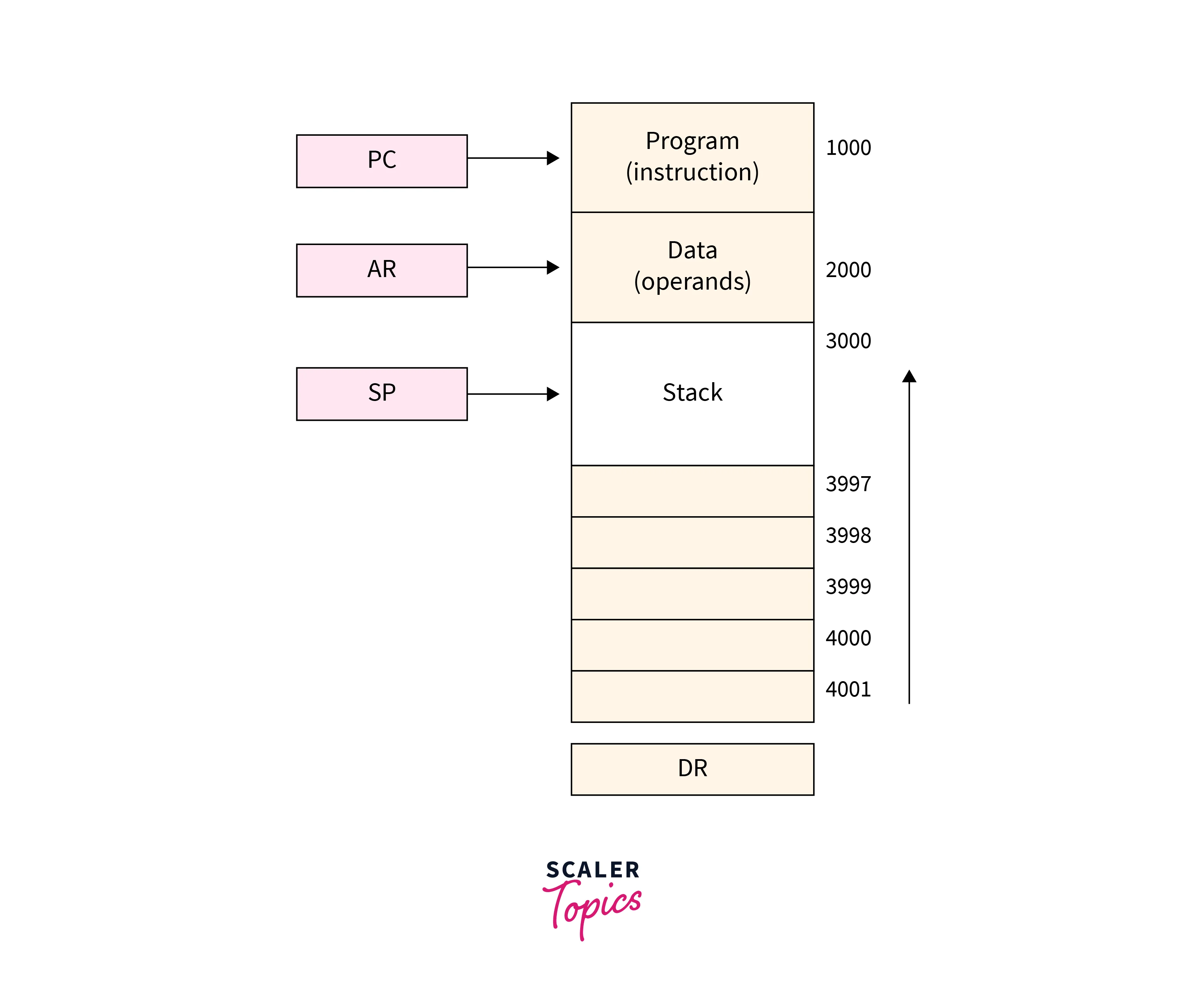

Memory Stack

A memory stack, also known simply as a stack, is a crucial data structure used in computer programming and computer architecture for managing memory in a Last-In-First-Out (LIFO) manner. It operates as a dynamic storage area where data is organized in a way that the most recently added item is the first to be removed. The stack’s primary purpose is to keep track of program execution flow, local variables, and function calls.
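To illustrate how a stack keeps separate local variables for each pending function call, the sketch below computes a factorial with an explicit list of frames instead of language-level recursion. The frame layout (a dictionary holding a single local `n`) is a simplification assumed for this example. Real call frames also hold return addresses and other saved state.

```python
def factorial(n):
    """Compute n! for a non-negative integer n using an explicit frame stack."""
    call_stack = []

    # "Call" phase: every pending call pushes a frame with its own local n.
    while n > 1:
        call_stack.append({"n": n})
        n -= 1

    result = 1  # value produced by the innermost (base case) call

    # "Return" phase: frames are popped in LIFO order, so the most recent
    # call finishes first and hands its result to its caller.
    while call_stack:
        frame = call_stack.pop()
        result *= frame["n"]
    return result


print(factorial(5))  # 120
```

Each frame disappears as soon as its call completes, which mirrors how stack memory for local variables is released on return.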

Key Characteristics and Functions:

- LIFO Structure: Data is added and removed in last-in, first-out order, similar to a stack of plates.

- Function Calls: Stacks manage function calls by storing return addresses and local variables, so that execution can resume at the right place after a function completes.

- Local Variables: The stack holds each function’s local variables, keeping the data of separate function instances distinct.

- Expression Evaluation: Stacks assist in evaluating mathematical expressions with parentheses and operators, preserving the order of operations so that the result is computed correctly.
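As a small example of stack-based expression evaluation, the sketch below evaluates an expression written in postfix (reverse Polish) notation, where operand order already encodes the parentheses. The function name `eval_postfix`, the space-separated token format, and the four supported operators are assumptions made for illustration.

```python
import operator

# Supported binary operators (an illustrative subset).
OPS = {
    "+": operator.add,
    "-": operator.sub,
    "*": operator.mul,
    "/": operator.truediv,
}


def eval_postfix(expression):
    """Evaluate a space-separated postfix expression such as '3 4 + 2 *'."""
    stack = []
    for token in expression.split():
        if token in OPS:
            if len(stack) < 2:
                raise ValueError("operator is missing an operand")
            right = stack.pop()  # the most recently pushed operand
            left = stack.pop()
            stack.append(OPS[token](left, right))
        else:
            stack.append(float(token))
    if len(stack) != 1:
        raise ValueError("malformed expression")
    return stack[0]


# Postfix form of (3 + 4) * 2
print(eval_postfix("3 4 + 2 *"))  # 14.0
```

Operands wait on the stack until their operator arrives, so the LIFO order itself enforces the intended order of operations.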

Implementation and Usage:

Memory stacks are commonly implemented using arrays or linked lists. In array-based implementation, a fixed-size array is used to hold the stack’s elements. In linked list-based implementation, nodes with data and pointers to the next node create the stack’s structure.

Advantages of Stack Organization

- Efficient Management: The stack’s LIFO structure makes allocation and release cheap, because both only adjust the top pointer.

- Automatic Cleanup: Stack memory is reclaimed automatically when a function returns, which prevents leaks of stack-allocated data.

- Predictable Access: Accesses cluster near the top of the stack, which tends to improve cache use and reduce misses.

- Function Handling: The stack manages calls and returns efficiently, which also aids context switches.

- Structured Frames: Frames with a fixed layout aid context switches and can hold extra information.

- Thread Safety: Thread-specific stacks minimize the risk of data corruption.

- Minimal Fragmentation: The stack suffers less memory fragmentation than the heap.

- Low Overhead: The stack requires less metadata than the heap.

- Compiler Optimization: Compilers optimize stack-based code well.

- Hardware Support: Many processors offer built-in support for stack operations.

- Interrupt Handling: The stack is essential for saving and restoring execution context during interrupts and exceptions.

- Context Switching: The stack is important for transitioning between threads or processes while preserving their states.

- Memory Allocation Predictability: Stack memory allocation follows a predictable pattern.

Disadvantages of Stack Organization

- Fixed Size: Stacks have a limited memory size, which can lead to overflow errors if exceeded.

- Limited Lifetime: Data on the stack is automatically removed when its scope ends, so it cannot outlive the function that created it.

- No Dynamic Allocation: Stack storage is poorly suited to allocations whose size or lifetime is decided at run time, unlike the heap.

- Thread Safety: Concurrent access to a shared stack by multiple threads can cause synchronization issues.

- Fragmentation: Reserving a contiguous region for each stack can fragment the address space, particularly when many stacks exist at once.

- Limited Data Sharing: Data sharing between functions is challenging due to isolated stack frames.

- Inefficient for Large Data: Stacks are inefficient for managing complex or large data structures.

- No Persistent Storage: Stack data doesn’t persist beyond program execution.

- Compiler Limits: The compiler or runtime can impose restrictions on stack size.

- Lack of Flexibility: Stacks lack the heap’s ability to allocate and free memory in arbitrary order.
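The size limits mentioned above can be observed directly in a language runtime. The sketch below assumes CPython, which caps the depth of the call stack and raises `RecursionError` when a program exceeds it. The helper names `depth` and `overflows` are hypothetical.

```python
import sys


def depth(n):
    """Recurse n levels deep, consuming one call-stack frame per level."""
    return 0 if n == 0 else 1 + depth(n - 1)


def overflows(n):
    """Return True if recursing n levels exceeds the runtime's stack limit."""
    try:
        depth(n)
        return False
    except RecursionError:
        return True


limit = sys.getrecursionlimit()  # the runtime-imposed cap on call depth
print(overflows(10))             # False
print(overflows(limit + 100))    # True
```

A language without such a guard would instead run past the end of the stack region, which is the overflow error described in the first item of the list.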

Conclusion

- Stack organization employs Last-In-First-Out (LIFO) structure for memory management.

- Core operations include push (add) and pop (remove) of data elements.

- Efficiently handles function calls, managing return addresses and local variables.

- Enables predictable context switching and aids parallel execution.

- Simplifies expression evaluation, maintaining the order of operations.

- Provides a structured approach to manage program execution flow.

- Register stacks optimize hardware context switching in CPUs.

- Drawbacks include fixed size leading to potential overflow issues.

- Reserving contiguous stack regions can contribute to fragmentation of the address space.

- Limited lifespan of data and unsuitability for dynamic data structures.