Superscalar Architecture

Overview

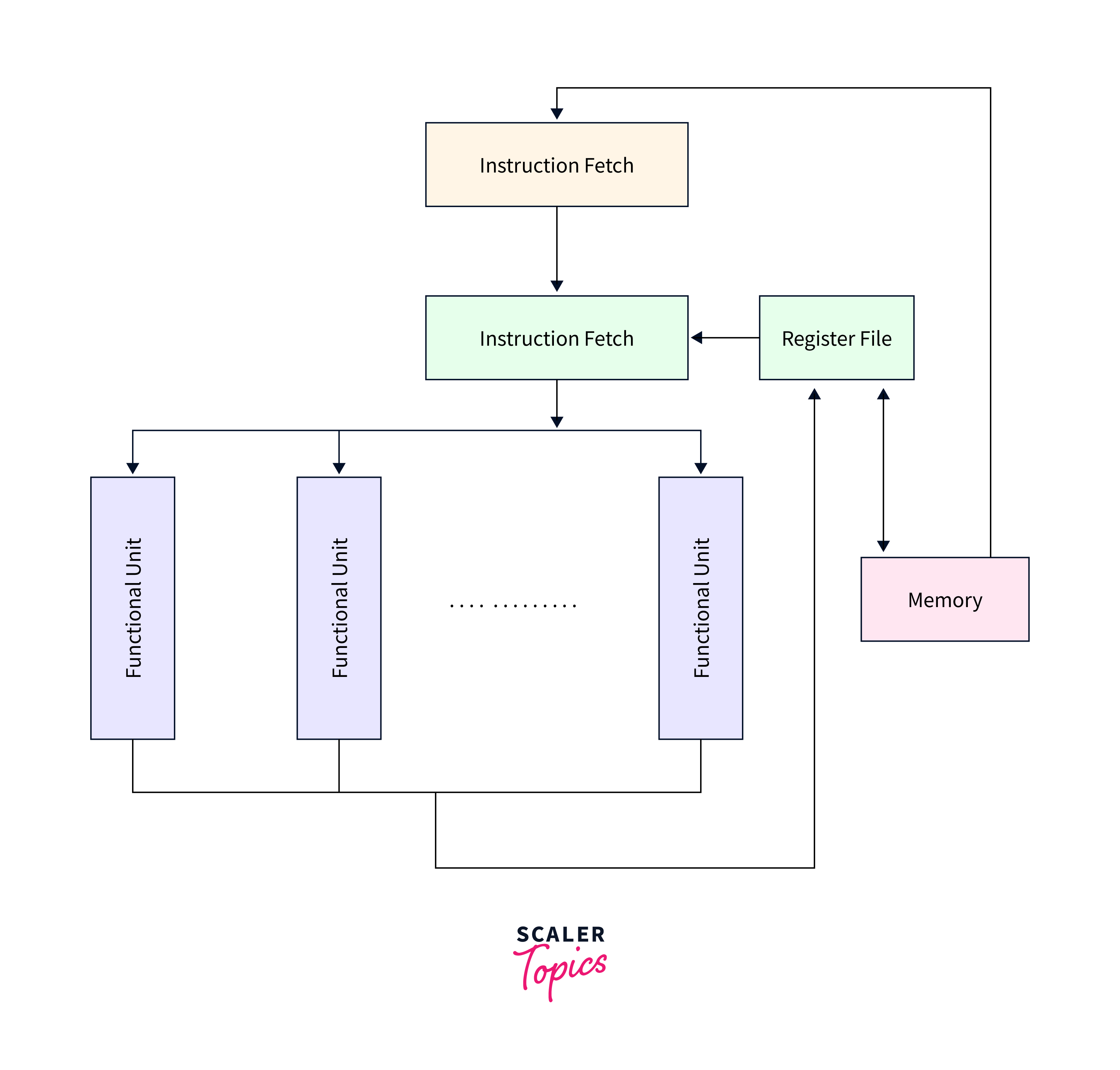

Superscalar architecture is a processor design used in most modern high-performance microprocessors. A scalar processor issues at most one instruction per clock cycle, whereas a superscalar processor can issue and execute several instructions in the same cycle. This is achieved by incorporating multiple execution units and a sophisticated instruction scheduler. Superscalar processors analyze the instruction stream, identify independent instructions, and dispatch them to available execution units simultaneously. This parallel execution raises the processor's throughput whenever the code contains enough independent instructions to keep the execution units busy.

What is Superscalar Architecture?

Superscalar architecture is a CPU (Central Processing Unit) design that increases performance by executing more than one instruction per clock cycle. A scalar architecture completes at most one instruction per clock cycle, while a superscalar architecture issues several instructions in the same cycle to separate execution units, achieving instruction-level parallelism within a single processor core.
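The effect of issuing several instructions per cycle can be expressed with the standard processor performance relation. The symbols below are introduced here for illustration and do not come from the text above: $T$ is execution time, $N$ is the number of instructions executed, $f$ is the clock frequency, and $W$ is the issue width (the maximum number of instructions issued per cycle).

$$
T = \frac{N}{\mathrm{IPC} \times f}, \qquad \mathrm{IPC} \le 1 \ \text{(scalar)}, \qquad \mathrm{IPC} \le W \ \text{(superscalar)}
$$

As a worked example under these assumptions, a program of $N = 10^9$ instructions on a 2 GHz clock takes at least 0.5 s at IPC = 1, and at least 0.25 s if the processor sustains IPC = 2. The bound $W$ is rarely reached in practice, because dependencies, cache misses, and branch mispredictions lower the sustained IPC.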

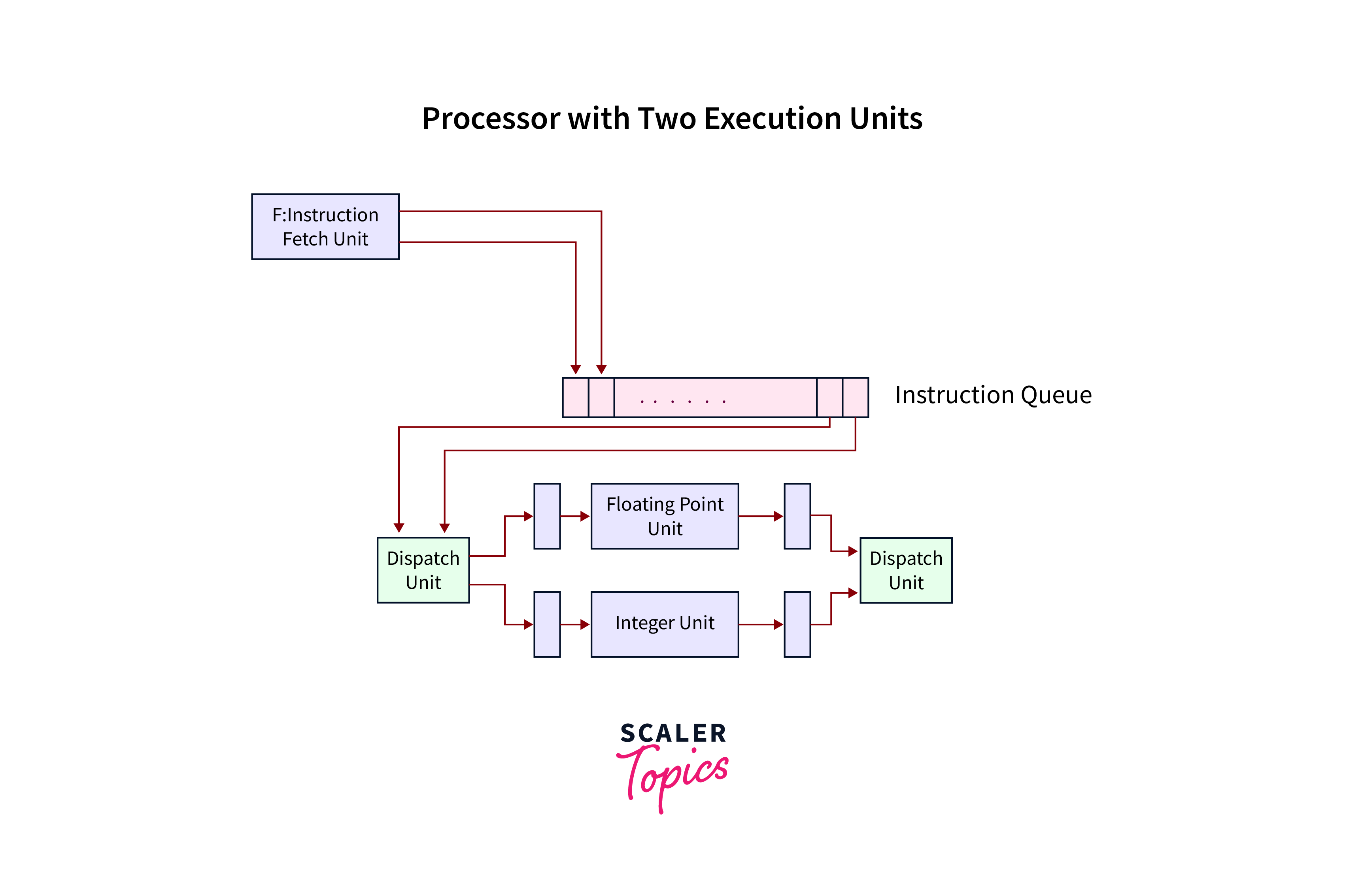

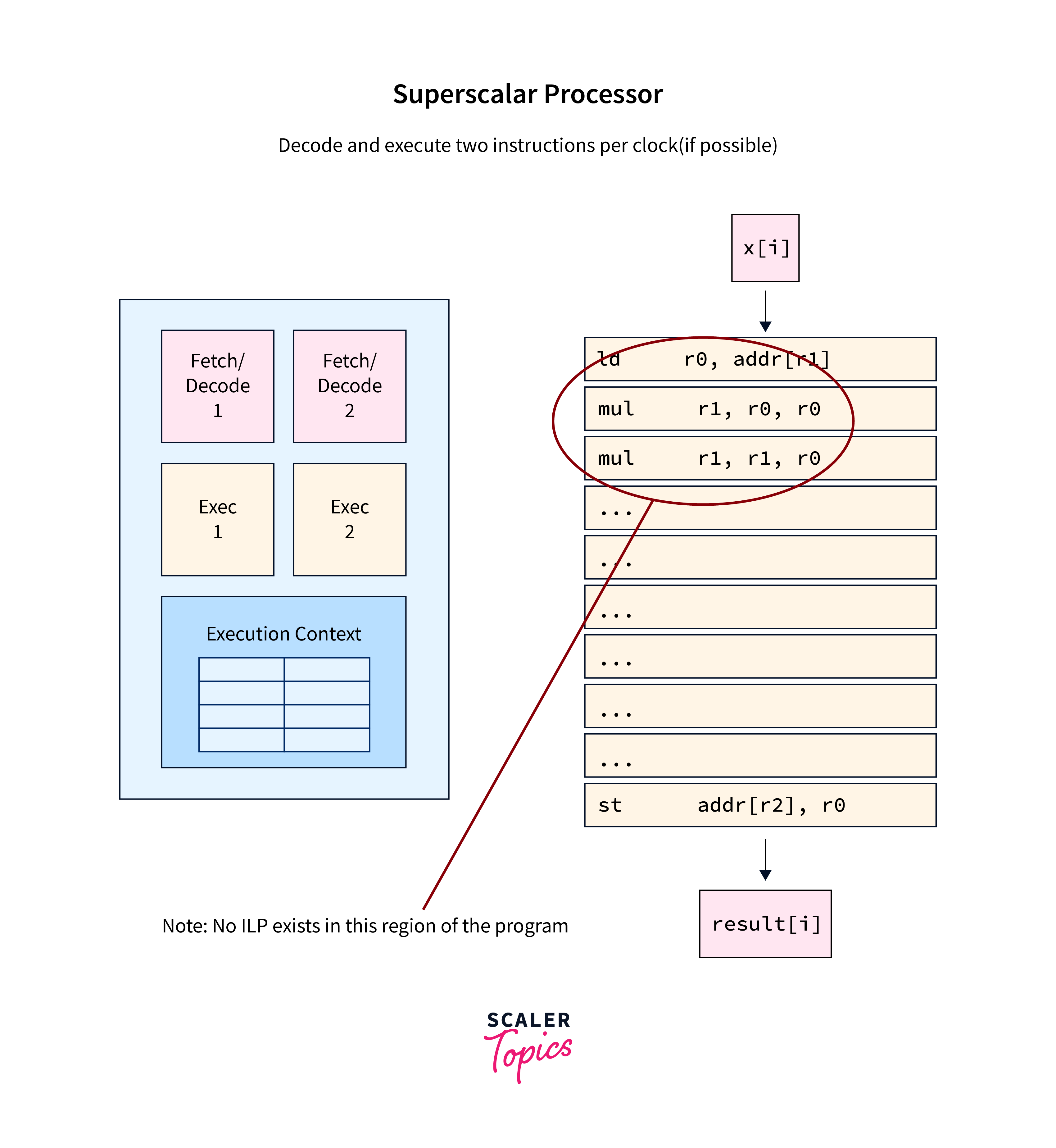

The key feature of a superscalar processor is its ability to analyze and dispatch multiple instructions from a program in a single clock cycle, provided that these instructions are independent and can be executed concurrently. This is made possible through the inclusion of multiple execution units within the CPU, each responsible for handling specific types of instructions (e.g., arithmetic operations, memory access, branch instructions, etc.).

To efficiently manage the execution of instructions, superscalar processors also incorporate a sophisticated instruction scheduler or dispatcher. This scheduler is responsible for examining the incoming stream of instructions, identifying those that can be executed in parallel, and dispatching them to the available execution units.
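The scheduler's job can be sketched in a few lines of Python. This is a simplified illustration, not a model of any real processor: it assumes an in-order, two-wide machine, single-cycle instructions, and a conservative rule that any register conflict within a group forces the later instruction to wait. The `Instr` type, the register names, and the sample program are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Instr:
    """A toy instruction: one destination register and its source registers."""
    text: str
    dest: str
    srcs: tuple = ()


def conflicts(later: Instr, earlier: Instr) -> bool:
    """True if `later` may not issue in the same cycle as `earlier`."""
    raw = earlier.dest in later.srcs   # read-after-write: needs earlier's result
    waw = earlier.dest == later.dest   # write-after-write: same destination
    war = later.dest in earlier.srcs   # write-after-read: would overwrite an input
    return raw or waw or war


def issue_groups(program, width=2):
    """Pack instructions, in program order, into groups of at most `width`."""
    groups, current = [], []
    for instr in program:
        blocked = any(conflicts(instr, prev) for prev in current)
        if current and (blocked or len(current) == width):
            groups.append(current)     # close the group; start a new cycle
            current = []
        current.append(instr)
    if current:
        groups.append(current)
    return groups


program = [
    Instr("add r1, r2, r3", "r1", ("r2", "r3")),
    Instr("mul r4, r5, r6", "r4", ("r5", "r6")),   # independent of the add
    Instr("sub r7, r1, r4", "r7", ("r1", "r4")),   # needs r1 and r4
    Instr("or  r8, r9, r9", "r8", ("r9",)),        # independent of the sub
]

for cycle, group in enumerate(issue_groups(program), start=1):
    print(cycle, [i.text for i in group])
```

With this sample program the four instructions issue in two cycles instead of four: the `add` and `mul` go together, and the `sub` has to wait for both results before it can pair with the `or`.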

Superscalar architectures have become vital in meeting the ever-increasing demands of modern computing tasks, such as multimedia processing, scientific simulations, and complex software applications. They offer the advantage of dramatically improved instruction throughput and overall performance, enabling processors to execute more instructions in a given period and achieve higher levels of computational efficiency.

Advantages of Superscalar Architecture

Some of the key advantages offered by superscalar architecture are:

- Increased Instruction Throughput: A superscalar processor can issue multiple instructions in a single clock cycle. This results in a substantial increase in instruction throughput compared to scalar processors, which issue at most one instruction per cycle.

- Improved Performance: Superscalar processors excel in handling a wide range of tasks, from basic arithmetic operations to complex calculations and data manipulations. This improved performance is especially beneficial for applications that require extensive computational power, such as scientific simulations, 3D rendering, video encoding/decoding, and artificial intelligence tasks like deep learning.

- Efficient Resource Utilization: Superscalar processors feature multiple execution units, each specialized in executing specific types of instructions (e.g., arithmetic, memory access, control flow, etc.). This allows for efficient resource utilization, as the CPU can allocate and execute instructions optimizing the use of available hardware resources.

- Parallelism Exploitation: Superscalar architectures leverage instruction-level parallelism (ILP), which enables the concurrent execution of independent instructions. The CPU's instruction scheduler identifies and dispatches these independent instructions to different execution units simultaneously. This parallelism significantly reduces the time needed to complete a task and maximizes CPU utilization.

- Out-of-Order Execution: Many superscalar processors incorporate out-of-order execution, a feature that further enhances performance. In out-of-order execution, instructions are executed as soon as their dependencies are satisfied, rather than strictly following the sequential order of the program. This reduces pipeline stalls and keeps the CPU's execution units busy.

- Flexibility and Compatibility: Superscalar processors are compatible with a wide range of software applications and programming languages. Because the hardware finds parallelism within an ordinary sequential instruction stream, existing programs run without modification, and both legacy single-threaded programs and modern multi-threaded applications can benefit.

- Scalability: Superscalar architecture can be scaled to accommodate different levels of complexity and performance requirements. Chip designers can add more execution units or improve existing ones to create processors tailored to specific needs, from low-power mobile devices to high-performance server CPUs.

- Energy Efficiency: While superscalar processors are known for their performance, they have also made strides in energy efficiency. Some superscalar architectures incorporate power-saving features like dynamic voltage and frequency scaling (DVFS) and clock gating, which help reduce power consumption during periods of low computational demand.

- Support for Advanced Compiler Techniques: Superscalar processors work hand in hand with advanced compiler techniques that can identify and schedule instructions for parallel execution. Compiler optimizations like loop unrolling, software pipelining, and instruction scheduling can further enhance the performance of superscalar processors.

- Handling Complex Branching: Superscalar architectures often include advanced branch prediction mechanisms to minimize the performance impact of conditional branches. Efficient handling of branch instructions is crucial because incorrect branch predictions can lead to pipeline stalls and reduced throughput. By predicting branches accurately, superscalar processors maintain a high instruction throughput even in the presence of branching code.
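The branch prediction described in the last item can be illustrated with a two-bit saturating counter, one classic textbook scheme. The sketch below is a minimal illustration under that assumption; it is not a description of the predictor in any particular processor, and the class name, function name, and branch patterns are hypothetical.

```python
class TwoBitPredictor:
    """One 2-bit saturating counter: states 0-1 predict not taken, 2-3 predict taken."""

    def __init__(self, state=2):
        self.state = state

    def predict(self) -> bool:
        return self.state >= 2

    def update(self, taken: bool) -> None:
        # Move one step toward the observed outcome, saturating at 0 and 3.
        if taken:
            self.state = min(3, self.state + 1)
        else:
            self.state = max(0, self.state - 1)


def accuracy(outcomes, predictor=None):
    """Fraction of branch outcomes that the predictor guesses correctly."""
    if predictor is None:
        predictor = TwoBitPredictor()
    correct = 0
    for taken in outcomes:
        correct += predictor.predict() == taken
        predictor.update(taken)
    return correct / len(outcomes)


# Hypothetical loop branch: taken 9 times, then falls through, repeated 10 times.
loop_branch = ([True] * 9 + [False]) * 10
# Hypothetical branch that alternates between taken and not taken.
alternating = [True, False] * 50

print(f"loop branch: {accuracy(loop_branch):.0%}")   # 90%
print(f"alternating: {accuracy(alternating):.0%}")   # 50%
```

The loop branch is mispredicted only once per loop exit, while the alternating branch defeats this simple counter half the time. Patterns like the second one are part of the reason real designs tend to use more elaborate, history-based predictors.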

Disadvantages of Superscalar Architecture

Some of the key disadvantages of superscalar architecture are:

- Complexity and Cost: Superscalar processors are inherently more complex than scalar or simpler architectures. The inclusion of multiple execution units, sophisticated instruction schedulers, and out-of-order execution mechanisms increases the chip's complexity and manufacturing cost.

- Heat Generation and Power Consumption: The increased complexity and the use of multiple execution units can result in higher heat generation and power consumption. This can be a significant concern for both mobile devices and data centres, where energy efficiency and cooling are critical.

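- Diminishing Returns: Adding more execution units to a superscalar processor does not necessarily lead to a linear improvement in performance. As the number of execution units increases, the complexity of instruction scheduling and resource allocation also grows, while the number of independent instructions available in typical code stays limited, so each additional unit tends to yield a smaller gain.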

- Instruction Dependencies: Despite the ability to execute instructions out of order, superscalar processors still encounter dependencies between instructions that can cause pipeline stalls. Dependencies occur when one instruction relies on the result of a previous instruction, and this dependency must be resolved before the dependent instruction can proceed.

- Programming Complexity: Superscalar architectures rely heavily on compilers and hardware to exploit instruction-level parallelism. Writing software that fully leverages the capabilities of superscalar processors can be complex, and it often requires optimizing compilers to schedule instructions effectively.

- Code Size and Complexity: Superscalar processors may require larger and more complex code due to optimizations and instruction scheduling. This can result in increased memory usage, which may be a concern in embedded systems or environments with limited memory resources.

- Limited Impact on Certain Workloads: Workloads that are inherently serial or have limited opportunities for parallel execution may not see substantial performance improvements with superscalar architectures.

- Branch Mispredictions: Superscalar processors can still suffer from branch mispredictions, which occur when the processor incorrectly predicts the outcome of conditional branches. Mispredictions can lead to pipeline stalls and reduced performance, particularly in code with complex branching patterns.

- Resource Contention: In superscalar architectures, multiple execution units compete for shared resources such as registers and cache. This competition can lead to resource contention, which, if not managed efficiently, can result in performance bottlenecks.

- Complex Debugging: Debugging software running on superscalar processors can be challenging due to the out-of-order execution and complex instruction pipelines. Identifying the cause of performance issues or unexpected behaviour may require advanced debugging tools and techniques.

- Memory Access Latency: Despite the ability to execute multiple instructions simultaneously, superscalar processors still have to wait when accessing memory. Memory access delays can limit overall performance gains, especially in memory-bound applications.

- Limited Scalability: Superscalar processors may have limitations in terms of scalability. Adding more execution units and increasing complexity can reach a point of diminishing returns, and further improvements may require exploring alternative architectures or parallelism techniques.

- Compiler Dependency: Effective utilization of superscalar processors heavily relies on the compiler's ability to identify and schedule parallelizable instructions. Suboptimal compiler optimizations can lead to underutilization of the processor's capabilities.

- Instruction Cache Pressure: A wide superscalar front end must fetch several instructions every cycle, which puts pressure on the instruction cache and on fetch bandwidth. Larger or wider instruction caches may be required to keep the execution units supplied, potentially affecting chip area and power consumption.

- Synchronization Overheads: Coordinating the execution of multiple instructions in out-of-order processors can introduce synchronization overheads. Handling dependencies and ensuring correct program execution can sometimes lead to added complexity and delays.

- Compatibility Challenges: Legacy software not optimized for superscalar architectures may not fully benefit from the advanced features, potentially limiting the advantages of superscalar processors for running older applications.
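The diminishing returns and dependency limits listed above can be seen in a small simulation. The sketch below assumes an idealized machine (unit latency for every instruction, an unlimited scheduling window, no cache misses, and perfect branch prediction), so it gives an upper bound rather than a realistic estimate. The function name and the sample dependency pattern are hypothetical.

```python
def cycles_needed(deps, width):
    """Cycles to run a program on an idealized machine issuing up to `width` per cycle.

    deps[i] lists the earlier instructions whose results instruction i needs.
    """
    finished_at = {}   # instruction index -> cycle in which it executed
    cycle = 0
    while len(finished_at) < len(deps):
        cycle += 1
        # An instruction is ready once all of its inputs finished in an earlier cycle.
        ready = [i for i in range(len(deps))
                 if i not in finished_at
                 and all(finished_at.get(d, cycle) < cycle for d in deps[i])]
        for i in ready[:width]:        # oldest-first selection
            finished_at[i] = cycle
    return cycle


# Hypothetical program: 16 instructions forming 4 independent chains of length 4
# (instruction i depends on instruction i - 4).
deps = [[i - 4] if i >= 4 else [] for i in range(16)]

for width in (1, 2, 4, 8):
    cycles = cycles_needed(deps, width)
    print(f"width {width}: {cycles:2d} cycles, IPC = {len(deps) / cycles:.2f}")
```

For this program, going from width 1 to 2 to 4 halves the cycle count each time (16, 8, 4), but width 8 still needs 4 cycles: the longest dependency chain, not the number of execution units, sets the limit.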

Conclusion

- Increased Instruction Throughput: Simultaneous execution of multiple instructions leads to higher performance.

- Improved Efficiency: Efficient resource utilization and parallelism exploitation enhance computational efficiency.

- Compatibility and Scalability: Versatile architecture compatible with various software and scalable for different needs.

- Energy Efficiency: Some superscalar processors incorporate power-saving features for reduced consumption.

- Support for Advanced Compiler Techniques: Compiler optimizations further enhance performance.

- Resource Management Challenge: Superscalar processors face complexities in managing shared resources efficiently, leading to potential bottlenecks and resource contention.

- Debugging Complexity: Debugging software on superscalar architectures can be intricate due to out-of-order execution and complex pipelines, demanding advanced debugging tools and techniques.

- Memory Access Latency: Despite multiple instruction execution units, memory access latency can still be a limiting factor in performance-critical applications.

- Limited Scalability: Scaling up superscalar processors can reach a point of diminishing returns, necessitating alternative approaches for further performance improvements.

- Legacy Software Challenges: Older, non-optimized software may not fully exploit the advantages of superscalar architectures, limiting their benefits for running legacy applications.

- Synchronization Overheads: Coordinating execution in out-of-order processors introduces synchronization overheads, which can lead to complexity and delays.