Building a Convolutional Neural Network with TensorFlow

Overview

Convolutional Neural Networks (CNNs) have become a powerful tool for challenging image classification and recognition tasks in deep learning. With their capacity to automatically learn hierarchical features from raw pixel data, CNNs have transformed computer vision and pattern recognition and have also been applied to natural language processing. They have changed how we analyze and interact with visual data, whether for object recognition in photographs, disease diagnosis, or satellite imagery analysis. This blog provides a step-by-step rundown of the key ideas and methods involved in building a CNN model using TensorFlow, whether you're a novice or an experienced practitioner.

Convolutional Neural Networks

A convolutional neural network (CNN) is a kind of artificial neural network designed to analyze and process visual input, such as images and videos. It has become the foundation of contemporary deep learning for computer vision, enabling advances in image classification, object detection, and other visual recognition tasks.

For a brief overview of convolutional neural networks, refer to the Deep Learning Hub article on Convolutional Neural Network Tensorflow.

Conventional fully connected networks treat input data as flat vectors, whereas CNNs preserve the spatial relationships between pixels. They excel at recognizing local patterns and build up increasingly complex visual representations through hierarchical learning. This design was inspired by the structure of the human visual cortex, where neurons respond to specific visual stimuli.

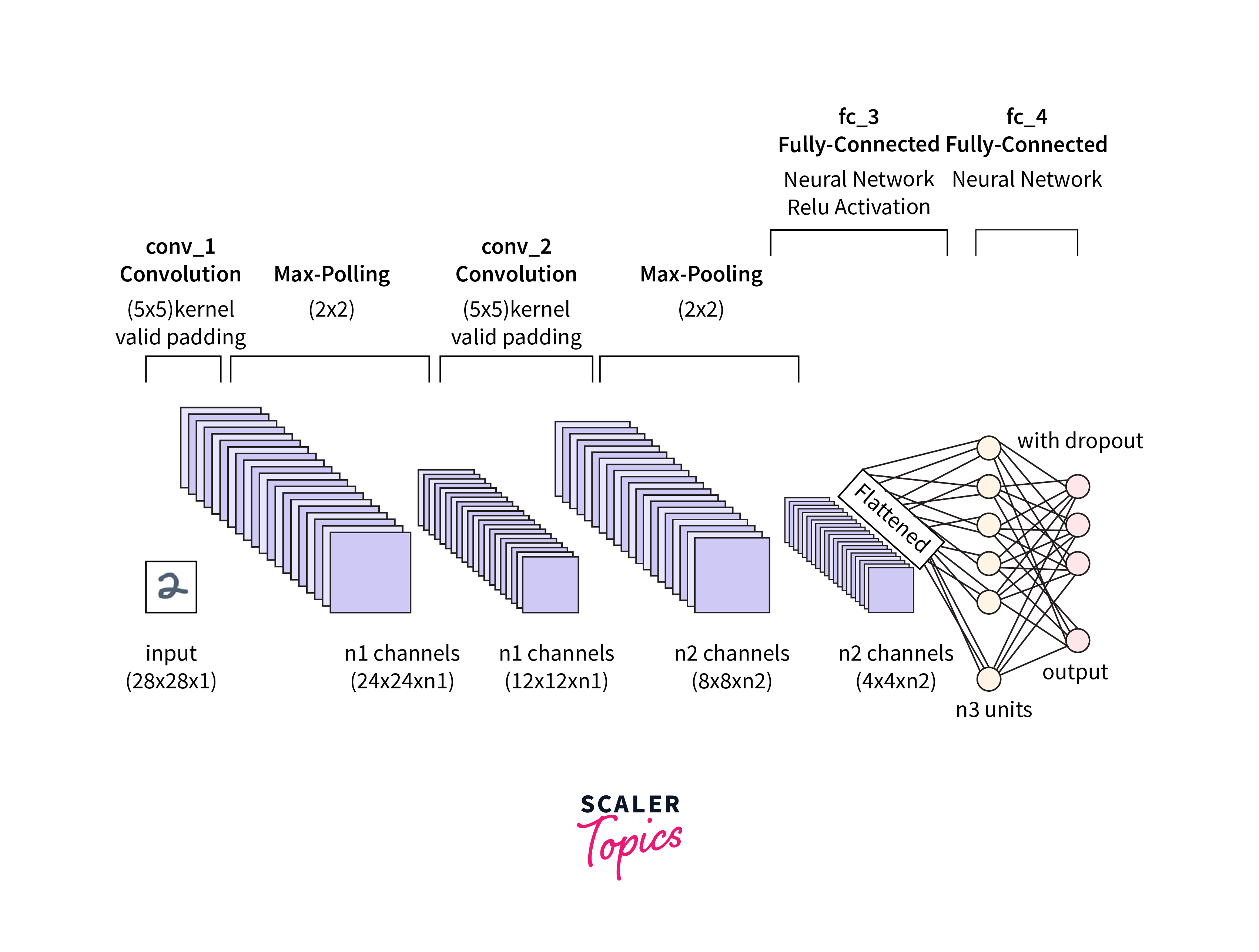

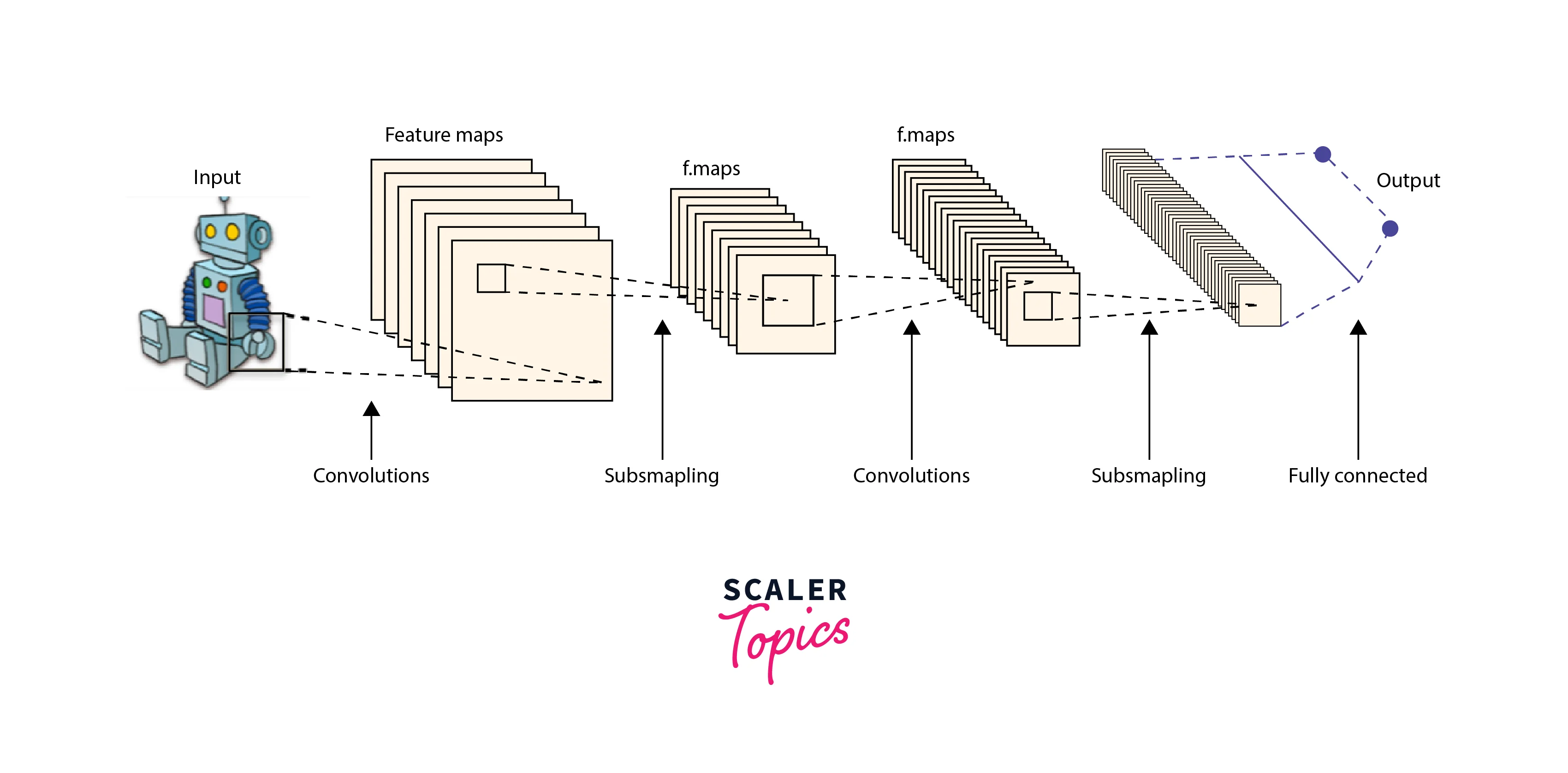

The essential elements of a CNN are:

- Convolutional Layers: These layers transform the input image using a set of learnable filters, also called convolutional kernels. Each filter slides across the image and performs a convolution, extracting local features and producing a feature map. These feature maps highlight important patterns such as edges, corners, or textures.

-

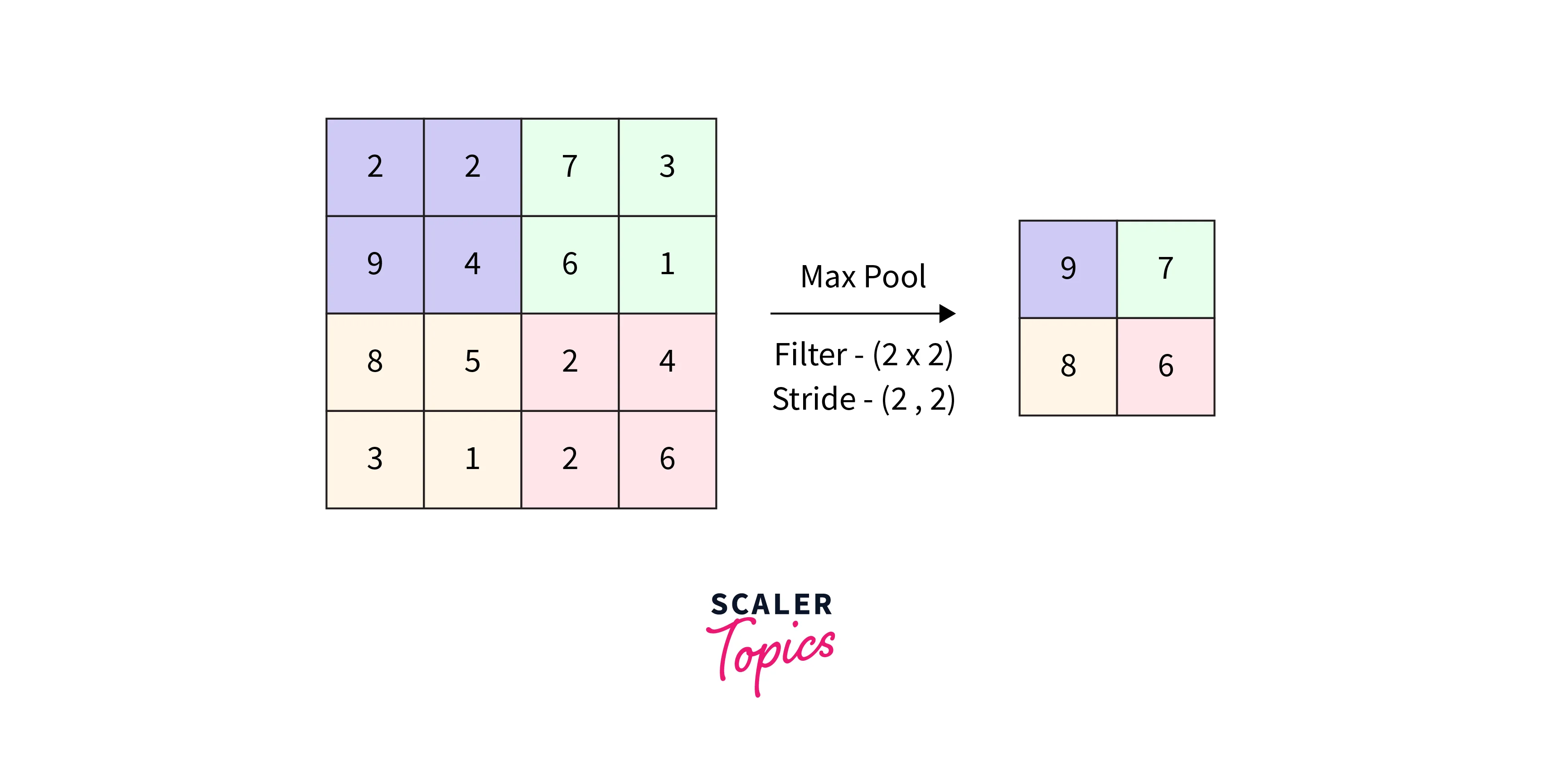

Pooling Layers:

These are frequently used to downsample the feature maps and lessen their spatial dimensionality after convolutional layers. The network's translation invariance is improved by pooling while the most important information is extracted. The most typical pooling procedure, known as max pooling, chooses the highest value possible inside a given area of the feature map.

- Fully Connected Layers: These layers are in charge of making the final classification or prediction in the CNN architecture. They combine high-level features and support decision-making by linking every neuron in the preceding layer to every neuron in the next layer. Typically, a softmax function is applied to the output of the last fully connected layer to produce a probability distribution over the classes.
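To make the three building blocks concrete, here is a minimal NumPy sketch of what a single filter, a max-pooling step, and a softmax compute. The function names, the toy 6x6 image, and the hand-written edge filter are illustrative assumptions; in a real CNN the filter values are learned, and frameworks such as TensorFlow use much faster implementations of the same operations.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Slide a 2-D kernel over a 2-D image (no padding, stride 1).

    Like deep learning frameworks, this does not flip the kernel
    (strictly speaking it is a cross-correlation).
    """
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # Multiply the kernel with the patch under it and sum the result.
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def max_pool2d(feature_map, size=2):
    """Keep the largest value in each non-overlapping size x size window."""
    h = feature_map.shape[0] // size * size
    w = feature_map.shape[1] // size * size
    windows = feature_map[:h, :w].reshape(h // size, size, w // size, size)
    return windows.max(axis=(1, 3))

def softmax(scores):
    """Turn raw class scores into probabilities that sum to 1."""
    exps = np.exp(scores - np.max(scores))  # subtract the max for stability
    return exps / exps.sum()

# Toy 6x6 image with a vertical edge: dark left half, bright right half.
image = np.array([[0, 0, 0, 1, 1, 1]] * 6, dtype=float)
# Hand-written vertical-edge filter (an assumption for illustration).
kernel = np.array([[-1, 0, 1]] * 3, dtype=float)

feature_map = conv2d_valid(image, kernel)    # shape (4, 4), peaks at the edge
pooled = max_pool2d(feature_map)             # shape (2, 2)
probs = softmax(np.array([2.0, 1.0, 0.1]))   # three hypothetical class scores
print(feature_map[0], pooled.shape, probs.round(3))
```

The feature map is largest in the columns where the filter straddles the edge, which is the sense in which a filter "detects" a pattern.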

CNNs are trained with backpropagation, which adjusts the weights of the filters and fully connected layers according to the error signal.

Deep learning frameworks like TensorFlow provide the tools needed to build, train, and deploy CNN models efficiently.

Implementing CNN with TensorFlow

Before diving into Convolutional Neural Networks (CNNs) with TensorFlow, it is important to build a solid foundation and grasp the core ideas behind this technology. CNNs have transformed computer vision by allowing machines to recognize and interpret images with high accuracy.

In this article, we go through the core ideas you should understand before you start building CNNs with TensorFlow. Understanding them will make it easier to follow the inner workings of CNNs and to build computer vision applications.

Step 1. Neural Network Fundamentals

Step 2. Computer Vision Fundamentals

Step 3. Convolutional Neural Networks (CNNs)

Step 4. Architectures and Variants of CNN

Step 5. TensorFlow Fundamentals

Step 6. Creating the Environment

Step 7. Python Libraries Required for Computer Vision

Step 8. Choosing the Best Dataset

Importing TensorFlow

Use the following code to import TensorFlow:
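A minimal sketch, assuming TensorFlow 2.x is already installed in the active environment. The alias tf is the common convention, and printing the version is an optional sanity check rather than a required step:

```python
import tensorflow as tf

# Print the installed version to confirm that the import worked.
print("TensorFlow version:", tf.__version__)
```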

Once TensorFlow is imported, you can use its functions and classes to create and train neural networks, including CNNs. Note that TensorFlow must be installed in your environment before you can import it. Depending on your preferred package manager, you can install it with pip or Conda.

For instance, to install TensorFlow with pip, run the command pip install tensorflow in your terminal.

Download and Prepare the CIFAR10 Dataset

Step 1: Import the necessary libraries.

Step 2: Load the CIFAR-10 dataset.

Step 3: Normalize the pixel values.

Step 4: Convert the labels to categorical (one-hot) format.

These actions will load the CIFAR-10 dataset, normalize it, and then convert the labels to categorical format.
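The four steps can be sketched as follows. The helper names preprocess and load_and_prepare_cifar10 are hypothetical, introduced here so the preprocessing can be reused on any split. The dataset itself comes from tf.keras.datasets.cifar10, which downloads the data the first time load_data() is called.

```python
# Step 1: import the libraries.
import numpy as np
import tensorflow as tf

NUM_CLASSES = 10  # CIFAR-10 has ten object categories

def preprocess(images, labels, num_classes=NUM_CLASSES):
    """Steps 3 and 4: scale pixels to [0, 1] and one-hot encode the labels."""
    images = images.astype("float32") / 255.0
    labels = tf.keras.utils.to_categorical(labels, num_classes)
    return images, labels

def load_and_prepare_cifar10():
    """Step 2 plus preprocessing; downloads CIFAR-10 on first use."""
    (train_images, train_labels), (test_images, test_labels) = (
        tf.keras.datasets.cifar10.load_data()
    )
    return (preprocess(train_images, train_labels),
            preprocess(test_images, test_labels))

# Typical usage:
#   (train_images, train_labels), (test_images, test_labels) = (
#       load_and_prepare_cifar10()
#   )
```

One-hot labels pair with a categorical cross-entropy loss. If you keep the original integer labels instead, skip the to_categorical call and use a sparse categorical cross-entropy loss.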

Verify the Data

Here is an illustration of how to validate the data:

Step 1: Examine the shape of the loaded data.
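A small sketch of such a shape check. The helper describe_split is a hypothetical name, and the zero-filled demo arrays are stand-ins with CIFAR-10's per-image layout so the snippet runs without a download; with the real dataset you would pass the arrays returned by load_data().

```python
import numpy as np

def describe_split(name, images, labels):
    """Print the array shapes for one dataset split and return them."""
    print(f"{name} images: {images.shape}, labels: {labels.shape}")
    return images.shape, labels.shape

# Stand-in arrays (an assumption for illustration only).
demo_images = np.zeros((16, 32, 32, 3), dtype="float32")
demo_labels = np.zeros((16, 10), dtype="float32")
image_shape, label_shape = describe_split("train", demo_images, demo_labels)

# Each image should be 32x32 RGB, with exactly one label row per image.
assert image_shape[1:] == (32, 32, 3)
assert image_shape[0] == label_shape[0]
```

For the full CIFAR-10 training split, the image array has shape (50000, 32, 32, 3), and the label array has shape (50000, 1) before one-hot encoding or (50000, 10) after it.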

This prints the dimensions of the image and label arrays so you can check that they match the expected shapes.

Step 2: Visualize the data.
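One way to draw such a grid with Matplotlib is sketched below. The function plot_samples is a hypothetical helper; the class names are the ten standard CIFAR-10 categories in label order, and the function accepts either integer or one-hot labels.

```python
import numpy as np
import matplotlib.pyplot as plt

CLASS_NAMES = ["airplane", "automobile", "bird", "cat", "deer",
               "dog", "frog", "horse", "ship", "truck"]

def plot_samples(images, labels, rows=5, cols=5):
    """Draw the first rows*cols images in a grid, titled with class names."""
    labels = np.asarray(labels)
    # Accept integer labels of shape (N,) or (N, 1), or one-hot rows (N, 10).
    if labels.ndim == 2 and labels.shape[1] > 1:
        class_ids = labels.argmax(axis=1)
    else:
        class_ids = labels.reshape(-1)
    fig, axes = plt.subplots(rows, cols, figsize=(2 * cols, 2 * rows),
                             squeeze=False)
    for ax, image, class_id in zip(axes.ravel(), images, class_ids):
        ax.imshow(image)
        ax.set_title(CLASS_NAMES[int(class_id)])
        ax.axis("off")
    fig.tight_layout()
    return fig

# Typical usage, once train_images and train_labels are loaded:
#   plot_samples(train_images, train_labels)
#   plt.show()
```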

This code displays a grid of sample images with their labels so you can inspect the data visually.

Step 3: Verify the label encoding.
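A short sketch of this check. The helper unique_class_ids is a hypothetical name; because the labels may already be one-hot encoded at this point, it converts one-hot rows back to class ids before looking for unique values. The demo array is a stand-in for the real training labels.

```python
import numpy as np

def unique_class_ids(labels):
    """Return the sorted class ids present in integer or one-hot labels."""
    labels = np.asarray(labels)
    if labels.ndim == 2 and labels.shape[1] > 1:
        # One-hot rows: the position of the 1 is the class id.
        labels = labels.argmax(axis=1)
    return np.unique(labels)

# Stand-in one-hot labels for classes 0, 3, 3, and 9.
demo_labels = np.eye(10)[[0, 3, 3, 9]]
print(unique_class_ids(demo_labels))  # prints [0 3 9]
```

On the full CIFAR-10 training set, all ten ids from 0 to 9 should appear.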

This prints the unique labels present in the training set so you can confirm that they are encoded correctly.

By carrying out these checks, you can confirm that the CIFAR-10 dataset has been loaded and formatted correctly and learn more about its structure.

Create the Convolutional Base

You can use the following code to create the convolutional base for a Convolutional Neural Network (CNN) using TensorFlow:
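A sketch of one possible convolutional base. The filter counts (32, 64, 64), the 3x3 kernels, and the 2x2 pooling windows are assumptions chosen as a common small configuration for CIFAR-10; only the (32, 32, 3) input shape and the name conv_base come from this article. The input shape is declared with a tf.keras.Input entry, which works across recent TensorFlow releases.

```python
import tensorflow as tf

layers = tf.keras.layers

conv_base = tf.keras.Sequential(
    [
        tf.keras.Input(shape=(32, 32, 3)),             # 32x32 RGB images
        layers.Conv2D(32, (3, 3), activation="relu"),  # 32 filters of size 3x3
        layers.MaxPooling2D((2, 2)),                   # halve height and width
        layers.Conv2D(64, (3, 3), activation="relu"),
        layers.MaxPooling2D((2, 2)),
        layers.Conv2D(64, (3, 3), activation="relu"),
    ],
    name="conv_base",
)

# Show layer types, output shapes, and parameter counts.
conv_base.summary()
```

With these settings the base maps each image to a 4x4 grid of 64 feature channels.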

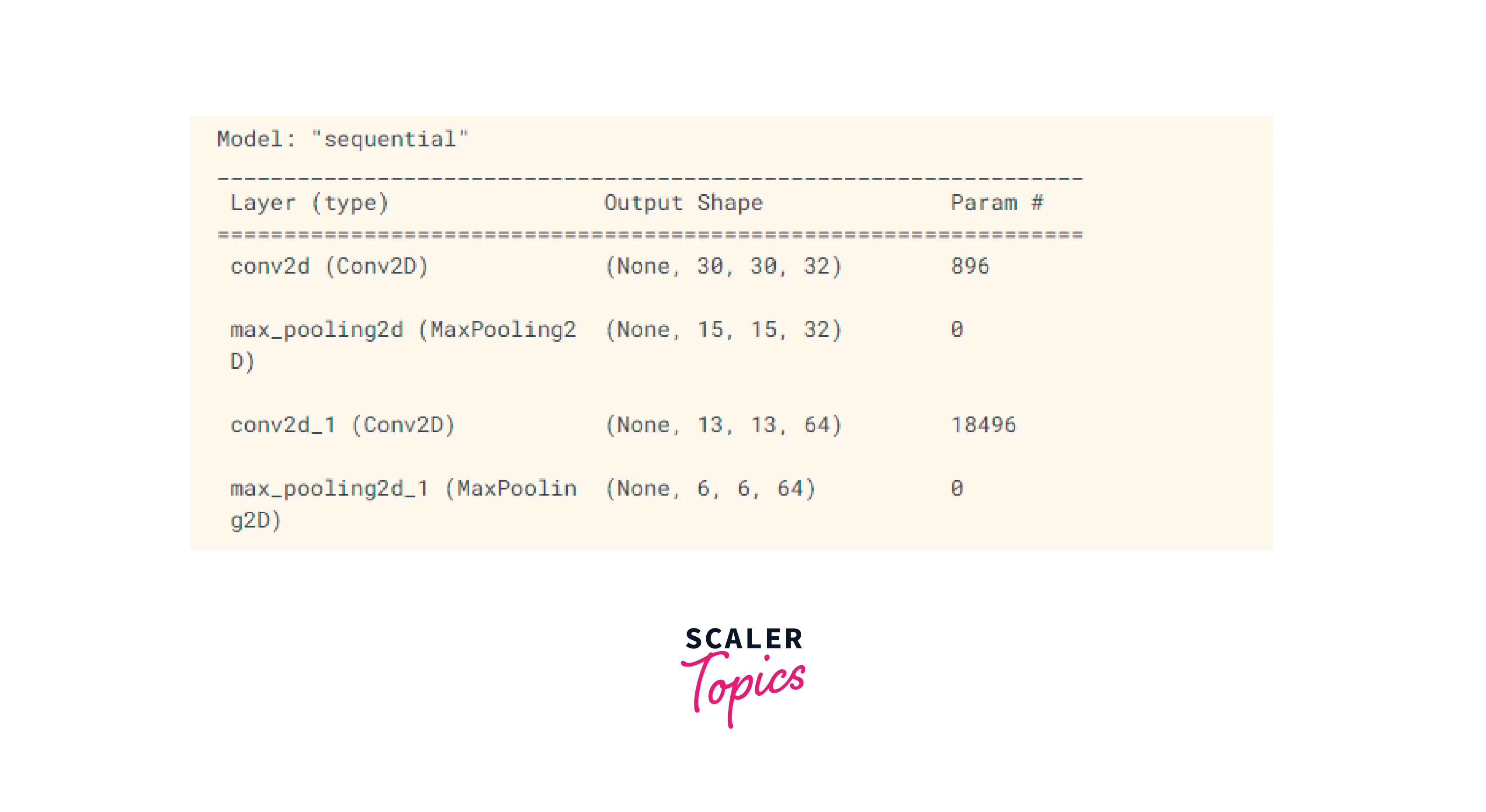

Using the Conv2D layer, we stack several convolutional layers, specifying the number of filters, the filter size, and the activation function for each. The input shape is set to (32, 32, 3), which corresponds to 32x32-pixel images with three RGB color channels.

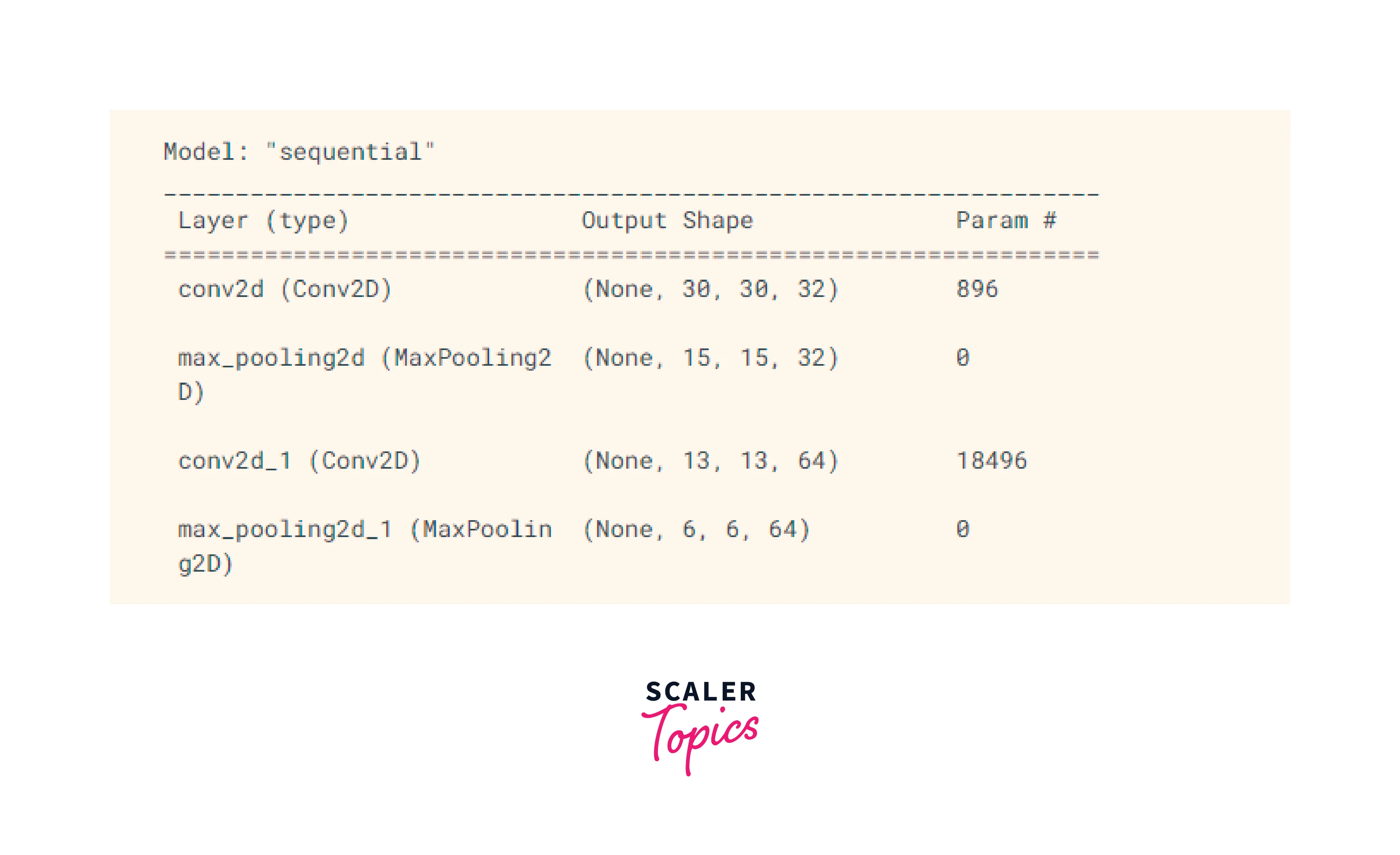

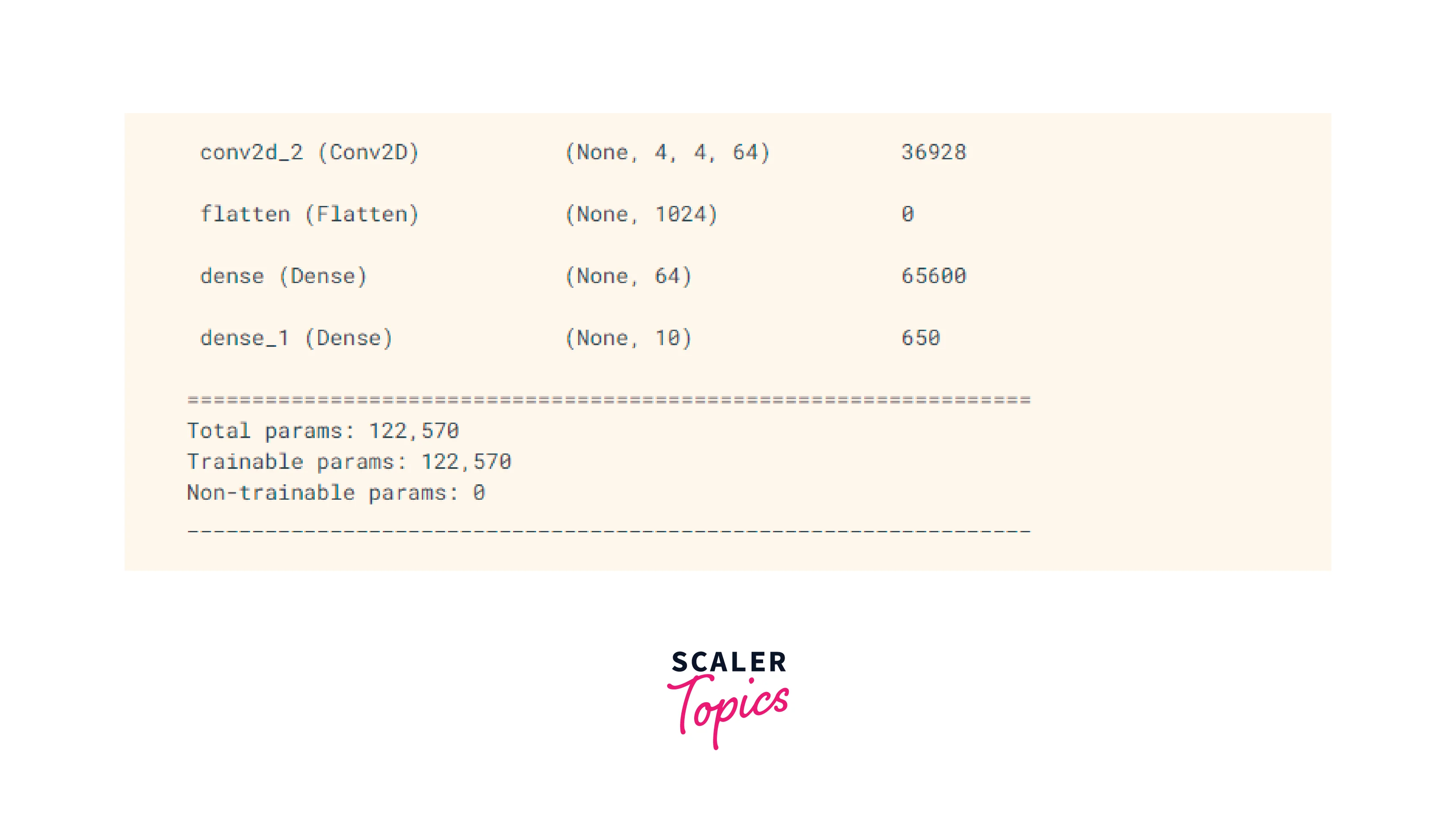

The conv_base.summary() command outputs the convolutional base summary, including the types of layers, output shapes, and the number of trainable parameters.

This code creates the convolutional base for your CNN model in TensorFlow. Fully connected layers and a final output layer for classification or regression tasks can then be added on top of this base.

Add Dense Layers on Top

To add dense layers on top of the convolutional base, you can extend the code in TensorFlow as follows:
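A sketch of the complete model, under the same assumptions about the convolutional layers as before (32, 64, and 64 filters with 3x3 kernels). The function name build_cnn is hypothetical; the Flatten layer, the 64-unit ReLU layer, and the 10-unit softmax layer follow the description in this section.

```python
import tensorflow as tf

layers = tf.keras.layers

def build_cnn(num_classes=10):
    """Convolutional base followed by a small dense classifier."""
    return tf.keras.Sequential([
        tf.keras.Input(shape=(32, 32, 3)),
        # Convolutional base (filter counts are illustrative assumptions).
        layers.Conv2D(32, (3, 3), activation="relu"),
        layers.MaxPooling2D((2, 2)),
        layers.Conv2D(64, (3, 3), activation="relu"),
        layers.MaxPooling2D((2, 2)),
        layers.Conv2D(64, (3, 3), activation="relu"),
        # Dense layers on top.
        layers.Flatten(),                                 # 4x4x64 -> 1024 values
        layers.Dense(64, activation="relu"),
        layers.Dense(num_classes, activation="softmax"),  # class probabilities
    ])

model = build_cnn()
model.summary()
```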

After the convolutional layers, a Flatten layer converts the feature maps into a vector, and one or more Dense layers can then be added. The first dense layer has 64 units and uses the ReLU activation function.

The last dense layer has 10 units and uses the softmax activation function. This suits multi-class classification, where each unit represents a different class and the output is a probability distribution across all classes.

Compile and Train the Model

Use the following code as an example to compile and train the model with the convolutional base in TensorFlow:
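A sketch of the whole pipeline, split into small functions. The function names, the filter counts, the epoch count, and the batch size are assumptions for illustration. This version keeps CIFAR-10's integer labels so that they match the SparseCategoricalCrossentropy loss; with one-hot labels you would use CategoricalCrossentropy instead.

```python
import tensorflow as tf

layers = tf.keras.layers

def load_cifar10():
    """Download CIFAR-10 (first call only) and scale pixels to [0, 1]."""
    (x_train, y_train), (x_test, y_test) = tf.keras.datasets.cifar10.load_data()
    x_train = x_train.astype("float32") / 255.0
    x_test = x_test.astype("float32") / 255.0
    return (x_train, y_train), (x_test, y_test)

def build_cnn(num_classes=10):
    """Convolutional base plus dense classifier (layer sizes are assumptions)."""
    return tf.keras.Sequential([
        tf.keras.Input(shape=(32, 32, 3)),
        layers.Conv2D(32, (3, 3), activation="relu"),
        layers.MaxPooling2D((2, 2)),
        layers.Conv2D(64, (3, 3), activation="relu"),
        layers.MaxPooling2D((2, 2)),
        layers.Conv2D(64, (3, 3), activation="relu"),
        layers.Flatten(),
        layers.Dense(64, activation="relu"),
        layers.Dense(num_classes, activation="softmax"),
    ])

def compile_and_train(model, train_data, val_data, epochs=10, batch_size=64):
    """Compile with Adam and sparse cross-entropy, then fit the model."""
    model.compile(
        optimizer="adam",
        # The model ends in softmax, so the loss receives probabilities.
        loss=tf.keras.losses.SparseCategoricalCrossentropy(),
        metrics=["accuracy"],
    )
    x_train, y_train = train_data
    return model.fit(x_train, y_train, epochs=epochs, batch_size=batch_size,
                     validation_data=val_data)

# Typical usage:
#   train_data, test_data = load_cifar10()
#   history = compile_and_train(build_cnn(), train_data, test_data)
```

The returned history object records the loss and accuracy per epoch, which is useful for monitoring training progress.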

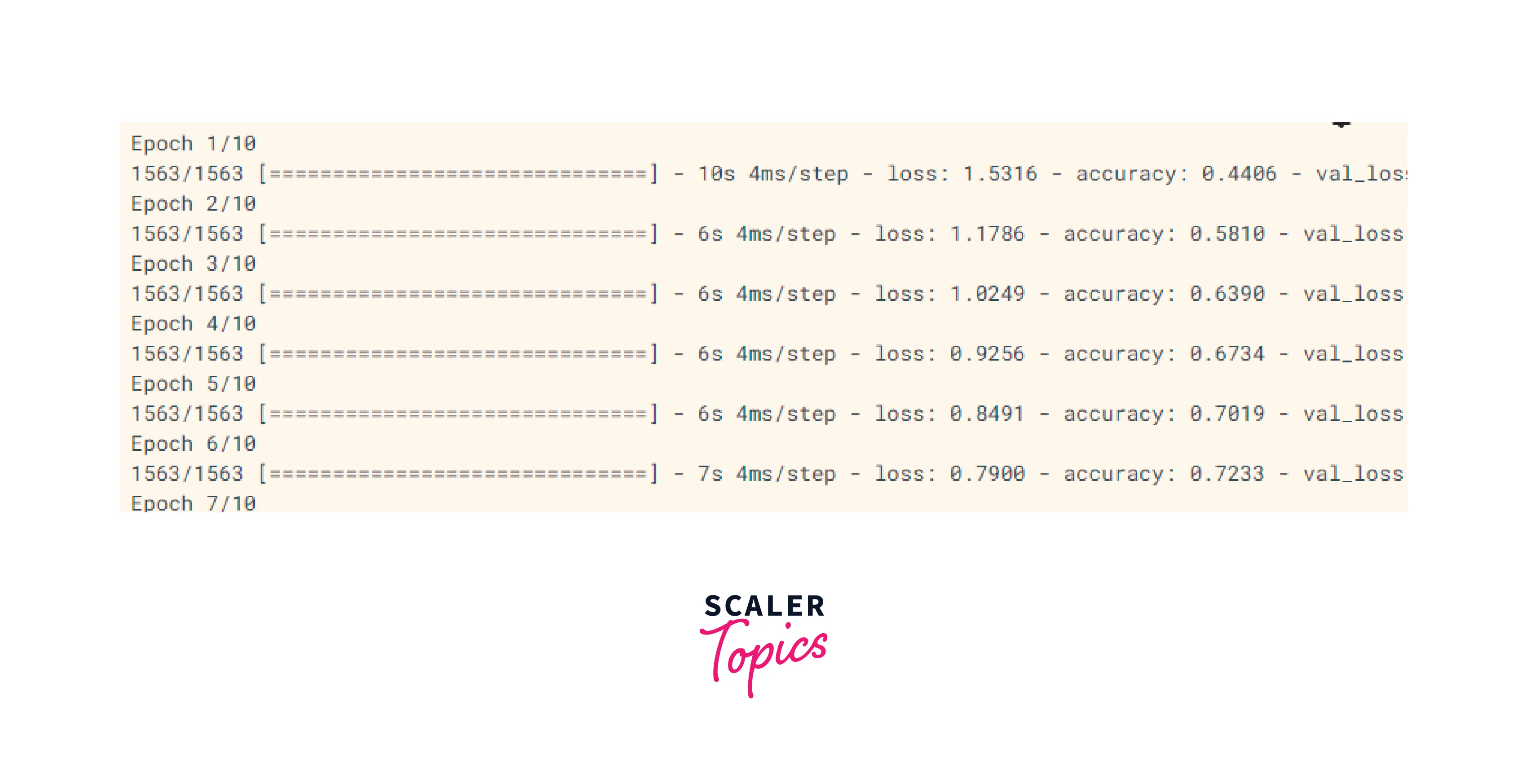

As before, this code snippet begins by loading and preparing the CIFAR-10 dataset. We then build the model from convolutional layers, pooling layers, and dense layers.

Model Architecture

- Convolutional layers are utilised for feature extraction, activation functions for nonlinearity, pooling layers for downsampling, and fully connected layers for prediction.

- The output layer, with an appropriate activation such as softmax, produces the classification. The depth and width of the architecture affect performance, so there is a trade-off between model complexity and available resources.

- Transfer learning and pre-trained models can help boost efficiency.

After the model has been built, we configure it for training with the compile function. Here we specify the optimizer ('Adam'), the loss function (SparseCategoricalCrossentropy for multi-class classification with integer labels; use CategoricalCrossentropy if the labels are one-hot encoded), and the metric to track during training (accuracy).

Model Training

- Training involves selecting the loss function, optimizer, learning rate, and batch size, as well as using validation sets and data augmentation.

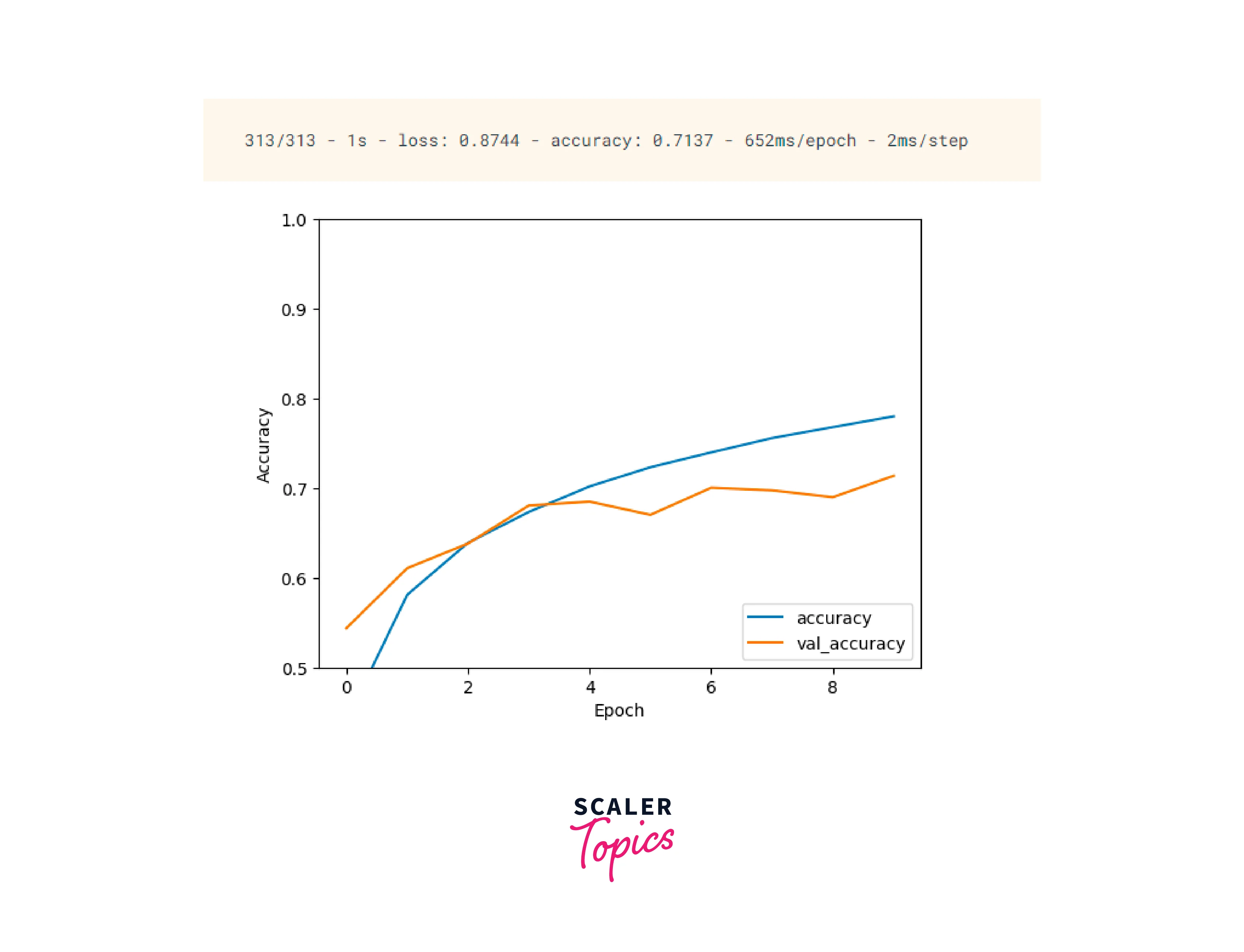

- Monitoring training progress assists in identifying problems and fine-tuning the model for peak performance.

- Training CNNs effectively makes it possible to build computer vision systems with high accuracy and good generalization.

Evaluate the Model

You can use the 'evaluate' method of a Keras model in TensorFlow to measure how well the trained model performs on the test dataset. Here is an illustration of how to evaluate the model:
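A minimal sketch of the evaluation step. The helper evaluate_model and its weights_path parameter are hypothetical; the sketch assumes the model was compiled with a single accuracy metric, so that evaluate() returns the loss followed by the accuracy.

```python
import tensorflow as tf

def evaluate_model(model, test_images, test_labels, weights_path=None):
    """Return (loss, accuracy) of a compiled Keras model on held-out data."""
    if weights_path is not None:
        # Restore weights saved earlier with model.save_weights(weights_path).
        model.load_weights(weights_path)
    loss, accuracy = model.evaluate(test_images, test_labels, verbose=2)
    return loss, accuracy

# Typical usage with CIFAR-10 and an already built and compiled model:
#   (_, _), (test_images, test_labels) = tf.keras.datasets.cifar10.load_data()
#   test_images = test_images.astype("float32") / 255.0
#   loss, accuracy = evaluate_model(model, test_images, test_labels)
#   print(f"Test accuracy: {accuracy:.3f}")
```

The test images must be preprocessed exactly as the training images were, and the labels must use the same encoding as during training.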

This code assumes that the model has already been built, trained, and saved. We load the CIFAR-10 test dataset and normalize the test images.

Model Evaluation

As we did during training, we build and compile the model using the same architecture and compile parameters.

If you previously saved the trained weights with the save_weights method, you can restore them with the load_weights method.

Transfer Learning and Fine-Tuning in CNN with TensorFlow

Transfer learning and fine-tuning are powerful techniques for taking advantage of previously trained Convolutional Neural Networks (CNNs) and applying them to new, related tasks. They let us reuse the knowledge gained by models trained on huge datasets to solve our own problem, even with small amounts of data.

- Transfer Learning: Transfer learning means starting from a model that has already been trained and adapting or extending it for a new task. The goal is to apply the pre-trained model's learned representations to our problem domain. This lets us benefit from the pre-trained model's ability to generalize and reduces the need for extensive training from scratch.

Transfer learning procedures:

a. Pick a Pre-trained Model:

Pick a CNN model that has already been trained on a sizable dataset, such as ImageNet. VGG, ResNet, Inception, and MobileNet are common options.

b. Freeze the Convolutional Base:

The weights of the pre-trained layers should be frozen in the convolutional base to stop them from changing during training. Only the newly added layers will be trained after this stage, protecting the learned representations.

c. Add Custom Layers:

On top of the frozen convolutional base, add new layers. These may be fully connected layers, dropout layers, or any other design that suits the task.

d. Model Training:

Train the new model on your dataset. The pre-trained layers stay frozen while the weights of the new layers are updated.

- Fine-tuning: Fine-tuning goes one step further by unfreezing some of the pre-trained layers and training them alongside the newly added layers. This allows the learned representations to be adapted to the task at hand. Fine-tuning is especially helpful when the new dataset is similar to the dataset the pre-trained model was originally trained on.

Fine-tuning steps:

a. Pick a Pre-trained Model:

Pick a pre-trained CNN model that was trained on a task and domain similar to yours.

b. Unfreeze Layers:

Unfreeze a few of the pre-trained layers, usually the later layers of the network. Because these layers are updated during training, the model can adapt to the new task.

c. Add Custom Layers:

Add new layers on top of the pre-trained ones. These layers are trained together with the unfrozen layers.

d. Train the Model:

Using your dataset, train the complete model, including both the unfrozen pre-trained layers and the newly added layers, typically with a low learning rate so that the pre-trained weights change gradually.

By using transfer learning and fine-tuning, you can reuse the knowledge and representations that pre-trained models have gained and apply them to your own tasks. This approach is especially helpful when dealing with similar problems within the same domain or when working with limited data. TensorFlow offers tools and pre-trained models that make transfer learning and fine-tuning in CNNs easier to apply.
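Both procedures can be sketched with a pre-trained model from tf.keras.applications. MobileNetV2, the 96x96 input size, the dropout rate, the number of unfrozen layers, and the learning rates are all assumptions for illustration, and the three function names are hypothetical. With weights="imagenet" the pre-trained weights are downloaded on first use.

```python
import tensorflow as tf

def build_transfer_model(num_classes, input_shape=(96, 96, 3), weights="imagenet"):
    """Frozen MobileNetV2 base plus a small trainable classification head."""
    base = tf.keras.applications.MobileNetV2(
        input_shape=input_shape, include_top=False, weights=weights)
    base.trainable = False  # freeze the pre-trained convolutional base

    inputs = tf.keras.Input(shape=input_shape)
    # training=False keeps the batch-normalization statistics fixed.
    x = base(inputs, training=False)
    x = tf.keras.layers.GlobalAveragePooling2D()(x)
    x = tf.keras.layers.Dropout(0.2)(x)
    outputs = tf.keras.layers.Dense(num_classes, activation="softmax")(x)
    return tf.keras.Model(inputs, outputs), base

def unfreeze_top_layers(base, num_layers):
    """Fine-tuning: make only the last num_layers of the base trainable."""
    base.trainable = True
    for layer in base.layers[:-num_layers]:
        layer.trainable = False

def compile_model(model, learning_rate):
    """Compile (or recompile after changing which layers are trainable)."""
    model.compile(
        optimizer=tf.keras.optimizers.Adam(learning_rate=learning_rate),
        loss="sparse_categorical_crossentropy",
        metrics=["accuracy"],
    )

# Typical usage:
#   model, base = build_transfer_model(num_classes=10)
#   compile_model(model, 1e-3)   # transfer learning: train only the new head
#   model.fit(...)
#   unfreeze_top_layers(base, 20)
#   compile_model(model, 1e-5)   # fine-tuning: much lower learning rate
#   model.fit(...)
```

MobileNetV2 expects pixel values scaled to the range [-1, 1], which tf.keras.applications.mobilenet_v2.preprocess_input provides. The model must be recompiled after changing which layers are trainable for the change to take effect.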

Transfer Learning with TensorFlow Hub

TensorFlow Hub is a library of reusable machine learning models, and using it for transfer learning is a convenient way to work with pre-trained models. It offers a broad variety of pre-trained models, trained on different datasets and with different architectures, which makes it simpler to find one that suits your application.

An overview of using transfer learning with TensorFlow Hub is given below:

- Import the required libraries.

- Load the pre-trained model from TensorFlow Hub.

- Build the model and compile it.

- Train the model.

Testing

Testing is a crucial step for assessing a trained model's performance and measuring its accuracy on unseen data. In TensorFlow, a Keras model's evaluate() and predict() methods can be used for this. Here is an illustration of how to do testing:

- Load the test dataset.

- Run the evaluation with evaluate().

- Generate predictions with predict().
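The steps above can be sketched as follows. The helper score_and_predict is a hypothetical name, and the sketch assumes a classifier compiled with a single accuracy metric and integer class labels.

```python
import numpy as np
import tensorflow as tf

def score_and_predict(model, test_images, test_labels):
    """Score a compiled classifier and return its predicted class ids."""
    # evaluate() returns the loss followed by the compiled metrics.
    loss, accuracy = model.evaluate(test_images, test_labels, verbose=0)
    print(f"Test loss: {loss:.4f}, test accuracy: {accuracy:.4f}")

    # predict() returns one row of class probabilities per image;
    # argmax picks the most likely class for each row.
    probabilities = model.predict(test_images, verbose=0)
    predicted_ids = np.argmax(probabilities, axis=1)
    return accuracy, predicted_ids

# Typical usage with CIFAR-10 and a trained model:
#   (_, _), (test_images, test_labels) = tf.keras.datasets.cifar10.load_data()
#   test_images = test_images.astype("float32") / 255.0
#   accuracy, predicted_ids = score_and_predict(model, test_images, test_labels)
```

evaluate() summarizes performance in a few numbers, while predict() gives per-image outputs that you can inspect, for example to find which classes the model confuses.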

Conclusion

- Convolutional Neural Networks (CNNs) are effective tools for identifying and classifying images.

- The tools and features required for creating CNN models are provided by TensorFlow, a well-liked deep learning framework.

- To learn high-level representations and generate predictions, dense layers are stacked on top of the convolutional base.

- With TensorFlow Hub, transfer learning, and fine-tuning, pre-trained models can be used to boost performance and efficiency.

- Researchers and practitioners may confidently tackle real-world image classification challenges by creating CNN models with TensorFlow.