Custom Layer and Loss Functions

Overview

Deep learning has revolutionized machine learning by enabling complex models to learn patterns and features directly from raw data. The Keras library, with its intuitive interface and powerful abstractions, has become very popular with researchers and practitioners. One of its key strengths is the flexibility to define custom layers and loss functions. In this article, we'll dive into custom layers and custom Keras loss functions: what they are, how to create them, and why they matter when building sophisticated neural network architectures.

Introduction

Neural networks are constructed from layers, each responsible for a specific transformation of the input data. Keras provides many predefined layers for various tasks, but in some scenarios you might need to design your own layers to address specific requirements. Similarly, the choice of loss function heavily affects how a neural network learns during training. Custom layers and custom loss functions can be tailored to capture domain-specific nuances and improve model performance. This article provides a guide to creating both in Keras.

What is a Custom Layer?

A custom layer in Keras refers to a layer that is created by the user to perform a specific operation on input data within a neural network. While Keras provides a wide range of built-in layers for various tasks like convolution, pooling, and dense transformations, there are scenarios where these standard layers may not fully meet the requirements of a particular problem. This is where custom layers come into play.

A custom layer is designed to cater to unique or specialized functionalities that are not directly covered by the standard Keras layers. This could involve intricate computations, complex transformations, or any other operations specific to the problem domain. By creating custom layers, you gain the ability to incorporate domain-specific knowledge and expertise into the architecture of your neural network, enabling you to design models that are more attuned to the intricacies of your data and problem. To see how this works in practice, let's look at a case study.


Standard CNN architectures, like VGG16 or ResNet, are designed for image classification. They involve downsampling operations (such as max pooling) that significantly reduce the spatial dimensions of the feature maps. While this downsampling aids classification, it poses challenges for tasks like semantic segmentation, where fine-grained spatial information is essential.

To address the challenge of spatial detail preservation in semantic segmentation, a custom upsampling layer can be designed and integrated into the neural network architecture. One approach is to use bilinear upsampling, which scales up the feature maps while preserving smooth transitions between neighboring pixels. In order to implement the bilinear upsampling layer, we use the following steps:

- Design a custom layer that performs bilinear upsampling on the feature maps obtained from the encoder part of the network.

- Integrate this custom layer in the decoder part of the network, where upsampling is required to match the spatial dimensions with the input image.

By implementing the layer this way, we can preserve finer details in the feature maps, which can improve segmentation accuracy and maintain the spatial consistency of the image. More generally, by developing custom layers tailored to the problem at hand, machine learning practitioners can extend the capabilities of their models and achieve better results.
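A minimal sketch of such an upsampling layer, assuming channels-last feature maps of shape (batch, height, width, channels) and an integer upsampling factor. The class name `BilinearUpsampling` and its `scale` parameter are hypothetical; the layer simply wraps `tf.image.resize` with `method="bilinear"`. Keras also ships `tf.keras.layers.UpSampling2D(interpolation="bilinear")`, so a custom layer mainly pays off when you need extra logic around the resize.

```python
import tensorflow as tf


class BilinearUpsampling(tf.keras.layers.Layer):
    """Hypothetical custom layer: bilinearly upsamples (batch, H, W, C) feature maps."""

    def __init__(self, scale=2, **kwargs):
        super().__init__(**kwargs)
        self.scale = scale  # integer upsampling factor (assumption)

    def call(self, inputs):
        # New spatial size = current (height, width) multiplied by the scale factor.
        new_size = tf.shape(inputs)[1:3] * self.scale
        return tf.image.resize(inputs, new_size, method="bilinear")

    def get_config(self):
        # Lets the layer be saved and re-created with the same scale factor.
        config = super().get_config()
        config.update({"scale": self.scale})
        return config


# Example: an 8x8 decoder feature map is upsampled to 16x16.
features = tf.random.normal([1, 8, 8, 3])
upsampled = BilinearUpsampling(scale=2)(features)
print(upsampled.shape)  # (1, 16, 16, 3)
```

In a decoder, one such layer would typically follow each stage whose output must be brought back toward the input resolution.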

Understanding Custom Layers

Custom layers are a powerful feature in deep learning frameworks like Keras that enable you to define and implement your own neural network layer functionalities beyond the pre-defined options. These layers allow you to tailor your neural network architectures to your specific tasks, incorporate domain knowledge, and experiment with novel ideas. In this section, we'll delve deeper into the concepts and steps involved in understanding and creating custom layers.

At its core, a neural network layer is a mathematical function that transforms input data into a desired output format. Whether it's a convolutional layer extracting features from images or a recurrent layer processing sequences, each layer performs a specific computation. Custom layers in Keras follow a similar principle but with the added advantage of being fully customizable.

How to Create Custom Layers in TensorFlow?

To create a custom layer, you typically subclass the tf.keras.layers.Layer class and implement the necessary methods like __init__, build, and call. These methods define the layer's properties, its trainable weights, and the forward-pass logic, respectively. Once created, the custom layer can be used just like any built-in Keras layer when constructing your neural network architecture.

The __init__ method initializes the layer. It accepts the layer's parameters and stores them as attributes that the other methods can use. The base Layer class is initialized by calling super().__init__(), and a parameter such as units (the number of neurons) is stored as an instance attribute.

The build method creates the layer's state. In a dense layer, the state consists of two variables, w and b, for the weights and biases. A dense layer is not a single neuron but many neurons, so build creates a whole weight matrix and bias vector and initializes them (for example, random weights and zero biases). TensorFlow provides many built-in initializers for this.

The call method performs the computation. In the case of a dense layer, it multiplies the inputs by the weights, adds the biases, and returns the output.
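The three methods described above can be sketched as a minimal dense layer with weights `w` and biases `b`. The class name `SimpleDense` and the initializer choices are assumptions for illustration; this is not Keras's own `Dense` implementation (which, among other things, also supports an activation).

```python
import tensorflow as tf


class SimpleDense(tf.keras.layers.Layer):
    """Hypothetical dense layer illustrating __init__, build and call."""

    def __init__(self, units=32, **kwargs):
        super().__init__(**kwargs)  # initialize the base Layer class
        self.units = units          # number of neurons (output features)

    def build(self, input_shape):
        # State: one weight per (input feature, neuron) pair and one bias per neuron.
        self.w = self.add_weight(
            shape=(input_shape[-1], self.units),
            initializer="random_normal",
            trainable=True,
            name="w",
        )
        self.b = self.add_weight(
            shape=(self.units,), initializer="zeros", trainable=True, name="b"
        )

    def call(self, inputs):
        # Computation: multiply the inputs by the weights and add the biases.
        return tf.matmul(inputs, self.w) + self.b


layer = SimpleDense(units=4)
outputs = layer(tf.ones((2, 3)))     # the first call triggers build()
print(outputs.shape)                 # (2, 4)
print(len(layer.trainable_weights))  # 2 (w and b)
```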

Create Custom Layer Functions

It is advisable to use the high-level tf.keras API for building neural networks, although most TensorFlow APIs can also be used directly with eager execution. Next, we will create a new custom layer called MyDenseLayer.

Imports

We'll start by importing the required libraries to create the custom layer.

The TensorFlow library provides a range of tools and functions for building and training neural networks. With it imported, we can create our own dense layer, named MyDenseLayer.
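The original code for this step is not reproduced in the text; the following is a minimal reconstruction consistent with the walkthrough that follows the output. The exact variable name printed depends on the TensorFlow/Keras version: older `tf.keras` prints names such as `my_dense_layer/kernel:0`, while Keras 3 may report just `kernel`.

```python
import tensorflow as tf


class MyDenseLayer(tf.keras.layers.Layer):
    def __init__(self, num_outputs):
        super().__init__()
        self.num_outputs = num_outputs  # number of output units

    def build(self, input_shape):
        # The kernel shape depends on the last dimension of the input.
        self.kernel = self.add_weight(
            name="kernel",
            shape=[int(input_shape[-1]), self.num_outputs],
        )

    def call(self, inputs):
        # Forward pass: matrix multiplication of the input and the kernel.
        return tf.matmul(inputs, self.kernel)


layer = MyDenseLayer(10)
_ = layer(tf.zeros([10, 5]))  # calling the layer builds it (creates the kernel)
print([var.name for var in layer.trainable_variables])
```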

Output:

['my_dense_layer/kernel:0']

- The 'MyDenseLayer' class is defined, inheriting from tf.keras.layers.Layer.

- In the constructor (__init__), the num_outputs argument specifies the number of output units for the dense layer.

- The build method is overridden to define the layer's weights. Inside this method, the layer's kernel (weights) is created using add_weight.

- The call method is overridden to perform the forward pass of the layer. It computes the matrix multiplication of the input and the kernel (weights).

- An instance of the MyDenseLayer class is created with 10 output units.

- The layer is called with a dummy input tensor of shape [10, 5]. This triggers the build process, where the weights are created based on the input shape.

- The names of the trainable variables in the layer are printed using a list comprehension and the trainable_variables attribute.

Custom layers are not limited to simple operations like the one shown above. You can create complex custom architectures by stacking multiple custom layers together, just like standard Keras layers. This enables the design of unique neural network structures tailored to your specific problem domain.

Custom Layer Architectures

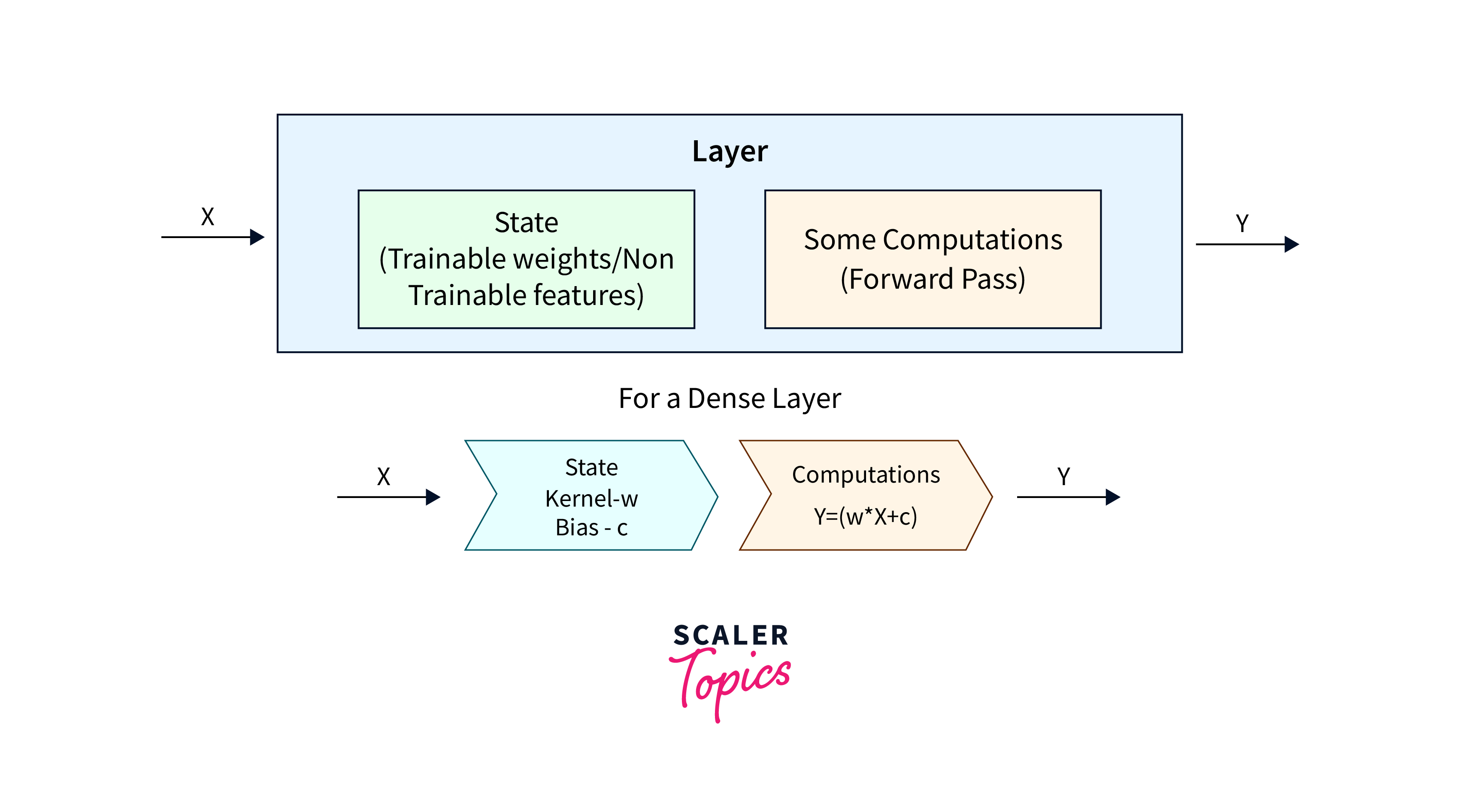

A layer is a class that receives some inputs, performs a computation on them, and produces the output required by the rest of the neural network. A layer typically has two properties:

- State: The state consists of the layer's variables, mostly the trainable weights that are updated during model.fit(). In a dense layer, the state is the weights and biases, but a custom layer can hold whatever state its author needs, including other pre-built layers.

For example, a custom layer can contain a convolutional layer and a batch normalization layer. Its state then includes the parameters of those sublayers rather than just a single weight matrix and bias.

- Computation: The computation transforms a batch of input data into a batch of output data, and it happens inside the layer's call method. For example, a dense layer performs the following computation:

$y = xW + b$, where $y$ is the output, $x$ is the input, and $W$ and $b$ are the trainable weights and biases.
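To illustrate a custom layer whose state comes from other built-in layers, here is a minimal sketch that wraps a convolutional layer and a batch normalization layer. The name `ConvBNBlock`, the ReLU activation, and the default filter settings are assumptions for illustration.

```python
import tensorflow as tf


class ConvBNBlock(tf.keras.layers.Layer):
    """Hypothetical custom layer whose state is made of other built-in layers."""

    def __init__(self, filters=16, kernel_size=3, **kwargs):
        super().__init__(**kwargs)
        # Sublayers assigned as attributes are tracked, so their weights
        # become part of this layer's state.
        self.conv = tf.keras.layers.Conv2D(filters, kernel_size, padding="same")
        self.bn = tf.keras.layers.BatchNormalization()

    def call(self, inputs, training=None):
        x = self.conv(inputs)
        x = self.bn(x, training=training)  # batch stats in training, moving stats otherwise
        return tf.nn.relu(x)


block = ConvBNBlock(filters=8)
out = block(tf.random.normal([2, 32, 32, 3]), training=True)
print(out.shape)                         # (2, 32, 32, 8)
print(len(block.trainable_weights))      # 4: conv kernel, conv bias, BN gamma, BN beta
print(len(block.non_trainable_weights))  # 2: BN moving mean and moving variance
```

Note that the batch normalization statistics are state too, even though they are not trained by gradient descent.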

Advantages and Trade-offs of Custom Layers

The main advantages of creating a custom layer are:

- Incorporating Domain Knowledge: Custom layers enable you to incorporate domain expertise into your models. You can express specific insights about the data as a mathematical computation inside the layer, potentially improving the model's ability to capture relevant patterns.

- Tailored Functionality: Instead of being limited to the prebuilt layers in the Keras library, you can add your own processing logic to the model.

- Efficiency: A single, efficiently implemented custom operation can sometimes be faster or more memory-efficient than composing several standard layers to achieve the same result.

However, there are some trade-offs to consider when implementing custom layers:

- Maintenance: Custom layers need to be maintained over time and may have to be updated as new versions of the framework are released.

- Complexity: Creating custom layers requires a solid understanding of neural networks and of the model architecture, and hand-written code leaves more room for errors.

Understanding Custom Loss Functions

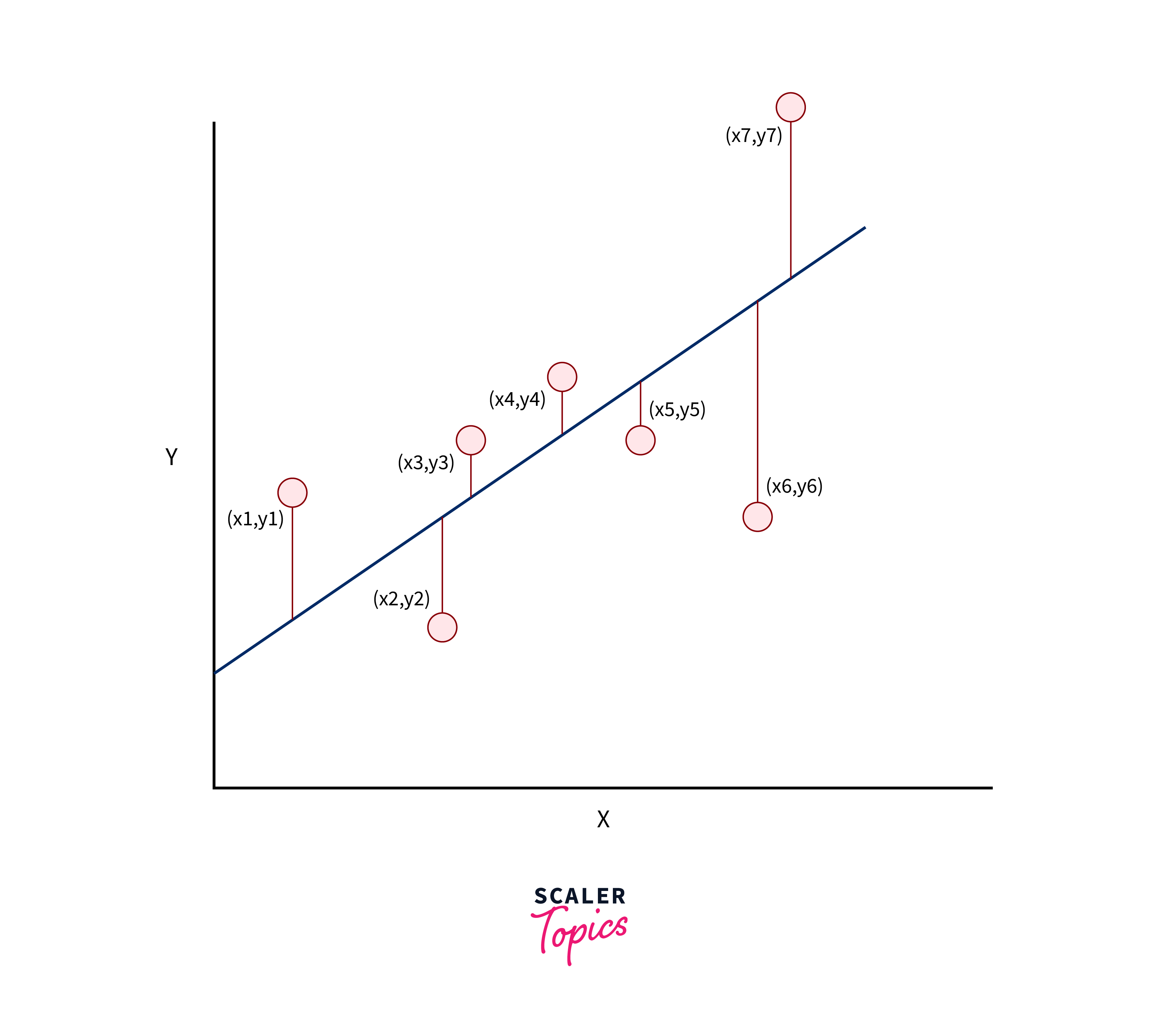

Loss functions (also known as objective functions or cost functions) quantify the difference between predicted values and ground truth labels. Custom loss functions can be invaluable when standard loss functions do not capture the nuances of your problem, or when you want to optimize for specific criteria.

Loss functions are one of the most important parts of a machine learning model. The loss is the value the optimizer tries to minimize during training: the lower it is, the closer the model's predictions are to the expected outputs.

During training, the loss is computed for each batch, its gradients with respect to the model weights are obtained via backpropagation, and the optimizer uses those gradients to update the weights.
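A minimal sketch of this loop with `tf.GradientTape`, assuming a tiny linear model, random placeholder data, and mean squared error as the loss. Any custom loss with the same `(y_true, y_pred)` signature could be substituted for `loss_fn`.

```python
import tensorflow as tf

tf.random.set_seed(0)

# Placeholder model and data (assumptions for illustration).
model = tf.keras.Sequential([tf.keras.Input(shape=(4,)), tf.keras.layers.Dense(1)])
optimizer = tf.keras.optimizers.SGD(learning_rate=0.1)
loss_fn = tf.keras.losses.MeanSquaredError()

x = tf.random.normal([32, 4])
y = tf.random.normal([32, 1])


def train_step(x, y):
    with tf.GradientTape() as tape:
        y_pred = model(x, training=True)
        loss = loss_fn(y, y_pred)  # 1. compute the loss
    # 2. backpropagation: gradients of the loss w.r.t. the weights
    grads = tape.gradient(loss, model.trainable_weights)
    # 3. the optimizer updates the weights using the gradients
    optimizer.apply_gradients(zip(grads, model.trainable_weights))
    return loss


first_loss = float(train_step(x, y))
for _ in range(20):
    last_loss = float(train_step(x, y))
print(first_loss, last_loss)  # the loss should go down
```

`model.fit` runs essentially this loop for you; writing it by hand is mainly useful for debugging or non-standard training.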

Keras provides several built-in loss functions, such as binary cross-entropy, categorical cross-entropy, mean squared error, and mean absolute error. However, these do not always reflect what matters for a particular task, so a user-defined loss function can sometimes improve the model's performance.

For example, the Dice score and Intersection over Union (IoU) are important metrics for segmentation models, since they measure how well the predicted mask overlaps the ground-truth mask. Older tf.keras releases do not include a Dice loss among their built-in losses, so it is common to write one as a custom loss function. Such a loss lets the model optimize directly for the overlap it will be evaluated on.
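A minimal sketch of a soft Dice loss, assuming `y_true` is a binary mask and `y_pred` holds per-pixel probabilities (for example from a sigmoid output), with the batch on the first axis and a statically known rank. The function names and the `smooth` term, which avoids division by zero on empty masks, are hypothetical choices.

```python
import tensorflow as tf


def dice_coefficient(y_true, y_pred, smooth=1.0):
    """Hypothetical soft Dice coefficient, computed per sample."""
    y_true = tf.cast(y_true, y_pred.dtype)
    axes = tuple(range(1, len(y_pred.shape)))  # reduce over all axes except the batch
    intersection = tf.reduce_sum(y_true * y_pred, axis=axes)
    total = tf.reduce_sum(y_true, axis=axes) + tf.reduce_sum(y_pred, axis=axes)
    return (2.0 * intersection + smooth) / (total + smooth)


def dice_loss(y_true, y_pred, smooth=1.0):
    """Hypothetical Dice loss: 1 - Dice coefficient."""
    return 1.0 - dice_coefficient(y_true, y_pred, smooth)


mask = tf.constant([[1.0, 1.0, 0.0, 0.0]])
print(dice_loss(mask, mask).numpy())                                 # [0.]
print(dice_loss(mask, tf.constant([[0.0, 0.0, 1.0, 1.0]])).numpy())  # approximately [0.8]
```

Because it has the `(y_true, y_pred)` signature, it can be passed to `model.compile(loss=dice_loss)`.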

Create Custom Loss Functions

In this section, we will create a custom loss function using the Keras API. During training, the model produces predicted values of the target variable for each batch, and the loss function compares them with the actual values. A loss function therefore takes two parameters: the actual values and the predicted values.

In Keras, these parameters are conventionally named y_true and y_pred. You do not pass them yourself; Keras supplies them from the training data and the model's output when the model is trained.
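A minimal custom loss with this signature, assuming a regression task. `custom_mse` is a hypothetical name for a hand-written mean squared error, and the tiny model and random data only show Keras passing `y_true` and `y_pred` into it during `fit`.

```python
import numpy as np
import tensorflow as tf


def custom_mse(y_true, y_pred):
    """Hypothetical custom loss: mean squared error computed per sample."""
    y_true = tf.cast(y_true, y_pred.dtype)  # make sure both tensors share a dtype
    return tf.reduce_mean(tf.square(y_true - y_pred), axis=-1)


# Keras calls custom_mse(y_true, y_pred) for every batch during fit().
model = tf.keras.Sequential([tf.keras.Input(shape=(3,)), tf.keras.layers.Dense(1)])
model.compile(optimizer="sgd", loss=custom_mse)

x = np.random.rand(16, 3).astype("float32")
y = np.random.rand(16, 1).astype("float32")
history = model.fit(x, y, epochs=1, verbose=0)
print(history.history["loss"])
```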

Next, we will write our own version of categorical cross-entropy using the Keras and TensorFlow APIs. Building the loss only from TensorFlow operations keeps it differentiable and lets TensorFlow compile it into a graph for faster training.
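A minimal sketch, assuming `y_true` is one-hot encoded and `y_pred` contains predicted probabilities (for example from a softmax layer). The function name is hypothetical; comparing its output with the built-in `tf.keras.losses.categorical_crossentropy` is a quick sanity check.

```python
import numpy as np
import tensorflow as tf


def custom_categorical_crossentropy(y_true, y_pred):
    """Hypothetical re-implementation of categorical cross-entropy on probabilities."""
    y_true = tf.cast(y_true, y_pred.dtype)
    # Normalize so each row of predictions sums to 1.
    y_pred = y_pred / tf.reduce_sum(y_pred, axis=-1, keepdims=True)
    # Clip to avoid log(0).
    eps = 1e-7
    y_pred = tf.clip_by_value(y_pred, eps, 1.0 - eps)
    # Per-sample cross-entropy: -sum(y_true * log(y_pred)).
    return -tf.reduce_sum(y_true * tf.math.log(y_pred), axis=-1)


y_true = tf.constant([[1.0, 0.0, 0.0], [0.0, 0.0, 1.0]])
y_pred = tf.constant([[0.7, 0.2, 0.1], [0.1, 0.3, 0.6]])

ours = custom_categorical_crossentropy(y_true, y_pred)
builtin = tf.keras.losses.categorical_crossentropy(y_true, y_pred)
print(ours.numpy(), builtin.numpy())  # both approximately [0.357 0.511]
```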

Other loss functions, such as Jaccard loss and Dice loss for segmentation tasks, can be written in the same way.

Advanced Concepts in Custom Loss Functions

Advanced custom loss functions can incorporate domain-specific knowledge. For example, image segmentation tasks often use Dice loss or Jaccard loss, which is based on Intersection over Union (IoU), to reward accurate overlap between predicted and true masks. Custom loss functions are used in medical imaging, autonomous driving, and many other fields, and they can play a significant role in improving the evaluation metrics of image segmentation models.
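A minimal sketch of a soft Jaccard (IoU) loss, assuming binary masks for `y_true`, per-pixel probabilities for `y_pred`, the batch on the first axis, and a statically known rank. The function name and the `smooth` term are hypothetical choices.

```python
import tensorflow as tf


def jaccard_loss(y_true, y_pred, smooth=1.0):
    """Hypothetical soft Jaccard loss: 1 - IoU, computed per sample."""
    y_true = tf.cast(y_true, y_pred.dtype)
    axes = tuple(range(1, len(y_pred.shape)))  # reduce over all axes except the batch
    intersection = tf.reduce_sum(y_true * y_pred, axis=axes)
    # |A ∪ B| = |A| + |B| - |A ∩ B|
    union = tf.reduce_sum(y_true + y_pred, axis=axes) - intersection
    return 1.0 - (intersection + smooth) / (union + smooth)


y_true = tf.constant([[1.0, 1.0, 0.0, 0.0]])
y_pred = tf.constant([[1.0, 0.0, 0.0, 0.0]])
print(jaccard_loss(y_true, y_pred).numpy())  # approximately [0.3333]
```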

- Dice Loss: The Dice loss measures the similarity between predicted and ground-truth segmentations. It is based on the overlap between the two masks, so it accounts for both false positives and false negatives. The Dice coefficient is defined as $\text{Dice} = \frac{2\,|A \cap B|}{|A| + |B|}$, where $A$ is the predicted mask and $B$ is the ground-truth mask, and the Dice loss is $1 - \text{Dice}$. Dice loss is widely used in medical imaging, where overlap measures such as Dice and IoU are the standard way to judge pixel-accurate segmentation models.

- Multi-Task Losses: Autonomous driving systems often require models to predict multiple tasks simultaneously, such as object detection, lane detection, and speed estimation. Multi-task losses combine different loss components to optimize the model for each task while balancing their contributions.
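A minimal sketch of a multi-task loss as a weighted sum of per-task losses, assuming one classification head that outputs logits and one regression head (for example, a speed estimate). The function name, the choice of tasks, and the weights `w_cls` and `w_speed` are hypothetical; in practice the weights are tuned, and for multi-output Keras models the `loss_weights` argument of `compile` can perform the same weighting.

```python
import tensorflow as tf


def multi_task_loss(cls_true, cls_logits, speed_true, speed_pred, w_cls=1.0, w_speed=0.5):
    """Hypothetical weighted sum of a classification loss and a regression loss."""
    # Task 1: classification with integer labels and raw logits.
    cls_loss = tf.reduce_mean(
        tf.keras.losses.sparse_categorical_crossentropy(cls_true, cls_logits, from_logits=True)
    )
    # Task 2: regression (e.g. speed) with mean squared error.
    speed_loss = tf.reduce_mean(tf.square(speed_true - speed_pred))
    # The weights balance how much each task contributes to the total gradient.
    return w_cls * cls_loss + w_speed * speed_loss


total = multi_task_loss(
    cls_true=tf.constant([0, 1]),
    cls_logits=tf.constant([[10.0, -10.0], [-10.0, 10.0]]),  # confident, correct predictions
    speed_true=tf.constant([3.0, 5.0]),
    speed_pred=tf.constant([1.0, 3.0]),                      # each off by 2, so MSE = 4
)
print(float(total))  # approximately 2.0 (= 0.5 * 4 + a near-zero classification loss)
```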

Custom Functions in Transfer Learning and Domain Adaptation

Transfer learning and domain adaptation are techniques that leverage pre-trained models to improve the performance of machine learning tasks in scenarios where labeled data might be limited or the target domain is different from the source domain. Custom layers and loss functions play a significant role in enhancing the effectiveness of these techniques. Now, we'll see how to use custom layers and loss functions in transfer learning.

Let's consider an example of using transfer learning to classify medical images of different organs. You have a pre-trained model that was originally trained on a large dataset for general image classification tasks. However, you want to adapt this model to accurately classify different organs in medical images.

In this example:

- The pre-trained ResNet50 model is loaded as the base model.

- Custom layers are added on top of the base model to adapt it to the new task. These layers include global average pooling, dense layers, and dropout for regularization.
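The code for this example is not reproduced in the text. A minimal sketch consistent with the description, assuming 224x224 RGB inputs, a frozen ResNet50 base, and integer class labels; `build_organ_classifier`, the layer sizes, the dropout rate, and the optimizer settings are hypothetical choices. Passing `weights=None` skips downloading the ImageNet weights, which is convenient for quick tests.

```python
import tensorflow as tf


def build_organ_classifier(num_classes, weights="imagenet", input_shape=(224, 224, 3)):
    """Hypothetical helper: ResNet50 base + custom classification head."""
    # Pre-trained convolutional base without its ImageNet classification head.
    base_model = tf.keras.applications.ResNet50(
        weights=weights, include_top=False, input_shape=input_shape
    )
    base_model.trainable = False  # freeze pre-trained weights while training the new head

    # Inputs are assumed to be preprocessed with tf.keras.applications.resnet50.preprocess_input.
    inputs = tf.keras.Input(shape=input_shape)
    x = base_model(inputs, training=False)  # keep BatchNorm layers in inference mode
    x = tf.keras.layers.GlobalAveragePooling2D()(x)
    x = tf.keras.layers.Dense(256, activation="relu")(x)
    x = tf.keras.layers.Dropout(0.5)(x)  # regularization for a small dataset
    outputs = tf.keras.layers.Dense(num_classes, activation="softmax")(x)

    model = tf.keras.Model(inputs, outputs)
    model.compile(
        optimizer=tf.keras.optimizers.Adam(learning_rate=1e-3),
        loss="sparse_categorical_crossentropy",  # a custom loss function could be passed here
        metrics=["accuracy"],
    )
    return model


# model = build_organ_classifier(num_classes=5)  # downloads ImageNet weights on first use
```

After the new head has been trained, a common next step is to unfreeze some of the top ResNet50 layers and continue training with a low learning rate (fine-tuning).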

By fine-tuning the pre-trained model with custom layers and loss functions, you leverage the knowledge embedded in the pre-trained weights while adapting the model to your specific task and domain. This approach often leads to better performance compared to training from scratch, especially when labeled data is limited or the target domain differs from the source domain.

Best Practices and Tips for Custom Layer and Loss Functions

Custom layers and custom Keras loss functions can be rewarding, but they can also cause problems if they are not used carefully. Here are some best practices to follow when using custom layers or loss functions.

- Thorough Testing: Test your custom layers and loss functions rigorously to ensure they are functioning as intended.

- Reuse: Encapsulate frequently used custom layers as reusable components to simplify future model development.

- Efficiency: Consider using TensorFlow's built-in functions for common operations to ensure optimal performance.

- Documentation: Provide clear documentation for your custom layers to facilitate collaboration and understanding.

- Validation: Validate the effectiveness of your custom loss function through experiments and comparisons with standard options.
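As an illustration of the testing advice, here is a minimal sketch of a few checks worth running on any custom layer: the forward pass gives the expected values, every trainable weight receives a gradient, and the layer can be re-created from its config. `ScaleLayer` and `check_custom_layer` are hypothetical names; a real project would typically run such checks inside a test framework such as pytest.

```python
import numpy as np
import tensorflow as tf


class ScaleLayer(tf.keras.layers.Layer):
    """Hypothetical layer that multiplies its input by one learnable scalar."""

    def __init__(self, init_value=1.0, **kwargs):
        super().__init__(**kwargs)
        self.init_value = init_value

    def build(self, input_shape):
        self.scale = self.add_weight(
            shape=(),
            initializer=tf.keras.initializers.Constant(self.init_value),
            trainable=True,
            name="scale",
        )

    def call(self, inputs):
        return inputs * self.scale

    def get_config(self):
        config = super().get_config()
        config.update({"init_value": self.init_value})
        return config


def check_custom_layer(layer, x, expected):
    """Basic checks: forward values, gradient flow and config round-trip."""
    with tf.GradientTape() as tape:
        y = layer(x)
        loss = tf.reduce_sum(y)
    # 1. The forward pass produces the expected values.
    np.testing.assert_allclose(y.numpy(), expected, rtol=1e-6)
    # 2. Every trainable weight receives a gradient (none is disconnected).
    grads = tape.gradient(loss, layer.trainable_weights)
    assert all(g is not None for g in grads)
    # 3. The layer can be re-created from its config (needed for saving and reuse).
    clone = type(layer).from_config(layer.get_config())
    assert clone.get_config()["init_value"] == layer.get_config()["init_value"]
    return True


x = tf.constant([[1.0, 2.0, 3.0]])
print(check_custom_layer(ScaleLayer(init_value=2.0), x, expected=[[2.0, 4.0, 6.0]]))
```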

Conclusion

- Custom layers and loss functions are essential tools in the arsenal of deep learning practitioners.

- They provide the flexibility to design intricate neural network architectures and tailor loss computations to the specifics of a given problem.

- By leveraging custom components, researchers and engineers can push the boundaries of what's achievable in machine learning, while also advancing the field as a whole.