Running Inference on Models in the Browser

Overview

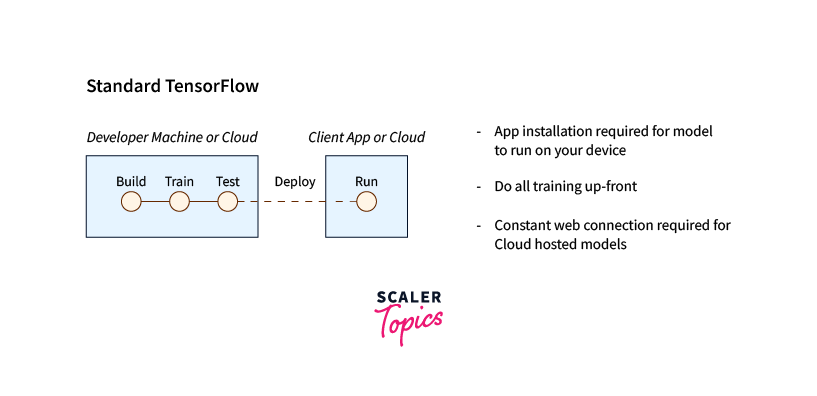

Traditionally, running machine learning models required significant computational resources and was often done on specialized hardware or cloud environments. As machine learning evolves, researchers and developers constantly seek new ways to make AI more accessible and efficient. One exciting development in this area is the ability to run inference on machine learning models in web browsers directly.

What is TensorFlow.js?

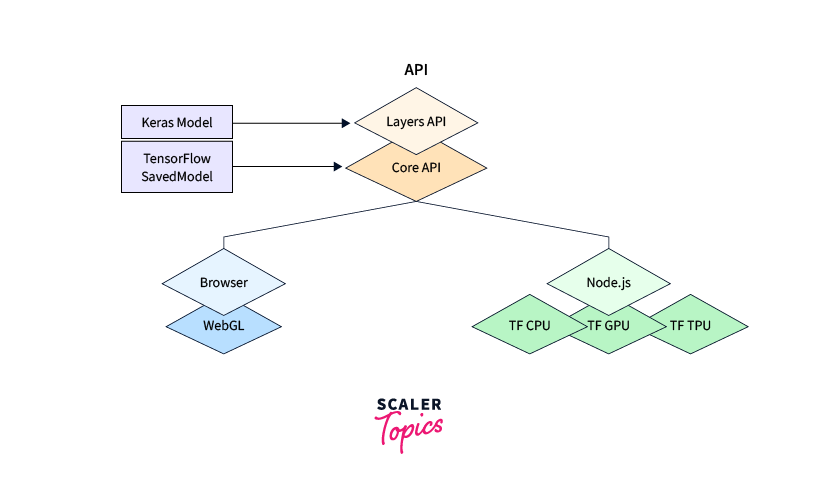

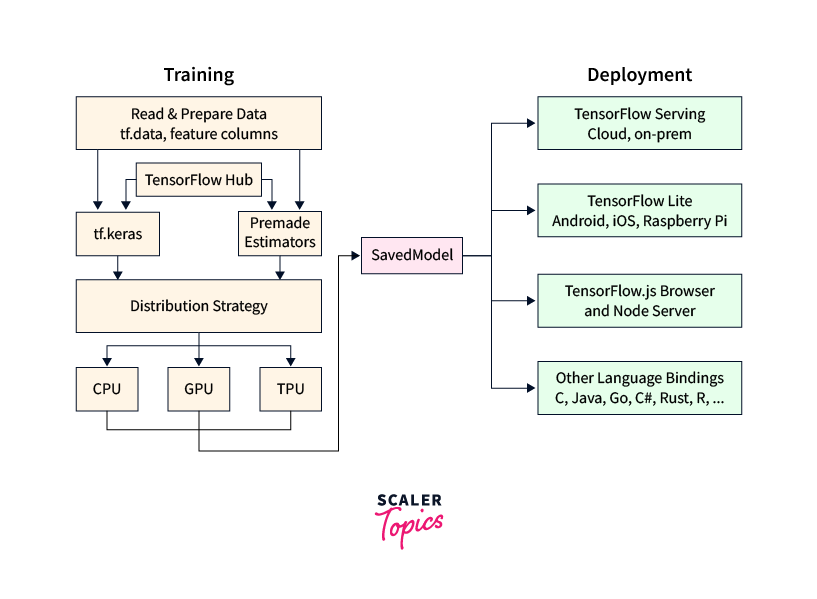

TensorFlow.js brings the capabilities of TensorFlow, a leading machine learning framework, to the browser environment. By executing ML models in the browser, developers can harness the power of AI directly in web applications, eliminating the need for server-side computation or cloud dependencies. We can also use Node.js, an open-source server-side Javascript run-time environment built on Chrome's JavaScript (V8)Engine. Tensorflow.js helps to create intelligent and responsive web experiences, opening up a whole new world of possibilities. By harnessing the power of TensorFlow.js and its rich ecosystem of tools and APIs, developers can leverage the capabilities of machine learning models without relying on external servers, providing real-time and personalized experiences to users.

TensorFlow.js comprises two main components:

TensorFlotw.js Core: This is the foundational infrastructure for building and executing machine learning models in JavaScriptIt includes a set of APIs that allow developers to define, train, and run models using tensors,TensorFlow.js Core provides low-level operations and utilities for mathematical computations, model manipulation, and data transformations.

TensorFlow.js Layers: It is built on top of TensorFlow.js Core and provides a higher-level API for defining and training neural networks. Tensorflow.js offers a familiar interface inspired by Keras,TensorFlow.js Layers include pre-defined layers, such as convolutional layers, dense layers, and recurrent layers, that can be used to construct neural networks easily.

Loading the Model in the Browser

Developers can convert models trained in popular formats, such as TensorFlow SavedModel or Keras, into formats compatible with TensorFlow.js. Once loaded, these models can be seamlessly integrated into web applications, allowing for real-time inference without requiring round-trip requests to external servers.

Step 1: Convert the Model

Before loading the model into the browser, we should check if it is compatible with TensorFlow.js, and on the negative hint, we should convert it. This conversion typically requires exporting the model from TensorFlow in a supported format, such as SavedModel or HDF5, and then converting it using the TensorFlow.js Converter tool. The TensorFlow.js converter has two components:

- A command line utility that converts Keras and TensorFlow models for use in TensorFlow.js.

- An API for loading and executing the model in the browser with TensorFlow.js.

Depending on which type of model you’re trying to convert, you’ll need to pass different arguments to the converter. For example, let’s say you have saved a Keras model named model.h5 to your tmp/ directory. To convert your model using the TensorFlow.js converter, you can run the following command:

This will convert the model at /tmp/model.h5 and output a model.json file along with binary weight files to your tmp/tfjs_model/ directory.

Step 2: Include TensorFlow.js Library

In the HTML file of the web application, include the TensorFlow.js library by adding the following script tag.

Step 3: Load the Model

In the JavaScript code, the tf.loadLayersModel() function is used to load the converted model. This function fetches the model file and returns a promise that resolves to the loaded model.

Replace path/to/model/model.json with the correct path to the model file on your server, or use a relative path if the model file is stored locally.

Run Inference in the Browser

Once the model is loaded, TensorFlow.js equips developers with the tools to run inference on models in the browser. Developers can pass input data to the models using its powerful APIs and receive real-time predictions or desired outputs. The browser becomes a stage where AI algorithms come to life, responding to user interactions with lightning-fast intelligence and delivering dynamic, personalized experiences.

Above, the runInference() function takes input data, converts it into a TensorFlow.js tensor, and then passes it to the loaded model for prediction. The predictions are obtained using the predict() method of the model, and the results are logged to the console.

Visualize and Interact with Model Outputs

With TensorFlow.js, developers can enchant users by visualizing and interacting with model outputs. Libraries like D3.js or Plotly.js can be utilized to create stunning visualizations that bring AI-driven insights to life. Users can explore data through interactive charts and graphs, unlocking hidden patterns and understanding the model's predictions more deeply.

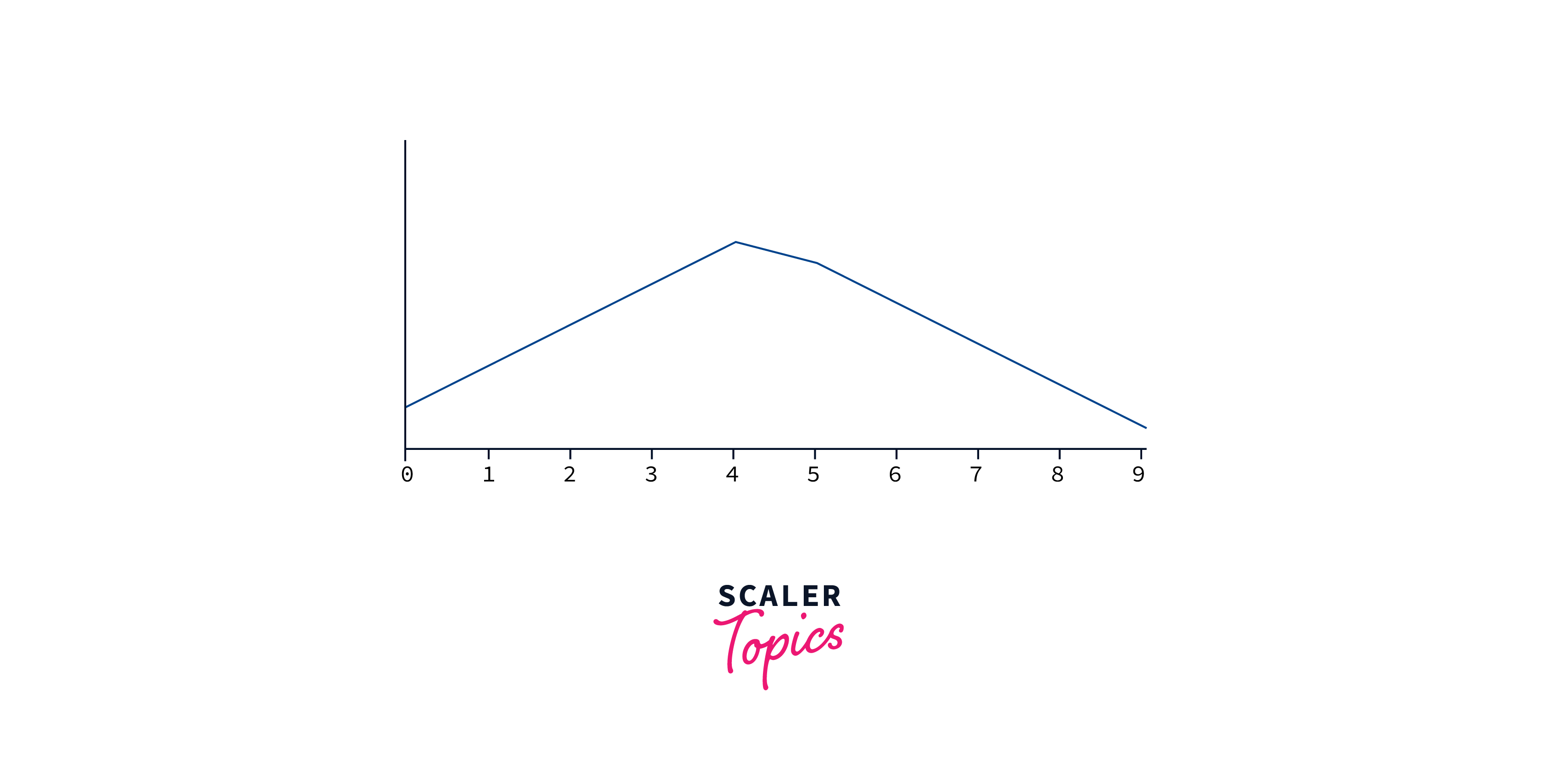

The code begins by defining two functions:

-

generateData(): This function generates sample data for demonstration purposes. It returns an object data with a property y, which is an array of model output values.

-

visualizeOutputs(data): This function takes the data object as an argument and visualizes the model outputs using D3.js. It creates an SVG element, defines a line generator using D3.js, and draws the line chart based on the provided data

-

The function defines a line generator using d3.line(). The line generator is responsible for creating the path for the line chart. It uses the data index i to calculate the x-coordinate, and the model output value d to calculate the y-coordinate.

-

Next, the function appends a path element path to the SVG. The path element is used to draw the line chart. It sets the data for the path to data.y using the datum method.

-

The d attribute of the path is set to the result of the line generator, which creates the actual path for the line chart based on the provided data.

We can use WebGL for high-performance numerical computations on GPUs

Optimize Performance and Efficiency

TensorFlow.js is not just about functionality, it also prioritizes performance and efficiency. The library leverages WebGL acceleration, tapping into the power of GPUs to expedite computations and provide a smooth user experience. Additionally, TensorFlow.js offers techniques like model quantization, allowing developers to reduce the model size and inference time without compromising accuracy.

-

const model = await tf.loadLayersModel('model/model.json');: This function loads a pre-trained machine learning model from the file model/model.json. The model is loaded as a LayersModel object provided by TensorFlow.js. The await keyword is used here to wait until the model is fully loaded before proceeding with the next instructions.

-

tf.setBackend('webgl');: The setBackend function from TensorFlow.js is used to set the computation backend to webgl. WebGL is a graphics library that can be used for GPU-accelerated computations, which can significantly speed up the computation for certain machine learning operations.

-

The loaded model is then used to perform a prediction on a sample input. In this case, a 2D tensor with the values [[0.1, 0.2, 0.3]] is provided as input to the model's prediction function. This step is included to trigger potential one-time setup costs when using WebGL as the backend.

-

console.log('Model optimized for WebGL acceleration');: After the necessary configurations and setup are done, the function logs a message to the console to indicate that the model has been optimized for WebGL acceleration.

-

optimizePerformance();: This line calls the optimizePerformance function, initiating the process of optimizing the model's performance using WebGL acceleration.

The code loads a pre-trained machine learning model using TensorFlow.js, sets the computation backend to WebGL, and then triggers a prediction with a sample input to ensure any one-time setup costs related to WebGL are handled. This process optimizes the model's performance for computations that can be accelerated by WebGL, potentially leading to faster and more efficient predictions.

It's essential to consider the model size and complexity when deploying to TensorFlow.js, as client-side inference has hardware and memory limitations compared to running on more powerful server-side hardware

Handle Model Updates and Versioning

As the AI landscape evolves, TensorFlow.js ensures seamless integration of model updates and versioning within web applications. Developers can smoothly transition between different model versions, facilitating continuous improvement and enabling applications to evolve alongside the advancements in machine learning.Now the latest Tensorflow.js is of version-4.8.0

-

const modelVersion = '1.0.0';: The code sets a constant modelVersion to the string 1.0.0, representing the current version of the machine learning model.

-

const model = await tf.loadLayersModel(model/${modelVersion}/model.json);: The code loads the current version of the machine learning model using TensorFlow.js' loadLayersModel function. It constructs the URL for the model's JSON file based on the modelVersion, and await is used to wait for the model to be fully loaded before proceeding.

-

const newModelVersion = '2.0.0';: The code sets a constant newModelVersion to the string 2.0.0, representing the new version of the machine learning model.

-

const newModel = await tf.loadLayersModel(model/${newModelVersion}/model.json);: The code loads the new version of the machine learning model using the same loadLayersModel function. It constructs the URL for the model's JSON file based on the newModelVersion, and await is used to wait for the new model to be fully loaded. Also involves updating the model's architecture or weights, modifying the data preprocessing or post-processing steps, or any other changes required to accommodate differences between the old and new model versions.

Note that these code examples use TensorFlow.js version 3.x and assume the availability of the required dependencies. And many libraries like JAX can also be used with tensorflow.js

Running Inference in the Browser Using Iris Data

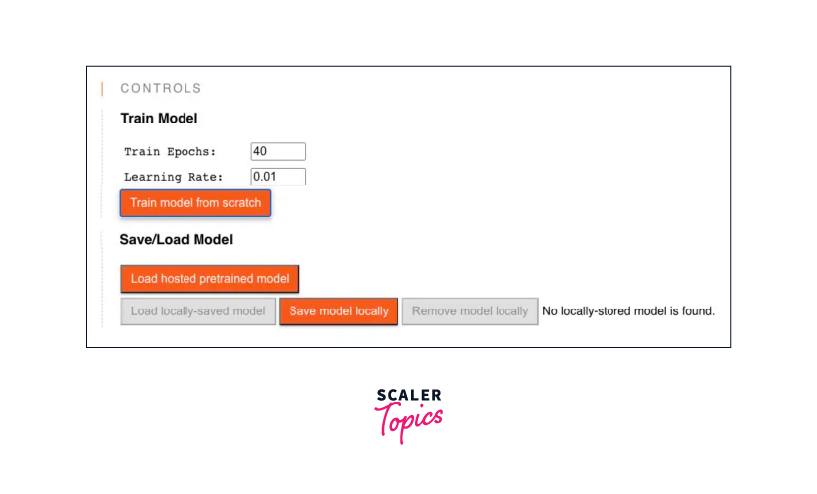

The above illustrates the inference of a TensorFlow model within a web environment, offering interactive capabilities for training the model with custom hyperparameters. Additionally, it provides the flexibility to load and save the model to a specified location, empowering users with control over the entire training process.

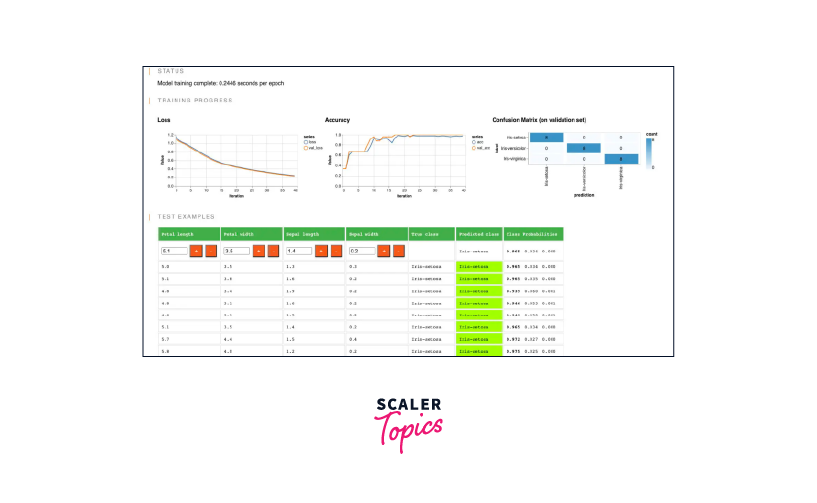

The image showcases essential visualizations, including the Loss and Accuracy metrics and a Confusion Matrix. These visualizations provide valuable insights into the model's performance, illustrating the predicted class labels and their corresponding probability distributions.

The displayed outcome results from training a new model based on one of the TensorFlow.js examples, utilizing the Iris dataset. Feel free to explore further examples and engage in a hands-on learning experience by visiting the repository here.

Conclusion

- TensorFlow.js enables running machine learning models directly in the browser using JavaScript, providing benefits like privacy, reduced latency, and offline capabilities.

- It offers functionalities to load pre-trained models, perform inference, visualize and interact with model outputs, optimize performance, and handle model updates and versioning.

- By leveraging TensorFlow.js, developers can build powerful browser-based applications incorporating machine learning capabilities, opening up new possibilities for web development.

- If you want to perform tensorflow distributed training you should use the Python version of tensorflow. And later you can export the trained model and load it into Tensorflow.js for deployment in the browser or Node.js