TensorFlow for Android

Overview

This blog post walks through building mobile vision apps for Android with TensorFlow and TensorFlow Lite, covering setup, model conversion, and deployment. We start by setting up TensorFlow Lite in Android Studio, loading a pre-trained model, and running inference. Next, we convert TensorFlow models to the TensorFlow Lite format, with optional quantization to improve performance. We then build an Android app that captures images, preprocesses them, and runs inference with the Interpreter. Finally, we discuss strategies for distributing the app together with its TensorFlow Lite model, review performance optimization techniques and limitations, and close with some encouragement to experiment with mobile vision applications.

Introduction

In today's world, mobile devices have become powerful tools for running machine learning models on the go. With the advent of TensorFlow and TensorFlow Lite, developers can now bring the power of deep learning to Android applications, enabling them to perform complex vision tasks like image classification and object detection right on their smartphones. In this technical blog, we will walk through the process of building a mobile vision app for Android using TensorFlow Lite step by step.

Getting Started with TensorFlow for Android

With TensorFlow's lightweight variant, TensorFlow Lite, developers can seamlessly integrate pre-trained models into their Android applications. This enables features like image recognition, object detection, and natural language processing, all running efficiently on-device without requiring constant internet connectivity. Whether you're building a fitness app that tracks exercise form, a language translation tool, or any machine learning app, TensorFlow for Android opens up a new realm of possibilities. We shall delve into the simple steps to get started and discover the endless potential of AI in the palm of your hand.

Step - 1: Install TensorFlow Lite

Open your Android project in Android Studio and add the TensorFlow Lite dependency (the org.tensorflow:tensorflow-lite artifact) to the dependencies block of your app module's build.gradle file.

Step - 2: Load the Model

Create a new class called TensorFlowHelper.java to handle TensorFlow-related operations. Load a pre-trained TensorFlow model into your Android app using the TensorFlow Lite Interpreter.
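
As a rough illustration of the loading step, the sketch below memory-maps a model file into a read-only buffer using only the Java standard library. The class name ModelFileLoader and the temporary stand-in file are hypothetical. On Android the file would usually be opened from the assets directory (for example through an AssetFileDescriptor and its start offset and declared length), and the resulting buffer would then be passed to the Interpreter constructor.

```java
import java.io.IOException;
import java.nio.MappedByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

/** Hypothetical helper: memory-maps a .tflite file so it can be handed to an interpreter. */
final class ModelFileLoader {
    private ModelFileLoader() {}

    /** Maps the whole file read-only; the mapping stays valid after the channel is closed. */
    static MappedByteBuffer mapModel(Path modelPath) throws IOException {
        try (FileChannel channel = FileChannel.open(modelPath, StandardOpenOption.READ)) {
            return channel.map(FileChannel.MapMode.READ_ONLY, 0, channel.size());
        }
    }

    public static void main(String[] args) throws IOException {
        // Stand-in for a real model: a temporary file with a few placeholder bytes.
        Path placeholder = Files.createTempFile("model", ".tflite");
        Files.write(placeholder, new byte[] {1, 2, 3, 4});

        MappedByteBuffer buffer = mapModel(placeholder);
        System.out.println("Mapped " + buffer.capacity() + " bytes");
        // On Android, this buffer would typically be passed on as: new Interpreter(buffer)
    }
}
```

Memory-mapping avoids copying the whole model onto the Java heap, which matters for larger models on memory-constrained devices.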

Let's discuss the exceptions that might occur while loading the model and processing images, and how they should be handled:

- FileNotFoundException: This exception occurs when the specified model file ("model.tflite" in this case) cannot be found in the assets directory.
- IOException: This exception can occur during various file operations, such as when reading the model file or setting up the interpreter.
- InterpreterException: This exception can occur during interpreter initialization or inference if there are issues with the model or the input data.
- OpenCVException: If you're using OpenCV for image processing, OpenCV-specific exceptions might occur due to issues like invalid image formats, incorrect parameters, or unavailable resources.
- TFLiteRuntimeException: This is a runtime exception specific to TensorFlow Lite. It can occur during interpreter initialization, during inference, or due to issues with the model or input/output tensors.

The exact exception classes depend on the libraries and versions you use. The TensorFlow Lite Java API, for example, typically reports interpreter problems as IllegalArgumentException or IllegalStateException, and OpenCV's Java bindings throw CvException.

It's generally recommended to handle exceptions gracefully by logging the error information and taking appropriate action based on the specific exception.
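
The handling advice above could be sketched as follows. This is a minimal, standard-library-only example, not the original helper: SafeModelReader and loadModelBytes are hypothetical names, and the empty-file check merely stands in for the validation a real interpreter would perform on a bad model. Each failure is logged and turned into an empty result so that the caller can decide how to react (for instance, by disabling the classification feature).

```java
import java.io.ByteArrayOutputStream;
import java.io.FileInputStream;
import java.io.FileNotFoundException;
import java.io.IOException;
import java.util.Optional;
import java.util.logging.Level;
import java.util.logging.Logger;

/** Hypothetical helper: reads a model file and degrades gracefully on failure. */
final class SafeModelReader {
    private static final Logger LOG = Logger.getLogger(SafeModelReader.class.getName());

    private SafeModelReader() {}

    /** Returns the model bytes, or an empty Optional if the model could not be loaded. */
    static Optional<byte[]> loadModelBytes(String path) {
        try (FileInputStream in = new FileInputStream(path)) {
            ByteArrayOutputStream out = new ByteArrayOutputStream();
            byte[] chunk = new byte[8192];
            int read;
            while ((read = in.read(chunk)) != -1) {
                out.write(chunk, 0, read);
            }
            if (out.size() == 0) {
                // Stand-in for the checks an interpreter would run on an invalid model.
                throw new IllegalArgumentException("Model file is empty: " + path);
            }
            return Optional.of(out.toByteArray());
        } catch (FileNotFoundException e) {
            // Must be caught before IOException, because it is a subclass of it.
            LOG.log(Level.WARNING, "Model file not found: " + path, e);
        } catch (IOException e) {
            LOG.log(Level.WARNING, "Could not read model file: " + path, e);
        } catch (IllegalArgumentException e) {
            LOG.log(Level.WARNING, "Model was rejected: " + path, e);
        }
        return Optional.empty();
    }
}
```

Returning an Optional keeps the error handling in one place while still forcing callers to consider the case where no model is available.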

TensorFlow Lite and Model Conversion

Before we can use a TensorFlow model on Android with TensorFlow Lite, we need to convert the model to the Lite format. The conversion process typically involves two steps:

Step - 1: Convert the Model to TensorFlow Lite

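To convert a TensorFlow model (usually in SavedModel format or a Keras model) to TensorFlow Lite, we use the TensorFlow Lite Converter from the Python API: tf.lite.TFLiteConverter.from_saved_model() for a SavedModel or tf.lite.TFLiteConverter.from_keras_model() for a Keras model, followed by a call to convert().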

Step - 2: Save the Converted Model

Once the model is converted to TensorFlow Lite format, convert() returns it as bytes, which we can write to a .tflite file for later use in Android.

To get a better-optimized model, you can apply quantization techniques to reduce the model size further and improve inference speed.

Building Android Apps with TensorFlow

Now that our TensorFlow Lite model is ready, let's integrate it into our Android app for mobile vision tasks.

Step - 1: Capturing Images

In your app's main activity, add a button or any other UI element to capture images using the device's camera.

- Import Statements: The code starts with importing necessary classes from various packages, including TensorFlow Lite, Android core classes, and the custom model class.
- MainActivity Class: This is the main activity class that represents the user interface and functionality of the Android application.
- AppCompatActivity: This is the base class for activities in Android that provides compatibility with older versions of Android through the AndroidX library. It provides essential methods and features required to create and manage the user interface of your app. Inherit from this class to create your app's main activity.
- onCreate(Bundle savedInstanceState): This method is a crucial part of the Android Activity lifecycle. It's called when the activity is first created. You use this method to initialize your activity, set up the user interface, and perform any necessary setup tasks. In your code, you're setting the content view using setContentView, initializing UI elements (buttons, image view, and text view), and setting up click listeners for buttons.
- Button: This UI element represents a clickable button in your app's user interface. In your code, you have buttons for capturing images using the camera (camera button) and selecting images from the gallery (gallery button). Click listeners attached to these buttons define what happens when they are clicked.
- ImageView: This UI element is used to display images in your app's user interface. In your code, it's the container where the captured or selected image will be displayed.
- TextView: This UI element is used to display text in your app's user interface. In your code, the result TextView is used to display the result of your mobile vision task, which will be generated using your TensorFlow Lite model.
- Intent: An object that represents an action to be performed, such as opening the camera or gallery. Intents allow you to launch activities, start services, send broadcasts, and more.
- PackageManager: This class provides methods to query and manipulate application packages on the Android device. You use it to check whether your app has the required camera permission before launching the camera.
- MediaStore: This class provides access to media files, including images and videos. You're using it to interact with the device's camera and image gallery, enabling you to capture images or select images from the gallery.
- Manifest.permission: This object represents various permissions that an Android app can request. You're checking and requesting the camera permission using Manifest.permission.CAMERA to ensure that the app has the necessary permission before attempting to use the camera.

- Member Variables:
  - Button camera, gallery: These are references to two buttons in the user interface, one for capturing an image with the camera and the other for selecting an image from the gallery.
  - ImageView imageView: This refers to an ImageView widget that displays the selected or captured image.
  - TextView result: This refers to a TextView widget displaying the result or prediction from the machine learning model.
  - int imageSize = 32: This variable sets the image size that will be processed by the machine learning model. It is set to 32, meaning the image will be resized to 32x32 pixels before it is fed to the model.
- onCreate() Method: This method is executed when the activity is created. It sets the content view to the XML layout file "activity_main" using setContentView(). It also initializes the member variables by finding their corresponding views from the XML layout using findViewById().
- OnClickListener for Camera Button: This listener is attached to the "camera" button. When the button is clicked, it checks whether the app has permission to access the camera. If permission is granted, it creates an intent for capturing an image using the device's camera (MediaStore.ACTION_IMAGE_CAPTURE) and starts the camera activity using startActivityForResult(). The constant 3 is used as the request code for the result, which will be used later to handle the captured image.
- OnClickListener for Gallery Button: This listener is attached to the "gallery" button. When the button is clicked, it creates an intent to pick an image from the device's gallery (Intent.ACTION_PICK) and starts the gallery activity using startActivityForResult(). The constant 1 is used as the request code for the result, which will be used later to handle the selected image.

Step - 2: Preprocessing Images

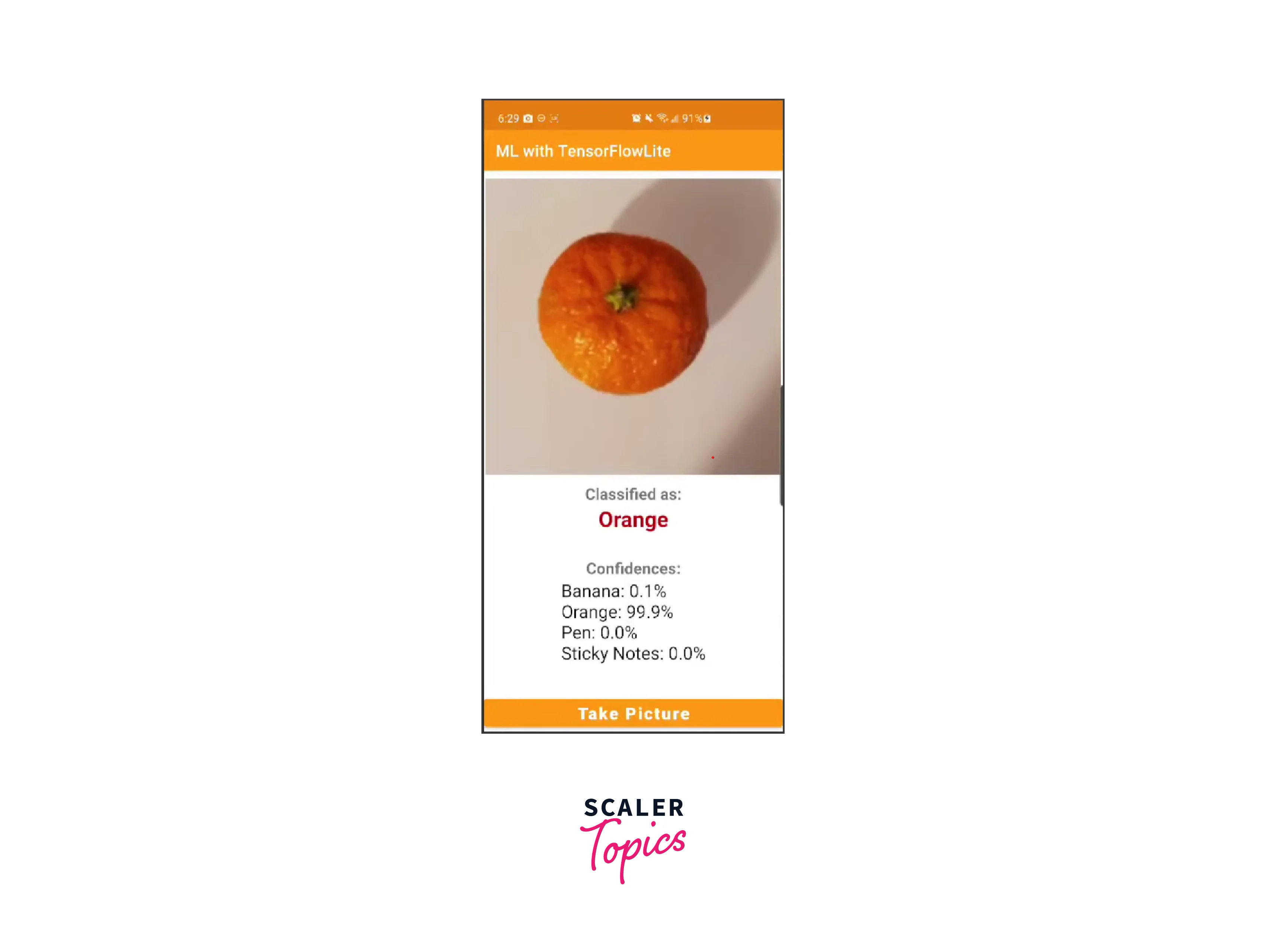

Create a method to preprocess the captured image before feeding it into the TensorFlow Lite model. The image arrives as a Bitmap, which is the starting point for preprocessing. The classifyImage(Bitmap image) method performs image classification with a pre-trained machine learning model that identifies specific objects or classes within an image.

This method takes a Bitmap image as input, preprocesses it, runs it through the model, and determines the most likely class the image belongs to. It encapsulates the complexity of preprocessing and model inference, giving the rest of the app a higher-level interface to the model's capabilities.
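
The preprocessing part of such a method might look roughly like the sketch below. It is written against plain Java so it can run anywhere: the int array stands in for the packed ARGB pixels that Bitmap.getPixels() would return for an already resized bitmap. The class and method names are hypothetical, and the sketch assumes a model that expects 32-bit float RGB input scaled to the range 0 to 1. Other models may expect a different normalization or 8-bit input.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

/** Hypothetical helper: turns packed ARGB pixels into a float input buffer. */
final class ImagePreprocessor {
    private ImagePreprocessor() {}

    /**
     * Converts imageSize x imageSize ARGB pixels into a direct buffer of
     * float RGB values in [0, 1], laid out pixel by pixel as R, G, B.
     */
    static ByteBuffer toInputBuffer(int[] argbPixels, int imageSize) {
        if (argbPixels.length != imageSize * imageSize) {
            throw new IllegalArgumentException("Expected " + (imageSize * imageSize)
                    + " pixels but got " + argbPixels.length);
        }
        // 4 bytes per float, 3 channels per pixel.
        ByteBuffer buffer = ByteBuffer.allocateDirect(4 * imageSize * imageSize * 3);
        buffer.order(ByteOrder.nativeOrder());
        for (int pixel : argbPixels) {
            buffer.putFloat(((pixel >> 16) & 0xFF) / 255.0f); // red
            buffer.putFloat(((pixel >> 8) & 0xFF) / 255.0f);  // green
            buffer.putFloat((pixel & 0xFF) / 255.0f);         // blue
        }
        buffer.rewind(); // Reset the position so the consumer reads from the start.
        return buffer;
    }
}
```

The alpha channel is dropped because classification models of this kind usually take three color channels only.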

Step - 3: Running Inference

Inside the TensorFlowHelper class, add a method to run inference on the preprocessed image.
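
Once the interpreter has filled an output array (on Android, typically through Interpreter.run(input, output)), the remaining work is to pick the class with the highest score. The sketch below shows only that post-processing step in plain Java. The class name ClassificationResult and the labels used in the example are hypothetical, and it assumes the model emits one score per class.

```java
/** Hypothetical helper: maps raw model scores to the most likely label. */
final class ClassificationResult {
    private ClassificationResult() {}

    /** Returns the index of the largest score; ties resolve to the first occurrence. */
    static int argMax(float[] scores) {
        if (scores.length == 0) {
            throw new IllegalArgumentException("scores must not be empty");
        }
        int best = 0;
        for (int i = 1; i < scores.length; i++) {
            if (scores[i] > scores[best]) {
                best = i;
            }
        }
        return best;
    }

    /** Returns the label that corresponds to the highest score. */
    static String topLabel(float[] scores, String[] labels) {
        if (scores.length != labels.length) {
            throw new IllegalArgumentException("scores and labels must have the same length");
        }
        return labels[argMax(scores)];
    }

    public static void main(String[] args) {
        // Example scores and labels are made up for illustration.
        float[] scores = {0.1f, 0.7f, 0.2f};
        String[] labels = {"cat", "dog", "bird"};
        System.out.println(topLabel(scores, labels));
    }
}
```

In the app, the returned label would be written to the result TextView.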

Deploying TensorFlow Models on Android

Deploying TensorFlow models on Android involves integrating the TensorFlow Lite models into Android apps and distributing the apps to end users. This section discusses the steps and considerations involved.

Model Conversion and Optimization

Before deploying a TensorFlow model on Android, it must be converted into TensorFlow Lite format. Additionally, model optimization techniques like quantization can be applied to reduce the model size and improve inference speed. The converted and optimized model should be saved in a .tflite file. Let's see this concept in brief.

Step - 1: Model Conversion

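Assume you have a trained TensorFlow model in the SavedModel format and want to convert it to TensorFlow Lite. In Python, tf.lite.TFLiteConverter.from_saved_model() creates a converter for the SavedModel directory, and calling convert() on it produces the TensorFlow Lite model.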

Step - 2: Model Quantization

To apply post-training quantization, set the converter's optimizations attribute to [tf.lite.Optimize.DEFAULT] before calling convert().

Step - 3: Saving the Converted and Optimized Model

Write the bytes returned by convert() to a file with the .tflite extension.

Remember that these steps provide a simplified overview. Actual implementation details may differ based on the TensorFlow version, the model's architecture, and the specific optimization techniques used. If you want to know more about optimization techniques, follow the link.

- Android Studio Setup: To start the deployment process, ensure you have Android Studio installed on your development machine. Create a new Android project or open an existing one.
- Add TensorFlow Lite Dependency: Include the TensorFlow Lite dependency in your Android project's build.gradle file.

After loading the model and integrating the app, let's move on to the deployment phase.

- APK Deployment: To distribute your Android app with the TensorFlow Lite model, generate a signed APK and publish it on the Google Play Store or any other app distribution platform. Users can download and install the app on their Android devices.
- On-device Model Update: Consider implementing an update mechanism within your app to allow users to replace the existing model with a newer version. You can continuously improve the model and deliver updated user features.
- Testing and Optimization: Thoroughly test the app with the TensorFlow Lite model on various Android devices to ensure it functions as expected. Optimize the model and the app based on performance and user feedback.
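
When comparing devices or model variants during testing, it helps to measure inference latency in a consistent way. The following is a small, hypothetical timing helper in plain Java. The Runnable stands in for whatever call runs one inference in your app, and the warm-up runs are there because the first few executions are often slower than steady-state ones.

```java
/** Hypothetical helper: measures the average wall-clock time of a repeated task. */
final class LatencyBenchmark {
    private LatencyBenchmark() {}

    /**
     * Runs the task warmupRuns times without timing, then timedRuns times with timing,
     * and returns the average duration of a timed run in milliseconds.
     */
    static double averageMillis(Runnable task, int warmupRuns, int timedRuns) {
        if (timedRuns <= 0) {
            throw new IllegalArgumentException("timedRuns must be positive");
        }
        for (int i = 0; i < warmupRuns; i++) {
            task.run();
        }
        long start = System.nanoTime();
        for (int i = 0; i < timedRuns; i++) {
            task.run();
        }
        long elapsedNanos = System.nanoTime() - start;
        return elapsedNanos / 1_000_000.0 / timedRuns;
    }

    public static void main(String[] args) {
        // Placeholder workload; in an app this would invoke one model inference.
        double millis = averageMillis(() -> Math.sqrt(12345.678), 10, 100);
        System.out.println("Average latency: " + millis + " ms");
    }
}
```

Averages hide outliers, so for real-time features it may also be worth recording the slowest runs.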

TensorFlow for Mobile Vision Tasks

TensorFlow is a powerful deep-learning framework that allows developers to build and deploy machine-learning models on various platforms, including mobile devices. TensorFlow offers several pre-trained models and tools to make developing vision-related tasks for mobile applications easier. Some common vision tasks in mobile using TensorFlow include image classification, object detection, image segmentation, and style transfer.

-

TensorFlow Lite:

- TensorFlow Lite is optimized for on-device inference, making it well-suited for mobile and edge devices.

- It supports various vision-related tasks, including image classification, object detection, image segmentation, and style transfer.

- TensorFlow Lite models are usually smaller and faster than their full TensorFlow counterparts, enabling real-time inference on mobile devices.

-

TensorFlow Lite Model Preparation:

- To use TensorFlow Lite for vision tasks, you need to prepare a TensorFlow model and then convert it to a TensorFlow Lite model using the TensorFlow Lite converter.

- You can train a model from scratch using TensorFlow or use pre-trained models available in the TensorFlow model zoo.

-

Integration in Android App:

-

Once you have the TensorFlow Lite model, integrate it into your Android app.

-

Place the TensorFlow Lite model file (usually with a .tflite extension) in the assets directory of your Android project.

-

Include the TensorFlow Lite dependencies in your app's build.gradle file:

-

-

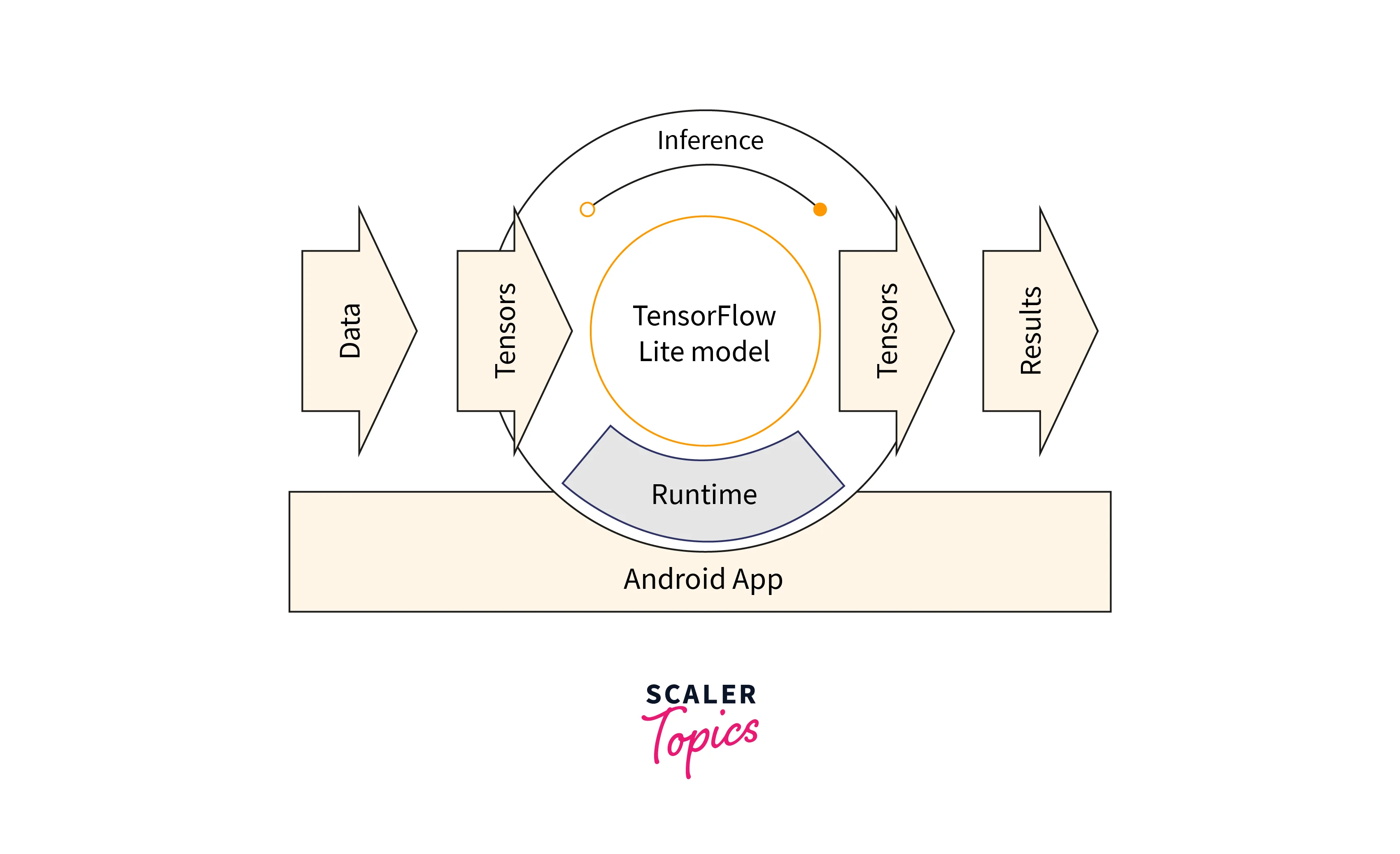

TensorFlow Lite Inference:

- Load the TensorFlow Lite model in your Android app and perform inference on input data.

- The interpreter class can load and run inference on the model. The input and output data will depend on the specific vision task.

-

Performance Optimization:

To optimize the performance of TensorFlow Lite models on Android, consider techniques like model quantization, pruning, compression, and hardware acceleration (e.g., GPU or NNAPI).

By leveraging TensorFlow Lite in Android, you can create powerful mobile applications that can perform vision-related tasks directly on the device without internet connectivity or cloud services. TensorFlow Lite's optimized execution ensures efficient and real-time inference, making it suitable for various vision applications on Android devices.

Performance Optimization and Deployment

While TensorFlow enables developers to run deep learning models on mobile devices, performance optimization and deployment are critical to ensuring smooth and efficient execution on resource-constrained devices. Some techniques and considerations for optimizing and deploying TensorFlow models on mobile include:

- Model Quantization: Reducing the precision of model weights and activations (e.g., from 32-bit floating-point to 8-bit fixed-point) to reduce memory footprint and improve inference speed.
- Model Pruning: Removing unnecessary connections or filters in the model to reduce the number of parameters and improve inference speed.
- Model Compression: Employing techniques like quantization-aware training, weight sharing, or knowledge distillation to create smaller models without significant loss in accuracy.
- Hardware Acceleration: Utilizing hardware-specific optimizations like TensorFlow Lite GPU delegate, Neural Network API (NNAPI), or Metal delegate for iOS to leverage hardware acceleration for inference.
- Model Splitting: Partitioning a large model into smaller sub-models and loading them dynamically as needed to save memory.
- TensorFlow Lite: Using TensorFlow Lite, which is a lightweight version of TensorFlow specifically designed for mobile and embedded devices. TensorFlow Lite provides a set of tools and libraries for deploying machine learning models on mobile platforms efficiently.
- Multi-Threaded Inference: Running inference on multiple threads to fully utilize the CPU resources and improve overall inference speed.
- Data Preprocessing: Optimizing data preprocessing steps to reduce the time taken to prepare input data for the model.
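
To make the quantization item in the list above more concrete, here is a minimal sketch of the affine 8-bit mapping it refers to, in which a real value is approximated as (q - zeroPoint) * scale. The class Int8Quantizer and its method names are hypothetical, and the sketch derives scale and zero point from a known value range, which is only one of several ways to choose them. In practice the converter performs this step for you. The code only illustrates the arithmetic and why storing 8-bit instead of 32-bit values cuts weight storage to roughly a quarter.

```java
/** Hypothetical illustration of affine int8 quantization: real = (q - zeroPoint) * scale. */
final class Int8Quantizer {
    private final float scale;
    private final int zeroPoint;

    private Int8Quantizer(float scale, int zeroPoint) {
        this.scale = scale;
        this.zeroPoint = zeroPoint;
    }

    /** Chooses scale and zero point so that [min, max] spans the int8 range [-128, 127]. */
    static Int8Quantizer forRange(float min, float max) {
        if (!(max > min)) {
            throw new IllegalArgumentException("max must be greater than min");
        }
        float scale = (max - min) / 255.0f;
        int zeroPoint = Math.round(-128.0f - min / scale);
        return new Int8Quantizer(scale, zeroPoint);
    }

    /** Maps a float to its nearest int8 code, clamping values outside the range. */
    byte quantize(float value) {
        int q = Math.round(value / scale) + zeroPoint;
        return (byte) Math.max(-128, Math.min(127, q));
    }

    /** Maps an int8 code back to the float it approximates. */
    float dequantize(byte q) {
        return (q - zeroPoint) * scale;
    }

    float scale() {
        return scale;
    }

    int zeroPoint() {
        return zeroPoint;
    }

    public static void main(String[] args) {
        Int8Quantizer quantizer = forRange(-1.0f, 1.0f);
        byte code = quantizer.quantize(0.5f);
        System.out.println("0.5 is stored as " + code
                + " and read back as " + quantizer.dequantize(code));
    }
}
```

The round trip is lossy: a value inside the range comes back with an error of at most about half of one scale step, which is the accuracy cost traded for the smaller footprint.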

Limitations

While TensorFlow for mobile vision tasks is powerful, customizable, and user-friendly, there are some limitations to consider:

- Limited Hardware Resources: Mobile devices typically have limited computational power, memory, and battery life, making it challenging to run complex models efficiently.
- Network Connectivity: Deep learning models may require network connectivity to access cloud-based services or models, which may not always be available or reliable.
- Model Size: Larger models may not be practical for deployment on mobile devices due to limited storage capacity.
- Latency: Running deep learning models on mobile may introduce some latency, affecting real-time applications.
- Compatibility: Different mobile devices may have varying levels of support for specific hardware optimizations or features, impacting model performance across devices.
- Privacy and Security: Deploying models on mobile devices may raise privacy and security concerns, especially for applications dealing with sensitive data.

Developers need to strike a balance between model complexity, performance, and resource constraints when deploying TensorFlow models on mobile devices. Careful consideration of the target platform and optimizations can help deliver powerful vision applications while ensuring a smooth user experience on mobile devices.

Conclusion

In summary, TensorFlow for mobile vision tasks presents a multitude of advantages. Its flexibility and power are standout features that empower developers to create cutting-edge applications. Here's why you should consider TensorFlow for your mobile vision projects:

- Customizable Model Architecture: Tailor your models to the specific demands of your vision task, whether it's classification, detection, or recognition.
- Efficient Start with Pre-trained Models: Utilize pre-trained models to kickstart your projects, saving time and computational resources.
- Smooth Deployment with TensorFlow and TensorFlow Lite: Seamlessly optimize models for mobile devices, ensuring efficient and fast inference.
- Real-time On-device Inference: Enable low-latency, privacy-preserving, and offline functionality for a seamless user experience.
- Edge Innovation with TensorFlow: Push the boundaries of mobile vision capabilities at the edge of computation.

These advantages collectively make TensorFlow a compelling choice for your mobile vision endeavors.