Building Your First Neural Network Using TensorFlow

Overview

Neural networks (NNs) are a class of machine learning models inspired by the structure and functioning of the human brain. They are composed of interconnected nodes, known as neurons, organized in layers. Each neuron takes input, applies a mathematical operation, and produces an output that is passed to the next layer. Neural networks learn the patterns and relationships in their input data and use them to make predictions.

Setting up TensorFlow (for Both CPU and GPU)

To set up TensorFlow, you first need to install it on your system. TensorFlow supports both CPU and GPU computations. For CPU-only setups, you can install TensorFlow using pip, a package management system for Python.

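- Install Python: Make sure you have a 64-bit Python 3 installation on your system. Each TensorFlow release supports only a specific range of Python versions, so check the version requirements in the official TensorFlow install guide before installing.

- Install TensorFlow: Open a terminal or command prompt and run pip install tensorflow. The installed package runs on the CPU out of the box.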

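For GPU support, you also need compatible NVIDIA hardware and drivers, plus the CUDA and cuDNN library versions that your TensorFlow release was built against. The install guide lists these for each release and platform. On Linux, recent releases can install the CUDA libraries through pip with pip install tensorflow[and-cuda]. This allows you to leverage the computational power of your GPU for faster training and inference.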

You can verify your TensorFlow installation in a Python interpreter or script: import TensorFlow with import tensorflow as tf, then print tf.__version__.

If TensorFlow is installed correctly, this prints the version number.
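A minimal version of this check, assuming TensorFlow 2.x, is shown below. It also calls tf.config.list_physical_devices("GPU"), which returns an empty list when TensorFlow cannot see a GPU. That makes it a quick test of a GPU setup as well.

```python
import tensorflow as tf

# Print the installed TensorFlow version string
print("TensorFlow version:", tf.__version__)

# List the GPUs TensorFlow can see; an empty list means it will run on the CPU
gpus = tf.config.list_physical_devices("GPU")
print("GPUs available:", gpus)
```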

Understanding Neural Networks

Imagine a neural network as a group of friends who want to learn how to identify different animals. They start with no knowledge but want to learn from examples. To achieve this, they pass around pictures of animals and share their observations with each other.

Here's a basic example:

Let's say we want to build a simple neural network that can distinguish between apples and oranges based on their weight and color. We have the following data:

| Weight (g) | Color | Fruit |
|---|---|---|
| 150 | Red | Apple |
| 120 | Orange | Orange |
| 130 | Red | Apple |
| 140 | Orange | Orange |
| 160 | Red | Apple |

The neural network will learn from this data and try to predict the fruit type (Apple or Orange) based on weight and color.

Step 1: Input Layer

The neural network takes two input features: weight and color.

Step 2: Hidden Layer

Next, we have a hidden layer where the neurons process the input data and learn patterns.

Step 3: Output Layer

Finally, we have an output layer that gives us the predicted fruit type.

Step 4: Learning

The network iteratively adjusts its internal parameters (weights and biases) during training to minimize prediction errors and improve accuracy.

Step 5: Prediction

Once trained, the neural network can make predictions on new data by passing it through the network and obtaining the output.

For instance, if we provide the neural network with a new example: Weight = 155 grams and Color = Red, it might predict that the fruit is an Apple.
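The five steps can be sketched in plain Python. This is a deliberate simplification, not the network described above. It drops the hidden layer and trains a single sigmoid neuron (logistic regression) with gradient descent. The numeric encoding (Red = 1, Orange = 0, Apple = 1) and the weight-scaling constants are assumptions chosen for illustration.

```python
import math

# The fruit table as (weight in grams, color, fruit)
DATA = [(150, "Red", "Apple"), (120, "Orange", "Orange"), (130, "Red", "Apple"),
        (140, "Orange", "Orange"), (160, "Red", "Apple")]

def encode(weight, color):
    # Step 1 (input layer): two numeric features. Weight is centered on 140 g and
    # divided by 20 (assumed constants) so its range is similar to the 0/1 color.
    return [(weight - 140) / 20, 1.0 if color == "Red" else 0.0]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(params, x):
    # Steps 2-3 collapsed into one neuron: weighted sum plus bias, then sigmoid
    w1, w2, b = params
    return sigmoid(w1 * x[0] + w2 * x[1] + b)

def train(epochs=2000, lr=0.5):
    # Step 4 (learning): full-batch gradient descent on mean binary cross-entropy
    xs = [encode(weight, color) for weight, color, _ in DATA]
    ys = [1.0 if fruit == "Apple" else 0.0 for _, _, fruit in DATA]
    params = [0.0, 0.0, 0.0]
    n = len(xs)
    for _ in range(epochs):
        grads = [0.0, 0.0, 0.0]
        for x, y in zip(xs, ys):
            err = forward(params, x) - y  # d(loss)/d(pre-activation)
            grads[0] += err * x[0] / n
            grads[1] += err * x[1] / n
            grads[2] += err / n
        # Move each parameter a small step against its gradient
        params = [p - lr * g for p, g in zip(params, grads)]
    return params

def predict(params, weight, color):
    # Step 5 (prediction): a probability of at least 0.5 means "Apple"
    return "Apple" if forward(params, encode(weight, color)) >= 0.5 else "Orange"

params = train()
print(predict(params, 155, "Red"))  # prints: Apple
```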

This is a basic overview of neural networks and how they can learn to solve simple tasks. As tasks become more complex, neural networks can be expanded with more layers and neurons to learn intricate patterns and make more accurate predictions.

Neural networks consist of interconnected units called neurons, organized into layers, and use activation functions to introduce non-linearity.

- Neurons: Neurons are the basic building blocks of a neural network. Each neuron takes input, processes it, and produces an output. These outputs are then passed to other neurons in the network, forming connections that allow the network to learn patterns and relationships in the data.

- Layers: Neurons are grouped into layers. A neural network typically has an input layer where data is fed in, one or more hidden layers where intermediate processing occurs, and an output layer where the final predictions are produced. Each time the network makes a prediction, information flows forward from the input layer through the hidden layers to the output layer. During training, the prediction error is then propagated backward through the same layers to adjust the weights.

- Activation Functions: Activation functions introduce non-linearity to the neural network, allowing it to model complex relationships in data. Each neuron first computes a weighted sum of its inputs. It then applies an activation function to the result before passing it to the next layer. Common activation functions include ReLU (Rectified Linear Unit) and Sigmoid.
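To make these three ideas concrete, here is a plain-Python sketch of a single neuron (a weighted sum plus bias, followed by an activation) and of a layer built from several neurons. The function names and numbers are illustrative and are not part of TensorFlow. A tf.keras Dense layer performs the same computation, activation(inputs · kernel + bias), on whole batches at once.

```python
import math

def relu(z):
    # ReLU passes positive values through and clips negatives to 0
    return max(0.0, z)

def sigmoid(z):
    # Sigmoid squashes any real number into the range (0, 1)
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs, weights, bias, activation):
    # Weighted sum of the inputs plus a bias, followed by a non-linear activation
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return activation(z)

def dense_layer(inputs, weight_rows, biases, activation):
    # A layer is several neurons that all read the same inputs
    return [neuron(inputs, w, b, activation) for w, b in zip(weight_rows, biases)]

out = dense_layer([1.0, 2.0], [[0.5, -0.25], [-1.0, 0.5]], [0.1, 0.0], relu)
print(out)  # [0.1, 0.0]
```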

Data Preparation

Data preparation is an important step before training a neural network. It involves collecting the necessary data, cleaning it by handling missing values and outliers, splitting it into training, validation, and testing sets, encoding it into a suitable format for the neural network, and optionally augmenting the data to increase its diversity. These steps ensure that the data is ready to be used effectively in training the neural network.
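The splitting and scaling steps can be sketched in plain Python. The helper names and the 80/10/10 split are assumptions for illustration. The scaling bounds are computed on the training split only, so no information from the validation or test sets leaks into training.

```python
import random

def train_val_test_split(items, val_frac=0.1, test_frac=0.1, seed=0):
    # Shuffle a copy so the split is random but reproducible
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_test = int(len(shuffled) * test_frac)
    n_val = int(len(shuffled) * val_frac)
    test = shuffled[:n_test]
    val = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]
    return train, val, test

def min_max_scale(values, lo, hi):
    # Map values into [0, 1] using bounds taken from the training set
    span = (hi - lo) or 1.0
    return [(v - lo) / span for v in values]

data = list(range(100))
train, val, test = train_val_test_split(data)
lo, hi = min(train), max(train)
train_scaled = min_max_scale(train, lo, hi)
val_scaled = min_max_scale(val, lo, hi)  # same bounds for the held-out split
print(len(train), len(val), len(test))  # 80 10 10
```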

This blog provides a fast-paced overview of a complete TensorFlow program using the tf.keras high-level API for building and training models. The key steps are explained as we go along to give you a quick understanding of the process.

Fashion MNIST is a dataset commonly used as a replacement for the classic MNIST dataset in computer vision tasks. It serves as a popular starting point for testing and debugging machine learning programs in the field of computer vision. While the original MNIST dataset consists of images of handwritten digits (0, 1, 2, etc.), Fashion MNIST offers a similar format but focuses on articles of clothing instead.

The Fashion MNIST dataset poses a slightly more challenging problem than MNIST, which makes it useful for testing the performance of algorithms. It contains 60,000 images for training the neural network and 10,000 images for evaluating how accurately the network has learned to classify them. TensorFlow, a popular machine learning framework, gives direct access to the dataset through tf.keras.datasets.fashion_mnist.

To load the Fashion MNIST dataset in TensorFlow, you can use the following code:

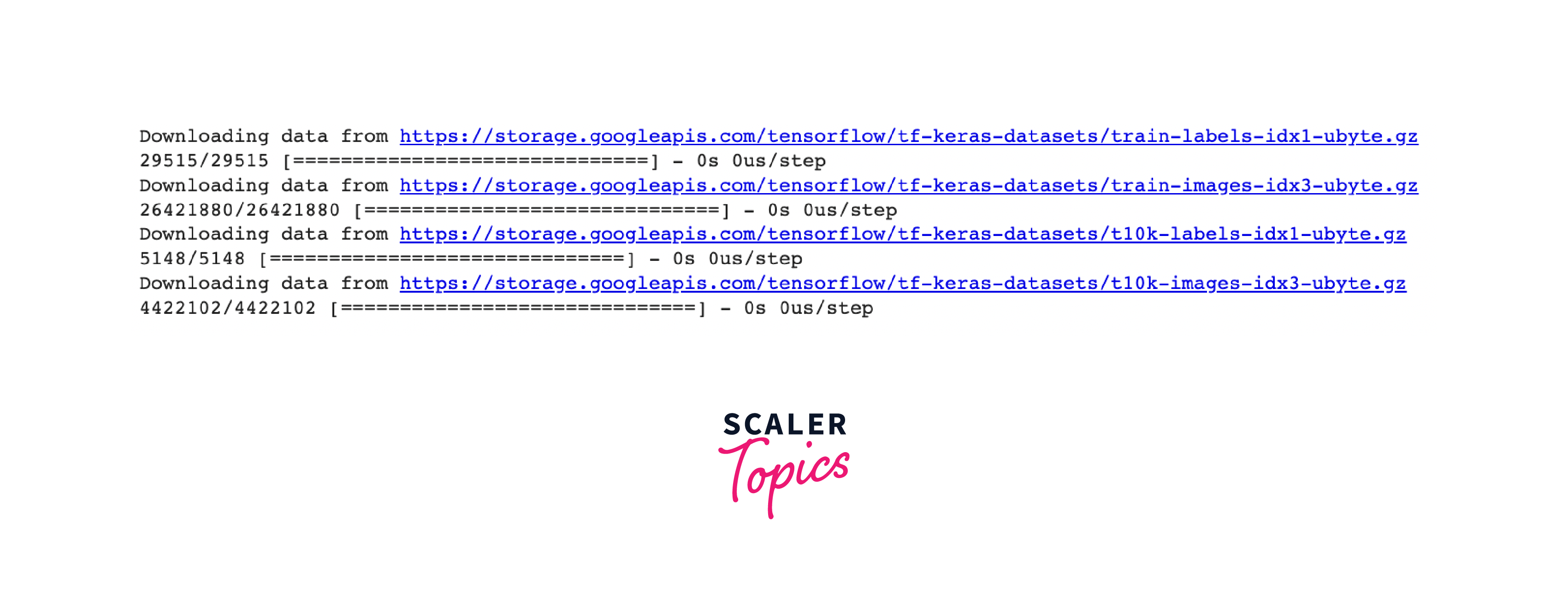

Loading the dataset returns four NumPy arrays:

- train_images and train_labels: These arrays comprise the training set, which is the data used to train the model. The train_images array contains the images, and the train_labels array contains the corresponding labels or categories for each image.

- test_images and test_labels: These arrays represent the test set, which is used to evaluate the model's performance. The test_images array holds the test images, and the test_labels array contains the respective labels or categories for each test image.
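Here is a sketch of the loading step, assuming TensorFlow 2.x. load_fashion_mnist is a hypothetical wrapper around tf.keras.datasets.fashion_mnist.load_data(), which downloads the data on first use and caches it locally. CLASS_NAMES gives the clothing category for each integer label from 0 to 9.

```python
import tensorflow as tf

# Readable names for the integer labels 0-9, in Fashion MNIST's label order
CLASS_NAMES = ["T-shirt/top", "Trouser", "Pullover", "Dress", "Coat",
               "Sandal", "Shirt", "Sneaker", "Bag", "Ankle boot"]

def load_fashion_mnist():
    # Hypothetical helper; load_data() downloads the files on first use
    (train_images, train_labels), (test_images, test_labels) = (
        tf.keras.datasets.fashion_mnist.load_data()
    )
    # train_images: (60000, 28, 28) uint8, train_labels: (60000,) uint8
    # test_images:  (10000, 28, 28) uint8, test_labels:  (10000,) uint8
    return (train_images, train_labels), (test_images, test_labels)
```

The call (train_images, train_labels), (test_images, test_labels) = load_fashion_mnist() returns the four arrays described above. CLASS_NAMES[train_labels[0]] turns a label into a readable name.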

Building a Neural Network Model

To build a neural network in TensorFlow, you configure its layers and then compile the model with appropriate settings.

The layers are the fundamental building blocks of a neural network, responsible for extracting meaningful representations from the input data. In TensorFlow, layers are connected to form a sequence that processes the data.

To set up the layers, you define them within a Sequential model, specifying the type and parameters of each layer.

Step 1: Import the necessary libraries

Step 2: Prepare your data

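Ensure the data is properly preprocessed and split into training and testing sets. Neural networks work best with normalized data, so scaling features to a similar range is recommended. We have already loaded the Fashion MNIST dataset, which comes pre-split into training and test sets. Its pixel values range from 0 to 255 and are usually scaled to the 0 to 1 range before training.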

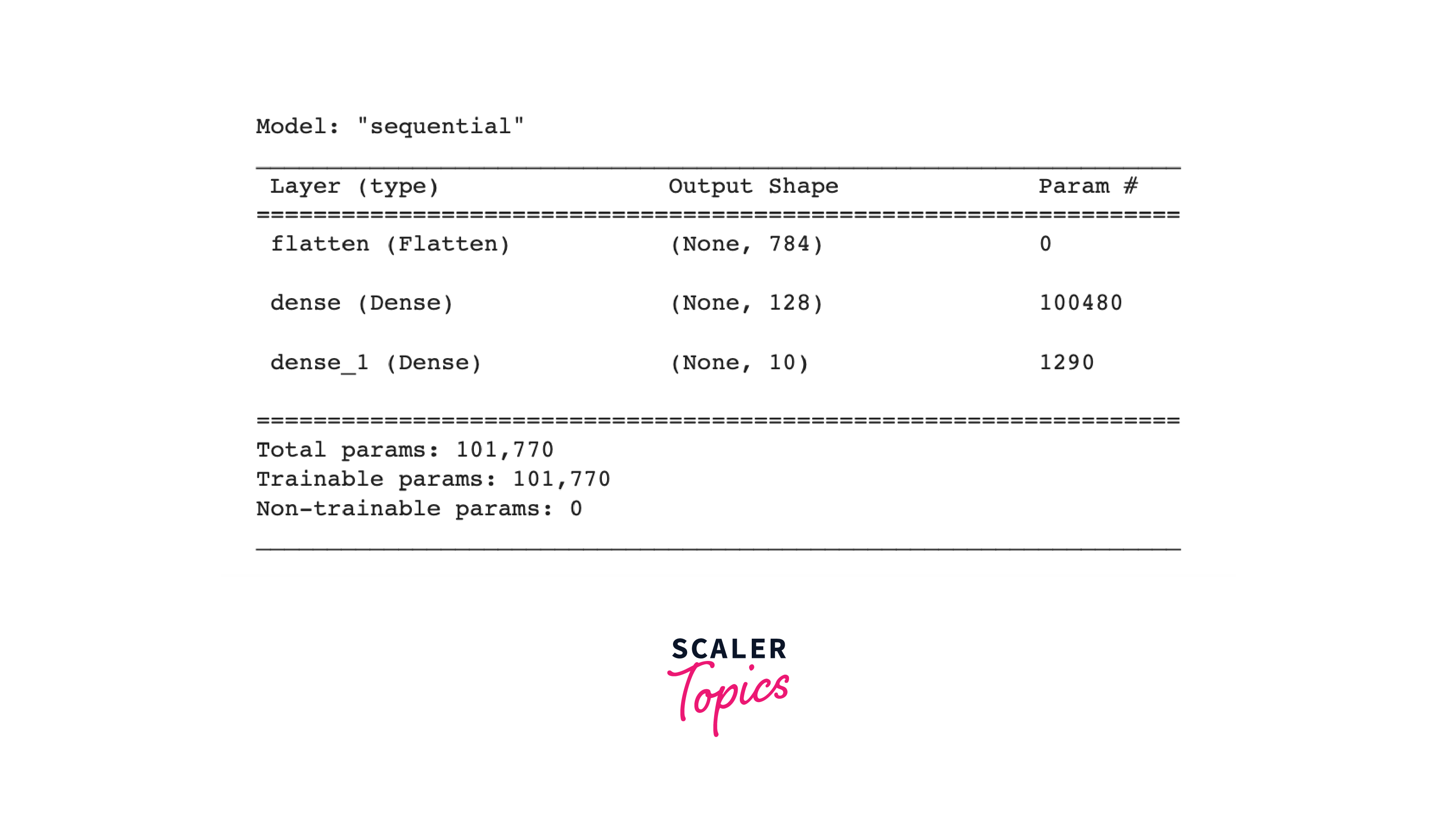
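Here is a minimal normalization step. It assumes the arrays come from tf.keras.datasets.fashion_mnist as uint8 values in [0, 255]. normalize_images is a hypothetical helper name.

```python
import numpy as np

def normalize_images(images):
    # Fashion MNIST pixels are integers in [0, 255]; scale them to floats in [0, 1]
    return images.astype("float32") / 255.0

demo = np.array([[0, 51, 255]], dtype=np.uint8)
scaled = normalize_images(demo)
print(scaled)
```

Apply it to both train_images and test_images so the model sees the same scale at training and test time.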

Step 3: Create a Sequential model and add layers to it

The model is constructed with three layers. The first layer, Flatten, transforms each input image from a 2D array (28x28 pixels) into a 1D array (784 pixels). This layer only reshapes the pixels and has no learnable parameters.

The next two layers are Dense layers, also known as fully connected layers. The first Dense layer has 128 neurons and applies the Rectified Linear Unit (ReLU) activation function. The second Dense layer has 10 neurons, one for each of the 10 classes in the Fashion MNIST dataset.

After setting up the layers, you need to compile the model to specify additional settings for training. During compilation, you define the following:

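Loss function: This measures how far the model's predictions are from the true labels during training; minimizing it steers the model toward better predictions. In the example, SparseCategoricalCrossentropy is used as the loss function. It compares the predicted class scores with the true labels, which are stored as integers from 0 to 9. When the last layer has no activation function, it outputs raw scores (logits), and the loss should then be created with from_logits=True.

Optimizer: This determines how the model is updated based on the training data and the calculated loss. The example uses the Adam optimizer, a popular choice.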

Metrics: These are used to monitor the training progress. In this case, the metric selected is accuracy, which measures the fraction of correctly classified images.
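The layer and compile settings described above can be sketched as follows, assuming TensorFlow 2.x with tf.keras. The build_model helper is hypothetical. The from_logits=True setting assumes that the last Dense layer has no activation function, as in this sketch.

```python
import tensorflow as tf

def build_model(num_classes=10):
    """Hypothetical helper: the three-layer model described above, compiled."""
    model = tf.keras.Sequential([
        tf.keras.Input(shape=(28, 28)),                 # one 28x28 grayscale image
        tf.keras.layers.Flatten(),                      # 28x28 -> 784 values, no parameters
        tf.keras.layers.Dense(128, activation="relu"),  # hidden layer
        tf.keras.layers.Dense(num_classes),             # one raw score (logit) per class
    ])
    model.compile(
        optimizer="adam",
        # from_logits=True because the output layer has no softmax activation
        loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),
        metrics=["accuracy"],
    )
    return model

model = build_model()
model.summary()
```

The summary reports 101,770 parameters in total. The first Dense layer accounts for 784 × 128 + 128 of them, and the output layer for 128 × 10 + 10.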

With the model compiled, it is ready for training. You can then proceed with training the model on the Fashion MNIST dataset and evaluating its performance.

Model Training

To train the model, you need to perform the following steps:

Step 1: Feed the training data:

In this step, you provide the training data to the model: the training images and their corresponding labels are passed to the model.fit() method.

Step 2: Model learns associations:

During training, the model learns to associate the images with their respective labels. It adjusts its internal parameters based on the provided training data to improve its predictions.

Step 3: Make predictions on the test set:

After training, you can evaluate the model's performance by asking it to make predictions on a separate test set. Here, the test images are stored in the test_images array.

Step 4: Compare predictions with labels:

To verify the accuracy of the model's predictions, you compare them against the true labels from the test set, stored in the test_labels array.

Training starts when model.fit() is called with the training images (train_images) and their corresponding labels (train_labels). The epochs parameter specifies how many times the model iterates over the entire training dataset.

During training, the optimizer adjusts the model's parameters to reduce the loss, which measures the discrepancy between predicted and true labels. As this discrepancy shrinks, the model makes more accurate predictions.
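The four training steps map onto a few tf.keras calls. To stay self-contained and runnable without a download, this sketch uses random arrays shaped like Fashion MNIST, so the printed accuracy will hover around chance (roughly 10%). With the real, normalized dataset you would pass train_images, train_labels, test_images, and test_labels instead. The epochs=2 and batch_size=32 values are arbitrary choices for the sketch.

```python
import numpy as np
import tensorflow as tf

# Random stand-in data shaped like Fashion MNIST (assumption: not the real dataset)
rng = np.random.default_rng(0)
train_images = rng.random((256, 28, 28), dtype=np.float32)
train_labels = rng.integers(0, 10, size=256)
test_images = rng.random((64, 28, 28), dtype=np.float32)
test_labels = rng.integers(0, 10, size=64)

model = tf.keras.Sequential([
    tf.keras.Input(shape=(28, 28)),
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(128, activation="relu"),
    tf.keras.layers.Dense(10),
])
model.compile(optimizer="adam",
              loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),
              metrics=["accuracy"])

# Steps 1-2: feed the training data; each epoch is one full pass over it
history = model.fit(train_images, train_labels, epochs=2, batch_size=32, verbose=0)
print("training loss per epoch:", history.history["loss"])

# Step 3: predict on the test set; softmax turns the logits into probabilities
logits = model.predict(test_images, verbose=0)
probabilities = tf.nn.softmax(logits).numpy()
predicted_labels = np.argmax(probabilities, axis=1)

# Step 4: compare the predictions with the true labels
accuracy = float(np.mean(predicted_labels == test_labels))
print(f"test accuracy: {accuracy:.3f}")
```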

As the model trains, the loss and accuracy metrics are displayed. This model reaches an accuracy of about 0.84 (or 84%) on the training data.

After training, you can move on to evaluating the model's performance and fine-tuning it, if necessary, to achieve better results.

Evaluating and Fine-tuning the Model

The accuracy on the test dataset is slightly lower than on the training dataset, and this gap is a sign of overfitting. Overfitting occurs when a machine learning model performs better on its training data than on new, unseen inputs. The model has fitted the intricacies and noise of the training data too closely, which reduces its performance on new data.
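Measuring this gap and reacting to it can be sketched as follows. model.evaluate returns the loss and accuracy on a dataset, so calling it on both the training and test sets exposes the gap. The EarlyStopping callback combined with validation_split is one common mitigation, added here for illustration rather than taken from the original code. The data is again random, so the printed numbers carry no meaning.

```python
import numpy as np
import tensorflow as tf

# Random stand-in data shaped like Fashion MNIST (assumption: not the real dataset)
rng = np.random.default_rng(1)
x_train = rng.random((512, 28, 28), dtype=np.float32)
y_train = rng.integers(0, 10, size=512)
x_test = rng.random((128, 28, 28), dtype=np.float32)
y_test = rng.integers(0, 10, size=128)

model = tf.keras.Sequential([
    tf.keras.Input(shape=(28, 28)),
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(128, activation="relu"),
    tf.keras.layers.Dense(10),
])
model.compile(optimizer="adam",
              loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),
              metrics=["accuracy"])

# Mitigation: hold out 20% of the training data and stop once validation loss
# has not improved for 2 epochs, restoring the best weights seen so far
early_stop = tf.keras.callbacks.EarlyStopping(
    monitor="val_loss", patience=2, restore_best_weights=True)
history = model.fit(x_train, y_train, epochs=20, validation_split=0.2,
                    callbacks=[early_stop], verbose=0)

# Evaluation: compare accuracy on seen (training) and unseen (test) data
train_loss, train_acc = model.evaluate(x_train, y_train, verbose=0)
test_loss, test_acc = model.evaluate(x_test, y_test, verbose=0)
print(f"epochs run: {len(history.history['loss'])}")
print(f"train accuracy: {train_acc:.3f}, test accuracy: {test_acc:.3f}")
```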

Fine-Tuning the Model

Fine-tuning in TensorFlow refers to the process of taking a pre-trained model and adapting it to a new, specific task or dataset. It is a common technique used in transfer learning, where the knowledge gained from a source task or dataset is leveraged to improve performance on a target task or dataset.

The general steps involved in fine-tuning a model in TensorFlow are as follows:

Step 1: Selecting a pre-trained model:

Choose a pre-trained model that has been trained on a large and relevant dataset.

Step 2: Modifying the model:

Remove the last layer(s) of the pre-trained model, as they are typically task-specific. These layers are replaced with new layers that are specific to the target task.

Step 3: Freezing the base layers:

Freeze the weights of the base layers in the pre-trained model to prevent them from being updated during training. This allows the model to retain the learned features from the source task while only updating the weights of the newly added layers.

Step 4: Defining the loss function:

Specify a suitable loss function for the target task, depending on whether it is a classification, regression, or other problem.

Step 5: Training the model:

Initialize the weights of the new layers randomly and train the model using the target dataset. During training, only the weights of the new layers are updated, while the base layers remain frozen.

Step 6: Unfreezing and fine-tuning:

Once the new layers have been trained for a sufficient number of epochs, gradually unfreeze some of the base layers and continue training the model with a lower learning rate. This allows the model to fine-tune the learned features to better align with the target task.

Step 7: Evaluating the model:

After training is complete, evaluate the performance of the fine-tuned model on a validation or test dataset to assess its effectiveness.
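The seven steps can be sketched with tf.keras. To keep the sketch runnable offline, a small, freshly initialized model stands in for the pre-trained base. In practice you would load a real pre-trained model, for example one from tf.keras.applications. The 3-class target task, the random data, and the learning rates 1e-3 and 1e-5 are all assumptions.

```python
import numpy as np
import tensorflow as tf

rng = np.random.default_rng(0)

# Step 1 (stand-in): a real workflow would load a model trained on a large dataset.
# This freshly initialized one only lets the sketch run offline.
base = tf.keras.Sequential([
    tf.keras.Input(shape=(28, 28)),
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(128, activation="relu"),
], name="base")

# Step 3: freeze the base so its weights are not updated
base.trainable = False

# Step 2: a new task-specific head for a hypothetical 3-class target task
num_new_classes = 3
inputs = tf.keras.Input(shape=(28, 28))
features = base(inputs, training=False)
outputs = tf.keras.layers.Dense(num_new_classes)(features)  # randomly initialized
model = tf.keras.Model(inputs, outputs)

# Random stand-in data for the target task
x_new = rng.random((128, 28, 28), dtype=np.float32)
y_new = rng.integers(0, num_new_classes, size=128)
x_val = rng.random((32, 28, 28), dtype=np.float32)
y_val = rng.integers(0, num_new_classes, size=32)

# Step 4: a loss for integer class labels
loss_fn = tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True)

# Step 5: train; only the new head's kernel and bias are trainable
model.compile(optimizer=tf.keras.optimizers.Adam(1e-3), loss=loss_fn, metrics=["accuracy"])
model.fit(x_new, y_new, epochs=2, verbose=0)
trainable_while_frozen = len(model.trainable_weights)

# Step 6: unfreeze and continue with a much lower learning rate. With a single
# Dense layer in this base, "some of the base layers" means all of it.
# Recompiling makes the change to `trainable` take effect.
base.trainable = True
model.compile(optimizer=tf.keras.optimizers.Adam(1e-5), loss=loss_fn, metrics=["accuracy"])
model.fit(x_new, y_new, epochs=2, verbose=0)
trainable_after_unfreeze = len(model.trainable_weights)

# Step 7: evaluate on held-out data
val_loss, val_acc = model.evaluate(x_val, y_val, verbose=0)
print(trainable_while_frozen, trainable_after_unfreeze)  # 2 4
```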

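The categorical_crossentropy loss expects one-hot encoded labels with the same shape as the model's output (one column per class), so integer labels cause a shape mismatch with it. You can use the to_categorical function from tensorflow.keras.utils to convert your target labels into one-hot encoded format and resolve the mismatch. Alternatively, keep the integer labels and use SparseCategoricalCrossentropy, which accepts them directly.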
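Here is a short example of the conversion, assuming TensorFlow 2.x:

```python
import numpy as np
import tensorflow as tf

labels = np.array([0, 2, 1])  # integer class labels
one_hot = tf.keras.utils.to_categorical(labels, num_classes=3)
print(one_hot)
# Each row has a 1 in its class column. These labels pair with
# loss="categorical_crossentropy"; integer labels pair with SparseCategoricalCrossentropy.
```

After conversion, the labels have the same (samples, classes) shape as the model's output.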

Deploying the Model

TensorFlow offers multiple options for deploying and serving neural network models. Whether you choose to deploy as a standalone application using Flask or Django, utilize TensorFlow Serving for scalable serving, or leverage TensorFlow.js for browser or server-side JavaScript applications, TensorFlow provides a flexible deployment ecosystem to suit various scenarios.

Deploying on TensorFlow.js:

If you want to deploy your model in a web browser or in Node.js, TensorFlow.js provides a JavaScript library for running TensorFlow models directly in the browser or on the server. You can convert your trained model into the TensorFlow.js format with the tensorflowjs_converter tool, which is installed with the tensorflowjs pip package. You can then load the converted model with the TensorFlow.js library and make predictions in JavaScript.

With TensorFlow.js, you can integrate your TensorFlow model into web applications or server-side JavaScript applications.
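Whichever deployment route you choose, it starts from a saved model. Below is a minimal sketch, assuming a recent TensorFlow 2.x release that supports the native .keras format; the file name is arbitrary. A Flask or Django app would load this file with tf.keras.models.load_model. The tensorflowjs_converter tool converts a saved model for TensorFlow.js; check the converter's documentation for the input formats your installed versions accept. TensorFlow Serving expects the SavedModel format rather than a .keras file.

```python
import os
import tempfile

import numpy as np
import tensorflow as tf

# A model with the Fashion MNIST architecture (untrained here; use your trained model)
model = tf.keras.Sequential([
    tf.keras.Input(shape=(28, 28)),
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(128, activation="relu"),
    tf.keras.layers.Dense(10),
])

# Save in the native Keras format; the directory and file name are arbitrary
path = os.path.join(tempfile.mkdtemp(), "fashion_mnist.keras")
model.save(path)

# A serving process (e.g. a Flask or Django app) reloads the file like this
restored = tf.keras.models.load_model(path)

# Check that the reloaded model produces the same outputs
sample = np.random.default_rng(0).random((4, 28, 28), dtype=np.float32)
same = np.allclose(model.predict(sample, verbose=0),
                   restored.predict(sample, verbose=0), atol=1e-6)
print("reloaded model matches:", same)
```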

Conclusion

- TensorFlow provides a robust framework for building and training neural networks.

- It optimizes computations for efficient training and inference on GPUs and TPUs.

- TensorFlow allows for customization and fine-tuning of network architecture, activation functions, and optimization algorithms.

- It offers pre-trained models and transfer learning, enabling faster training and improved performance on new tasks or datasets.

- TensorFlow supports diverse deployment options, including standalone applications, TensorFlow Serving, and TensorFlow.js for web and server-side deployment.