TinyML - Getting Started with TensorFlow Lite for Microcontrollers

Overview

TinyML, short for Tiny Machine Learning, brings machine learning to edge computing by deploying efficient models onto microcontrollers and other resource-limited devices. Model optimization techniques such as quantization and pruning shrink complex models so they fit on constrained hardware. The result is on-device inference: devices can make real-time decisions without relying on cloud resources.

In this blog, we look at how TinyML works, walk through some examples, and survey its applications.

What is TinyML?

TinyML stands for "Tiny Machine Learning": the application of machine learning (ML) techniques on extremely resource-constrained devices, such as microcontrollers and other small embedded systems. These devices often have limited memory, processing power, and energy, which makes traditional machine learning models impractical to run on them.

TinyML aims to bring the power of machine learning to these edge devices, enabling them to perform tasks that require intelligent decision-making without relying on constant connectivity to the cloud or more powerful hardware.

How Does TinyML Work?

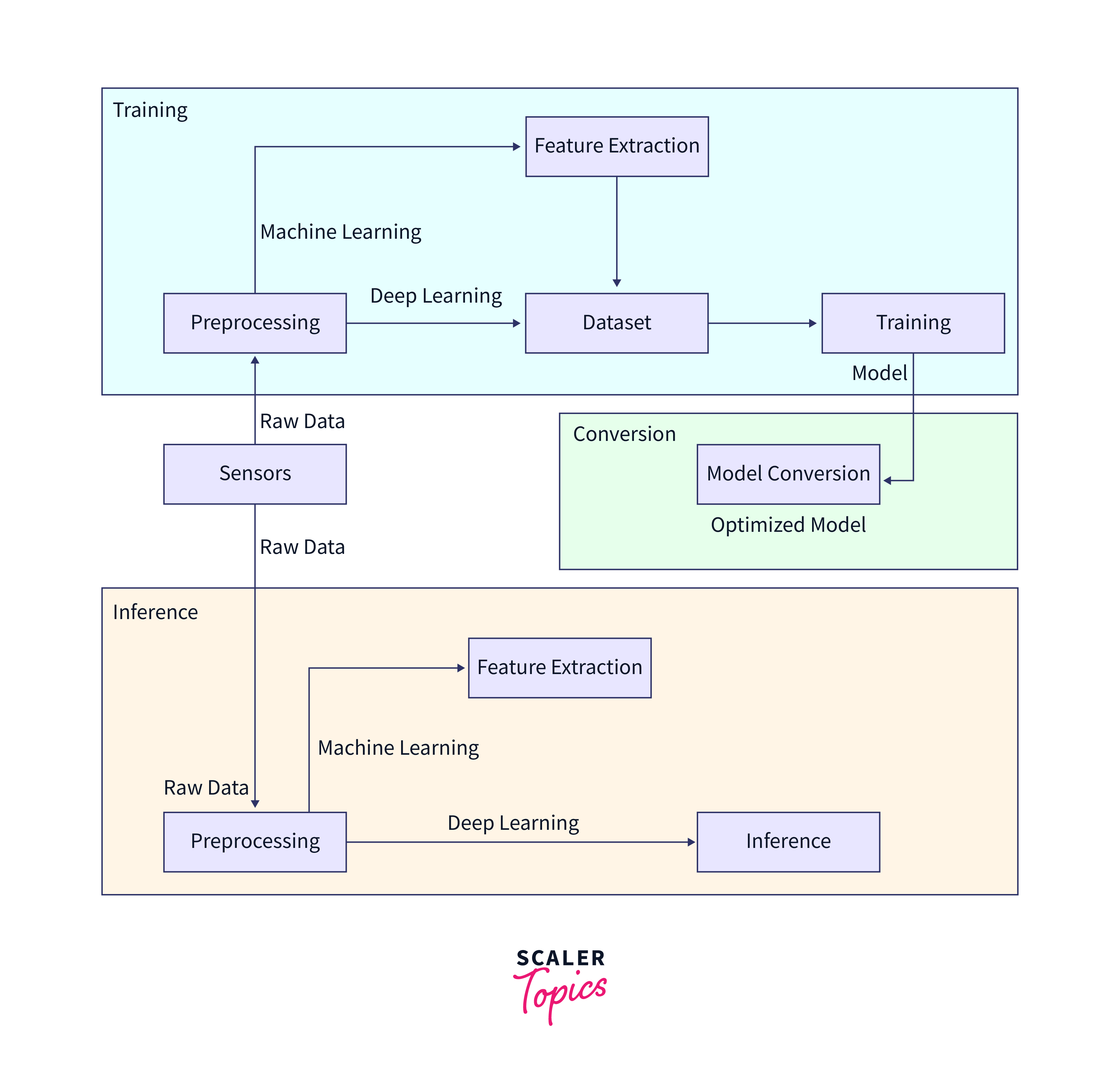

TinyML operates by deploying compact and efficient machine learning models directly onto edge devices. Here's a detailed breakdown of how TinyML works:

Model Optimization

To make machine learning models suitable for deployment on tiny devices, extensive optimization is required. This includes techniques such as:

-

Model Quantization:

Converting model weights from floating-point numbers to fixed-point or integer representations, which reduces memory and computation requirements.

-

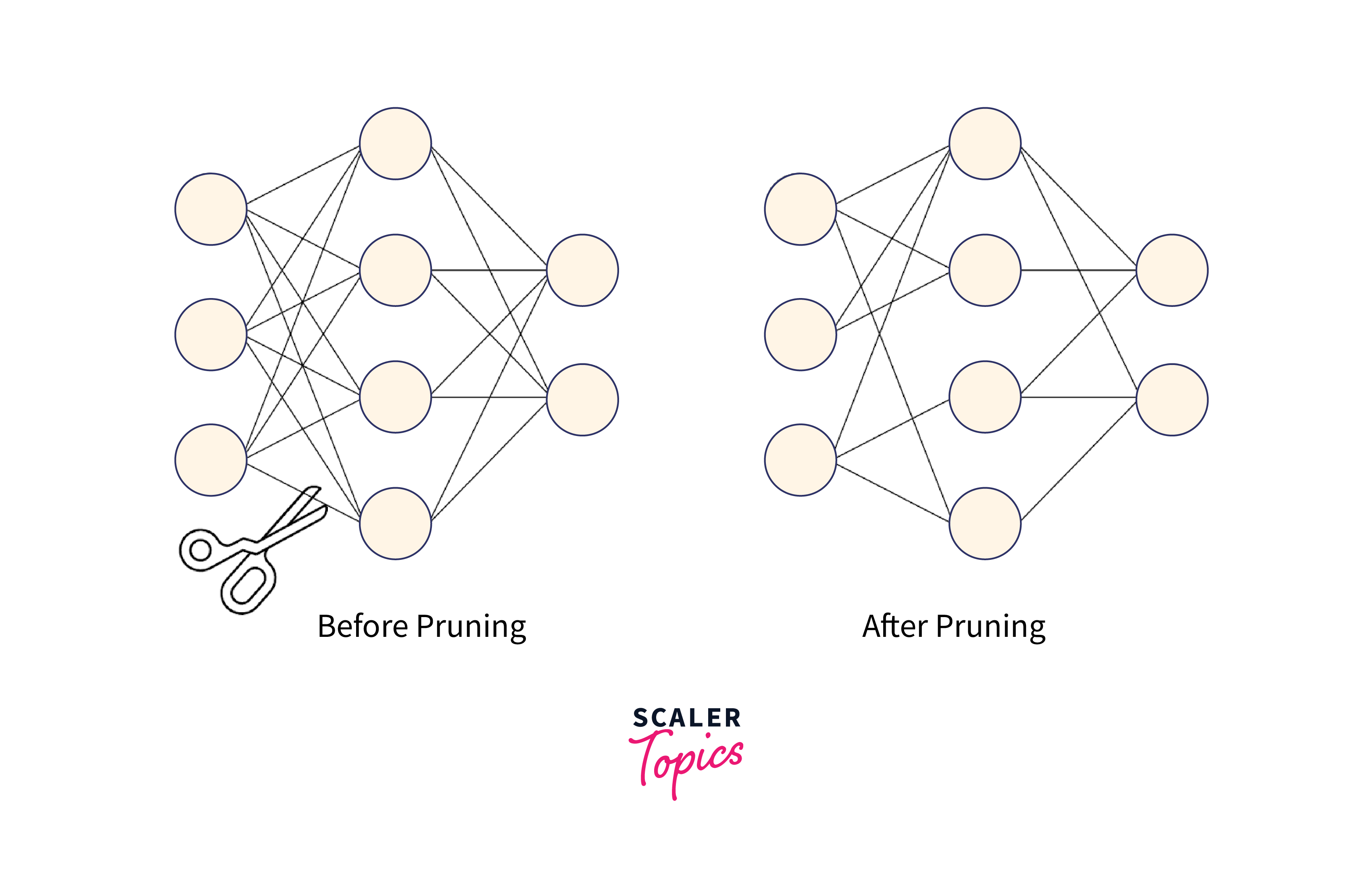

Model Pruning:

Removing unnecessary connections or neurons from neural networks to reduce the model's size and computational demands.

-

Model Compression:

Using techniques like Huffman coding or other compression methods to minimize the storage requirements of the model.
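To make quantization concrete, here is a minimal C++ sketch of affine (asymmetric) int8 quantization, the scheme in which a real value is represented as `real = scale * (q - zero_point)`. The helper names `chooseQuantParams`, `quantize`, and `dequantize` are illustrative, not TensorFlow Lite APIs; in practice the converter computes these parameters for you.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstdint>
#include <vector>

// Affine int8 quantization: real_value = scale * (q - zero_point).
struct QuantParams {
  float scale;
  int32_t zero_point;
};

// Map the range [min, max] of the values (widened to include 0, so that
// 0.0 is represented exactly) onto the int8 range [-128, 127].
QuantParams chooseQuantParams(const std::vector<float>& values) {
  assert(!values.empty());
  const float min_val = std::min(0.0f, *std::min_element(values.begin(), values.end()));
  const float max_val = std::max(0.0f, *std::max_element(values.begin(), values.end()));
  float scale = (max_val - min_val) / 255.0f;
  if (scale == 0.0f) scale = 1.0f;  // all values are zero: any scale works
  const long zp = std::lround(-128.0f - min_val / scale);
  return {scale, static_cast<int32_t>(std::max(-128L, std::min(127L, zp)))};
}

int8_t quantize(float value, QuantParams p) {
  const long q = std::lround(value / p.scale) + p.zero_point;
  return static_cast<int8_t>(std::max(-128L, std::min(127L, q)));  // saturate
}

float dequantize(int8_t q, QuantParams p) {
  return p.scale * static_cast<float>(q - p.zero_point);
}
```

Each weight now occupies 1 byte instead of the 4 bytes of a 32-bit float, and dequantizing recovers it to within roughly one quantization step (`scale`).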

Hardware Compatibility

TinyML models need to be compatible with the hardware constraints of the target device. This involves considerations like:

- Memory Constraints: Ensuring that the model's memory footprint fits within the limited flash and RAM available on the device. On microcontrollers, the model's weights are typically stored in flash, while activations and working buffers must fit in RAM.

- Computational Power: Creating models that can execute efficiently on the device's low-power processors without causing significant delays.

- Energy Efficiency: Designing models that consume minimal energy, as battery life is a crucial concern for many edge devices.
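A quick back-of-the-envelope check helps with the memory constraint. The C++ sketch below estimates flash and RAM needs for a hypothetical 16-32-16-4 fully connected network and fails to compile (via `static_assert`) if the estimate exceeds hypothetical budgets `kFlashBudgetBytes` and `kRamBudgetBytes`. It assumes int8 weights with int32 biases, as in TensorFlow Lite's full-integer quantization, and ignores interpreter overhead, so treat the numbers as a lower bound.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical fully connected network: 16 inputs -> 32 -> 16 -> 4 outputs.
constexpr std::size_t kLayers[] = {16, 32, 16, 4};
constexpr std::size_t kNumLayers = sizeof(kLayers) / sizeof(kLayers[0]);

// Hypothetical budgets reserved for the model on the target device.
constexpr std::size_t kFlashBudgetBytes = 32 * 1024;
constexpr std::size_t kRamBudgetBytes = 2 * 1024;

// Parameter storage with int8 weights and int32 biases.
constexpr std::size_t modelBytesInt8() {
  std::size_t bytes = 0;
  for (std::size_t i = 0; i + 1 < kNumLayers; ++i) {
    bytes += kLayers[i] * kLayers[i + 1] * 1;  // weight matrix, 1 byte each
    bytes += kLayers[i + 1] * 4;                // bias vector, 4 bytes each
  }
  return bytes;
}

// The same parameters stored as 4-byte floats.
constexpr std::size_t modelBytesFloat32() {
  std::size_t bytes = 0;
  for (std::size_t i = 0; i + 1 < kNumLayers; ++i) {
    bytes += (kLayers[i] * kLayers[i + 1] + kLayers[i + 1]) * 4;
  }
  return bytes;
}

// Layers run one after another, so only one layer's int8 input and output
// activations need to be held in RAM at the same time.
constexpr std::size_t peakActivationBytesInt8() {
  std::size_t peak = 0;
  for (std::size_t i = 0; i + 1 < kNumLayers; ++i) {
    const std::size_t live = kLayers[i] + kLayers[i + 1];
    if (live > peak) peak = live;
  }
  return peak;
}

static_assert(modelBytesInt8() <= kFlashBudgetBytes, "model exceeds flash budget");
static_assert(peakActivationBytesInt8() <= kRamBudgetBytes, "activations exceed RAM budget");
```

For this network the int8 parameters need 1,296 bytes versus 4,560 bytes in float32, about 3.5x smaller rather than 4x because the biases stay 32-bit.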

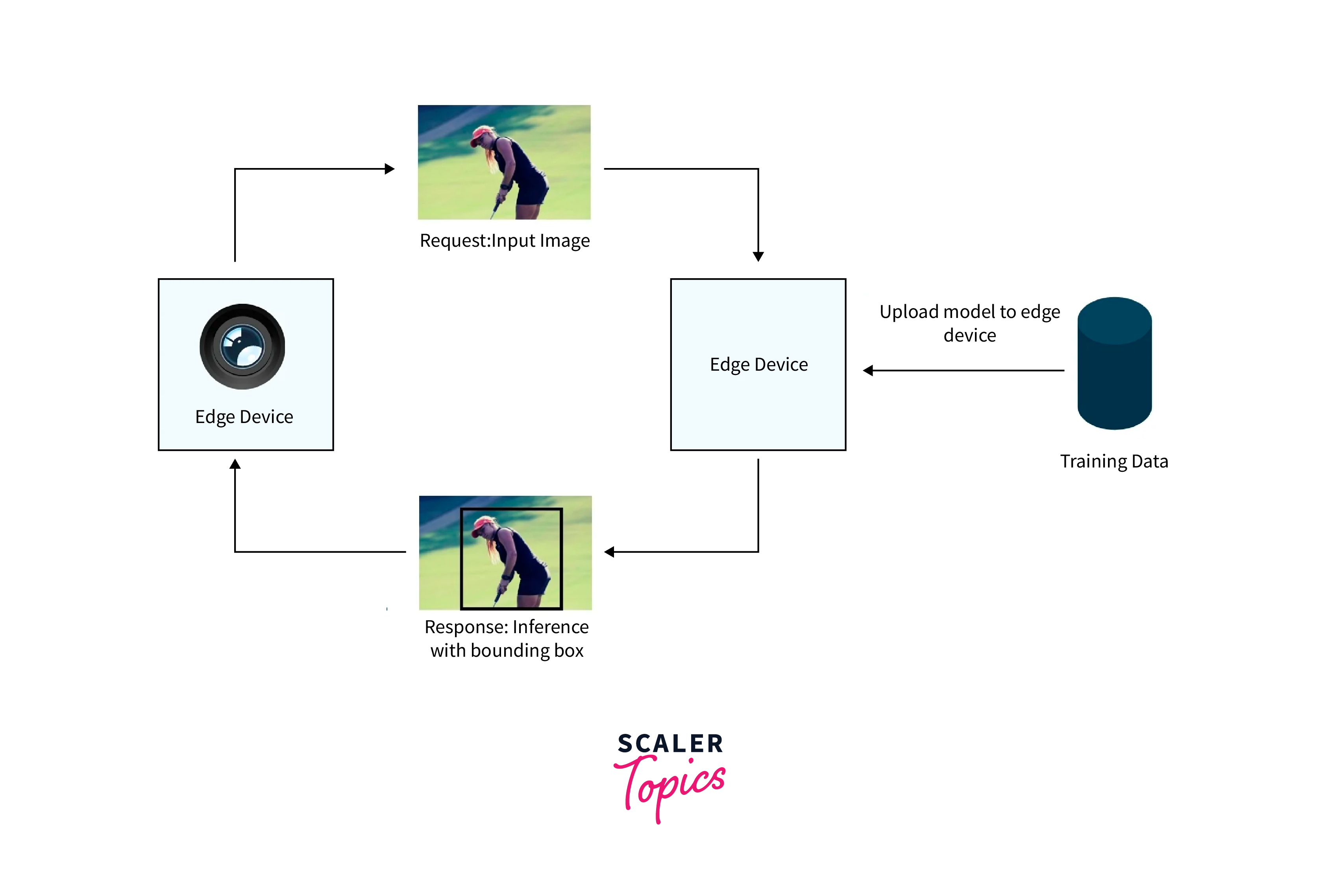

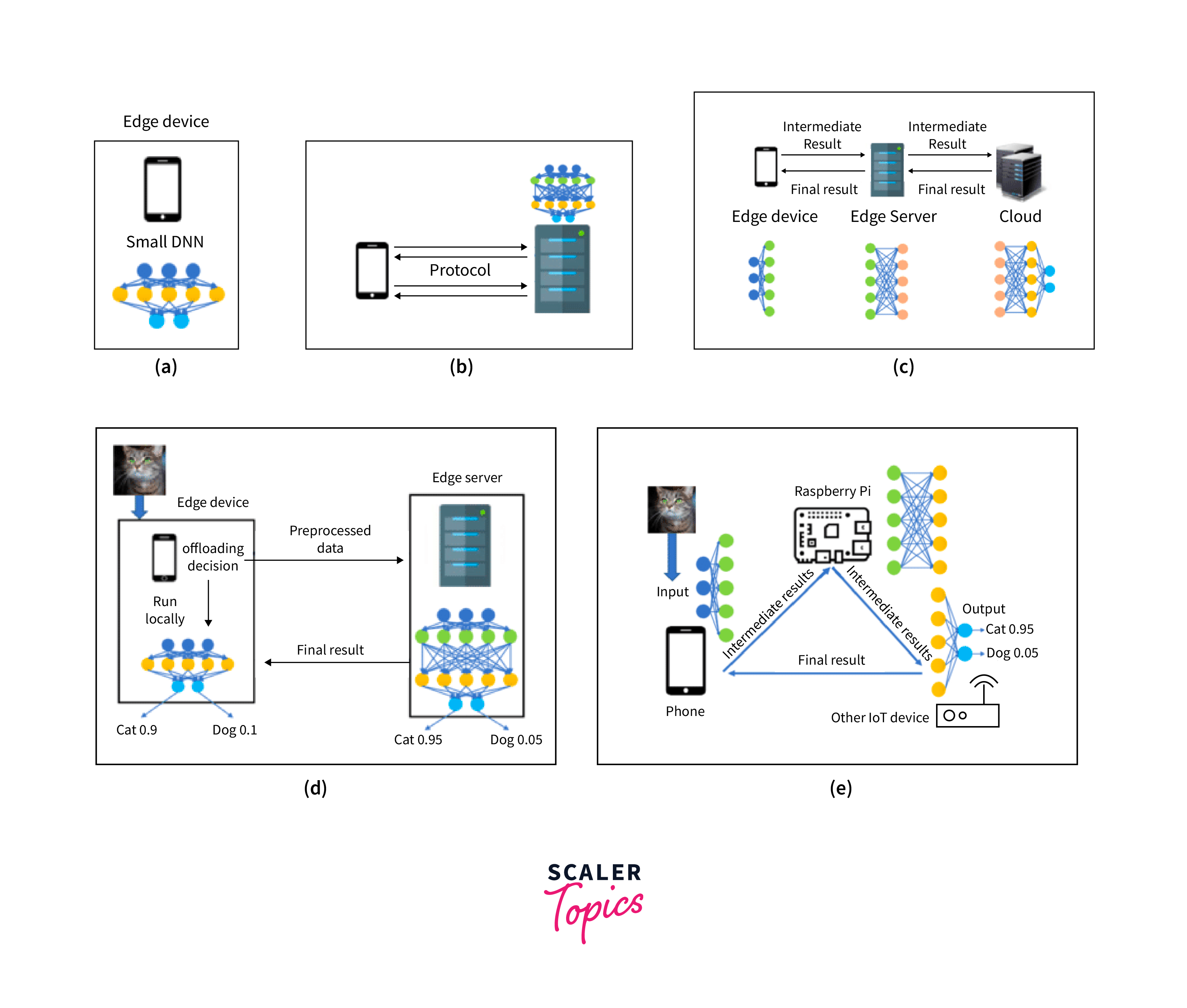

Edge Inference

Once the optimized model is deployed onto the device, it can perform inference directly at the edge, without relying on cloud servers for decision-making. Here's how edge inference works:

- Data Collection: The device gathers input data from its sensors or external sources, which could be images, sensor readings, audio, etc.

- Preprocessing: The input data is preprocessed to be compatible with the model's input format. This might involve resizing images, normalizing data, or other transformations.

- Inference: The preprocessed data is fed into the optimized machine learning model, which performs calculations to produce a prediction or outcome.

- Output: The model's prediction or result is then used for making decisions or controlling the device's actions.
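The four stages of edge inference can be sketched in plain C++ as a short chain of functions. Everything here is hypothetical: the three input features, the normalization statistics (`kMean`, `kStdDev`), and the logistic-regression weights stand in for values you would obtain from training, and the raw readings are passed in rather than read from real sensors.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>

constexpr std::size_t kNumFeatures = 3;
using Features = std::array<float, kNumFeatures>;

// Hypothetical normalization statistics computed on the training set.
constexpr Features kMean = {20.0f, 50.0f, 1000.0f};
constexpr Features kStdDev = {5.0f, 10.0f, 50.0f};

// Hypothetical weights of a tiny logistic-regression model.
constexpr Features kWeights = {0.8f, -0.5f, 0.3f};
constexpr float kBias = -0.2f;

// 1. Data collection happens on the device (ADC, I2C, ...); here the raw
//    readings are passed in. 2. Preprocessing: z-score normalization.
Features preprocess(const Features& raw) {
  Features out{};
  for (std::size_t i = 0; i < kNumFeatures; ++i) {
    assert(kStdDev[i] > 0.0f);
    out[i] = (raw[i] - kMean[i]) / kStdDev[i];
  }
  return out;
}

// 3. Inference: sigmoid(w . x + b).
float infer(const Features& x) {
  float z = kBias;
  for (std::size_t i = 0; i < kNumFeatures; ++i) z += kWeights[i] * x[i];
  return 1.0f / (1.0f + std::exp(-z));
}

// 4. Output: turn the probability into an action decision.
bool shouldTriggerAction(const Features& raw, float threshold = 0.5f) {
  return infer(preprocess(raw)) > threshold;
}
```

On a real device, `shouldTriggerAction` would be called in the main loop with fresh sensor readings, and its result would drive an actuator or a notification.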

How to Get Started with TinyML Using TensorFlow Lite

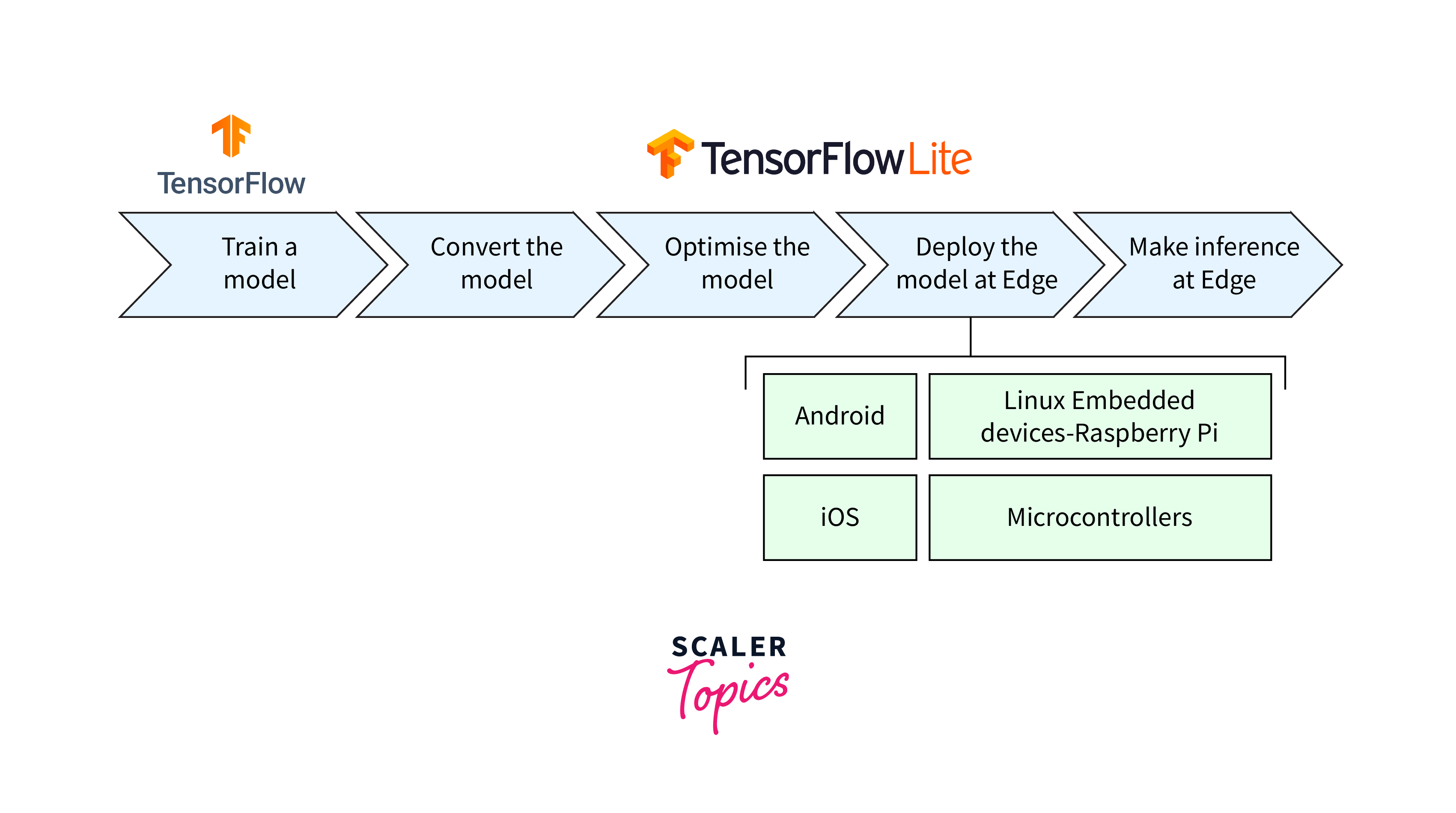

Getting started with TinyML using TensorFlow Lite involves several key steps to enable the deployment of machine learning models on resource-constrained devices. Here's a comprehensive overview to guide you through the process:

Step 1: Understanding TensorFlow Lite:

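- TensorFlow Lite is a lightweight framework developed by Google that allows you to deploy machine learning models on edge devices. For microcontrollers, the relevant runtime is TensorFlow Lite for Microcontrollers (TFLM), a C++ library that runs .tflite models without requiring an operating system or dynamic memory allocation.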

- It offers tools and optimizations specifically designed for efficient inference on constrained hardware.

Step 2: Prerequisites:

- Before you begin, ensure you have a good understanding of machine learning fundamentals and basic programming skills in Python (for training and converting models) and C++ (for the code that runs on the microcontroller).

- Familiarity with TensorFlow or TensorFlow Lite will be beneficial.

Step 3: Setup:

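- Install TensorFlow on your development machine. The TensorFlow Lite converter (`tf.lite.TFLiteConverter`) ships with the standard TensorFlow Python package, so no separate install is needed for conversion. For the device side, you will also need the TensorFlow Lite for Microcontrollers library for your target platform.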

- Consider setting up a virtual environment to manage your project dependencies.

Step 4: Model Selection or Creation:

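- Choose a model architecture suitable for your application. Start with small architectures such as fully connected networks or compact CNNs (for example, a reduced-width MobileNet); a full-size MobileNet is too large for most microcontrollers.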

- Train the model using TensorFlow on a computer with sufficient resources. Ensure the model performs well and meets your accuracy requirements.

Step 5: Model Optimization:

- Prune the model to remove unnecessary connections (low-magnitude weights).

- Quantize the model to convert floating-point weights (and, for fully integer models, activations) to 8-bit integers. Post-training quantization, applied when the model is converted, is the simplest option: it typically makes the model about 4x smaller, usually with only a small loss in accuracy.

Step 6: Conversion to TensorFlow Lite Format:

- Convert the optimized TensorFlow model to the TensorFlow Lite format using the TensorFlow Lite Converter. This generates a .tflite file, which is the deployment-ready model.

Step 7: Model Deployment:

- Integrate the TensorFlow Lite for Microcontrollers library into the firmware for your target hardware platform, such as a microcontroller or another edge device.

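- Import the .tflite model into your application code. Because microcontrollers usually have no file system, the model is typically converted into a C byte array (for example with `xxd -i`) and compiled into the firmware.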
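Once the model is compiled into the firmware as a byte array, a cheap sanity check is to confirm the bytes really are a TensorFlow Lite model. A .tflite file is a FlatBuffer, and FlatBuffers place a 4-byte file identifier right after the 4-byte root offset; the TensorFlow Lite schema declares that identifier as "TFL3". The helper `looksLikeTfliteModel` below is a hypothetical convenience, not part of the TFLM API.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>

// What `xxd -i model.tflite` generates looks roughly like this; the array
// name follows the file name and the bytes come from your own .tflite file:
//   unsigned char model_tflite[] = { /* ...model bytes... */ };
//   unsigned int model_tflite_len = /* number of bytes */;
// Projects commonly add `const` (which on many microcontrollers keeps the
// data in flash rather than RAM) and an alignment specifier such as alignas(16).

// Check for the FlatBuffer file identifier "TFL3" at byte offset 4.
bool looksLikeTfliteModel(const unsigned char* data, std::size_t len) {
  assert(data != nullptr || len == 0);
  if (len < 8) return false;
  return std::memcmp(data + 4, "TFL3", 4) == 0;
}
```

A failed check usually means the wrong file was embedded or the array was truncated, which is easier to diagnose at startup than as a crash during inference.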

Step 8: Inference on the Edge:

- Collect input data from sensors or external sources.

- Preprocess the input data to match the input format expected by the TensorFlow Lite model.

- Run inference using the TensorFlow Lite for Microcontrollers interpreter (`tflite::MicroInterpreter`), which processes the input and produces predictions.
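After inference, an int8 model's output scores usually need a little post-processing. The sketch below, an illustration rather than TFLM code, picks the winning class and converts a score back to a real value with `real = scale * (q - zero_point)`. In TFLM these inputs would typically come from the output tensor (its `data.int8` array and `params.scale` / `params.zero_point` fields); here they are plain arguments so the code runs without the library.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Dequantize one int8 output value: real = scale * (q - zero_point).
float dequantizeOutput(int8_t q, float scale, int32_t zero_point) {
  return scale * static_cast<float>(q - zero_point);
}

// Index of the highest-scoring class. Dequantization with a positive scale
// preserves ordering, so argmax can run directly on the int8 values.
std::size_t argmaxInt8(const int8_t* scores, std::size_t n) {
  assert(scores != nullptr && n > 0);
  std::size_t best = 0;
  for (std::size_t i = 1; i < n; ++i) {
    if (scores[i] > scores[best]) best = i;
  }
  return best;
}
```

Skipping dequantization for the argmax saves floating-point work on cores without an FPU; dequantize only the winning score if you need a confidence value.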

Step 9: Interfacing with Hardware:

- Configure the I/O interfaces and communication protocols required to interface the device with sensors or actuators, depending on your application's requirements.

Step 10: Testing and Iteration:

- Test the deployed model on the target device, considering factors like inference speed, memory usage, and accuracy.

- Fine-tune the model or optimizations if necessary and repeat the conversion and deployment steps.
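For the inference-speed and accuracy checks, a small harness like the one below can wrap a desktop build of your model code. It is a sketch: `averageLatencyMicros` and `accuracy` are hypothetical helpers, and on an Arduino you would time the call with `micros()` instead of `std::chrono`. Desktop timings do not predict microcontroller timings, so confirm speed on the target device.

```cpp
#include <cassert>
#include <chrono>
#include <cstddef>

// Average wall-clock time per call, in microseconds.
template <typename Fn>
double averageLatencyMicros(Fn&& fn, std::size_t iterations) {
  assert(iterations > 0);
  fn();  // warm-up call, not measured
  const auto start = std::chrono::steady_clock::now();
  for (std::size_t i = 0; i < iterations; ++i) fn();
  const auto stop = std::chrono::steady_clock::now();
  const std::chrono::duration<double, std::micro> elapsed = stop - start;
  return elapsed.count() / static_cast<double>(iterations);
}

// Fraction of predictions that match the expected labels.
double accuracy(const int* predicted, const int* expected, std::size_t n) {
  assert(n > 0);
  std::size_t correct = 0;
  for (std::size_t i = 0; i < n; ++i) {
    if (predicted[i] == expected[i]) ++correct;
  }
  return static_cast<double>(correct) / static_cast<double>(n);
}
```

Comparing `accuracy` for the float model and the quantized model on the same test set shows how much the optimizations cost.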

Step 11: Documentation and Community Resources:

- TensorFlow Lite provides detailed documentation and guides for various aspects of the process.

- Explore online communities, forums, and GitHub repositories for code samples, tutorials, and troubleshooting.

Step 12: Advanced Techniques:

- As you become more proficient, explore advanced techniques such as custom operators, quantization-aware training, and knowledge distillation to further improve performance.
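Knowledge distillation trains a small "student" model to match the softened output probabilities of a larger "teacher". The C++ sketch below computes only the soft-target term of the commonly used distillation loss, `T^2 * KL(teacher_T || student_T)`, where both distributions are softmax outputs at temperature `T`. It is a framework-independent illustration; in practice this loss is computed inside TensorFlow during training on your development machine, not on the microcontroller.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Softmax with temperature T; a higher T gives "softer" probabilities.
std::vector<double> softmaxT(const std::vector<double>& logits, double T) {
  assert(!logits.empty() && T > 0.0);
  const double max_logit = *std::max_element(logits.begin(), logits.end());
  std::vector<double> p(logits.size());
  double sum = 0.0;
  for (std::size_t i = 0; i < logits.size(); ++i) {
    p[i] = std::exp((logits[i] - max_logit) / T);  // subtract max for stability
    sum += p[i];
  }
  for (double& v : p) v /= sum;
  return p;
}

// Soft-target distillation term: T^2 * KL(teacher_T || student_T).
double distillationLoss(const std::vector<double>& teacher_logits,
                        const std::vector<double>& student_logits, double T) {
  assert(teacher_logits.size() == student_logits.size());
  const std::vector<double> pt = softmaxT(teacher_logits, T);
  const std::vector<double> ps = softmaxT(student_logits, T);
  double kl = 0.0;
  for (std::size_t i = 0; i < pt.size(); ++i) {
    if (pt[i] > 0.0) kl += pt[i] * std::log(pt[i] / ps[i]);
  }
  return T * T * kl;
}
```

The loss is zero when the student reproduces the teacher's logits and grows as their softened distributions diverge; it is usually combined with the ordinary loss on the true labels.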

Remember that TinyML development involves a learning curve, and it's common to encounter challenges along the way. However, with persistence and the support of the TensorFlow Lite community, you can unlock the potential of machine learning on edge devices and create innovative applications that make use of real-time, on-device intelligence.

Supported Machine Learning Models in TinyML

TinyML aims to deploy machine learning models on resource-constrained devices. Not every model is suitable for such devices, but several model families have proven effective in the TinyML space. Note that TensorFlow Lite for Microcontrollers executes neural networks; classical models such as SVMs, decision trees, k-nearest neighbors, or naive Bayes are usually exported to plain C/C++ code or run with other libraries instead. Here are some of the machine learning models commonly used in TinyML, along with examples:

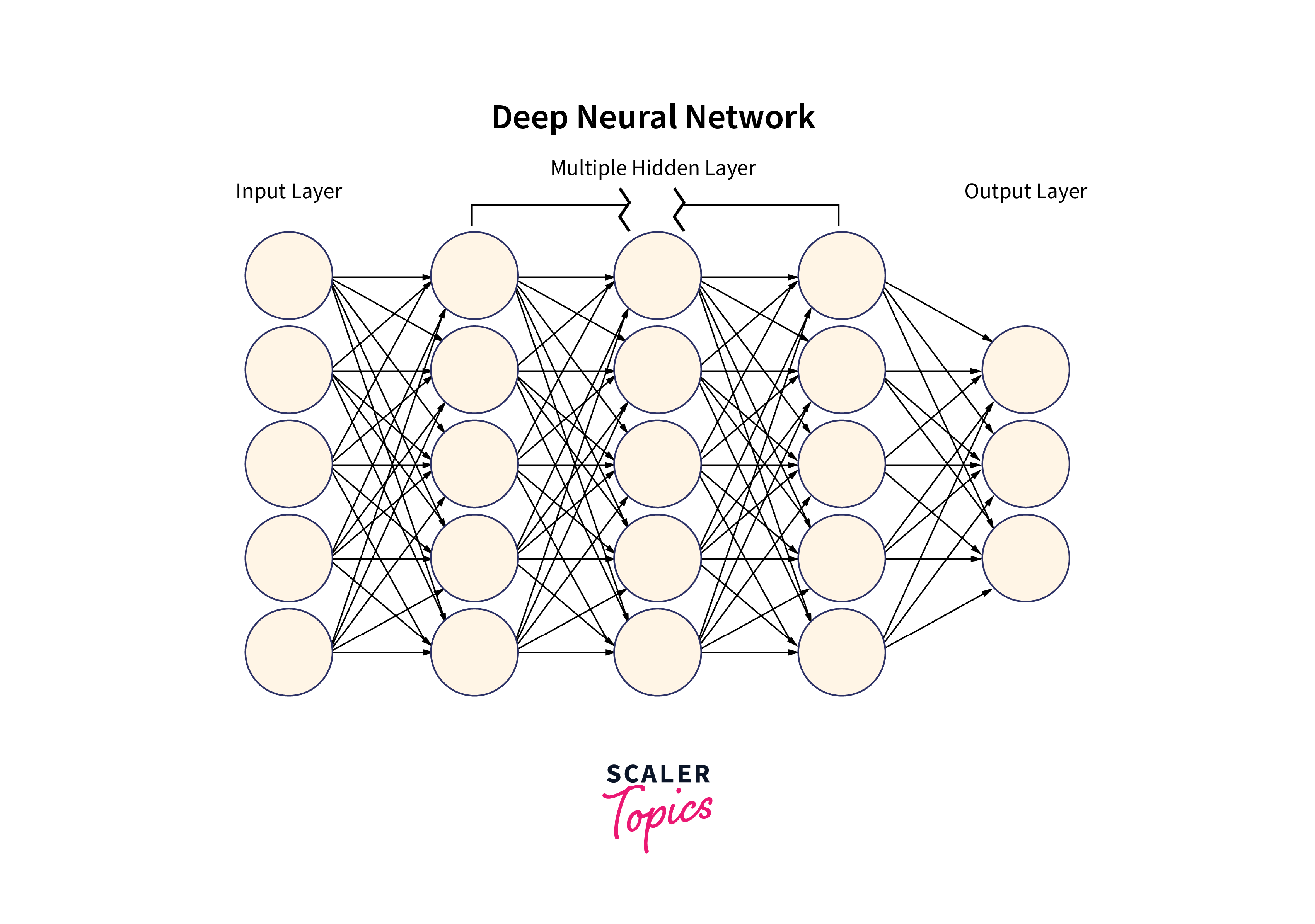

Fully Connected Networks (FCNs)

Fully connected networks, also known as dense networks, consist of layers where each neuron is connected to every neuron in the previous and subsequent layers.

Example:

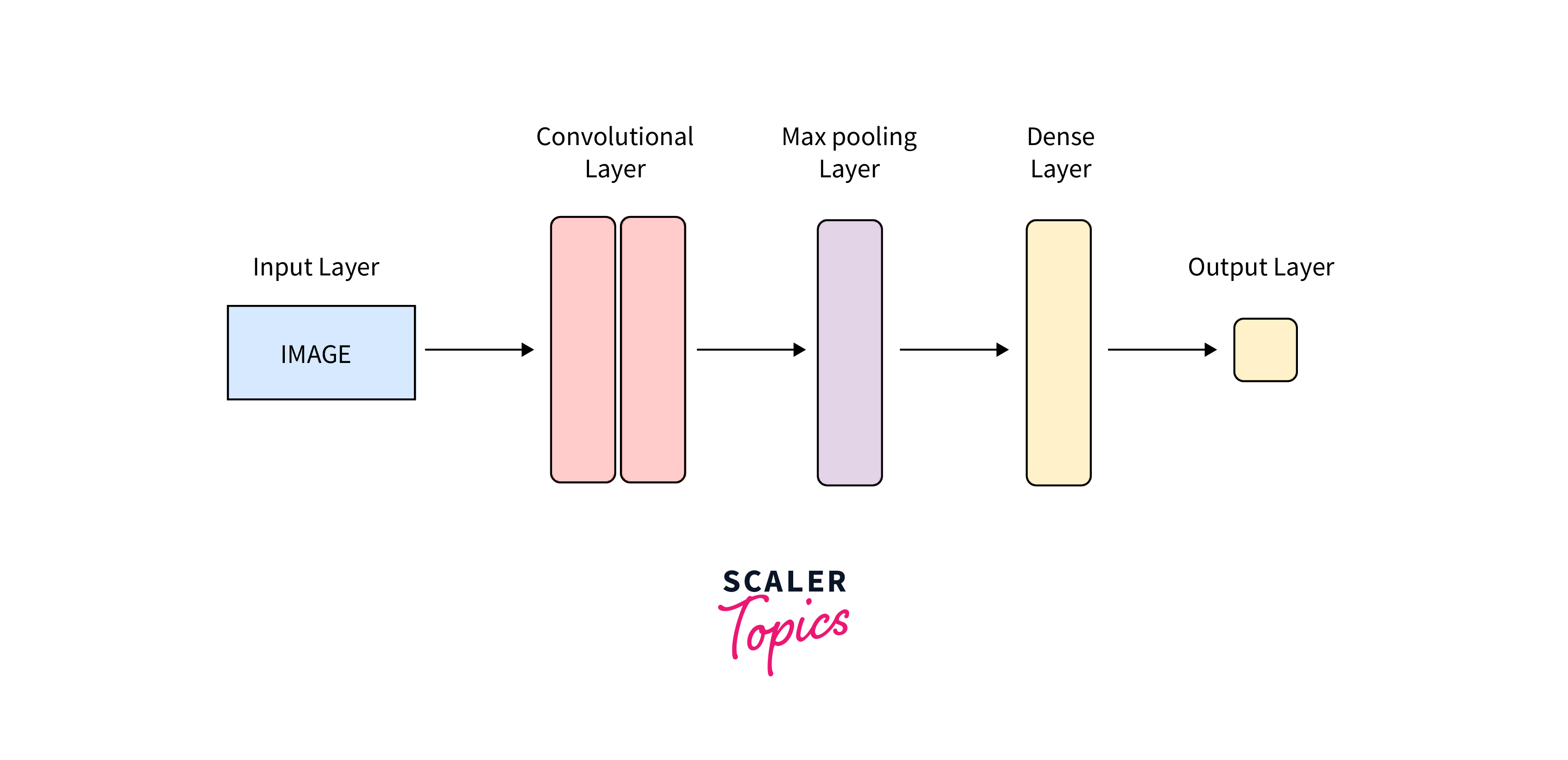
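A dense layer reduces to a matrix-vector product plus a bias, followed by an activation. This minimal C++ sketch of one float layer with optional ReLU shows the computation; `denseForward` and the row-major weight layout are illustrative choices, not a TFLM API.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// One fully connected layer: out[j] = act(bias[j] + sum_i W[j][i] * in[i]).
// Weights are stored row-major: W[j][i] == weights[j * in_size + i].
std::vector<float> denseForward(const std::vector<float>& in,
                                const std::vector<float>& weights,
                                const std::vector<float>& bias, bool relu) {
  const std::size_t in_size = in.size();
  const std::size_t out_size = bias.size();
  assert(weights.size() == in_size * out_size);
  std::vector<float> out(out_size);
  for (std::size_t j = 0; j < out_size; ++j) {
    float acc = bias[j];
    for (std::size_t i = 0; i < in_size; ++i) acc += weights[j * in_size + i] * in[i];
    out[j] = relu ? std::max(0.0f, acc) : acc;  // ReLU or linear activation
  }
  return out;
}
```

A full network chains several such calls, feeding each layer's output into the next and typically leaving the last layer linear.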

Convolutional Neural Networks (CNNs)

CNNs are particularly effective for image and spatial data, leveraging convolutional layers to capture patterns and features.

Example:
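The core operation of a convolutional layer slides a small kernel over the input. Below is a minimal C++ sketch of a single-channel "valid" convolution (stride 1, no padding); real CNN layers add multiple channels, strides, padding, and usually quantized arithmetic. Like deep-learning frameworks, it computes a cross-correlation, that is, the kernel is not flipped.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Single-channel 2D convolution, stride 1, no padding ("valid").
// The image is height x width and the kernel is k x k, both row-major.
std::vector<float> conv2dValid(const std::vector<float>& image, std::size_t height,
                               std::size_t width, const std::vector<float>& kernel,
                               std::size_t k, float bias) {
  assert(image.size() == height * width && kernel.size() == k * k);
  assert(k > 0 && k <= height && k <= width);
  const std::size_t out_h = height - k + 1, out_w = width - k + 1;
  std::vector<float> out(out_h * out_w);
  for (std::size_t y = 0; y < out_h; ++y) {
    for (std::size_t x = 0; x < out_w; ++x) {
      float acc = bias;
      for (std::size_t ky = 0; ky < k; ++ky)
        for (std::size_t kx = 0; kx < k; ++kx)
          acc += image[(y + ky) * width + (x + kx)] * kernel[ky * k + kx];
      out[y * out_w + x] = acc;
    }
  }
  return out;
}
```

With a vertical-edge kernel such as `{-1, 0, 1}` repeated on each row, the output is large only where the window covers a dark-to-bright transition, which is the kind of local pattern a trained CNN learns to detect.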

Recurrent Neural Networks (RNNs)

RNNs are suitable for sequential data, such as time-series or natural language data.

Example:
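An RNN processes a sequence one step at a time while carrying a hidden state forward. This C++ sketch of a simple (Elman) RNN cell, `h_t = tanh(Wx * x_t + Wh * h_prev + b)`, shows the recurrence; the function names are illustrative, and the LSTM or GRU cells often used in practice add gating on top of the same idea.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// One RNN step. Wx is H x X and Wh is H x H, both row-major.
std::vector<float> rnnStep(const std::vector<float>& x, const std::vector<float>& h_prev,
                           const std::vector<float>& Wx, const std::vector<float>& Wh,
                           const std::vector<float>& b) {
  const std::size_t H = h_prev.size(), X = x.size();
  assert(Wx.size() == H * X && Wh.size() == H * H && b.size() == H);
  std::vector<float> h(H);
  for (std::size_t j = 0; j < H; ++j) {
    float acc = b[j];
    for (std::size_t i = 0; i < X; ++i) acc += Wx[j * X + i] * x[i];
    for (std::size_t i = 0; i < H; ++i) acc += Wh[j * H + i] * h_prev[i];
    h[j] = std::tanh(acc);
  }
  return h;
}

// Run the cell over a whole sequence, starting from a zero hidden state.
std::vector<float> rnnRun(const std::vector<std::vector<float>>& sequence,
                          const std::vector<float>& Wx, const std::vector<float>& Wh,
                          const std::vector<float>& b) {
  std::vector<float> h(b.size(), 0.0f);
  for (const std::vector<float>& x : sequence) h = rnnStep(x, h, Wx, Wh, b);
  return h;
}
```

On a microcontroller the same loop runs as each new sensor sample arrives, so only the current hidden state needs to be kept in memory, not the whole sequence.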

Quantized Neural Networks

Quantization converts model weights from floating-point precision to fixed-point or integer precision, reducing memory and computation requirements.

Example:
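Quantized networks do most of their arithmetic in integers. The C++ sketch below runs one int8 fully connected layer in the style of integer-only inference: symmetric int8 weights (zero point 0), an int32 bias, int32 accumulation, and a final rescale to the output's scale and zero point. For readability it rescales with a float multiplier; optimized kernels such as those in TFLM use a fixed-point multiplier and shift instead. All names and parameter values are illustrative.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// int8 fully connected layer. Real values follow real = scale * (q - zero_point);
// the int32 bias is assumed to use scale x_scale * w_scale and zero point 0.
std::vector<int8_t> denseInt8(const std::vector<int8_t>& x, int32_t x_zero_point, float x_scale,
                              const std::vector<int8_t>& w, float w_scale,
                              const std::vector<int32_t>& bias,
                              int32_t y_zero_point, float y_scale) {
  const std::size_t in = x.size(), out = bias.size();
  assert(w.size() == in * out);
  // Rescale factor from the int32 accumulator to the output scale.
  const float multiplier = x_scale * w_scale / y_scale;
  std::vector<int8_t> y(out);
  for (std::size_t j = 0; j < out; ++j) {
    int32_t acc = bias[j];  // int32 accumulator avoids overflow
    for (std::size_t i = 0; i < in; ++i) {
      acc += static_cast<int32_t>(w[j * in + i]) * (static_cast<int32_t>(x[i]) - x_zero_point);
    }
    const long q = std::lround(acc * multiplier) + y_zero_point;
    y[j] = static_cast<int8_t>(std::max(-128L, std::min(127L, q)));  // saturate to int8
  }
  return y;
}
```

For example, inputs `{10, 20}` at scale 0.1 represent 1.0 and 2.0, weights `{5, -5}` at scale 0.2 represent 1.0 and -1.0, and the result -10 at output scale 0.1 represents -1.0, matching the float computation.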

Sparse Neural Networks

Sparse models use techniques like pruning to remove unnecessary connections, reducing model size and computation.

Example:
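Magnitude pruning is the simplest form of this: remove the weights closest to zero. The C++ sketch below zeroes a target fraction of weights with the smallest magnitudes; `pruneByMagnitude` is an illustrative helper, not the TensorFlow Model Optimization Toolkit API, which prunes gradually during training. Zeroed weights only save memory or time if they are stored in a sparse or compressed format, or skipped by the kernel.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Zero out the `sparsity` fraction (0..1) of weights with the smallest
// absolute values. Returns how many weights were set to zero.
std::size_t pruneByMagnitude(std::vector<float>& weights, double sparsity) {
  assert(sparsity >= 0.0 && sparsity <= 1.0);
  const std::size_t to_prune = static_cast<std::size_t>(sparsity * weights.size());
  if (to_prune == 0) return 0;

  // The threshold is the to_prune-th smallest magnitude.
  std::vector<float> mags(weights.size());
  std::transform(weights.begin(), weights.end(), mags.begin(),
                 [](float w) { return std::fabs(w); });
  std::nth_element(mags.begin(), mags.begin() + (to_prune - 1), mags.end());
  const float threshold = mags[to_prune - 1];

  std::size_t pruned = 0;
  for (float& w : weights) {  // first, everything strictly below the threshold
    if (std::fabs(w) < threshold) { w = 0.0f; ++pruned; }
  }
  for (float& w : weights) {  // then ties at the threshold, up to the target
    if (pruned < to_prune && std::fabs(w) == threshold) { w = 0.0f; ++pruned; }
  }
  return pruned;
}
```

Pruning is usually followed by fine-tuning so the remaining weights can compensate for the removed ones.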

Support Vector Machines (SVMs):

SVMs are effective for binary classification tasks, offering a compact representation for decision boundaries.

Example:
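For a linear SVM, on-device inference is just the sign of the decision function `f(x) = w . x + b`, so only the weight vector and bias learned offline need to be stored. This C++ sketch assumes class labels -1 and +1; a kernel SVM would additionally have to store its support vectors, which costs considerably more memory.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Decision function f(x) = w . x + b; its magnitude indicates confidence.
float svmDecision(const std::vector<float>& w, float b, const std::vector<float>& x) {
  assert(w.size() == x.size());
  float f = b;
  for (std::size_t i = 0; i < w.size(); ++i) f += w[i] * x[i];
  return f;
}

// Binary classification: +1 on the positive side of the hyperplane, else -1.
int svmClassify(const std::vector<float>& w, float b, const std::vector<float>& x) {
  return svmDecision(w, b, x) >= 0.0f ? 1 : -1;
}
```

Inference costs one multiply-add per feature, which makes linear SVMs attractive on the smallest microcontrollers.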

Decision Trees and Random Forests

Decision trees and random forests are interpretable models suitable for tasks like classification and regression.

Example:

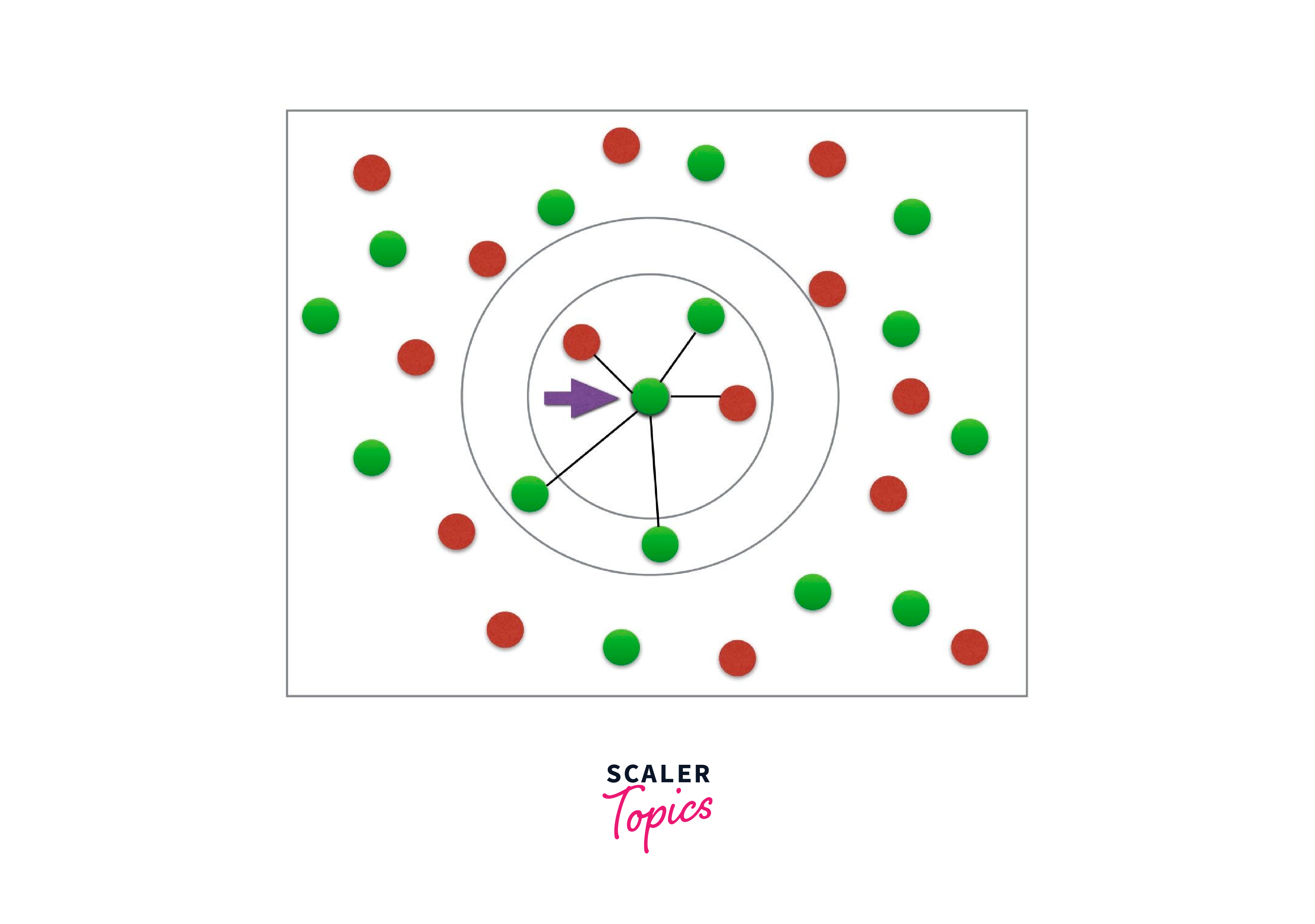
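Trees suit microcontrollers because inference is only a handful of comparisons. This C++ sketch stores a small, hypothetical tree as a flat constant array (convenient for placing in flash) and walks it from the root; a random forest runs several trees and takes a majority vote. The node layout and thresholds are illustrative, not the output of any particular export tool.

```cpp
#include <cassert>
#include <cstddef>

// One node of a decision tree flattened into an array.
struct TreeNode {
  int feature;      // index of the feature to test, or -1 for a leaf
  float threshold;  // go to `left` if x[feature] <= threshold, else `right`
  int left;
  int right;
  int label;        // predicted class (leaves only)
};

// Hypothetical tree: "x[0] <= 25?" at the root, then "x[1] <= 60?".
constexpr TreeNode kTree[] = {
    {0, 25.0f, 1, 2, -1},   // 0: root
    {-1, 0.0f, -1, -1, 0},  // 1: leaf -> class 0
    {1, 60.0f, 3, 4, -1},   // 2: internal node
    {-1, 0.0f, -1, -1, 1},  // 3: leaf -> class 1
    {-1, 0.0f, -1, -1, 2},  // 4: leaf -> class 2
};

int predictTree(const TreeNode* tree, const float* x) {
  int node = 0;
  while (tree[node].feature >= 0) {
    const TreeNode& n = tree[node];
    node = (x[n.feature] <= n.threshold) ? n.left : n.right;
  }
  return tree[node].label;
}

// Random forest: majority vote over several trees (ties go to the lower class).
constexpr int kMaxClasses = 8;

int predictForest(const TreeNode* const* trees, std::size_t num_trees,
                  const float* x, int num_classes) {
  assert(num_trees > 0 && num_classes > 0 && num_classes <= kMaxClasses);
  int votes[kMaxClasses] = {};
  for (std::size_t t = 0; t < num_trees; ++t) {
    const int c = predictTree(trees[t], x);
    assert(c >= 0 && c < num_classes);
    ++votes[c];
  }
  int best = 0;
  for (int c = 1; c < num_classes; ++c) {
    if (votes[c] > votes[best]) best = c;
  }
  return best;
}
```

A fixed-size vote array keeps the forest free of dynamic memory allocation, which matters on devices without a heap.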

K-Nearest Neighbors (KNN)

KNN is a simple instance-based learning algorithm for classification and regression tasks.

Example:
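KNN has no training step beyond storing examples, so the device must keep the training set in memory and compare each query against it. The C++ sketch below classifies a 2-feature query by majority vote among its k nearest stored samples (squared Euclidean distance); the feature count and data layout are illustrative assumptions.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// A stored training example with two features and a class label.
struct Sample {
  std::array<float, 2> x;
  int label;
};

// Majority vote among the k stored samples closest to `query`
// (ties between classes go to the lower label).
int knnClassify(const std::vector<Sample>& train, const std::array<float, 2>& query,
                std::size_t k, int num_classes) {
  assert(k > 0 && k <= train.size() && num_classes > 0);
  std::vector<std::pair<float, int>> dist;  // (squared distance, label)
  dist.reserve(train.size());
  for (const Sample& s : train) {
    const float dx = s.x[0] - query[0];
    const float dy = s.x[1] - query[1];
    dist.emplace_back(dx * dx + dy * dy, s.label);
  }
  const auto kth = dist.begin() + static_cast<std::ptrdiff_t>(k);
  std::partial_sort(dist.begin(), kth, dist.end());  // only the k nearest are sorted
  std::vector<int> votes(static_cast<std::size_t>(num_classes), 0);
  for (auto it = dist.begin(); it != kth; ++it) {
    assert(it->second >= 0 && it->second < num_classes);
    ++votes[static_cast<std::size_t>(it->second)];
  }
  return static_cast<int>(std::max_element(votes.begin(), votes.end()) - votes.begin());
}
```

Because both memory and inference time grow with the number of stored samples, KNN on a microcontroller works best with a small, curated training set.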

Naive Bayes:

Naive Bayes is a probabilistic algorithm suitable for text classification and simple data.

Example:
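Text classification usually relies on a multinomial variant of naive Bayes; for continuous sensor features, a Gaussian variant is the more natural fit, and that is what this C++ sketch assumes. Each class stores a prior plus a per-feature mean and variance, features are treated as independent given the class (the "naive" assumption), and the prediction is the class with the highest log posterior. The two-class, two-feature sizes are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>

constexpr std::size_t kClasses = 2;
constexpr std::size_t kFeatures = 2;

// Parameters learned offline for each class.
struct GaussianNB {
  double prior[kClasses];
  double mean[kClasses][kFeatures];
  double var[kClasses][kFeatures];
};

// Class with the highest log posterior (up to a shared constant). Summing logs
// instead of multiplying probabilities avoids floating-point underflow.
std::size_t predictNB(const GaussianNB& m, const double (&x)[kFeatures]) {
  const double kPi = 3.14159265358979323846;
  std::size_t best = 0;
  double best_score = -std::numeric_limits<double>::infinity();
  for (std::size_t c = 0; c < kClasses; ++c) {
    assert(m.prior[c] > 0.0);
    double score = std::log(m.prior[c]);
    for (std::size_t f = 0; f < kFeatures; ++f) {
      const double v = m.var[c][f];
      assert(v > 0.0);
      const double d = x[f] - m.mean[c][f];
      score += -0.5 * std::log(2.0 * kPi * v) - d * d / (2.0 * v);  // log N(x; mean, var)
    }
    if (score > best_score) {
      best_score = score;
      best = c;
    }
  }
  return best;
}
```

The whole model is a few numbers per class and feature, so it fits comfortably in flash even on very small devices.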

Linear Regression

Linear regression models are suitable for predicting continuous values based on input features.

Example:
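For a single input feature, ordinary least squares has a closed form: `slope = cov(x, y) / var(x)` and `intercept = mean(y) - slope * mean(x)`. The C++ sketch below fits and evaluates such a model; fitting would normally happen offline, after which only the two coefficients are deployed. `LinearModel` and `fitLinear` are illustrative names.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct LinearModel {
  double slope;
  double intercept;
  double predict(double x) const { return slope * x + intercept; }
};

// Ordinary least squares for one feature.
LinearModel fitLinear(const std::vector<double>& x, const std::vector<double>& y) {
  assert(x.size() == y.size() && x.size() >= 2);
  const double n = static_cast<double>(x.size());
  double mean_x = 0.0, mean_y = 0.0;
  for (std::size_t i = 0; i < x.size(); ++i) {
    mean_x += x[i];
    mean_y += y[i];
  }
  mean_x /= n;
  mean_y /= n;
  double cov = 0.0, var = 0.0;
  for (std::size_t i = 0; i < x.size(); ++i) {
    const double dx = x[i] - mean_x;
    cov += dx * (y[i] - mean_y);
    var += dx * dx;
  }
  assert(var > 0.0);  // x must not be constant
  const double slope = cov / var;
  return {slope, mean_y - slope * mean_x};
}
```

For points lying exactly on `y = 2x + 1`, the fit recovers slope 2 and intercept 1, so `predict(10.0)` returns 21.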

These are just a few examples of supported machine learning models in the TinyML domain. The choice of model depends on the specific task, available resources, and performance requirements of your target edge device. It's essential to consider the trade-offs between model complexity, accuracy, inference speed, and memory usage to select the most appropriate model for your TinyML application.

TinyML Examples

In this section, we walk through three beginner-friendly examples that show how you can implement TinyML in your projects, even with limited computational resources. Each example includes an explanation, code, and the expected output.

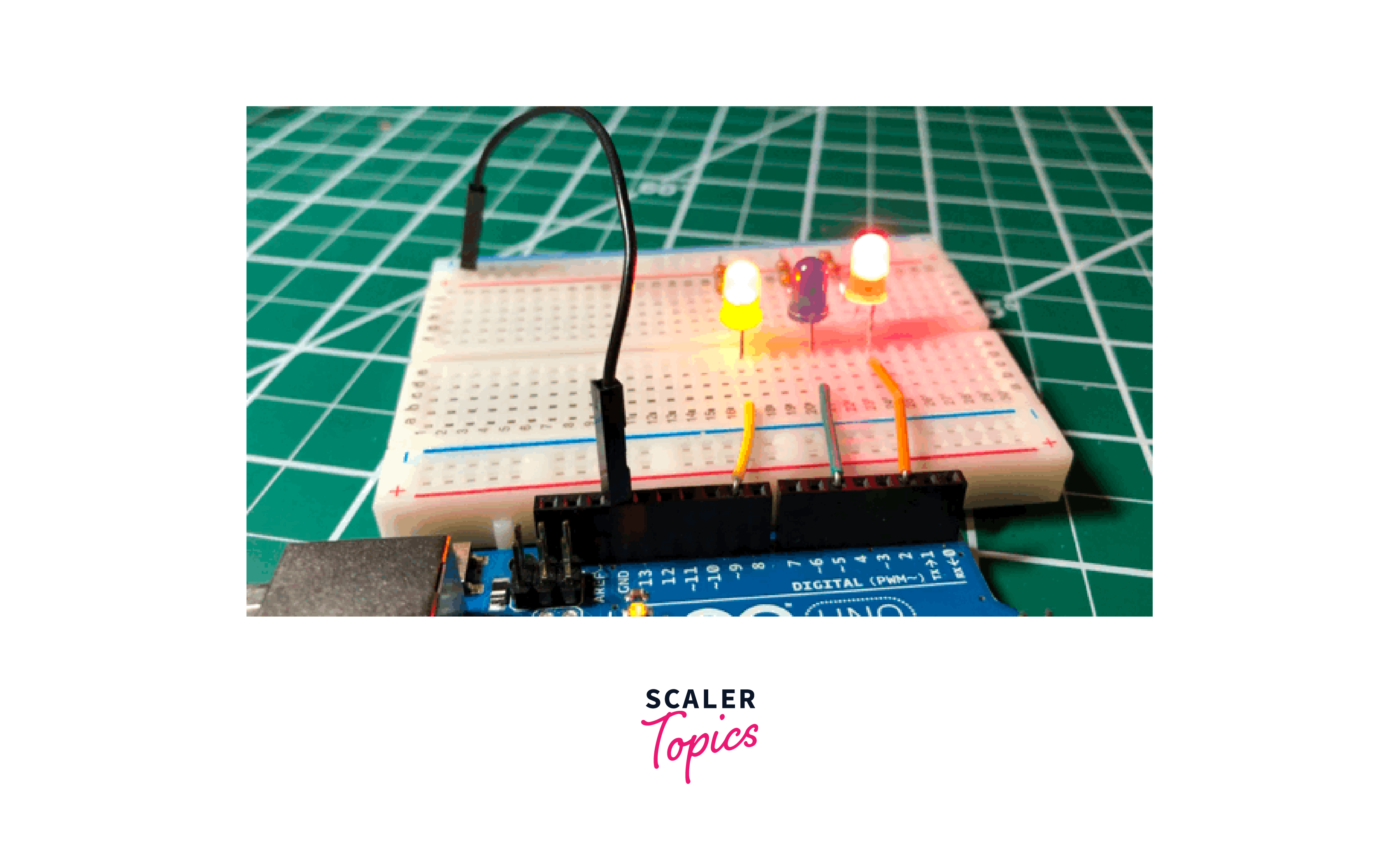

Example 1: LED Blinking using TinyML (Arduino)

Explanation: This example shows how to blink an LED using a simple machine learning model deployed on an Arduino. The model predicts whether the light should be on or off based on a threshold.

Code:
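A minimal C++ sketch of this idea, under stated assumptions: the "model" is a hypothetical logistic regression that maps an ambient-light reading (scaled to 0..1) to the probability that the LED should be on, and the LED is switched on when that prediction is above 0.5. The decision logic is plain C++ so it can be tested on a desktop; the Arduino glue in the trailing comment assumes a light sensor on pin A0 and a 10-bit ADC.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical model parameters, learned offline: darker -> higher output.
constexpr float kWeight = -10.0f;
constexpr float kBias = 5.0f;

// Probability that the LED should be on, for a light level in [0, 1].
float predictLedOn(float light_level) {
  assert(light_level >= 0.0f && light_level <= 1.0f);
  return 1.0f / (1.0f + std::exp(-(kWeight * light_level + kBias)));
}

// Decision rule: LED on when the prediction is above 0.5.
bool ledState(float light_level) { return predictLedOn(light_level) > 0.5f; }

// Arduino glue (not compiled here):
//   void setup() { pinMode(LED_BUILTIN, OUTPUT); }
//   void loop() {
//     float light = analogRead(A0) / 1023.0f;  // assumes a 10-bit ADC
//     digitalWrite(LED_BUILTIN, ledState(light) ? HIGH : LOW);
//     delay(500);
//   }
```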

Expected Output:

The LED connected to the Arduino's built-in LED pin will blink based on the predictions of the TinyML model. If the prediction is above 0.5, the LED will be turned on; otherwise, it will be turned off.

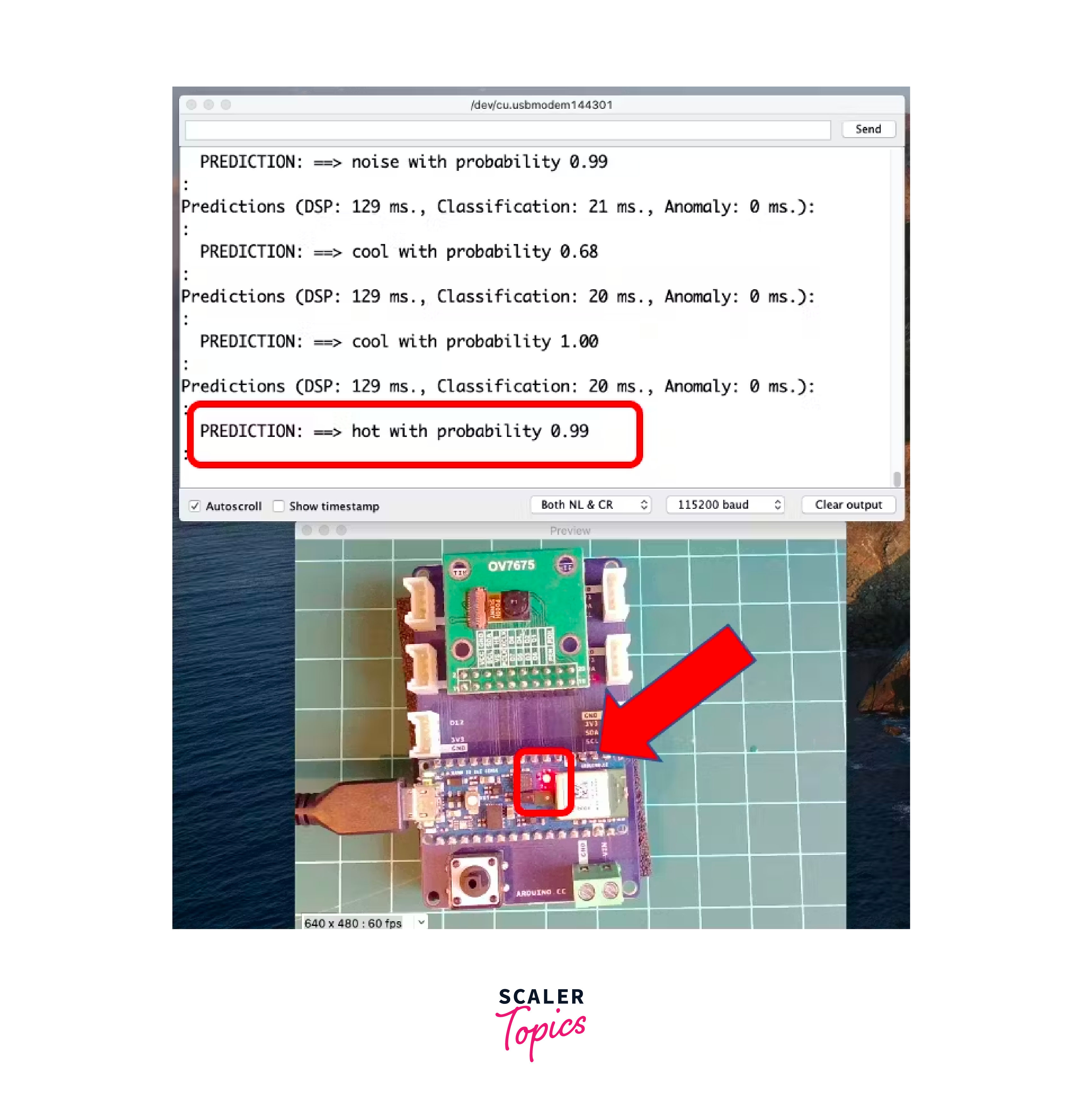

Example 2: Temperature Prediction using TinyML (Arduino)

Explanation: This example demonstrates how to predict a temperature value using a linear regression model deployed on an Arduino.

Code:
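A minimal C++ sketch, assuming the deployed model is a single-feature linear regression from a raw 10-bit ADC reading to degrees Celsius. The coefficients `kSlope` and `kIntercept` are hypothetical placeholders for values you would learn from your own calibration data, and the Arduino glue in the trailing comment assumes the sensor is wired to pin A0.

```cpp
#include <cassert>

// Hypothetical regression coefficients: temperature = slope * reading + intercept.
constexpr float kSlope = 0.1f;
constexpr float kIntercept = -20.0f;

float predictTemperatureC(int adc_reading) {
  assert(adc_reading >= 0 && adc_reading <= 1023);  // 10-bit ADC range
  return kSlope * static_cast<float>(adc_reading) + kIntercept;
}

// Arduino glue (not compiled here):
//   void setup() { Serial.begin(9600); }
//   void loop() {
//     int raw = analogRead(A0);
//     Serial.print("Predicted temperature: ");
//     Serial.println(predictTemperatureC(raw));
//     delay(1000);
//   }
```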

Expected Output:

The Arduino will read a sensor value, use the TinyML model to predict a temperature value, and then print the predicted temperature to the serial monitor.

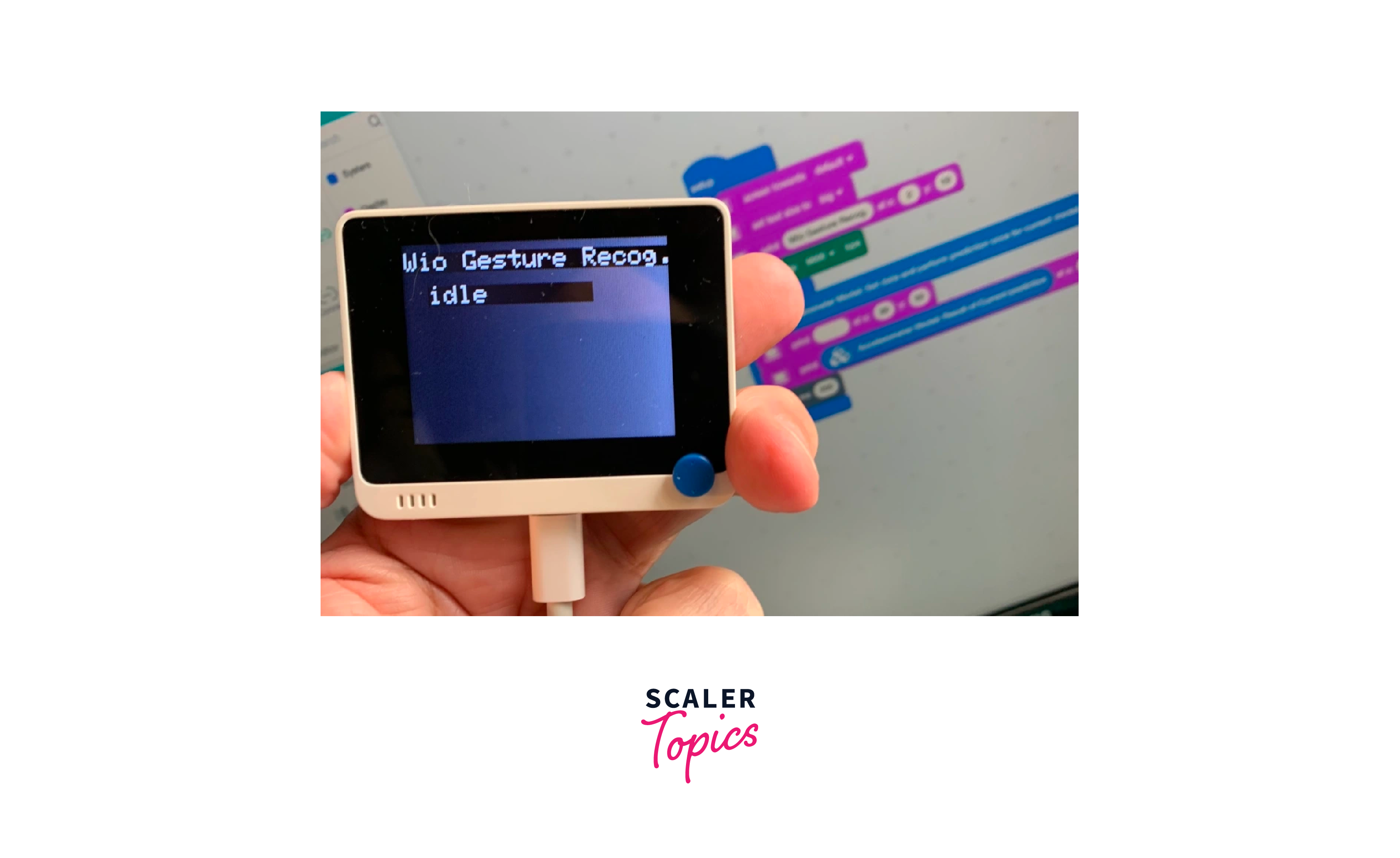

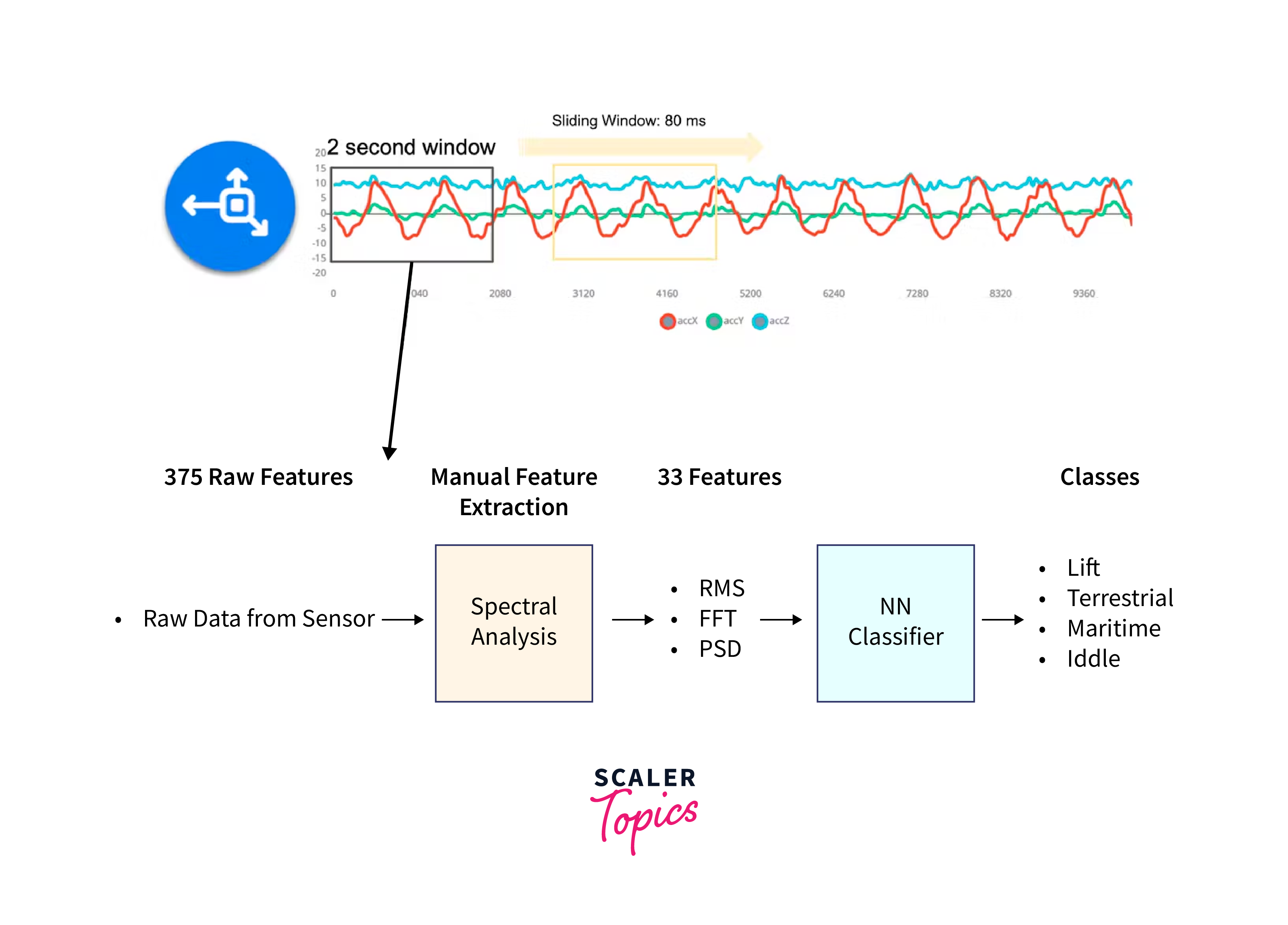

Example 3: Motion Detection using TinyML (Arduino)

Explanation: This example showcases how to detect motion using accelerometer data and a simple machine learning model deployed on an Arduino.

Code:
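A minimal C++ sketch, with hypothetical pieces labeled: the single feature is the standard deviation of the acceleration magnitude over a short window (near zero at rest, because the sensor only sees gravity at about 1 g), and the "model" is a hypothetical logistic regression on that feature. `readAccelerometer()` in the trailing comment is a placeholder for whatever IMU library your board uses.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>

// One accelerometer sample, in units of g.
struct Accel {
  float x, y, z;
};

float magnitude(const Accel& a) { return std::sqrt(a.x * a.x + a.y * a.y + a.z * a.z); }

// Feature: standard deviation of |a| over the window (two passes for accuracy).
float magnitudeStdDev(const Accel* window, std::size_t n) {
  assert(window != nullptr && n > 0);
  float mean = 0.0f;
  for (std::size_t i = 0; i < n; ++i) mean += magnitude(window[i]);
  mean /= static_cast<float>(n);
  float var = 0.0f;
  for (std::size_t i = 0; i < n; ++i) {
    const float d = magnitude(window[i]) - mean;
    var += d * d;
  }
  return std::sqrt(var / static_cast<float>(n));
}

// Hypothetical logistic model trained offline; the boundary sits at 0.1 g.
constexpr float kWeight = 40.0f;
constexpr float kBias = -4.0f;

bool motionDetected(const Accel* window, std::size_t n) {
  const float z = kWeight * magnitudeStdDev(window, n) + kBias;
  return 1.0f / (1.0f + std::exp(-z)) > 0.5f;
}

// Arduino glue (not compiled here; readAccelerometer() is hypothetical):
//   Accel window[32];
//   void setup() { Serial.begin(9600); }
//   void loop() {
//     for (auto& s : window) { s = readAccelerometer(); delay(10); }
//     if (motionDetected(window, 32)) Serial.println("Motion detected!");
//   }
```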

Expected Output:

The Arduino will read accelerometer data, use the TinyML model to predict whether motion is detected, and print a message to the serial monitor if motion is detected.

Applications of TensorFlow Lite for Microcontrollers (TinyML)

TinyML is a branch of machine learning that focuses on deploying lightweight models onto resource-constrained microcontroller devices. This makes it possible to embed intelligence directly into a wide range of devices and applications. Here's an overview of the applications of TensorFlow Lite for Microcontrollers:

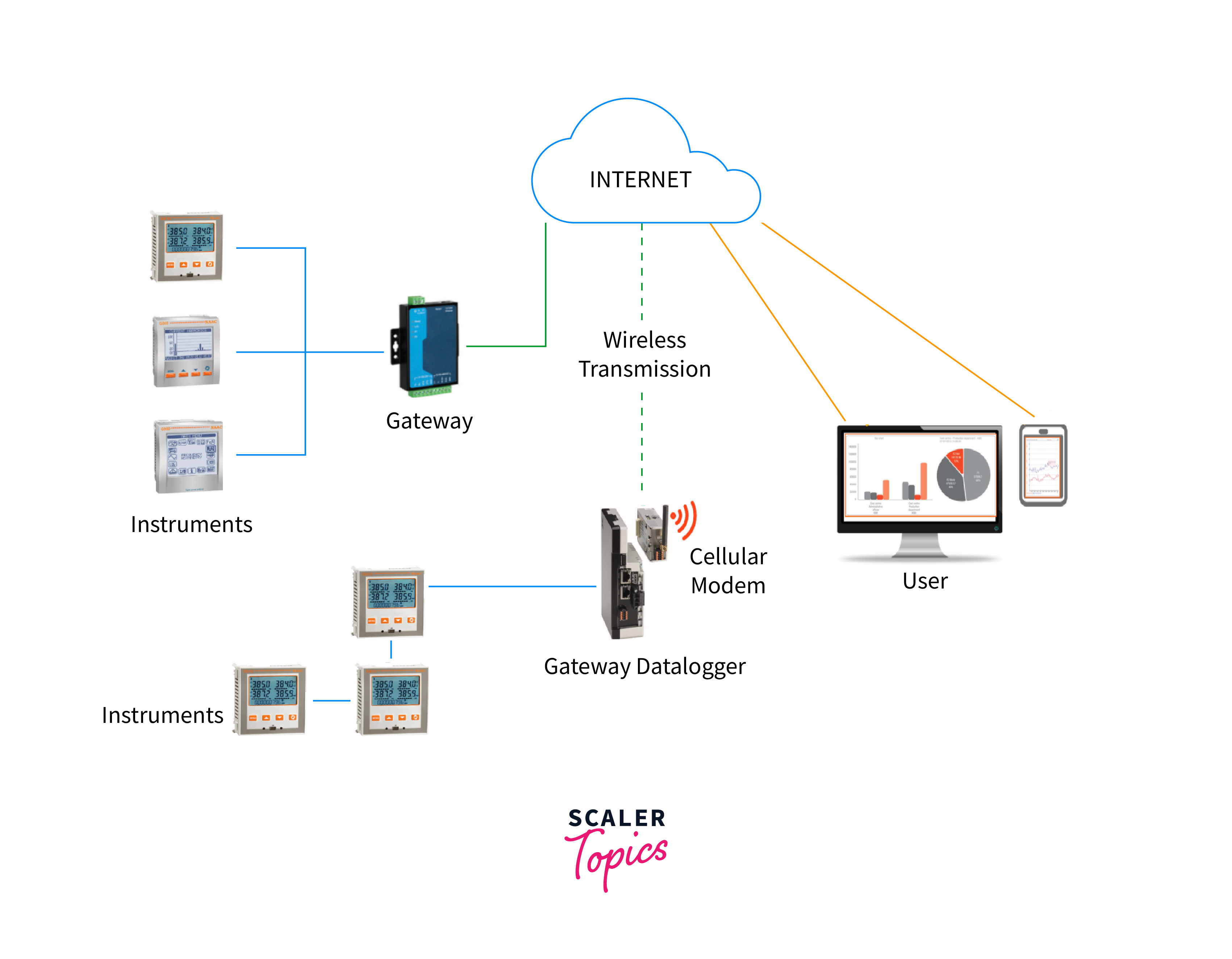

Internet of Things (IoT):

- Smart Home Devices: TinyML enables energy-efficient and real-time processing for devices like smart thermostats, security cameras, and lighting systems.

- Wearable Technology: Wearables like fitness trackers and health monitors can process sensor data locally for quicker insights and improved privacy.

- Asset Tracking: Devices can locally analyze sensor data for tracking assets, optimizing supply chains, and enhancing inventory management.

Health and Medical Devices:

- Remote Patient Monitoring: TinyML empowers wearable medical devices to analyze physiological data and alert medical professionals in real time.

- Portable Diagnostics: Handheld medical devices can quickly process data and provide instant diagnostic results, improving patient care in remote areas.

- Prosthetics and Assistive Devices: Embedded machine learning can enhance the functionality of prosthetics and assistive devices by adapting to users' needs.

Agriculture:

- Precision Farming: Sensors in the field can gather data on soil conditions, weather, and crop health, allowing for optimized irrigation, fertilization, and pest control.

- Livestock Management: Wearable devices on animals can monitor health metrics and provide early detection of illness or distress.

Industrial Automation:

- Predictive Maintenance: TinyML can analyze sensor data from machinery to predict maintenance needs, minimizing downtime and increasing efficiency.

- Quality Control: Devices can identify defects and anomalies in manufacturing processes, ensuring product quality.

Energy Management:

- Smart Energy Grids: Sensors and devices can analyze energy consumption patterns and optimize distribution for efficient energy usage.

- Energy Harvesting: TinyML can help manage energy harvesting systems by analyzing environmental data to maximize energy capture.

Automotive:

- Driver Assistance: Embedded machine learning can enable real-time analysis of road conditions and driver behavior for enhanced safety.

- In-Car Personalization: TinyML can tailor in-car settings and entertainment options based on individual preferences.

Edge Computing:

- Local Processing: TinyML reduces latency by performing data analysis locally, which is critical for real-time applications.

- Privacy and Security: Data remains on-device, reducing the need to transmit sensitive information to the cloud.

Consumer Electronics:

- Gesture and Voice Recognition: TinyML can power gesture and voice recognition systems, enhancing user interfaces.

- Low-Power AI Cameras: Cameras with embedded machine learning can identify and classify objects without constant cloud connectivity.

Conclusion

- TinyML, or Tiny Machine Learning, enables resource-constrained devices like microcontrollers to perform intelligent tasks locally.

- It enables smart home devices, predictive maintenance, environmental monitoring, and personalized consumer electronics.

- As technology evolves, TinyML continues transforming industries by making devices smarter, more efficient, and more responsive.