CNN Transfer Learning

Overview

In this article, we explore transfer learning with convolutional neural networks (CNNs). Transfer learning is a powerful technique that reuses the knowledge captured by pre-trained models to tackle new, related tasks. We cover the principles of transfer learning and its main benefits, then walk through performing transfer learning in CNNs with the TensorFlow framework. Using pre-trained models and the Fashion MNIST dataset, we go through the necessary steps: data preparation, model training, and evaluation.

Introduction

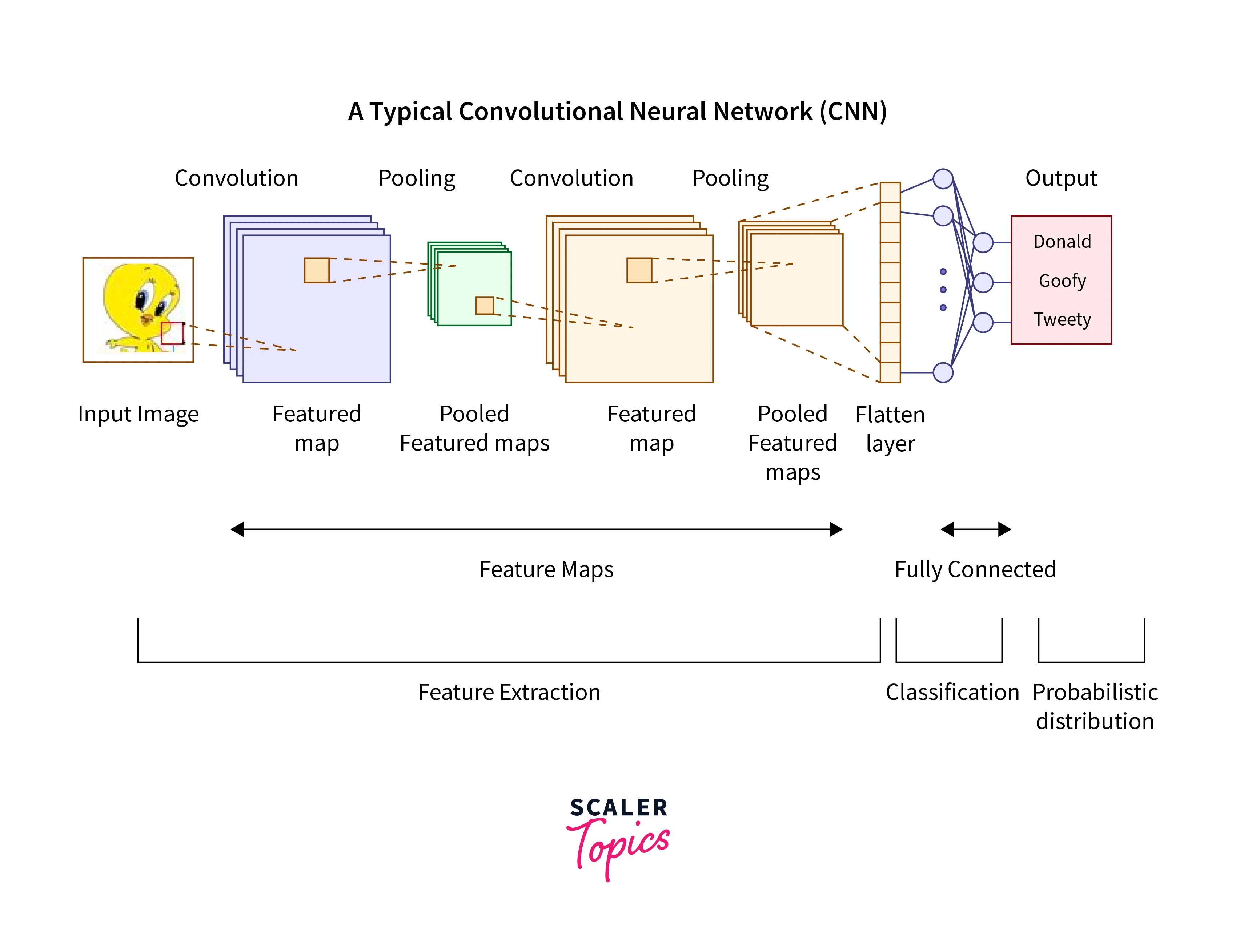

Convolutional neural networks (CNNs) have revolutionized computer vision tasks by achieving state-of-the-art performance in various applications, including object detection, image classification, and image segmentation. CNNs excel at automatically learning hierarchical representations from raw image data, enabling them to capture complex visual patterns and make accurate predictions.

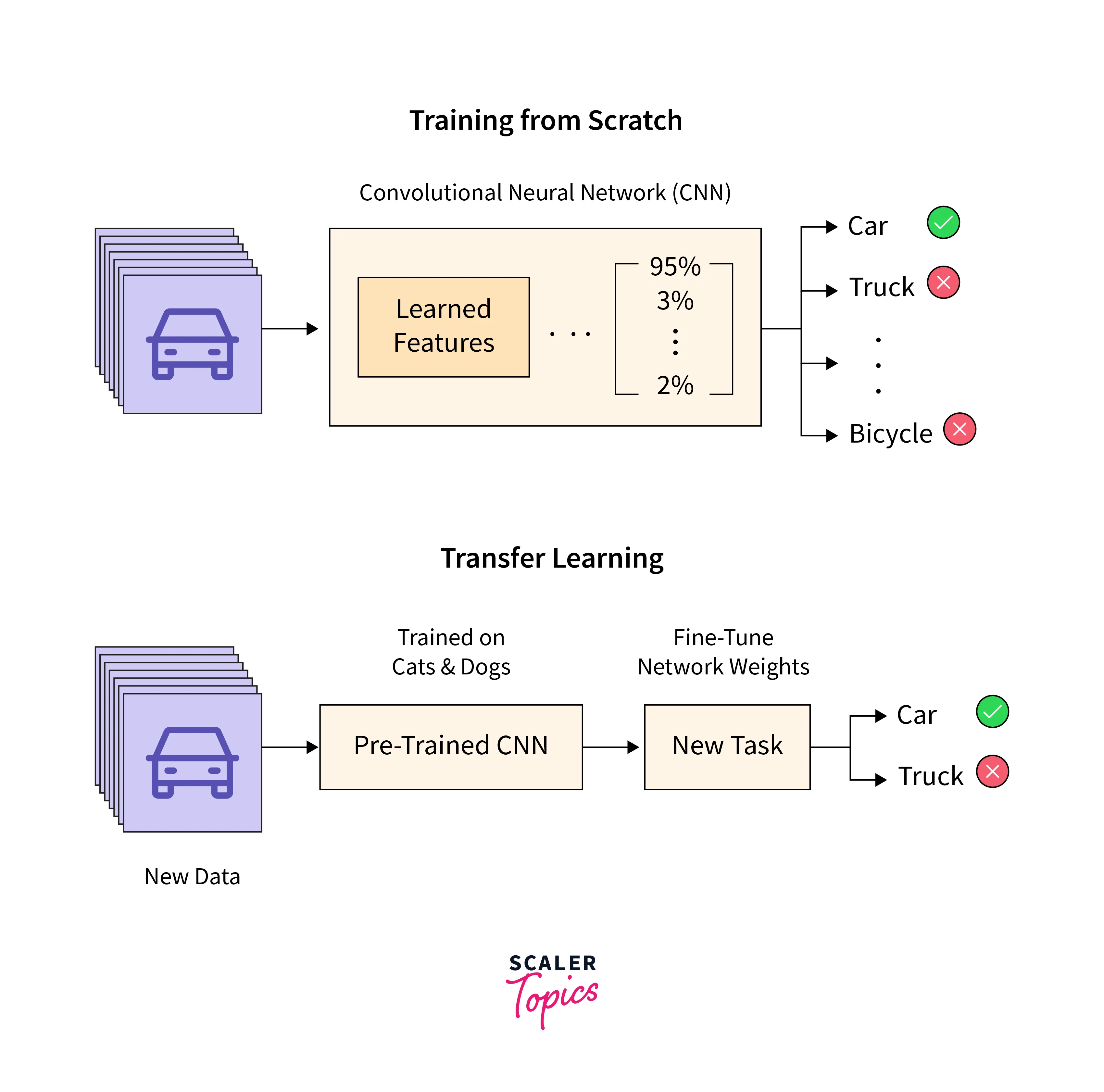

However, training CNNs from scratch can be resource-intensive and time-consuming. Building a CNN model requires a significant amount of labelled training data and substantial computational power. In many real-world scenarios, obtaining large labelled datasets can be challenging or expensive. Moreover, training CNNs from scratch might lead to suboptimal results due to the limitations of the available data.

Transfer learning provides a practical solution to overcome these challenges. Instead of training a CNN model from scratch, transfer learning enables us to leverage the knowledge and representations learned from pre-trained models that were trained on large-scale datasets, such as ImageNet. These pre-trained models have already captured a wide range of visual patterns and features, making them powerful feature extractors.

With transfer learning, we can take the representations learned by pre-trained models and adapt them to new, similar tasks or datasets. Reusing the pre-trained weights significantly reduces the computational burden and training time. Transfer learning also lets us benefit from the general visual knowledge encoded in the pre-trained models, even when the labelled data available for our specific task is limited.

What is Transfer Learning?

Transfer learning is a machine learning (ML) technique where knowledge gained from solving one task is applied to a different but related task. In the context of CNNs, transfer learning involves taking the weights learned by a pre-trained model on a large dataset (such as ImageNet) and adapting them to a smaller, task-specific dataset. A common approach freezes the convolutional layers of the pre-trained model, so that they act as a fixed feature extractor, and trains only a new classifier on top of them.

The purpose of freezing the convolutional layers is to retain the learned knowledge from the pre-trained model. These layers have already been trained on a large-scale dataset (such as ImageNet) and have learned to recognize general visual patterns and features. By freezing them, we ensure that this valuable knowledge is preserved and not altered while adapting the model to a new, task-specific dataset.

Freezing the convolutional layers means preventing them from being updated during training. The weights and parameters of these layers stay fixed at their pre-trained values, and only the layers we add are trained.
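The effect of freezing can be checked directly in Keras. The sketch below uses a tiny, randomly initialized stack of convolutional layers as a stand-in for a pre-trained base (the name `conv_base` and the layer sizes are illustrative assumptions) and counts the trainable weight tensors before and after setting `trainable = False`.

```python
import tensorflow as tf

# Tiny stand-in for a pre-trained convolutional base (hypothetical; a real
# base would come from tf.keras.applications with pre-trained weights).
conv_base = tf.keras.Sequential([
    tf.keras.Input(shape=(32, 32, 3)),
    tf.keras.layers.Conv2D(8, 3, activation="relu"),
    tf.keras.layers.MaxPooling2D(),
    tf.keras.layers.Conv2D(16, 3, activation="relu"),
    tf.keras.layers.GlobalAveragePooling2D(),
])

# Two convolutional layers, each with a kernel and a bias tensor.
trainable_before = len(conv_base.trainable_weights)

# Freezing: the weights stay fixed and are excluded from gradient updates.
conv_base.trainable = False
trainable_after = len(conv_base.trainable_weights)

print(f"trainable weight tensors before: {trainable_before}, after: {trainable_after}")
```

After freezing, the same tensors show up in `conv_base.non_trainable_weights` instead.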

Advantages of Transfer Learning

Freezing the convolutional layers serves multiple purposes:

- Preventing Overfitting: Pre-trained models are trained on massive datasets, which enables them to learn generalized representations of various visual features. Freezing the convolutional layers prevents them from being overly tuned to the specific task at hand, reducing the risk of overfitting on a smaller dataset.

- Speeding up Training: Training the convolutional layers from scratch can be computationally expensive and time-consuming. By freezing these layers, we save computation time during the fine-tuning process since the gradients for these layers do not need to be computed or updated.

- Leveraging Generalized Representations: The frozen convolutional layers have already learned to recognize a wide range of visual patterns. By preserving these generalized representations, we provide a strong foundation for the model to extract relevant features from the new dataset.

While the convolutional layers remain frozen, we typically add new trainable layers on top of them to form a new classifier specific to the task at hand. These new layers are trained to adapt the pre-trained model's features to the target dataset, allowing the model to learn task-specific patterns and make accurate predictions.

By freezing the convolutional layers and focusing on training only the newly added layers, we strike a balance between leveraging the pre-trained knowledge and adapting it to the specific requirements of the new dataset.

Pre-Trained Models for Transfer Learning

In the realm of transfer learning in convolutional neural networks (CNNs), numerous pre-trained models are readily available. These models, including VGG16, ResNet, and Inception, have been trained on massive datasets like ImageNet. They have acquired the ability to recognize a diverse range of visual patterns and features, making them valuable starting points for transfer learning tasks.

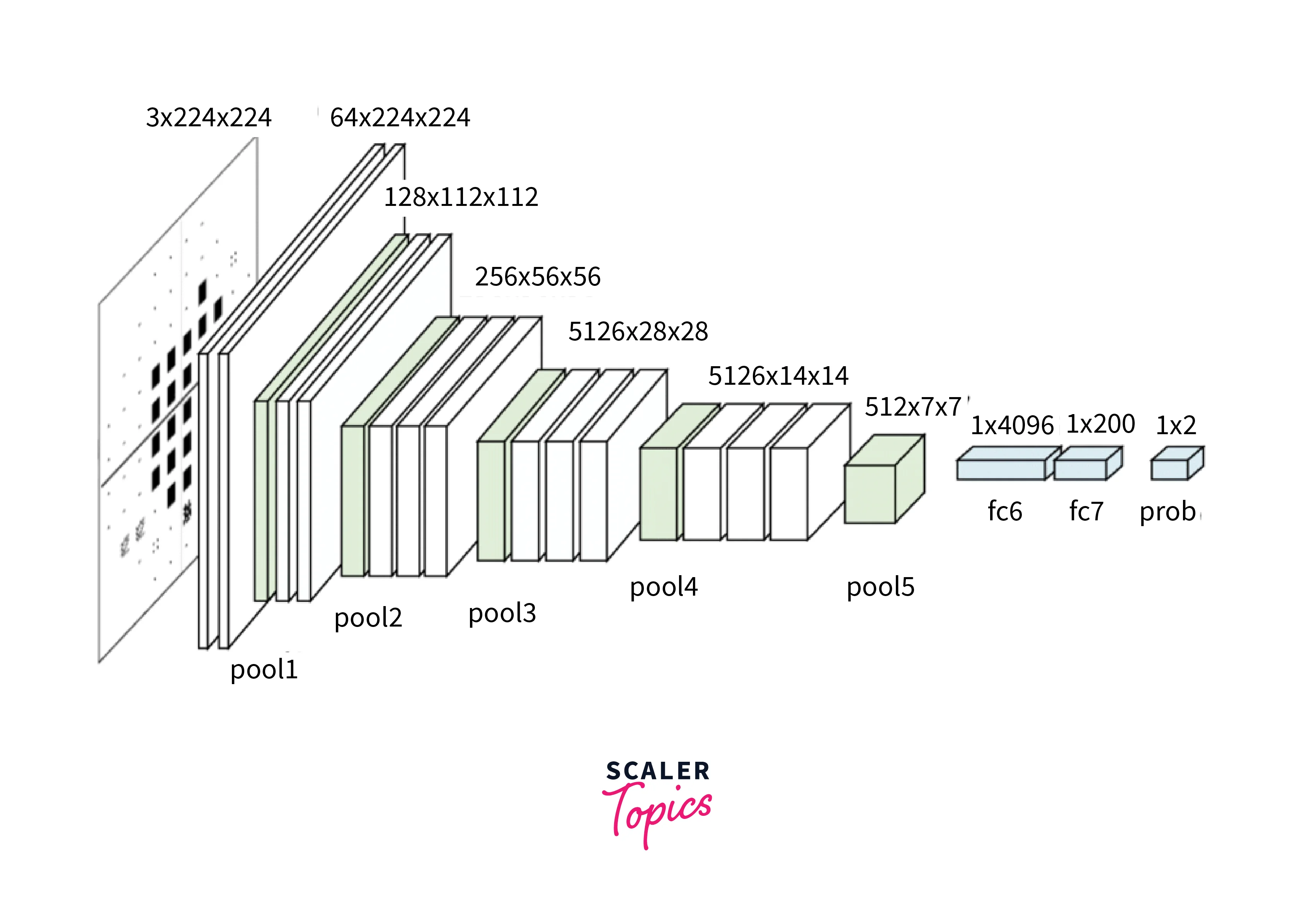

VGG16

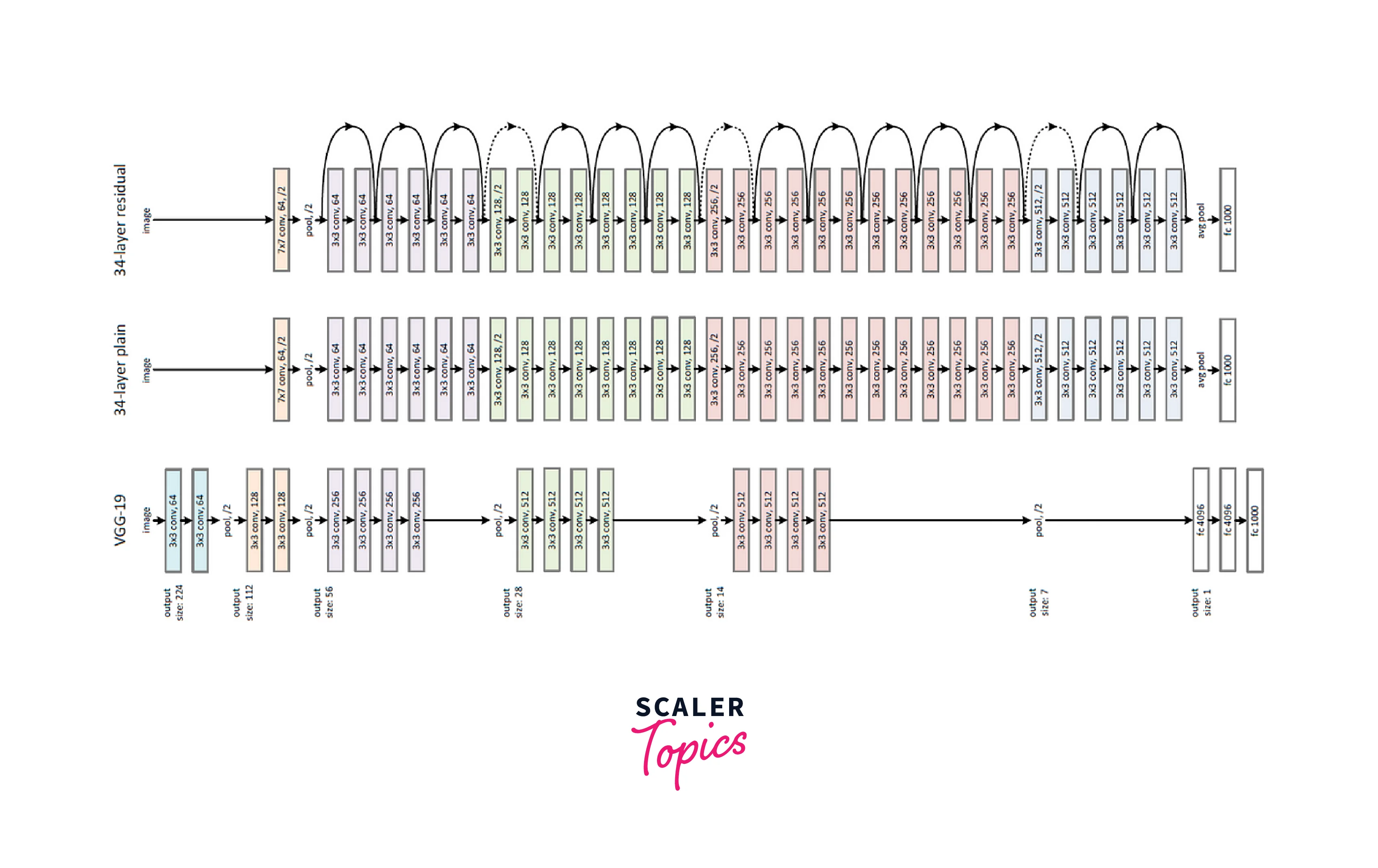

VGG16 is a well-known pre-trained CNN architecture developed by the Visual Geometry Group at the University of Oxford. It consists of 16 layers, including 13 convolutional layers and 3 fully connected layers. VGG16's strength lies in its simplicity and effectiveness. Despite its straightforward architecture, it has demonstrated impressive performance in image classification tasks. By utilizing the pre-trained weights of VGG16, we can leverage its ability to extract meaningful features from images.

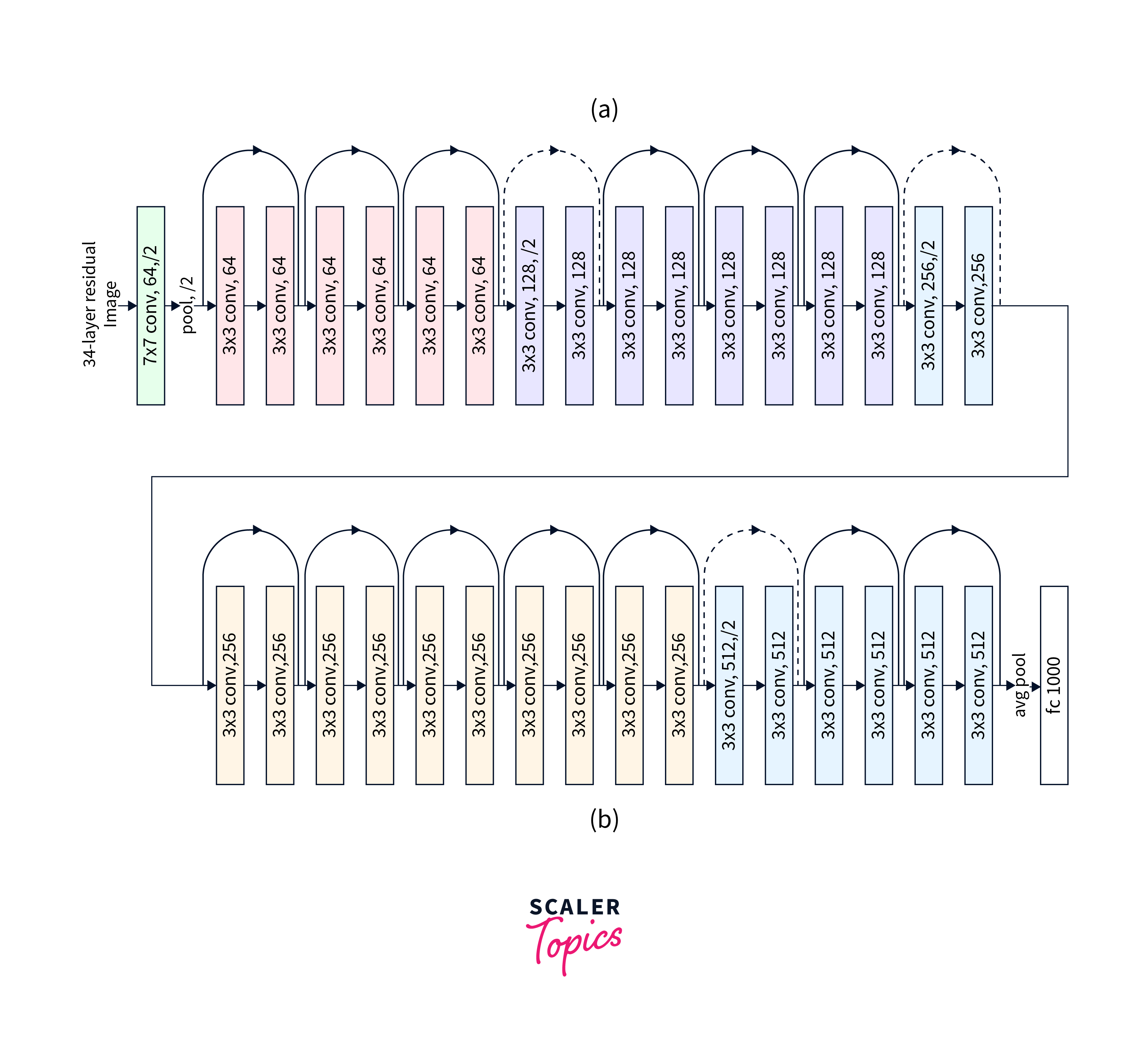

ResNet

ResNet, short for Residual Network, is a groundbreaking CNN architecture that introduced the concept of residual learning. ResNet addresses the degradation problem commonly encountered in very deep networks by introducing skip connections, allowing the model to learn residual mappings. This architectural innovation enables the training of extremely deep CNNs with improved accuracy and performance. By utilizing the pre-trained ResNet models, we can leverage their depth and skip connections to benefit our transfer learning tasks.
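To make the idea of a skip connection concrete, here is a simplified residual block written with the Keras functional API. It is a sketch rather than the exact block used in published ResNet models: it omits batch normalization and assumes the number of filters matches the number of input channels so that the addition is valid. The function name `residual_block` is our own.

```python
import tensorflow as tf

def residual_block(x, filters):
    """Two 3x3 convolutions whose output is added back to the block input."""
    shortcut = x
    y = tf.keras.layers.Conv2D(filters, 3, padding="same", activation="relu")(x)
    y = tf.keras.layers.Conv2D(filters, 3, padding="same")(y)
    # Skip connection: the convolutions learn a residual F(x), and the
    # block outputs F(x) + x.
    y = tf.keras.layers.Add()([y, shortcut])
    return tf.keras.layers.Activation("relu")(y)

# `filters` must equal the input channel count (16) in this simplified version.
inputs = tf.keras.Input(shape=(32, 32, 16))
outputs = residual_block(inputs, filters=16)
block = tf.keras.Model(inputs, outputs)
```

Because the input is added back unchanged, the block preserves the spatial size and channel count of its input.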

Inception

Inception, whose first version is also known as GoogLeNet, is a CNN architecture developed by Google. It is characterized by its inception modules, which consist of parallel convolutional layers with different filter sizes and pooling operations. The inception modules enable the network to capture multi-scale features at various levels of abstraction. The Inception architecture has proven to be highly effective in image recognition tasks. By utilizing pre-trained Inception models, we can leverage their ability to capture diverse and multi-scale visual patterns.
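The parallel-branch structure can be sketched in a few lines of Keras. This is a simplified illustration, not the exact module from the Inception models: it leaves out the 1x1 reduction convolutions that usually precede the larger filters, and the function name and filter counts are assumptions chosen for the example.

```python
import tensorflow as tf

def inception_module(x, f1, f3, f5, fpool):
    """Parallel 1x1, 3x3, 5x5 and pooling branches, concatenated on channels."""
    layers = tf.keras.layers
    b1 = layers.Conv2D(f1, 1, padding="same", activation="relu")(x)
    b3 = layers.Conv2D(f3, 3, padding="same", activation="relu")(x)
    b5 = layers.Conv2D(f5, 5, padding="same", activation="relu")(x)
    # Pooling branch: stride 1 and "same" padding keep the spatial size.
    bp = layers.MaxPooling2D(pool_size=3, strides=1, padding="same")(x)
    bp = layers.Conv2D(fpool, 1, padding="same", activation="relu")(bp)
    return layers.Concatenate(axis=-1)([b1, b3, b5, bp])

inputs = tf.keras.Input(shape=(28, 28, 32))
outputs = inception_module(inputs, f1=16, f3=32, f5=8, fpool=8)
module = tf.keras.Model(inputs, outputs)
```

Every branch keeps the 28x28 spatial size, so the output has 16 + 32 + 8 + 8 = 64 channels.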

These pre-trained models, among others, have been trained on large-scale datasets like ImageNet, containing millions of labelled images across thousands of classes. The extensive training process has enabled these models to learn rich representations of visual patterns, allowing them to generalize well to various computer vision tasks.

By leveraging these pre-trained models as starting points, we can benefit from their learned knowledge and adapt them to new, similar tasks through transfer learning. Instead of training a CNN model from scratch, we can fine-tune these pre-trained models on our specific task or dataset, leading to improved performance, faster convergence, and reduced computational resources.

Feature Extraction with Pre-Trained Models

One common approach to transfer learning in CNNs is feature extraction. In this method, we use the pre-trained model as a fixed feature extractor and add a new classifier on top. The pre-trained model's convolutional layers are frozen, preventing them from being updated during training, while the newly added classifier is trained to classify the specific task at hand.

Feature Extraction

Once the pre-trained model is loaded, we can use it to extract features from images. For example, with the VGG16 model, features are commonly taken from the second fully connected layer (the layer just before the final classification layer), which outputs a 4096-dimensional vector for each image.

Using Extracted Features

The extracted features can be used for various tasks such as object detection, image retrieval, and image similarity. For example, we can compare the feature vectors of two images to find similar images in a dataset.
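As an illustration of image similarity, the sketch below ranks stored feature vectors by cosine similarity to a query vector. The vectors are small made-up examples; in practice each row would be the feature vector a pre-trained model produces for one image. The function name `most_similar` is our own.

```python
import numpy as np

def most_similar(query, features, top_k=3):
    """Return the indices of the top_k rows of `features` closest to `query`
    by cosine similarity, most similar first."""
    q = query / np.linalg.norm(query)
    f = features / np.linalg.norm(features, axis=1, keepdims=True)
    scores = f @ q  # cosine similarity of each row with the query
    return np.argsort(-scores)[:top_k]

# Hypothetical feature vectors; real ones would come from a pre-trained model.
features = np.array([
    [1.0, 0.0, 0.0],
    [0.9, 0.1, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
])
query = np.array([1.0, 0.05, 0.0])

nearest = most_similar(query, features, top_k=2)
print(nearest)  # indices of the two most similar rows
```

Here the first two rows point in almost the same direction as the query, so they are returned ahead of the others.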

Data Preparation for Transfer Learning

To perform transfer learning, we need to prepare the data. For this article, we will use the Fashion MNIST dataset, a collection of grayscale images representing different clothing items. The following steps outline the data preparation process:

Step 1: Imports

First, we need to import the necessary libraries and modules:

Step 2: Setting up TensorFlow

Next, we set up TensorFlow and check the availability of a GPU for improved training performance:
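A minimal sketch of the import and GPU check, assuming TensorFlow 2.x is installed:

```python
import tensorflow as tf

print("TensorFlow version:", tf.__version__)

# List the GPUs TensorFlow can see; an empty list means training runs on the CPU.
gpus = tf.config.list_physical_devices("GPU")
print("GPUs available:", len(gpus))
```

Training still works without a GPU, only more slowly.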

Step 3: Data Preparation

In this tutorial, we will use a dataset containing images of cats and dogs.

To create a test set, we will first calculate the number of batches in the validation set using the tf.data.experimental.cardinality function. Then, we will set aside 20% of these batches to form our test set.
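The split can be sketched as follows. To keep the example self-contained, a small synthetic dataset of 10 batches stands in for the real validation set; the variable names are assumptions.

```python
import tensorflow as tf

# Synthetic stand-in for a batched validation set: 10 batches of 4 samples.
validation_dataset = tf.data.Dataset.from_tensor_slices(tf.range(40)).batch(4)

# Number of batches in the validation set.
val_batches = tf.data.experimental.cardinality(validation_dataset)

# Move 20% of the validation batches (one in five) into a test set.
test_dataset = validation_dataset.take(val_batches // 5)
validation_dataset = validation_dataset.skip(val_batches // 5)

n_val = int(tf.data.experimental.cardinality(validation_dataset))
n_test = int(tf.data.experimental.cardinality(test_dataset))
print("validation batches:", n_val)
print("test batches:", n_test)
```

With 10 batches to start from, 2 go to the test set and 8 remain for validation.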

Step 4: Configure the Dataset for Performance

To optimize the input pipeline, we'll use buffered prefetching, which loads images from disk in the background so that I/O does not block training.
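A minimal sketch of prefetching with `tf.data`, assuming TensorFlow 2.4 or later (for `tf.data.AUTOTUNE`). Random tensors stand in for images loaded from disk.

```python
import tensorflow as tf

AUTOTUNE = tf.data.AUTOTUNE

# Synthetic stand-in for an image dataset: 8 random 32x32 RGB images.
images = tf.random.uniform((8, 32, 32, 3), maxval=255.0)
labels = tf.zeros((8,), dtype=tf.int32)
train_dataset = tf.data.Dataset.from_tensor_slices((images, labels)).batch(4)

# Prefetching prepares upcoming batches while the current one is being consumed.
# AUTOTUNE lets tf.data choose the buffer size at runtime.
train_dataset = train_dataset.prefetch(buffer_size=AUTOTUNE)

n_batches = int(train_dataset.cardinality())
print("batches:", n_batches)
```

Prefetching changes only when batches are prepared, not their contents or count. The same call can be applied to the validation and test datasets.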

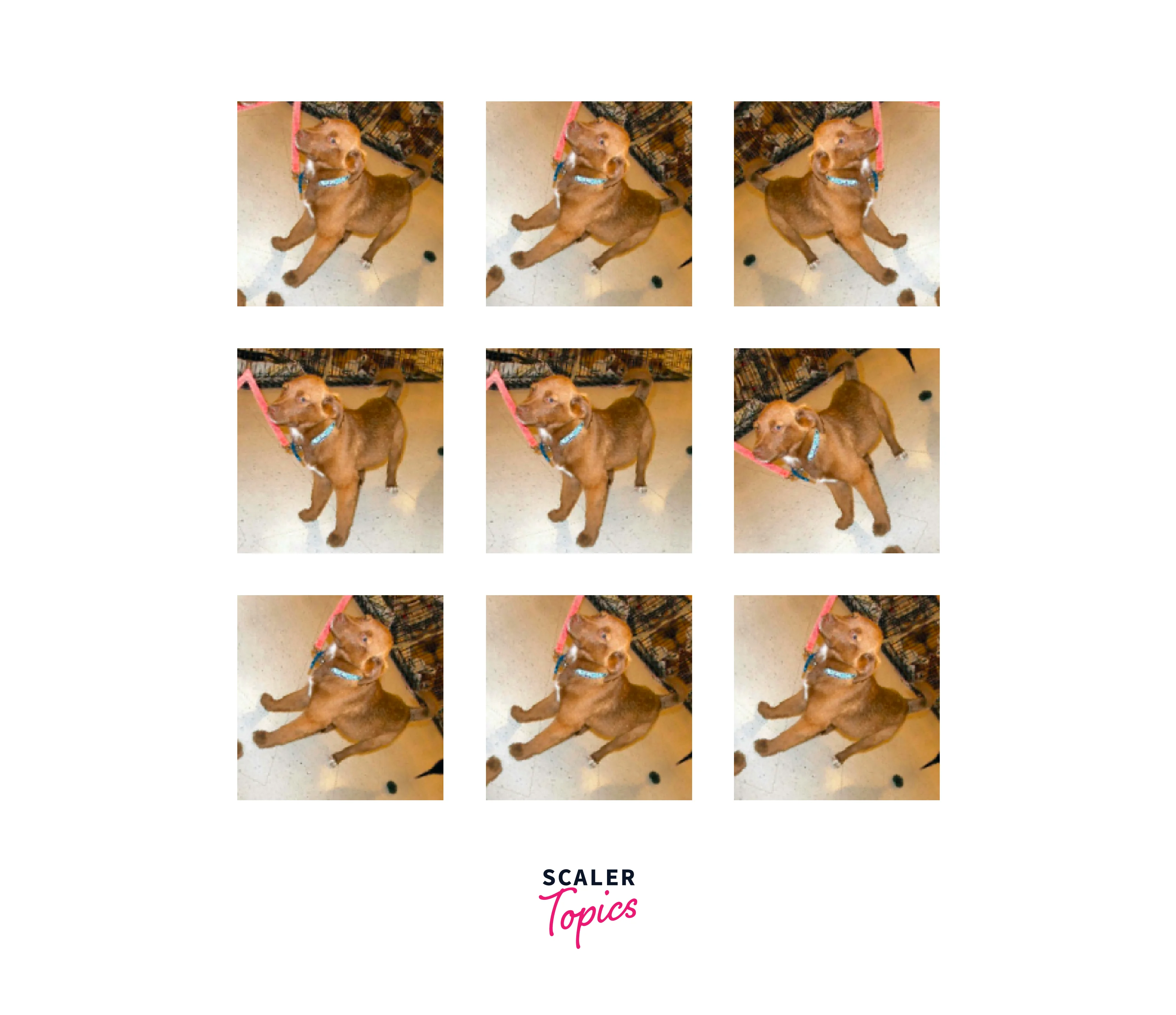

Step 5: Data Augmentation

When you don't have a large image dataset, it's a good practice to artificially introduce sample diversity by applying random yet realistic transformations to the training images, such as rotation and horizontal flipping. This helps expose the model to different aspects of the training data and reduces overfitting.
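One way to express such transformations is with Keras preprocessing layers. The sketch below assumes TensorFlow 2.6 or later, where `RandomFlip` and `RandomRotation` are available under `tf.keras.layers`; the rotation factor of 0.2 is an illustrative choice.

```python
import tensorflow as tf

data_augmentation = tf.keras.Sequential([
    # Randomly mirror images left to right.
    tf.keras.layers.RandomFlip("horizontal"),
    # Randomly rotate by up to 20% of a full turn in either direction.
    tf.keras.layers.RandomRotation(0.2),
])

# Random batch standing in for real training images.
image_batch = tf.random.uniform((4, 64, 64, 3), maxval=255.0)

# These layers only transform their input when called with training=True.
augmented = data_augmentation(image_batch, training=True)
print(augmented.shape)
```

The augmented batch has the same shape as the input, so the layers can be placed at the start of a model or applied inside the input pipeline.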

Now, we can see the augmented dataset using the following code.

Step 6: Rescale Pixel Values

When tf.keras.applications.MobileNetV2 is used as the base model, note that it expects pixel values in the range [-1, 1], whereas the images have pixel values in the range [0, 255]. To rescale them, use the preprocessing function that ships with the model before passing the images in as inputs.

Note: Alternatively, you could rescale pixel values from [0, 255] to [-1, 1] using tf.keras.layers.Rescaling.
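Both options can be compared on a few sample pixel values. This sketch assumes TensorFlow 2.6 or later for `tf.keras.layers.Rescaling`; the sample values are made up.

```python
import numpy as np
import tensorflow as tf

# Three sample pixel values shaped like a 1x1 RGB image batch.
pixels = np.array([0.0, 127.5, 255.0], dtype="float32").reshape(1, 1, 1, 3)

# Option 1: the preprocessing function that ships with MobileNetV2.
# It may modify a NumPy array in place, so we pass a copy.
preprocess_input = tf.keras.applications.mobilenet_v2.preprocess_input
scaled_a = np.asarray(preprocess_input(pixels.copy()))

# Option 2: an explicit Rescaling layer computing x / 127.5 - 1.
rescale = tf.keras.layers.Rescaling(1.0 / 127.5, offset=-1)
scaled_b = np.asarray(rescale(pixels))

print(scaled_a.ravel())
print(scaled_b.ravel())
```

Both map 0 to -1, 127.5 to 0, and 255 to 1. Other base models expect different preprocessing, so it is safest to use the function from the matching `tf.keras.applications` module.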

CNN Transfer Learning with TensorFlow

Now, let's proceed with the steps involved in CNN transfer learning using TensorFlow.

Step 1: Model Building

We start by loading a pre-trained model, in this case, VGG16, and freezing its convolutional layers:

Step 2: Freeze the Convolutional Base

It is important to freeze the convolutional base before you compile and train the model. Setting layer.trainable = False prevents the weights in that layer from being updated during training.
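A sketch of loading VGG16 without its classifier and freezing it. The helper name `load_frozen_vgg16` and its parameters are our own. With `weights="imagenet"`, Keras downloads the pre-trained weights on first use; `weights=None` builds the same architecture with random weights, which is useful only for checking shapes.

```python
import tensorflow as tf

def load_frozen_vgg16(input_shape=(224, 224, 3), weights="imagenet"):
    """Load the VGG16 convolutional base and freeze all of its layers."""
    base_model = tf.keras.applications.VGG16(
        input_shape=input_shape,
        include_top=False,  # drop the three fully connected layers
        weights=weights,
    )
    # Setting trainable on the model applies to every layer inside it.
    base_model.trainable = False
    return base_model
```

Calling `base_model = load_frozen_vgg16()` followed by `base_model.summary()` should report all parameters as non-trainable.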

Now, we'll check the model architecture using model.summary().

Next, we add a new classifier on top of the pre-trained model:
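One possible classifier head is global average pooling followed by dropout and a single dense layer. The sketch below is an assumption about the head, not a fixed recipe; the function name `add_classifier`, the dropout rate, and the choice to output raw logits are ours. It accepts any Keras base model that maps images to feature maps.

```python
import tensorflow as tf

def add_classifier(base_model, input_shape, num_classes):
    """Stack a small trainable classifier on top of a frozen base model."""
    base_model.trainable = False
    inputs = tf.keras.Input(shape=input_shape)
    # training=False keeps layers such as batch normalization in inference mode.
    x = base_model(inputs, training=False)
    # Collapse each feature map to a single value per channel.
    x = tf.keras.layers.GlobalAveragePooling2D()(x)
    x = tf.keras.layers.Dropout(0.2)(x)
    # One output per class, returned as raw logits (no softmax).
    outputs = tf.keras.layers.Dense(num_classes)(x)
    return tf.keras.Model(inputs, outputs)
```

Only the final dense layer's kernel and bias are trainable, which `model.summary()` makes visible in the trainable parameter count.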

Step 3: Model Compilation and Training

We compile the model and train it using the dataset:
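A sketch of the compile and training step, assuming the model outputs raw logits and the labels are integer class indices. The helper name `compile_and_train`, the Adam optimizer, and the default learning rate are illustrative choices.

```python
import tensorflow as tf

def compile_and_train(model, train_ds, val_ds, epochs=10, learning_rate=1e-4, verbose=1):
    """Compile a logit-output model and train it on batched tf.data datasets."""
    model.compile(
        optimizer=tf.keras.optimizers.Adam(learning_rate=learning_rate),
        # from_logits=True because the final Dense layer has no softmax.
        loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),
        metrics=["accuracy"],
    )
    return model.fit(train_ds, validation_data=val_ds, epochs=epochs, verbose=verbose)
```

The returned `History` object stores per-epoch values in `history.history`, for example under the keys `"loss"` and `"val_loss"`, which can be plotted to inspect convergence.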

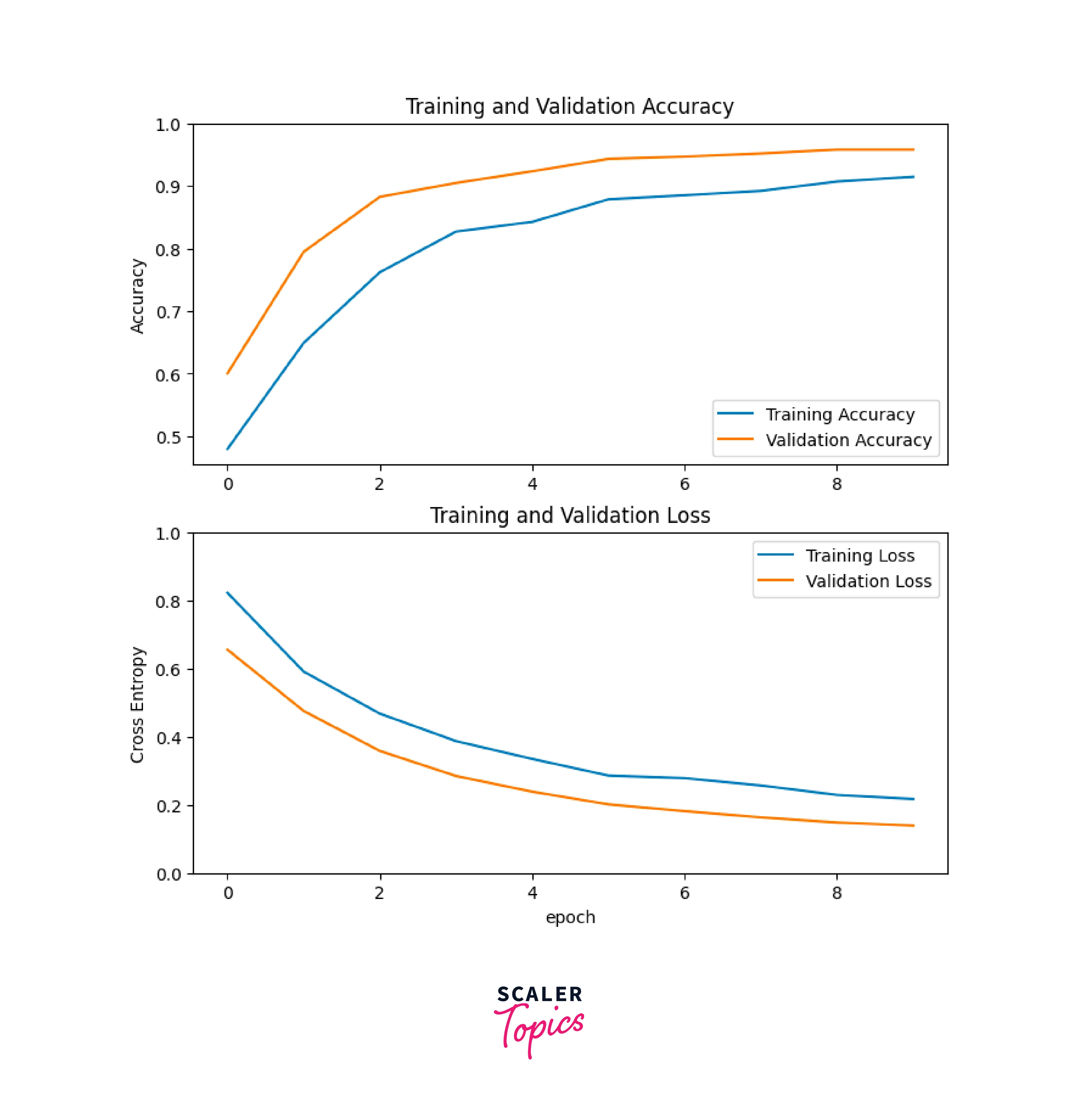

Step 4: Model Evaluation and Analysis

Finally, we evaluate the trained model on the test dataset and analyze the results:
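A sketch of the evaluation step, assuming a compiled model that outputs logits. The helper names `evaluate_model` and `predict_classes` are our own.

```python
import tensorflow as tf

def evaluate_model(model, test_ds):
    """Return the compiled model's loss and metrics on the test set as a dict."""
    return model.evaluate(test_ds, return_dict=True, verbose=0)

def predict_classes(model, images):
    """Return the predicted class index for each image in a batch."""
    logits = model.predict_on_batch(images)
    # The class with the largest logit is the prediction.
    return tf.argmax(logits, axis=-1).numpy()
```

Comparing the output of `predict_classes` with the true labels for a batch of test images shows which classes the model confuses.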

Conclusion

- Transfer learning in CNNs offers a powerful approach to leverage pre-trained models and achieve better performance in image classification tasks.

- By utilizing the knowledge captured by models trained on large-scale datasets, we can save time and resources and still obtain strong results.

- TensorFlow provides a user-friendly framework for implementing transfer learning, making it accessible and efficient.