Transfer Learning for Text Using TensorFlow

Overview

Transfer learning is a technique commonly used in machine learning to leverage knowledge gained from one task and apply it to a different but related task. In the context of text, transfer learning involves utilizing pre-trained models that have been trained on large amounts of text data to extract useful features and representations. These pre-trained models have learned general language patterns and can be fine-tuned or used as feature extractors for specific downstream tasks, such as text classification, sentiment analysis, or named entity recognition.

In this article, we will explore transfer learning tensorflow for text, one of the most popular deep learning frameworks. We will cover the basics of transfer learning for text, pre-trained models commonly used in transfer learning, feature extraction with pre-trained models, and data preparation steps required for transfer learning.

What is Transfer Learning for Text?

Text data is abundant and complex, making it challenging to build effective models from scratch, especially when dealing with limited training data. Transfer learning for text addresses this issue by leveraging the knowledge encoded in pre-trained models and transferring it to new tasks, reducing the need for extensive training from scratch.

By using pre-trained models, you can benefit from the representations and linguistic knowledge** they have learned from vast amounts of text data. This allows you to achieve better performance even with limited training data, as the models have already captured useful features and semantic relationships.

Pre-Trained Models for Transfer Learning

There are several popular pre-trained models for transfer learning tensorflow for text, and they are often based on architectures like Transformers, which have revolutionized natural language processing (NLP). Some widely used pre-trained models include:

-

BERT (Bidirectional Encoder Representations from Transformers):

BERT models capture contextual information by training a deep bidirectional transformer model on a large corpus. These models have achieved state-of-the-art results on various NLP tasks.

-

GPT (Generative Pre-trained Transformer):

GPT models are trained to generate coherent and contextually relevant text. They have a left-to-right architecture, making them suitable for tasks like text generation and completion.

-

RoBERTa (Robustly Optimized BERT):

RoBERTa builds upon BERT and introduces modifications to improve its performance. It uses larger batch sizes, more training data, and removes the next sentence prediction task used in BERT.

Feature Extraction with Pre-Trained Models

One common approach in transfer learning for text is to use pre-trained models as feature extractors. This involves using the pre-trained model to encode input text into fixed-length vectors, which can then be used as input to a downstream task-specific model.

To extract features from pre-trained models like BERT, GPT, or RoBERTa, you can follow these general steps:

-

Tokenization:

Split the input text into individual tokens (words or subwords) and convert them into the corresponding token IDs compatible with the pre-trained model.

-

Input Formatting:

Add special tokens like [CLS] (classification) and [SEP] (separator) to mark the beginning and end of sentences, respectively. Depending on the model, you might need to add additional tokens for specific tasks.

-

Encoding:

Pass the formatted input through the pre-trained model, which will generate contextualized representations for each token.

-

Pooling or Aggregation:

Depending on the task, you may need to aggregate the token representations into a fixed-length representation. Common aggregation methods include mean pooling or using the representation of the [CLS] token.

Data Preparation for Transfer Learning

When preparing data for transfer learning tensorflow, there are a few important steps to consider:

-

Task-Specific Data:

Collect or prepare a dataset specific to your downstream task. This dataset should be labeled and representative of the target task you want to solve (e.g., sentiment analysis, text classification).

-

Splitting the Data:

Divide the dataset into training, validation, and testing sets. The training set is used to fine-tune the pre-trained model, while the validation set helps with hyperparameter tuning and model selection. The testing set is used for the final evaluation.

-

Text Preprocessing:

Perform standard text preprocessing steps, such as lowercasing, removing punctuation, handling special characters, and tokenizing the text into words or subwords.

-

Tokenization and Padding:

Tokenize the text into tokens compatible with the pre-trained model and pad/truncate the sequences to a fixed length to ensure consistent input dimensions.

-

Creating Input Features:

Convert the tokenized and padded sequences into input features suitable for the pre-trained model. This typically involves converting tokens to token IDs, creating attention masks, and segment or type IDs if necessary.

By following these steps, you can prepare your data for transfer learning and utilize pre-trained models effectively for your text-related tasks in TensorFlow.

Transfer Learning for Text using TensorFlow

Step 1: Imports:

Import the necessary libraries for transfer learning tensorflow.

Step 2: Setting up TensorFlow

Next, we set the log level of TensorFlow to ERROR to reduce unnecessary output during training.

Step 3: Data Preparation

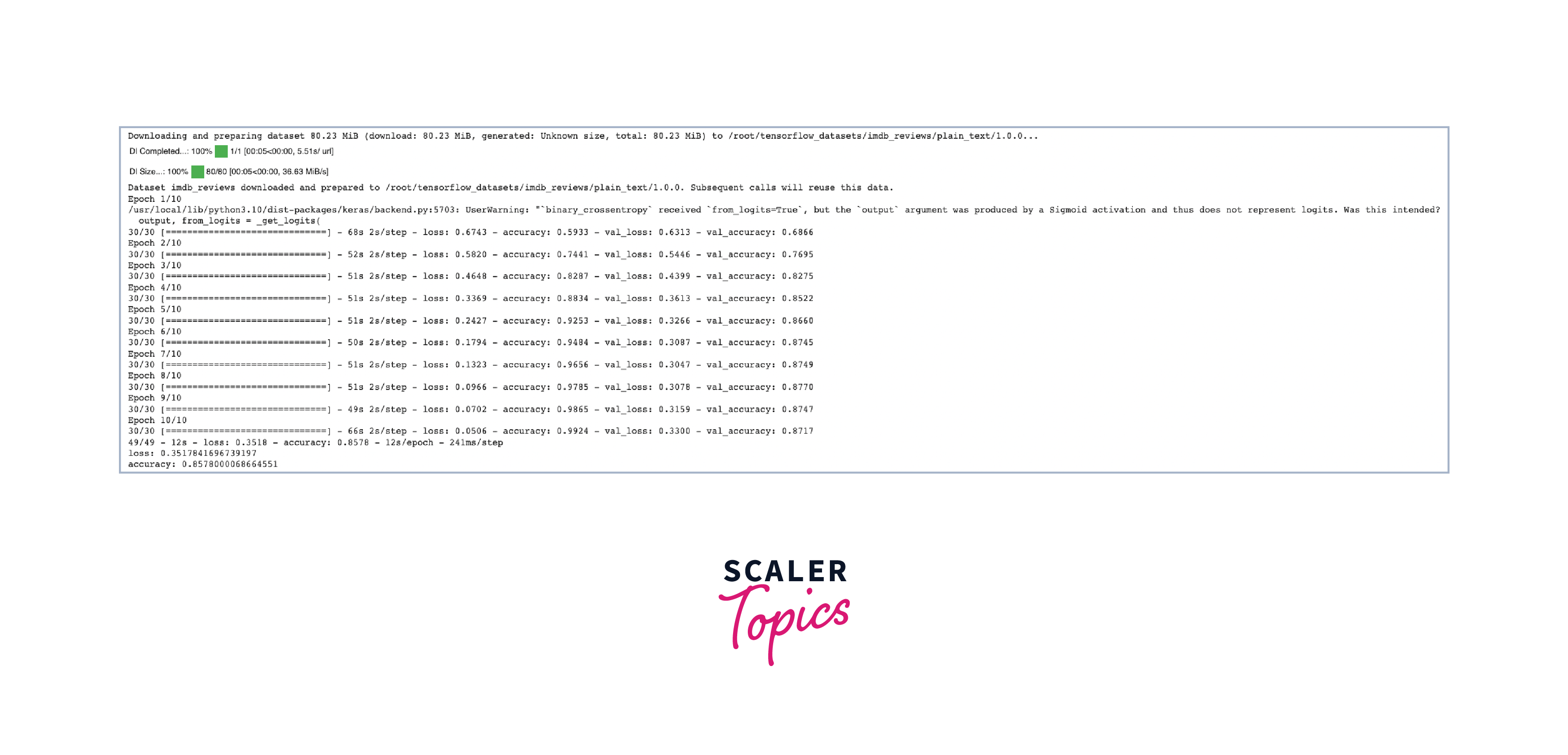

We load the IMDb movie review dataset from TensorFlow Datasets and split it into training, validation, and test sets.

Step 4: Model Training

We define our model architecture using a pre-trained embedding layer from TensorFlow Hub. The embedding layer converts text into dense vectors, which are then passed through a dense layer with ReLU activation and a final dense layer with sigmoid activation for binary classification.

Step 5: Model Evaluation and Analysis

Finally, we evaluate our model on the test set and print the loss and accuracy metrics.

By following these steps, we can easily perform transfer learning for text classification using TensorFlow. This approach allows us to benefit from pre-trained models and achieve good results with less effort.

Conclusion

- Transfer learning tensorflow for text offers a powerful and efficient approach to leverage pre-trained models, such as BERT, for text-related tasks. These models have learned intricate language patterns and semantic relationships from vast amounts of text data.

- By utilizing pre-trained models, developers can save time and resources, as they don't need to train models from scratch. Transfer learning enables better generalization, even with limited task-specific data, resulting in improved performance on downstream tasks.

- TensorFlow provides a rich ecosystem for implementing transfer learning for text. Developers can easily integrate pre-trained models, preprocess text data, and build custom architectures for various NLP tasks.

- The process typically involves tokenization, padding, and encoding text data into input features suitable for the pre-trained models. Fine-tuning or using the pre-trained models as feature extractors allows for efficient adaptation to specific tasks.

- Transfer learning tensorflow for text is a game-changer in natural language processing, enabling developers to build state-of-the-art models for tasks like sentiment analysis, text classification, named entity recognition, and more with relative ease and excellent performance.