Transparent Encryption in HDFS

Overview

In computer systems that handle large amounts of data, security is crucial, and encryption is a common technique for strengthening it. Transparent encryption automates encryption and decryption: users can read and write encrypted data without any manual steps or knowledge of the encryption algorithm. HDFS implements transparent encryption to protect the data it stores. This article explains how HDFS secures data using transparent encryption.

Introduction

With the exponential growth of data in modern organizations, data security is becoming increasingly important. Transparent Encryption is a technique that strengthens the security posture of big data environments, making them more resilient to cyber threats and data breaches. It is implemented at the storage system level, providing a transparent layer of security that protects sensitive data at rest from unauthorized access or theft, with little impact on performance or usability.

What is Transparent Encryption?

Transparent Encryption is a security mechanism that provides data encryption without requiring any changes to the underlying applications or systems. It is usually implemented in storage systems, such as databases or file systems, to protect data at rest. The layer of security it provides is invisible to the user: encryption and decryption are handled automatically by the system, without any action from the user.

Transparent Encryption in HDFS

Introduction

Transparent Encryption in the Hadoop Distributed File System (HDFS) is a security feature that ensures data confidentiality by encrypting data at rest. HDFS is the primary storage layer of Apache Hadoop and the big data platforms built on it, so it requires robust security mechanisms to protect the sensitive information it stores. Transparent Encryption allows clients to encrypt and decrypt data without making any changes to their existing applications or processes.

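Encryption and decryption are performed by the HDFS client, so this encryption is end-to-end: data is already encrypted while it travels over the network to the DataNodes and while it sits on their disks, and HDFS never stores or has access to unencrypted data or unencrypted data encryption keys. Transparent encryption therefore protects data both at rest and in transit. HDFS can additionally encrypt its other network traffic, for example with SSL/TLS for its HTTP interfaces.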

Background

Encryption in a traditional data management software/hardware stack can be performed at several layers, each with its own advantages and disadvantages depending on the specific needs of the user. The layers are,

- Application-level encryption: This encryption provides the highest level of security and flexibility, as the application has full control over what is encrypted. However, it can be challenging to implement, and may not be feasible for existing applications that do not support encryption.

- Database-level encryption: This is similar to application-level encryption in terms of its security and flexibility. Most database vendors offer such encryption options, but there are performance issues associated with this approach, such as slower queries and the inability to encrypt indexes. Database-level encryption may require changes to the database schema, which can be time-consuming and difficult.

- FileSystem-level encryption: This option protects data at the file system level, is easy to deploy, provides high performance, and is transparent to applications. However, it may not be able to accommodate certain application-level policies, such as encrypting data differently for each tenant of a multi-tenant application.

- Disk-level encryption: This option is easy to deploy and has high performance, but it is inflexible and only protects against physical theft of the disks, leaving the data vulnerable to attacks that exploit software vulnerabilities or network-based attacks. Microsoft's BitLocker, which encrypts entire volumes, is an example of this kind of encryption.

HDFS-level encryption falls between database-level and filesystem-level encryption in the stack. This provides a balance between security and performance, allowing Hadoop applications to run transparently on encrypted data.

Use Cases

Transparent encryption is used to encrypt data at rest and is particularly useful for organizations that need to store sensitive data in HDFS, such as financial data, healthcare data, or personally identifiable information (PII). It can also be used in cloud computing to ensure data security. Transparent Encryption can also help organizations meet regulatory compliance requirements such as General Data Protection Regulation (GDPR).

Key Concepts and Architecture

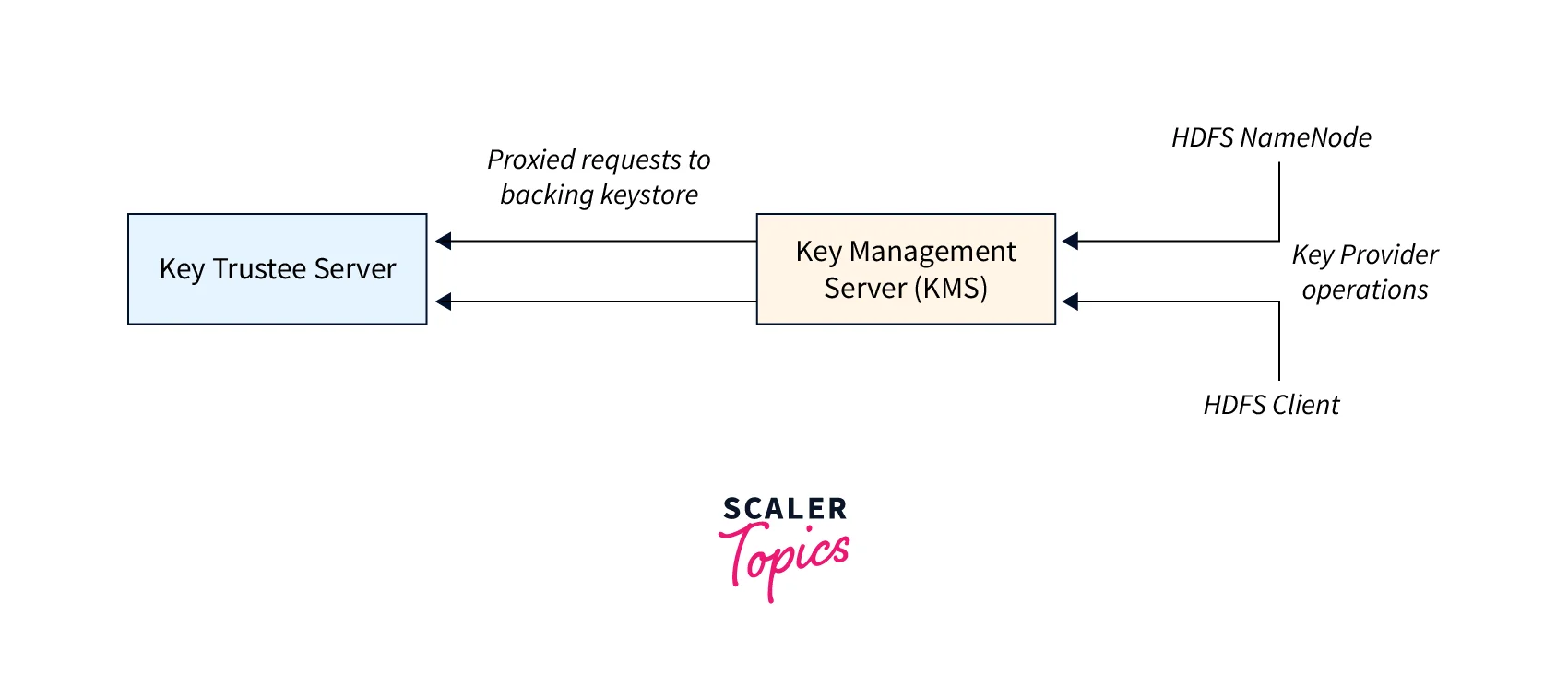

The architecture of Transparent Encryption in HDFS consists of several major components, including the Hadoop Key Management Server (KMS), HDFS encryption zones (EZs), and the Hadoop NameNode.

The Hadoop KMS consists of two components, a key server and a client API, which communicate over HTTP using a REST API. It is responsible for the following major functions,

- Managing the cryptographic keys that are used to encrypt and decrypt data in HDFS.

- Providing a centralized location for key management, where administrators can create, roll, and revoke encryption keys as needed.

The key server identifies each encryption key by a unique key name. When a key is rolled, a new version is created, so a key can have multiple versions, each with its own key material. A key can be retrieved either by its name, which returns the latest version, or by a specific version.
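To make the naming and versioning rules concrete, here is a minimal in-memory sketch. `ToyKeyProvider` and its methods are hypothetical and are not the Hadoop KMS API; only the `<name>@<version>` format of version names (for example `mykey@0`) follows Hadoop's convention.

```python
import secrets
from dataclasses import dataclass, field


def build_version_name(name: str, version: int) -> str:
    # Hadoop names key versions "<key name>@<version number>", e.g. "mykey@0".
    return f"{name}@{version}"


@dataclass
class ToyKeyProvider:
    """Hypothetical in-memory key server; NOT the Hadoop KMS API."""
    versions: dict[str, list[bytes]] = field(default_factory=dict)

    def create_key(self, name: str, bits: int = 128) -> str:
        if name in self.versions:
            raise ValueError(f"key {name!r} already exists")
        if bits not in (128, 192, 256):
            raise ValueError("key length must be 128, 192 or 256 bits")
        self.versions[name] = [secrets.token_bytes(bits // 8)]
        return build_version_name(name, 0)

    def roll_key(self, name: str) -> str:
        # Rolling appends fresh key material as a new version. Older versions
        # are kept so that EDEKs encrypted with them can still be decrypted.
        material = self.versions[name]
        material.append(secrets.token_bytes(len(material[0])))
        return build_version_name(name, len(material) - 1)

    def get_current_key(self, name: str) -> tuple[str, bytes]:
        # Lookup by name returns the latest version.
        material = self.versions[name]
        return build_version_name(name, len(material) - 1), material[-1]

    def get_key_version(self, version_name: str) -> bytes:
        # Lookup of one specific version, e.g. "mykey@0".
        name, _, number = version_name.rpartition("@")
        return self.versions[name][int(number)]
```

For example, `create_key("mykey")` returns `"mykey@0"`, a later `roll_key("mykey")` returns `"mykey@1"`, and `get_current_key("mykey")` then reports `"mykey@1"` while `get_key_version("mykey@0")` still returns the original material.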

To create a new encrypted encryption key (EEK), the KMS performs the following steps,

- It generates a new random key with the same length as the encryption zone key (EZ key), that is, 128, 192, or 256 bits. This random key becomes a data encryption key.

- It retrieves the EZ key from its backing key store. In Cloudera deployments this can be the Key Trustee Server (KT), which stores and manages keys on behalf of the KMS.

- It encrypts the random key with the EZ key to create the EEK and returns the EEK to the caller. The EEK is not stored in the key store; HDFS keeps it in the metadata of the file it belongs to, where it is called the file's encrypted data encryption key (EDEK).

To decrypt an EEK, the KMS confirms that the user has access to the EZ key, decrypts the EEK using that key, and returns the decrypted encryption key.
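The generate and decrypt steps described above can be modeled in a few lines of Python. This is a toy model under stated assumptions: `ToyKMS` and its method names are hypothetical, and a SHA-256 keystream stands in for AES so that the example needs only the standard library. It is not secure and must not be used to protect real data.

```python
import hashlib
import secrets

BLOCK = 16  # bytes, matching the AES block size


def toy_ctr_xor(key: bytes, iv: bytes, data: bytes) -> bytes:
    """XOR data with a CTR-style keystream; applying it twice restores the input.
    Toy stand-in for AES-CTR built from SHA-256. NOT secure."""
    out = bytearray()
    for start in range(0, len(data), BLOCK):
        counter = (start // BLOCK).to_bytes(8, "big")
        pad = hashlib.sha256(key + iv + counter).digest()[:BLOCK]
        out += bytes(a ^ b for a, b in zip(data[start:start + BLOCK], pad))
    return bytes(out)


class ToyKMS:
    """Hypothetical KMS holding EZ keys and, per key, the users allowed to decrypt."""

    def __init__(self) -> None:
        self.ez_keys: dict[str, bytes] = {}
        self.decrypt_acl: dict[str, set[str]] = {}

    def create_key(self, name: str, allowed_users: set[str], bits: int = 128) -> None:
        self.ez_keys[name] = secrets.token_bytes(bits // 8)
        self.decrypt_acl[name] = set(allowed_users)

    def generate_encrypted_key(self, name: str) -> dict:
        ez_key = self.ez_keys[name]                # look up the EZ key
        dek = secrets.token_bytes(len(ez_key))     # random key of the same length
        iv = secrets.token_bytes(BLOCK)
        # Only the encrypted form leaves the KMS, and nothing is stored here.
        return {"key_name": name, "iv": iv, "edek": toy_ctr_xor(ez_key, iv, dek)}

    def decrypt_encrypted_key(self, user: str, eek: dict) -> bytes:
        name = eek["key_name"]
        if user not in self.decrypt_acl[name]:     # authorization check first
            raise PermissionError(f"{user} may not use key {name}")
        return toy_ctr_xor(self.ez_keys[name], eek["iv"], eek["edek"])
```

With `kms.create_key("mykey", allowed_users={"alice"})`, calling `kms.decrypt_encrypted_key("alice", kms.generate_encrypted_key("mykey"))` returns a 16-byte key, while the same call for any other user raises `PermissionError`.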

The DataNode performs the following actions,

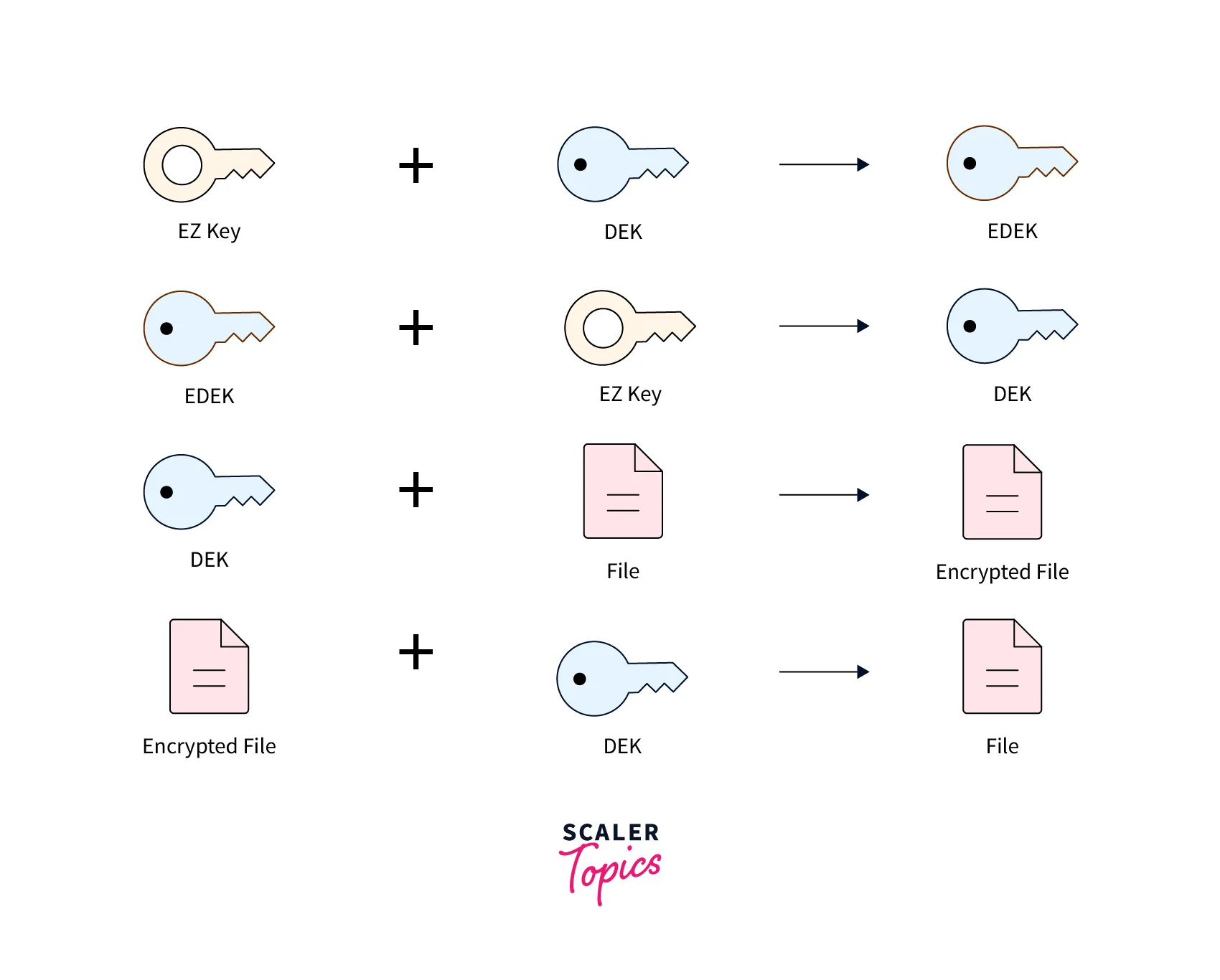

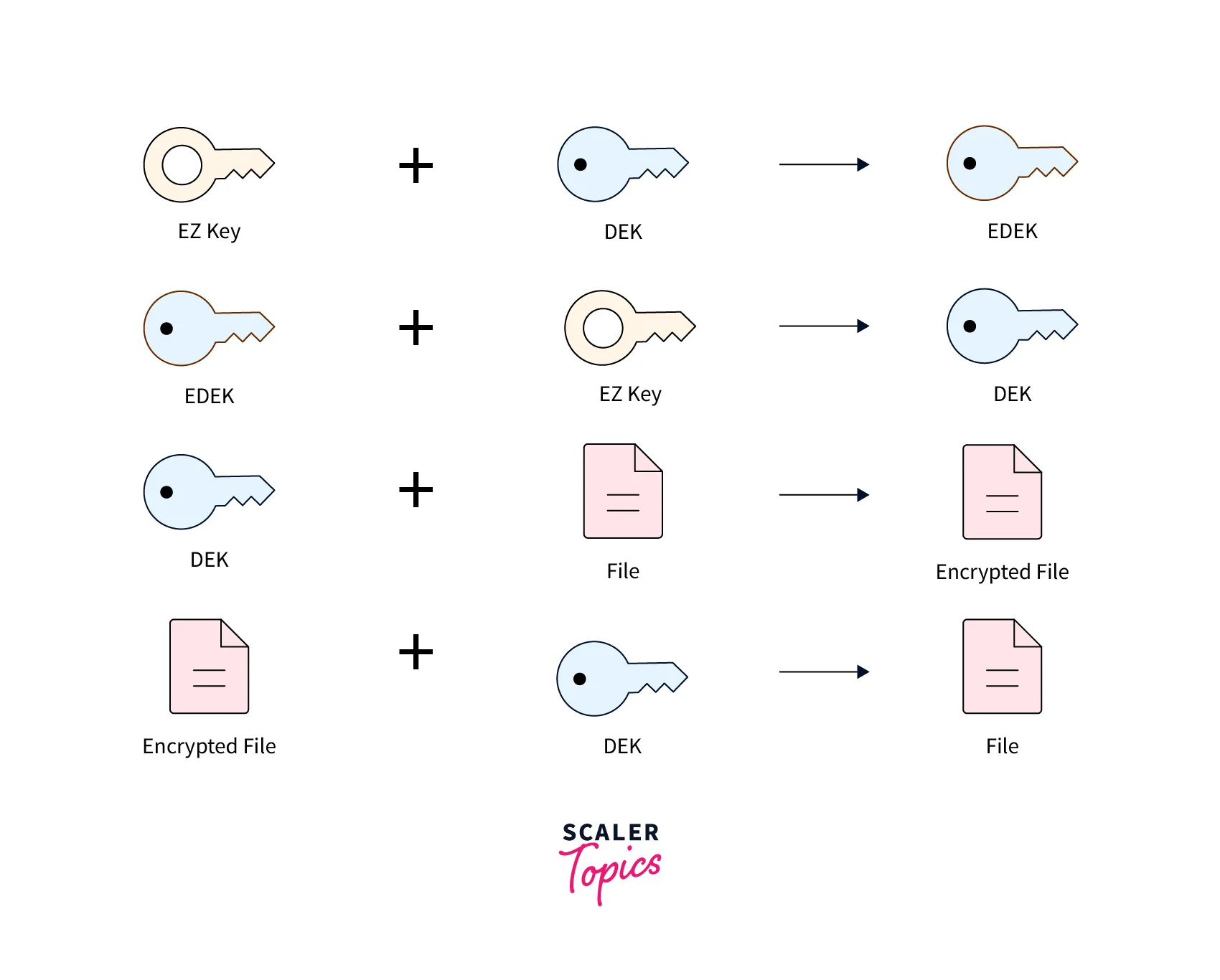

- Generates a unique data encryption key (DEK) to encrypt and decrypt file data. The DEK is further encrypted by the EEK to create the encrypted data encryption keys(EDEK). The EZ key is used to encrypt and decrypt the DEK.

- Generates a unique Initialization Vector (IV), which is used to add randomness to the encryption process, making the encryption more secure.

- Confirm that only end users with proper permissions can access EEK used to encrypt DEKs in the key store.

The HDFS encryption zone (EZ) is a directory whose contents are transparently encrypted on write and decrypted on read. Each EZ is associated with a single EZ key, managed by the KMS and specified when the zone is created. Every file in the zone has its own DEK, and the EZ key is used to encrypt those DEKs, providing robust protection against unauthorized access or theft.

The NameNode is responsible for managing the file system metadata in HDFS. It stores each file's EDEK and IV, along with the version of the EZ key used to encrypt the EDEK, in the file's metadata as an extended attribute; it never stores the unencrypted DEK. The NameNode is also responsible for authenticating users and authorizing their access to files.
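Putting these pieces together, the sketch below models who holds what when a file in a zone is written and read. All names (`namenode_metadata`, `datanode_blocks`, `write_file`, `read_file`) are hypothetical, the KMS is reduced to two functions, and a toy SHA-256 keystream stands in for AES-CTR (not secure). For brevity the toy wraps the DEK with the file's IV, whereas Hadoop derives a separate IV for that step.

```python
import hashlib
import secrets


def toy_ctr_xor(key: bytes, iv: bytes, data: bytes) -> bytes:
    # Toy CTR-style cipher (SHA-256 keystream); stand-in for AES-CTR, NOT secure.
    out = bytearray()
    for start in range(0, len(data), 16):
        pad = hashlib.sha256(key + iv + (start // 16).to_bytes(8, "big")).digest()[:16]
        out += bytes(a ^ b for a, b in zip(data[start:start + 16], pad))
    return bytes(out)


EZ_KEYS = {"mykey@0": secrets.token_bytes(16)}  # held only by the "KMS"
namenode_metadata: dict[str, dict] = {}          # path -> EDEK, IV, key version
datanode_blocks: dict[str, bytes] = {}           # path -> ciphertext only


def kms_generate_edek(key_version: str) -> tuple[bytes, bytes]:
    dek, iv = secrets.token_bytes(16), secrets.token_bytes(16)
    return toy_ctr_xor(EZ_KEYS[key_version], iv, dek), iv


def kms_decrypt_edek(key_version: str, edek: bytes, iv: bytes) -> bytes:
    return toy_ctr_xor(EZ_KEYS[key_version], iv, edek)


def write_file(path: str, data: bytes, key_version: str = "mykey@0") -> None:
    # NameNode: get a fresh EDEK for the new file and persist it with the IV.
    edek, iv = kms_generate_edek(key_version)
    namenode_metadata[path] = {"ez_key_version": key_version, "edek": edek, "iv": iv}
    # Client: have the KMS unwrap the EDEK, encrypt locally, send ciphertext.
    dek = kms_decrypt_edek(key_version, edek, iv)
    datanode_blocks[path] = toy_ctr_xor(dek, iv, data)


def read_file(path: str) -> bytes:
    meta = namenode_metadata[path]  # the NameNode hands out the EDEK and IV
    dek = kms_decrypt_edek(meta["ez_key_version"], meta["edek"], meta["iv"])
    return toy_ctr_xor(dek, meta["iv"], datanode_blocks[path])
```

Note that the plaintext DEK exists only transiently inside `write_file` and `read_file` (the client side); neither the metadata nor the block store ever holds it.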

Configuring HDFS Transparent Encryption

Configuring transparent encryption in HDFS involves the following steps,

- Install and configure the KMS (or a Key Trustee Server backing it) by updating the kms-site.xml file, and make sure it is reachable from the HDFS cluster.

- Configure the HDFS cluster to use the KMS as its key provider by setting the dfs.encryption.key.provider.uri property in the hdfs-site.xml file to the URI of the KMS. In Hadoop 3 this property is deprecated in favor of hadoop.security.key.provider.path in core-site.xml.

- Create an encryption zone key in the KMS. This key is used to encrypt the data encryption keys (DEKs) of the files in an encryption zone. Keys are created with the hadoop key command and zones with the hdfs crypto command, both covered later in this article.

- Use tools like distcp to copy existing data into the new encryption zones.

- Configure the KMS to authenticate clients with Kerberos, protect its connections with SSL/TLS, and authorize access to keys with its access control lists (ACLs).

Kerberos is a network authentication protocol that Hadoop uses for secure communication between its services. KMS ACLs, defined in kms-acls.xml, let the administrator allow or deny specific users or groups operations on keys, much as HDFS ACLs grant read, write, or execute permissions on files and directories.
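For reference, the key provider setting can be generated as a Hadoop *-site.xml fragment. The sketch below uses Python's standard `xml.etree.ElementTree`; the host name is a placeholder, and port 9600 is the Hadoop 3 default KMS port (Hadoop 2 used 16000), so adjust both to your deployment. The `kms://http@<host>:<port>/kms` form is the URI scheme Hadoop uses for KMS key providers.

```python
import xml.etree.ElementTree as ET


def hadoop_site_xml(properties: dict[str, str]) -> str:
    """Render name/value pairs in the <configuration>/<property> layout of *-site.xml."""
    root = ET.Element("configuration")
    for name, value in properties.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value
    ET.indent(root)  # pretty-print; requires Python 3.9+
    return ET.tostring(root, encoding="unicode")


# Placeholder KMS address; replace host and port with your own.
KMS_URI = "kms://http@kms-host.example.com:9600/kms"

# Hadoop 3 style: core-site.xml
core_site = hadoop_site_xml({"hadoop.security.key.provider.path": KMS_URI})
# Older style: hdfs-site.xml (deprecated in Hadoop 3)
hdfs_site = hadoop_site_xml({"dfs.encryption.key.provider.uri": KMS_URI})

if __name__ == "__main__":
    print(core_site)
```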


Considerations for HDFS Transparent Encryption

Distcp Considerations

Running as the superuser

Distcp is a common tool used in Hadoop to replicate data between clusters for backup and disaster recovery purposes. Typically, this is performed by the cluster administrator, who is an HDFS superuser. However, when using HDFS encryption, the process of copying data can become more complicated due to the need for access to encryption keys and the overhead of decrypting and re-encrypting data.

To support this workflow, HDFS provides the virtual path prefix /.reserved/raw/, which gives superusers direct access to the underlying encrypted block data. Superusers can then distcp encrypted data between clusters without having access to the encryption keys, which also avoids the overhead of decrypting and re-encrypting it. The source and destination data will be byte-for-byte identical, which would not be the case if the data were re-encrypted with a new EDEK.

When using /.reserved/raw to distcp encrypted data, it is important to preserve extended attributes with the -px flag, because encrypted file attributes such as the EDEK are exposed as extended attributes within /.reserved/raw and must be preserved to decrypt the files. As a result, if the distcp is initiated at or above the encryption zone root, it will automatically create an encryption zone at the destination if one does not already exist. However, it is recommended that the admin first create identical encryption zones on the destination cluster to avoid potential mishaps.
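A small helper can assemble such a raw copy. This is a sketch: `raw_distcp_argv` and `run` are hypothetical helpers, the NameNode addresses are placeholders, and by default the command is only printed. Passing `dry_run=False` executes it, which must be done as the HDFS superuser on a host with the Hadoop client installed.

```python
import shlex
import subprocess


def raw_distcp_argv(src_nn: str, dst_nn: str, zone_path: str) -> list[str]:
    """distcp of an encryption zone through /.reserved/raw, copying ciphertext as-is.
    -px preserves extended attributes, which carry each file's EDEK."""
    if not zone_path.startswith("/"):
        raise ValueError("zone_path must be an absolute HDFS path")
    src = f"hdfs://{src_nn}/.reserved/raw{zone_path}"
    dst = f"hdfs://{dst_nn}/.reserved/raw{zone_path}"
    return ["hadoop", "distcp", "-px", src, dst]


def run(argv: list[str], dry_run: bool = True) -> None:
    print(shlex.join(argv))
    if not dry_run:
        subprocess.run(argv, check=True)


if __name__ == "__main__":
    run(raw_distcp_argv("src-nn.example.com:8020", "dst-nn.example.com:8020", "/zone"))
```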

Copying into encrypted locations

When performing a copy with distcp, the default behavior is to verify that the data was copied successfully by comparing the checksums provided by the filesystem. However, when copying from an unencrypted or encrypted location into an encrypted location, these checksums will not match, because a new EDEK is used to encrypt the data at the destination and the underlying block data is therefore different. In this case, pass the -skipcrccheck and -update flags to distcp to skip the checksum verification.
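In a script, the choice of distcp flags for copies into an encryption zone can be made explicit. `distcp_argv` below is a hypothetical helper, and the caller is assumed to know whether the destination is inside an encryption zone; Hadoop's guidance is to pass -skipcrccheck together with -update in that case.

```python
def distcp_argv(src: str, dst: str, dst_in_encryption_zone: bool) -> list[str]:
    """Build a normal (non-raw) distcp command line."""
    argv = ["hadoop", "distcp"]
    if dst_in_encryption_zone:
        # The destination gets new EDEKs, so block checksums cannot match the
        # source; skip the comparison (-skipcrccheck is used together with -update).
        argv += ["-update", "-skipcrccheck"]
    return argv + [src, dst]


if __name__ == "__main__":
    print(" ".join(distcp_argv("hdfs://nn:8020/data/sales", "hdfs://nn:8020/zone", True)))
```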

Rename and Trash Considerations

In HDFS, renaming files is a common operation. With transparent encryption, however, HDFS restricts renames across encryption zone boundaries.

- A rename is allowed only if the source and destination paths are in the same encryption zone, or if neither path is in any encryption zone.

- Renaming an encrypted file into an unencrypted directory, an unencrypted file into an encryption zone, or a file from one encryption zone into another is rejected.

Within a zone, a rename only changes metadata: the file keeps its EDEK and its data is not re-encrypted. The restriction exists because all EDEKs in a zone are encrypted with that zone's key. If the zone key is compromised, every affected file must be identified and re-encrypted, which would be very difficult if files created in a zone could be renamed to arbitrary locations. To move data across a zone boundary, copy it instead (for example with distcp); the copy is decrypted on read and, if the destination is an encryption zone, encrypted with a new EDEK.

As for the trash, when a file is deleted in HDFS it is not immediately removed from the file system but moved to a .Trash directory. The file remains there for a configured amount of time before it is permanently deleted, and during this time it can be restored if needed.

- To comply with the rename rule above, each encryption zone has its own .Trash directory under the zone root. For example, after rm /zone/encryptedFile, the file is moved into /zone/.Trash instead of the .Trash directory in the user's home directory.

- Because the file never leaves its zone, it keeps its EDEK and can be restored from the trash without re-encryption.

- When an entire encryption zone is deleted, the zone directory is moved to the .Trash directory in the user's home directory.

- Once a file is removed from the trash, it is permanently deleted along with its EDEK. If it is later restored from a backup by copying it back into the zone, it is encrypted with a new EDEK.
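HDFS allows a rename only when the source and destination are in the same encryption zone or both are outside any zone, and it gives each zone its own .Trash directory. Both rules come down to which zone, if any, contains a path. The sketch below models them with plain path arithmetic; the function names are hypothetical, the set of zone roots is assumed to be known (for example from hdfs crypto -listZones), and the per-user trash subdirectory and /user/<name>/.Trash default follow HDFS's usual trash layout.

```python
from pathlib import PurePosixPath
from typing import Optional


def zone_of(path: str, zones: set[str]) -> Optional[str]:
    """Return the root of the encryption zone containing `path`, or None."""
    p = PurePosixPath(path)
    for candidate in (p, *p.parents):  # most specific directory first
        if str(candidate) in zones:
            return str(candidate)
    return None


def rename_allowed(src: str, dst: str, zones: set[str]) -> bool:
    # Allowed only within one zone, or when neither path is in any zone.
    return zone_of(src, zones) == zone_of(dst, zones)


def trash_root(path: str, user: str, zones: set[str]) -> str:
    """Trash directory that a deleted `path` is moved into."""
    zone = zone_of(str(PurePosixPath(path).parent), zones)
    if zone is not None:
        # Files inside a zone go to that zone's own .Trash and keep their EDEKs.
        return str(PurePosixPath(zone) / ".Trash" / user)
    # Ordinary files, and a deleted zone root itself, go to the user's home trash.
    return f"/user/{user}/.Trash"
```

With zones `/zone`, `/home/alice/zone1`, and `/home/alice/zone2`, moving `/home/alice/zone1/foo` into `/home/alice/zone2` is rejected, while deleting `/zone/encryptedFile` as alice lands it under `/zone/.Trash/alice`.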

Optimizing Performance for HDFS Transparent Encryption

The following ideas can be followed to optimize the performance of HDFS Transparent Encryption,

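- HDFS encrypts file data with AES in CTR mode (the AES/CTR/NoPadding cipher suite). CTR mode needs no padding, can be parallelized, and lets a reader decrypt from any offset in a file. Choose the smallest key length that meets your security requirements, since a 128-bit key needs fewer AES rounds than a 256-bit key.

- A solid-state drive (SSD) can be used to attain high-performance Hadoop clusters.

- Keep the KMS responsive. Every file created in an encryption zone needs a new EDEK, and every time a client opens a file it asks the KMS to decrypt that file's EDEK, so workloads that create or open very many small files generate many KMS requests.

- Hardware acceleration can significantly improve the performance of encryption and decryption, for example AES-NI instructions on modern x86 CPUs used through Hadoop's native OpenSSL-based codec. The hadoop checknative command reports whether the OpenSSL library is available.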
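CTR mode, the mode HDFS uses by default, owes its random-access property to the fact that the keystream for block i depends only on the key, the IV, and i, so a reader can start decrypting at any offset. The sketch below illustrates this with a toy SHA-256 keystream as a stand-in for AES (it hashes the counter rather than adding it to the IV as real CTR does); it is not secure and is meant only to illustrate the mode.

```python
import hashlib
import secrets

BLOCK = 16  # bytes, matching the AES block size


def keystream(key: bytes, iv: bytes, offset: int, length: int) -> bytes:
    """Keystream bytes [offset, offset + length); block i = SHA-256(key||iv||i)[:16].
    Toy stand-in for AES-CTR. NOT secure."""
    first, last = offset // BLOCK, (offset + length - 1) // BLOCK
    out = bytearray()
    for counter in range(first, last + 1):
        out += hashlib.sha256(key + iv + counter.to_bytes(8, "big")).digest()[:BLOCK]
    skip = offset - first * BLOCK
    return bytes(out[skip:skip + length])


def ctr_xor(key: bytes, iv: bytes, data: bytes, offset: int = 0) -> bytes:
    # Encryption and decryption are the same XOR; `offset` is the byte position
    # of data[0] within the file, so any slice can be processed on its own.
    return bytes(a ^ b for a, b in zip(data, keystream(key, iv, offset, len(data))))


if __name__ == "__main__":
    key, iv = secrets.token_bytes(16), secrets.token_bytes(16)
    plaintext = bytes(range(256)) * 4          # 1 KiB of sample data
    ciphertext = ctr_xor(key, iv, plaintext)
    # Decrypt bytes 500..519 without processing anything before them.
    print(ctr_xor(key, iv, ciphertext[500:520], offset=500) == plaintext[500:520])
```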

Key Management Server

Introduction

Key Management Server (KMS) is a critical component of the Hadoop ecosystem that provides a centralized key management service for creating, storing, and managing encryption keys. HDFS relies on it to encrypt sensitive data stored in encryption zones.

Enabling HDFS Encryption Using the Wizard

- Navigate to the Hadoop KMS web interface, which is typically located at the URL: http://<kms-host>:<kms-port>/kms. Next, click on the Key tab, and then click on the New Key button.

- Enter the key details, such as the key name and algorithm, and click Create.

- Next, configure the encryption zone by entering the zone details: the path, the key name, and the EZ key ACL.

- Finally, configure HDFS in the core-site.xml file to use the KMS.

Managing Encryption Keys and Zones

Encryption zones are managed with the hdfs crypto command-line interface, which can create zones, list them, re-encrypt them, and show the encryption information of files. A zone is removed by deleting its root directory. Keys are managed with the hadoop key command or the Hadoop KMS REST API, for example to create a key, roll it to a new version, or delete it. Access to keys is controlled by the KMS ACLs, which are defined in kms-acls.xml.

Configuring the Key Management Server (KMS)

The following data can be configured on the kms-site.xml file,

- hadoop.kms.authentication.type sets the authentication type. It can be simple, where the caller's user name is accepted without a password, or kerberos.

- Set hadoop.kms.authentication.kerberos.keytab and hadoop.kms.authentication.kerberos.principal if Kerberos authentication is used.

- To enable access control lists (ACLs), set hadoop.kms.acls.enabled to true.

- Set hadoop.kms.key.provider.uri to the URI of the key store that backs the KMS, for example a Java KeyStore file. Other key store details, such as its password, can also be configured.

- Set the hadoop.ssl.enabled to true to enable SSL/TLS encryption.
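Before restarting the KMS, a quick script can catch missing Kerberos settings. The sketch below parses kms-site.xml text with the standard library and checks only the properties named in the list above. `load_site_xml` and `check_kms_site` are hypothetical helpers, not Hadoop tools, and treating `simple` as the default authentication type when the property is absent is an assumption of this sketch.

```python
import xml.etree.ElementTree as ET


def load_site_xml(text: str) -> dict[str, str]:
    """Parse Hadoop *-site.xml text into a {name: value} dict."""
    root = ET.fromstring(text)
    return {
        prop.findtext("name", "").strip(): prop.findtext("value", "").strip()
        for prop in root.iter("property")
    }


def check_kms_site(props: dict[str, str]) -> list[str]:
    """Return human-readable problems; an empty list means nothing was found."""
    problems = []
    auth = props.get("hadoop.kms.authentication.type", "simple")  # assumed default
    if auth not in ("simple", "kerberos"):
        problems.append(f"unexpected authentication type: {auth!r}")
    if auth == "kerberos":
        for name in ("hadoop.kms.authentication.kerberos.keytab",
                     "hadoop.kms.authentication.kerberos.principal"):
            if not props.get(name):
                problems.append(f"{name} must be set for Kerberos authentication")
    if not props.get("hadoop.kms.key.provider.uri"):
        problems.append("hadoop.kms.key.provider.uri is not set")
    return problems
```

Feeding it a kms-site.xml that sets the authentication type to kerberos but omits the keytab and principal yields two problems, one for each missing property.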

Securing the Key Management Server (KMS)

- Restrict network access to the KMS server by using firewall rules or network security groups.

- Use secure protocols such as TLS/SSL for client-server communication. This can be configured by generating and deploying X.509 certificates to the KMS and its clients.

- Regularly apply security updates and patches to the operating system and other software running on the KMS server.

- Implement disk encryption and monitor the logs regularly to identify suspicious activity.

Migrating Keys from a Java KeyStore to Cloudera Navigator Key Trustee Server

The ktkeyutil command line tool can be used to migrate keys from a Java KeyStore to Cloudera Navigator Key Trustee Server.

- Export the keys to the PKCS #12 file format.

- Import the exported keys into Key Trustee Server.

- Verify whether the key was successfully imported using the ktadmin command.

- The final step is to update the Hadoop configurations to use the imported key.

PKCS #12 is a binary format for storing cryptographic keys, certificates, and related data. It is commonly used to import and export certificates and private keys securely.

Configuring CDH Services for HDFS Encryption

CDH (Cloudera Distribution for Hadoop) is a popular Hadoop distribution used for big data processing. The following steps can be followed to configure CDH services,

- Install Cloudera Manager, which is a management tool used to install, configure, and monitor CDH services.

- Install and configure KMS for managing encryption keys in HDFS using the Cloudera Manager.

- In the Cloudera Manager, select the HDFS service, go to the Configuration tab, and enable the Enable HDFS Transparent Encryption option to enable Transparent Encryption.

- Create encryption zones on a per-directory basis using the hdfs crypto CLI tool. Provide the path of an empty directory and the name of the key for the zone; the key provider comes from the cluster configuration, and the cipher from the key.

- Set ACL permissions using the hdfs dfs CLI tool. Then we can test encryption by writing and reading data to and from the encryption zone. The distcp tool can also be used to test the replication of encrypted data between clusters.

Troubleshooting HDFS Encryption

Let us look into some common issues you may encounter while using HDFS encryption and ways to troubleshoot them,

- If you are unable to access encrypted data, the key used to encrypt it may be unavailable. Use the Hadoop KeyShell tool (hadoop key list) to check that the zone's key exists in the KMS. Creating a new key does not help in this case: EDEKs encrypted with a lost key can no longer be decrypted, which is why the KMS key store must be backed up.

- If you are experiencing slow performance, you can use hardware acceleration or tune Hadoop configuration parameters such as io.file.buffer.size and dfs.datanode.max.transfer.threads.

- If you are experiencing issues with HDFS encryption, it's important to check the Hadoop logs for error messages.

- If a user does not have the necessary permissions to access an encrypted file, check both the HDFS permissions on the file and the KMS ACLs for the zone's key, using the Hadoop command-line tools or the web UI.

Crypto command-line interface

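createZone

This command creates an encryption zone in HDFS. It is invoked as hdfs crypto -createZone -keyName <keyName> -path <path>, where

- The -keyName argument specifies the name of the encryption key to use for the zone.

- The -path argument specifies the path of the zone in HDFS, which must be an empty directory.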

listZones

This command lists all the encryption zones that have been created in HDFS (hdfs crypto -listZones). It requires superuser permissions.

provisionTrash

This command provisions a trash directory for an encryption zone (hdfs crypto -provisionTrash -path <path>). It does the following,

- It creates a .Trash directory under the root of the specified encryption zone, so that files deleted inside the zone stay inside it.

- It sets permissions on the .Trash directory that let all users move files into it, with the sticky bit set so that users cannot remove each other's files.

Newer Hadoop releases provision this directory automatically when a zone is created, so the command is mainly useful for zones that were created without one.

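getFileEncryptionInfo

This command retrieves the encryption information of a file or directory in HDFS (hdfs crypto -getFileEncryptionInfo -path <path>).

The output includes the cipher suite, the crypto protocol version, the EDEK, the IV, the EZ key name, and the version of the EZ key used to encrypt the EDEK.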

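reencryptZone

This command re-encrypts the EDEKs of all files in an encryption zone with the latest version of the zone's key, typically after the key has been rolled with hadoop key roll. The file data itself is not rewritten, because only the encryption of the DEKs changes. The command requires superuser permissions and does not apply to snapshots. It is invoked as hdfs crypto -reencryptZone <action> -path <zone>, where

- The <action> argument is either -start or -cancel.

- The -path argument specifies the root of the encryption zone.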

listReencryptionStatus

This command lists the re-encryption status of all encryption zones (hdfs crypto -listReencryptionStatus). For each zone, identified by its path, it reports details such as whether re-encryption has been submitted, is processing, or has completed, the EZ key version being used, and the number of files re-encrypted so far. It requires superuser permissions.
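A typical key rotation combines hadoop key roll with hdfs crypto -reencryptZone -start and hdfs crypto -listReencryptionStatus. The sketch below only assembles and prints that sequence; `rotation_steps` and `run_steps` are hypothetical helpers and the key name and zone path are placeholders. Each step should be run as the appropriate user (the key administrator for the roll, the HDFS superuser for re-encryption), and the status should show completion before relying on the new key version.

```python
import shlex
import subprocess


def rotation_steps(key_name: str, zone: str) -> list[tuple[str, list[str]]]:
    """(who runs it, argv) pairs for rolling an EZ key and re-encrypting its zone."""
    return [
        ("key admin", ["hadoop", "key", "roll", key_name]),
        ("HDFS superuser", ["hdfs", "crypto", "-reencryptZone", "-start", "-path", zone]),
        ("HDFS superuser", ["hdfs", "crypto", "-listReencryptionStatus"]),
    ]


def run_steps(steps: list[tuple[str, list[str]]], dry_run: bool = True) -> None:
    for role, argv in steps:
        print(f"# as {role}\n{shlex.join(argv)}")
        if not dry_run:
            # Switching users is left to the operator; this only runs the command.
            subprocess.run(argv, check=True)


if __name__ == "__main__":
    run_steps(rotation_steps("mykey", "/zone"))
```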

Attack vectors

Hardware access exploits

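These exploits assume the attacker has physical access to the hard drives of cluster machines, such as DataNodes and NameNodes. Encrypted block files taken from a DataNode do not expose cleartext by themselves, because the attacker also needs the DEKs. Swap files of processes that handled data encryption keys, however, may contain those keys; this can be mitigated by disabling swap, using encrypted swap, or using mlock to prevent keys from being swapped out. Physical access to the KMS hosts is more dangerous, since they hold the EZ keys, so physical security controls must be in place to prevent unauthorized access to these systems.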

Another potential hardware exploit is the use of hardware-based key loggers or other types of malicious hardware devices that could intercept encryption keys or other sensitive information as it is being entered into a system. To protect against this type of attack, it's important to use secure boot mechanisms and to regularly audit the hardware and software systems for signs of tampering or compromise.

Root Access Exploits

If a malicious actor gains root access to the KMS or the Hadoop cluster, they may be able to access or manipulate the encryption keys, compromising the security of the encrypted data.

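Many root-level exploits cannot be addressed by HDFS itself, because a malicious root user can dump the memory of client processes to obtain DEKs, delegation tokens, and cleartext. The only mitigation is to carefully restrict and monitor root shell access. Other root-level actions, such as reading encrypted block files, recording network traffic, or dumping DataNode or NameNode memory, do not expose cleartext by themselves, because the attacker still lacks the keys needed to decrypt the data.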

HDFS Admin Exploits

An attacker who has compromised HDFS (without root or hdfs user shell access) can read encrypted block files and encryption metadata, including EDEKs, for example through hdfs dfsadmin -fetchImage. Neither is enough to read cleartext, because the EZ keys that decrypt EDEKs are held by the KMS, not by HDFS.

The protection therefore depends on separating duties. If the same administrator or account has access to both the encrypted data and the encryption keys, they can decrypt the data, bypassing the access controls that transparent encryption provides. Keep HDFS administration and key administration separate, and put access controls and monitoring in place to protect the encryption keys and detect any unauthorized access or activity.

Rogue User Exploits

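A rogue user can collect the keys of files they have access to and use them later to decrypt the encrypted data of those files. Because the user already had access to those files, this does not expose new content, but it can be mitigated through periodic key rolling; after a key is rolled, the zone is usually re-encrypted with hdfs crypto -reencryptZone so that the EDEKs of existing files use the new key version. HDFS superusers do not automatically have access to encryption keys: the KMS ACLs decide who may decrypt EDEKs, and a blacklist entry such as hadoop.kms.blacklist.DECRYPT_EEK can prevent the hdfs superuser from decrypting them even if its account is compromised.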

Another potential exploit involves rogue users who gain physical access to the HDFS nodes and can extract the encryption keys or tamper with the encryption process. This could compromise the security of the entire HDFS cluster and allow the rogue user to access sensitive data without proper authorization. It is important to ensure proper physical security measures are in place to prevent such exploits.

Example Usage of Transparent Encryption in HDFS

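The following walk-through creates an encryption zone and writes a file into it,

- Create an encryption key in the Hadoop Key Management Server (KMS) with the hadoop key create command. This key will be used to encrypt the data encryption keys (DEKs) of the files in the encryption zone.

- Create an empty directory and turn it into an encryption zone with hdfs crypto -createZone.

- Verify that the encryption zone was created with hdfs crypto -listZones.

- Create a new file in the encryption zone using the HDFS command-line interface, for example with hdfs dfs -put.

- Verify that the file is encrypted with hdfs crypto -getFileEncryptionInfo or, as the HDFS superuser, by reading its raw bytes under /.reserved/raw.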
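The walk-through can be sketched as a dry-run Python script. The key name, zone path, and local file are hypothetical placeholders. By default the script only prints each command; `run_example(dry_run=False)` would execute them on a host with a configured Hadoop client, where creating the key and the zone need key-admin and HDFS superuser rights and the final raw read must run as the HDFS superuser.

```python
import shlex
import subprocess

KEY = "mykey"              # hypothetical key name
ZONE = "/secure/zone"      # hypothetical zone path (must be an empty directory)
LOCAL_FILE = "report.csv"  # hypothetical local file to upload

EXAMPLE_STEPS = [
    ("Create the EZ key in the KMS", ["hadoop", "key", "create", KEY]),
    ("Create an empty directory for the zone", ["hdfs", "dfs", "-mkdir", "-p", ZONE]),
    ("Turn it into an encryption zone",
     ["hdfs", "crypto", "-createZone", "-keyName", KEY, "-path", ZONE]),
    ("Verify the zone exists", ["hdfs", "crypto", "-listZones"]),
    ("Write a file into the zone", ["hdfs", "dfs", "-put", LOCAL_FILE, ZONE]),
    ("Inspect its encryption info",
     ["hdfs", "crypto", "-getFileEncryptionInfo", "-path", f"{ZONE}/{LOCAL_FILE}"]),
    ("As the HDFS superuser, the raw bytes are ciphertext",
     ["hdfs", "dfs", "-cat", f"/.reserved/raw{ZONE}/{LOCAL_FILE}"]),
]


def run_example(dry_run: bool = True) -> None:
    for description, argv in EXAMPLE_STEPS:
        print(f"# {description}\n{shlex.join(argv)}")
        if not dry_run:
            subprocess.run(argv, check=True)


if __name__ == "__main__":
    run_example()
```

Reading the file normally with hdfs dfs -cat /secure/zone/report.csv, as a user allowed to use the key, would return the original contents, since the client decrypts transparently.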

Conclusion

- HDFS Transparent Encryption provides an effective solution for securing data at rest in Hadoop clusters.

- The architecture of Transparent Encryption in HDFS involves a Key Management Server, encryption zone keys, and data encryption keys.

- The KMS and Hadoop must be configured correctly, together with security features like Kerberos and ACLs.

- The HDFS crypto command-line interface provides several commands for managing encryption zones, re-encrypting EDEKs, and checking encryption status.

- When using Distcp with encrypted data, it's important to preserve extended attributes to ensure that the encrypted data can be decrypted at the destination.

- It is important to remain vigilant against potential attack vectors.