Difference between User Level Threads and Kernel Level Threads

Overview

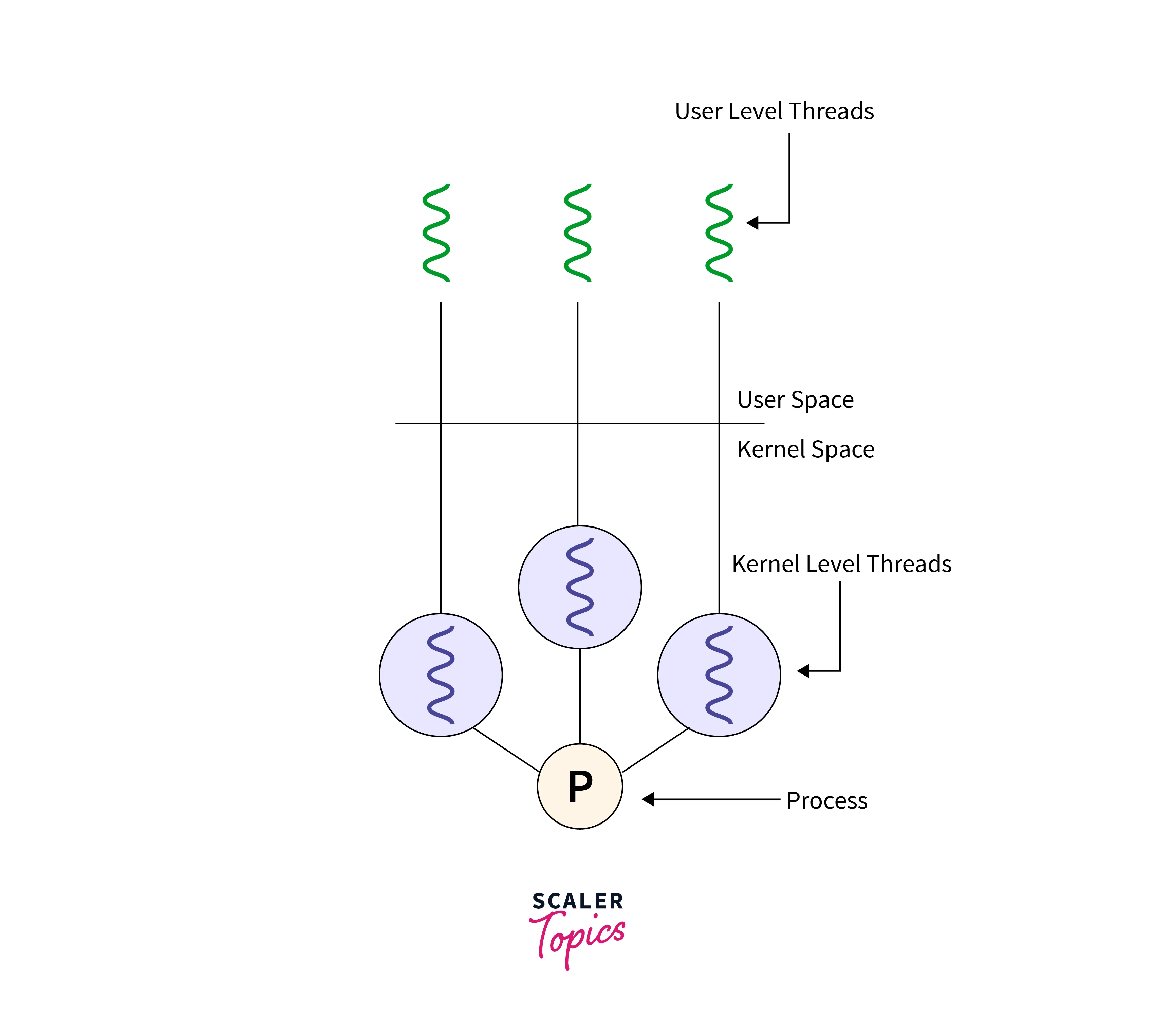

User Level Threads and Kernel Level Threads are two approaches to managing threads in a computer system. User Level Threads (ULTs) are managed entirely by user-level libraries and do not require kernel intervention. They are lightweight and provide fast thread switching, but a single blocking system call can stall every thread in the process. Kernel Level Threads (KLTs), on the other hand, are managed by the operating system's kernel. They offer better concurrency and can take advantage of multi-core processors but have higher overhead due to kernel involvement. A key difference is that the kernel is unaware of ULTs and sees only the process that hosts them, while KLTs rely on the kernel for scheduling and management. Choosing between them depends on specific application requirements and performance considerations.

User-Level Threads

User-level threads (ULTs), also known as green threads or lightweight threads, are a form of threading where thread management is handled entirely by user-level code rather than the operating system kernel. These threads are created and managed by a user-level thread library or a runtime environment without requiring any kernel-level support. In contrast, kernel-level threads (KLTs) rely on the operating system to manage threads.

Thread Creation and Management:

- User-level threads are created and managed entirely by user-level programs, libraries, or runtime environments. They are often implemented using standard programming language features or libraries without requiring any special support from the operating system.

- Each user-level thread is a separate sequence of execution that runs within a single process. Multiple user-level threads can exist within the same process, and they share the same process resources, such as memory and file handles.

Lightweight and Efficient:

- User-level threads are lightweight compared to kernel-level threads. Creating and managing ULTs is typically faster and requires less memory overhead because there is no need to switch to kernel mode.

- ULTs are suitable for scenarios where many threads are needed, and the overhead of creating and managing kernel-level threads would be prohibitive.
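
As a rough illustration of how cheap user-space tasks can be, the sketch below starts many `asyncio` tasks and records which kernel thread each one runs on. It assumes `asyncio` tasks are an acceptable stand-in for user-level threads (they are cooperatively scheduled coroutines rather than a classic ULT library), and the names `worker`, `run_many`, and `demo` are hypothetical helpers introduced for this example.

```python
import asyncio
import threading


async def worker(task_id: int, seen_kernel_threads: set) -> int:
    # Record which kernel thread this task is running on.
    seen_kernel_threads.add(threading.get_native_id())
    # Yield control to the user-space scheduler (the asyncio event loop).
    await asyncio.sleep(0)
    return task_id


async def run_many(n: int):
    seen_kernel_threads = set()
    results = await asyncio.gather(
        *(worker(i, seen_kernel_threads) for i in range(n))
    )
    # Number of finished tasks, number of distinct kernel threads used.
    return len(results), len(seen_kernel_threads)


def demo(n: int = 10_000):
    return asyncio.run(run_many(n))


if __name__ == "__main__":
    completed, kernel_threads = demo()
    print(f"{completed} tasks ran on {kernel_threads} kernel thread(s)")
```

Every task reports the same native thread ID, which is the point: the kernel schedules one thread, and the runtime multiplexes all the tasks on top of it.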

Thread Scheduling:

- ULTs are scheduled by the user-level thread library or runtime environment, not by the operating system kernel. This means that thread scheduling decisions are made based on user-defined policies, priorities, or algorithms.

- Scheduling at the user level allows for more flexibility and customization in how threads are managed. However, because the kernel sees only the kernel thread that hosts them, pure user-level threads run on one core at a time and cannot take full advantage of multi-core processors without additional support.
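
User-space scheduling can be sketched in a few lines. The example below is a minimal round-robin scheduler built on Python generators, where each `yield` is a voluntary switch back to the scheduler. It is an illustration of the idea, not how any particular thread library is implemented, and `task`, `run_round_robin`, and `demo` are hypothetical names.

```python
from collections import deque


def task(name, steps, log):
    """A user-level 'thread': a generator that yields to give up the CPU."""
    for i in range(steps):
        log.append(f"{name}:{i}")
        yield  # cooperative switch back to the scheduler


def run_round_robin(tasks):
    """Run generator tasks in FIFO order until all of them have finished."""
    ready = deque(tasks)
    while ready:
        current = ready.popleft()
        try:
            next(current)       # resume the task until its next yield
        except StopIteration:
            continue            # task finished; do not requeue it
        ready.append(current)   # task yielded; move it to the back


def demo():
    log = []
    run_round_robin([task("A", 2, log), task("B", 3, log)])
    return log


if __name__ == "__main__":
    print(demo())  # ['A:0', 'B:0', 'A:1', 'B:1', 'B:2']
```

Replacing the `deque` with a priority queue would change the policy without involving the kernel at all, which is the flexibility described above. The same design also shows the limitation: a task that never yields keeps the CPU indefinitely.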

Blocking and I/O Operations:

- One challenge with user-level threads is that when a ULT makes a blocking system call, for I/O or any other reason, the kernel suspends the kernel thread that hosts it. Since the kernel is unaware of the other ULTs, every thread within the same process can be blocked along with it.

- To mitigate this issue, user-level thread libraries often implement mechanisms like asynchronous I/O or use a combination of ULTs and KLTs. In such cases, a few KLTs may be used to manage ULTs and handle blocking operations.
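
The blocking problem and one common mitigation can be sketched with `asyncio`, again used here as a stand-in for a user-level thread runtime. In the first scenario a task calls the blocking `time.sleep` directly, so the ticker cannot run until it returns. In the second, the same call is handed to a kernel thread with `asyncio.to_thread` (Python 3.9 or later). The function names and the 0.3 second delay are assumptions chosen for the example.

```python
import asyncio
import time


async def ticker(log, ticks=3, interval=0.01):
    # A second task that only makes progress when the scheduler runs it.
    for i in range(ticks):
        log.append(f"tick {i}")
        await asyncio.sleep(interval)


async def blocking_worker(log, offload):
    log.append("io start")
    if offload:
        # Hand the blocking call to a separate kernel thread.
        await asyncio.to_thread(time.sleep, 0.3)
    else:
        # Blocks the only kernel thread; no other task can run meanwhile.
        time.sleep(0.3)
    log.append("io done")


async def scenario(offload):
    log = []
    await asyncio.gather(blocking_worker(log, offload), ticker(log))
    return log


if __name__ == "__main__":
    print("blocking: ", asyncio.run(scenario(offload=False)))
    print("offloaded:", asyncio.run(scenario(offload=True)))
```

In the blocking run, "io done" is logged before any tick. In the offloaded run, the ticks are normally logged while the kernel thread is still sleeping.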

Portability:

User-level threads can be more portable than kernel-level threads because they don't rely on specific OS threading implementations. Code written using ULTs can often be easily moved between different operating systems or platforms as long as the user-level threading library is available.

Examples

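- Examples of user-level threading include the green threads used by early Java virtual machines, GNU Portable Threads (Pth) for C, and Go's goroutines, which the Go runtime multiplexes onto a smaller number of kernel threads. By contrast, POSIX Threads (Pthreads) on modern Linux and Python's threading module in CPython both map each thread to a kernel-level thread.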

- Many user-level thread libraries provide similar APIs and functionalities to their kernel-level counterparts, making it easier for developers to switch between thread models as needed.

Use Cases

- User-level threads are commonly used in applications that require high concurrency, such as web servers and multimedia applications.

- They are also suitable for scenarios where thread creation and management overhead must be minimized, such as in real-time systems or when implementing custom thread scheduling algorithms.

Kernel-Level Threads

Kernel-level threads (KLTs), also known as native threads or heavyweight threads, are a form of threading where thread management is handled by the operating system kernel. These threads are created and managed by the kernel itself, and they rely on kernel-level support for their operation. In contrast, user-level threads (ULTs) are managed entirely at the user level without kernel intervention.

Thread Creation and Management:

- Kernel-level threads are created and managed by the OS. They are part of the core OS infrastructure, and the kernel tracks each one as a separately schedulable entity. Each process can have multiple KLTs, and they are managed by the kernel scheduler.

- KLTs have their own execution context, including registers, stacks, and program counters, managed by the OS. Threads within the same process still share its address space and open files, so this separation covers execution state rather than memory isolation.
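
One way to see that the kernel knows about each thread is to ask for its kernel-assigned ID. The sketch below relies on the fact that CPython backs every `threading.Thread` with an OS thread, and uses `threading.get_native_id()` (Python 3.8 or later, on platforms that support it). The helper name `spawn_kernel_threads` is hypothetical.

```python
import threading


def spawn_kernel_threads(n: int = 4):
    """Start n threads and collect the kernel-assigned ID of each one."""
    native_ids = []
    ids_lock = threading.Lock()
    # The barrier keeps all threads alive at once so no ID can be reused.
    barrier = threading.Barrier(n)

    def body():
        with ids_lock:
            native_ids.append(threading.get_native_id())
        barrier.wait()

    threads = [threading.Thread(target=body) for _ in range(n)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return native_ids


if __name__ == "__main__":
    print("main thread:", threading.get_native_id())
    print("workers:    ", spawn_kernel_threads())
```

Each worker reports a different ID from the main thread and from the other workers. Note that in standard CPython builds the global interpreter lock still prevents these threads from executing Python bytecode in parallel, so this shows kernel-level thread identity rather than a parallel speedup.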

Scalability and Parallelism:

- KLTs can take full advantage of multi-core processors because they are managed by the kernel, which can schedule them to run on different processor cores simultaneously. This allows for true parallelism and efficient utilization of hardware resources.

- The kernel can also optimize the scheduling of KLTs based on factors like priority and resource availability, making them suitable for performance-critical applications.

Thread Synchronization and Coordination:

- Kernel-level threads can easily synchronize and coordinate their activities using OS-level synchronization primitives such as mutexes, semaphores, and condition variables. This makes it easier to implement complex concurrency control in multi-threaded programs.

- The kernel provides robust mechanisms for handling thread blocking and wakeup events, ensuring that threads can efficiently wait for resources without consuming CPU cycles.
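
The two primitives mentioned above can be sketched with Python's `threading` module, whose locks and condition variables are built on top of primitives provided by the operating system. The first function protects a shared counter with a mutex. The second has a consumer sleep on a condition variable until a producer signals it. Both function names are hypothetical.

```python
import threading


def locked_counter(num_threads=4, increments=10_000):
    """Increment a shared counter from several threads under a mutex."""
    counter = 0
    lock = threading.Lock()

    def body():
        nonlocal counter
        for _ in range(increments):
            with lock:          # only one thread updates at a time
                counter += 1

    threads = [threading.Thread(target=body) for _ in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter


def wait_for_item():
    """Consumer blocks on a condition variable until the producer signals."""
    items, received = [], []
    cond = threading.Condition()

    def consumer():
        with cond:
            while not items:    # re-check the predicate after every wakeup
                cond.wait()     # releases the lock and blocks without spinning
            received.append(items.pop())

    def producer():
        with cond:
            items.append("job")
            cond.notify()

    c = threading.Thread(target=consumer)
    p = threading.Thread(target=producer)
    c.start()
    p.start()
    c.join()
    p.join()
    return received


if __name__ == "__main__":
    print(locked_counter())   # 40000
    print(wait_for_item())    # ['job']
```

The `while not items` loop matters: it makes the consumer correct whether the producer runs before or after it, and it guards against wakeups that arrive when no item is available.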

Overhead and Resource Utilization:

- Creating and managing KLTs typically involves higher overhead compared to ULTs because the kernel is responsible for context switching and thread management. This overhead can be significant when dealing with a large number of threads.

- KLTs consume more memory and system resources due to their heavier nature, which can be a concern in resource-constrained environments.

Portability:

Code written using KLTs may be less portable than code using ULTs because it often relies on specific kernel APIs and threading models. Porting such code between different operating systems may require significant changes.

Examples

Operating systems such as Linux, Windows, macOS, FreeBSD, Solaris, and AIX all support kernel-level threads. Unix-like systems, including Linux, macOS, FreeBSD, and AIX, typically expose them through the POSIX threads (pthreads) API, Windows exposes them through Win32 threads, and Solaris implements them as lightweight processes (LWPs).

Use Cases

- Kernel-level threads are well-suited for applications that require maximum hardware utilization, such as database management systems, virtualization, and high-performance computing.

- They are also preferred when fine-grained control over thread behavior and interactions with the OS is necessary.

Kernel-level threads are managed by the operating system kernel, providing scalability, parallelism, and robust synchronization capabilities. They are suitable for performance-critical applications but come with higher overhead and may be less portable compared to user-level threads.

Difference between User Level Threads and Kernel Level Threads

| Aspect | User-Level Threads (ULTs) | Kernel-Level Threads (KLTs) |
|---|---|---|
| Managed by | User-level libraries or runtimes; the kernel is unaware of them | The operating system kernel |
| Responsiveness | Thread switches occur in user space, avoiding kernel overhead, but one blocking system call can stall every thread in the process | Thread switches go through the kernel, but a blocked thread does not stop the others from running |
| Parallelism | No true parallelism in the pure model, since all ULTs share the kernel thread that hosts them | True parallelism, as the kernel can distribute threads across multiple CPU cores |
| Portability | Highly portable across different operating systems, as they rely on user-level libraries | Less portable, as they are tied to the specific kernel and threading model of the operating system |
| Thread Creation | Quick and lightweight, as it doesn't involve kernel calls | Involves system calls to create and manage threads, which can be slower |
| Thread Management | Managed by user-level libraries, so developers have more control over thread behavior | Managed by the operating system, which abstracts thread management from developers |
| Synchronization | Provided by the thread library in user space, usually without system calls | Uses kernel-backed synchronization primitives, such as mutexes and condition variables |
| Fault Tolerance | A blocking call, or a thread that never yields, can stall all threads in the process | The kernel can preempt a misbehaving thread and keep scheduling the others, although a crash in one thread still usually terminates the whole process |
| Scalability | Well-suited for applications with a very large number of threads, but a pure ULT model uses only one CPU core at a time | Can efficiently utilize multiple CPU cores, making them suitable for highly parallel workloads, though each thread consumes kernel resources |
| Context Switching Overhead | Generally lower, as switching is handled in user space | Higher, as switching involves kernel transitions |
| Examples | Early Java green threads, GNU Portable Threads (Pth), and Go goroutines (multiplexed onto kernel threads by the Go runtime) | Pthreads on Linux, Win32 threads, and most other native threading models in modern operating systems |

FAQs

Q. Can User Level Threads work on a single-core processor?

A. Yes, User Level Threads can work on a single-core processor as they are managed by the application and not dependent on the number of cores.

Q. Do Kernel Level Threads require kernel intervention for context switching?

A. Yes, Kernel Level Threads rely on the kernel for context switching, which can be slower because each switch involves a transition into kernel mode.

Q. Which thread type is better for I/O-bound tasks?

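A. User Level Threads are often preferred for I/O-bound tasks when the runtime pairs them with non-blocking or asynchronous I/O, because many waiting tasks can then share a few kernel threads. With ordinary blocking I/O, Kernel Level Threads are the safer choice, since one blocked thread does not stall the others.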

Q. Which thread type is suitable for CPU-bound tasks?

A. Kernel Level Threads are better suited for CPU-bound tasks, as they can take advantage of multiple cores for parallel execution.

Conclusion

- User-level threads are managed by the application, while kernel-level threads are controlled by the operating system.

- User-level threads are efficient but less robust, while kernel-level threads offer preemptive scheduling and independent blocking.

- User-level threads have lower creation overhead compared to kernel-level threads.

- User-level threads can lead to process-wide blocking, unlike kernel-level threads.

- Kernel-level threads support better parallelism across CPU cores.

- User-level threads are more portable across different OSs; kernel-level threads rely on OS-specific features.