Web Scraping Using R Programming

Overview

Web scraping is a powerful technique for extracting data from websites automatically. In this article, we will explore how web scraping can be done using the R programming language, known for its data analysis and statistical computing capabilities. Instead of copying and pasting data by hand, a scraper retrieves pages and pulls out the required values programmatically, saving time and effort. R's popularity among data analysts and statisticians makes it a natural choice for web scraping, since the same environment offers a wide range of packages for data manipulation and analysis.

In this article, we will delve into the fundamentals of web scraping in R, covering the essential tools, techniques, and best practices to effectively gather valuable data from online sources.

Prerequisites

Before diving into web scraping using R, there are a few prerequisites you need to have in place to ensure a smooth and successful scraping process. Below are the essential prerequisites:

- R Programming Language: First and foremost, you should have R installed on your system. You can download and install the latest version of R from CRAN, the official R website (https://cran.r-project.org). Additionally, you might want to use RStudio, a popular integrated development environment (IDE) for R, which provides a more user-friendly interface for coding and data analysis.

- R Packages for Web Scraping: R offers several packages that facilitate web scraping. The most commonly used packages are:

  - rvest Package: The rvest package is one of the most popular choices for web scraping in R. It provides easy-to-use functions for navigating and extracting data from HTML web pages. You can install it by running install.packages("rvest") in R.

  - httr Package: The httr package is useful for making HTTP requests and handling web interactions. It's often used in conjunction with rvest for accessing web pages and retrieving data. You can install it with install.packages("httr").

- Basic Knowledge of HTML and CSS: Understanding the structure of the web pages you want to scrape is crucial. HTML and CSS are the building blocks of web pages, and having a basic understanding of these languages will help you identify the elements you want to extract from a webpage.

- Permission and Ethics: Web scraping should always be done with permission from the website owners. In addition, read the website's terms of service and its robots.txt file, which may set guidelines and restrictions for scraping activities.

- Internet Connectivity: Since web scraping involves accessing online resources, you need a stable internet connection to download web pages and extract data.

- Data Storage and Management: Before scraping, consider how you will store and manage the data you extract. It could be saved in a CSV file, a database, or any other storage format that suits your analysis requirements.
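As a small illustration of the storage step, the sketch below writes a data frame to a CSV file and reads it back using base R's write.csv() and read.csv(). The data frame and its values are made-up placeholders, and a temporary file stands in for wherever you would actually keep your results.

```r
# Made-up placeholder data standing in for scraped results
scraped <- data.frame(
  title  = c("Item A", "Item B"),
  rating = c(4.5, 3.9),
  stringsAsFactors = FALSE
)

# Write to a CSV file; tempfile() keeps the example self-contained
csv_path <- tempfile(fileext = ".csv")
write.csv(scraped, csv_path, row.names = FALSE)

# Read it back to confirm the round trip preserved the data
restored <- read.csv(csv_path, stringsAsFactors = FALSE)
print(restored)
```

Writing with row.names = FALSE avoids an extra unnamed index column appearing when the file is read back.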

By fulfilling these prerequisites, you'll be ready to embark on your web scraping journey using R. In the next sections of this article, we will explore the fundamental concepts of web scraping in R and demonstrate practical examples to illustrate the process.

What is Web Scraping?

Web scraping is a data extraction technique that allows us to automatically gather information from websites on the internet. Instead of manually copying and pasting data from web pages, web scraping uses specialized tools and scripts to navigate through web pages, locate specific data elements, and extract the desired information. This process enables us to efficiently collect large amounts of data from multiple web sources.

Why Web Scraping?

Web scraping has become increasingly important in various domains due to the vast amount of data available on the internet. It is a valuable tool for data analysts, researchers, and businesses seeking to gain insights, make data-driven decisions, and conduct market research. With web scraping, you can:

- Data Collection: Gather large datasets quickly for analysis and research.

- Competitor Analysis: Extract data from competitor websites to understand their strategies and offerings.

- Price Monitoring: Monitor prices of products on e-commerce websites to inform pricing strategies and track market trends.

- Content Aggregation: Curate content from various websites to create a comprehensive database.

- Sentiment Analysis: Scrape social media platforms for sentiment analysis and customer feedback.

Web Scraping in R

R is a powerful programming language for statistical computing and data analysis, and its packages make web scraping a straightforward and efficient task. Widely used R packages for web scraping include:

- rvest Package: The rvest package, developed by Hadley Wickham, is a popular choice for web scraping in R. It provides functions to extract data from HTML web pages using CSS selectors (or XPath expressions).

- httr Package: The httr package is another useful package that facilitates web scraping in R by letting you interact with web servers via HTTP requests. It is often used in combination with rvest.

- Rcrawler Package: The Rcrawler package is a web crawling and scraping tool for R. It can traverse a website, extract data from the pages it visits, and save the results in a structured format for further analysis. With a headless browser it can also handle dynamic content rendered by JavaScript, and it offers features for authentication, session management, and polite crawling, making it a versatile choice for larger scraping projects in R.

Web Scraping Using "rvest"

Web scraping using the rvest package in R is a straightforward and powerful method to extract data from HTML web pages. Developed by Hadley Wickham, rvest provides a collection of functions that make web scraping tasks more accessible and efficient. In this section, we will walk through all the necessary steps to perform web scraping using rvest.

Step - 1: Install and Load the "rvest" Package

To begin, install the rvest package if you haven't already, then load it: open R or RStudio and run install.packages("rvest") followed by library(rvest).

Before we start, ensure you have an internet connection, as we will be fetching data from the IMDb website.
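A common R idiom is to install a package only when it is missing, so the script can be re-run without reinstalling each time. This is a general pattern, not something rvest requires.

```r
# Install rvest only if it is not already available, then load it
if (!requireNamespace("rvest", quietly = TRUE)) {
  install.packages("rvest")
}
library(rvest)

# Report the installed version; functions such as html_elements()
# and html_text2() were introduced in rvest 1.0.0
packageVersion("rvest")
```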

Step - 2: Fetch the Web Page

After loading the rvest package, fetch the web page you want to scrape with the read_html() function. This function (from the xml2 package, re-exported by rvest) downloads and parses the HTML content of the specified URL and returns it as an R object, which we store in a variable named webpage.
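read_html() accepts a URL, a path to a local file, or a string of literal HTML. To keep this sketch runnable without network access, it saves a tiny placeholder page to a temporary file and reads that; for the live page you would pass the page's URL instead. The page content is invented for illustration.

```r
library(rvest)

# A tiny placeholder page; on a real run you would pass the page's URL
page_source <- '<html><head><title>Sample chart</title></head>
<body><h1>Top films</h1></body></html>'

html_path <- tempfile(fileext = ".html")
writeLines(page_source, html_path)

# read_html() parses the document into an xml_document object
webpage <- read_html(html_path)

class(webpage)
html_text2(html_element(webpage, "title"))
```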

Step - 3: Inspect the Web Page's Structure

To extract data from a web page, you need to understand its structure. You can use web browsers' developer tools (usually accessible by right-clicking on the web page and selecting Inspect or Inspect Element) to inspect the HTML elements you want to extract.
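Browser developer tools are the main way to inspect a page, but you can also get a quick overview from R. The sketch below lists the distinct class attributes used on a small inline page built with rvest's minimal_html(); the markup is a made-up example, and the same calls work on a document returned by read_html().

```r
library(rvest)

# Made-up markup standing in for a downloaded page
page <- minimal_html('
  <table>
    <tr><td class="titleColumn"><a href="#">Film One</a></td>
        <td class="imdbRating"><strong>9.0</strong></td></tr>
  </table>')

# Every element that has a class attribute, then the unique class strings
classed <- html_elements(page, "[class]")
page_classes <- unique(html_attr(classed, "class"))
page_classes
```

Scanning this list is a quick way to spot candidate class names before writing selectors.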

Step - 4: Extract Data Using CSS Selectors

Once you've identified the elements to scrape, use CSS selectors with the html_nodes() and html_text() functions to extract the data. html_nodes() selects the HTML elements that match a CSS selector, and html_text() extracts their text content. In rvest 1.0.0 and later, html_nodes() is superseded by html_elements(), which works the same way; the older name still works.

In this example, we use CSS selectors to target specific HTML elements: .titleColumn a for the movie titles and .imdbRating strong for the movie ratings. Websites change their markup over time, so confirm that selectors like these still match the live page in your browser's developer tools before relying on them. The %>% pipe operator, which comes from the magrittr package and is re-exported by rvest (and by dplyr), chains the functions together.
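The sketch below applies the two selectors described above to a small inline page that imitates that markup. The film names and ratings are placeholders, not IMDb data; against a live page you would run the same pipeline on the webpage object from Step 2, after confirming the selectors still match.

```r
library(rvest)

# Placeholder markup imitating the structure the selectors expect
webpage <- minimal_html('
  <table>
    <tr><td class="titleColumn"><a href="#">Film One</a></td>
        <td class="imdbRating"><strong>9.2</strong></td></tr>
    <tr><td class="titleColumn"><a href="#">Film Two</a></td>
        <td class="imdbRating"><strong>9.0</strong></td></tr>
  </table>')

# ".titleColumn a": <a> elements inside elements with class titleColumn
titles <- webpage %>%
  html_elements(".titleColumn a") %>%
  html_text2()

# ".imdbRating strong": <strong> elements inside elements with class imdbRating
ratings <- webpage %>%
  html_elements(".imdbRating strong") %>%
  html_text2()

titles
ratings
```

html_text2() is used here because it normalises whitespace the way a browser would display it; html_text() returns the raw text instead.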

Step - 5: Combine Data into a Data Frame and Perform Data Cleaning (If Needed)

After extracting the data, you may need to perform data cleaning and transformation to make it suitable for analysis. Use R's data manipulation and transformation functions to clean and process the scraped data as per your requirements.

We create a data frame top_movies with two columns: Title and Rating. We extract the top 10 movie titles and ratings using [1:10].
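A sketch of Steps 5 and 6, using placeholder vectors shaped like the output of the previous step (redefined here so the block runs on its own). It uses head() rather than [1:10], because indexing past the end of a shorter vector silently pads it with NA, and converts the rating text to numbers with as.numeric().

```r
# Placeholder vectors, as produced by html_text2() in Step 4
titles  <- c("Film One", "Film Two", "Film Three")
ratings <- c("9.2", "9.0", "8.9")

# head(x, 10) returns at most 10 items; x[1:10] would pad with NA
top_movies <- data.frame(
  Title  = head(titles, 10),
  Rating = as.numeric(head(ratings, 10)),
  stringsAsFactors = FALSE
)

# Step 6: print the result
print(top_movies)
```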

Step - 6: Display the Extracted Data

This step prints the top_movies data frame, which contains the titles and ratings of the top 10 movies from IMDb.


The top_movies data frame contains the titles and ratings of the top 10 movies on IMDb, which were extracted using the rvest package in R.

Web Scraping Using Rcrawler

When it comes to web scraping in R, the Rcrawler package deserves special attention. Developed by Salim Khalil, it provides a comprehensive set of tools for crawling websites and scraping their content. Rcrawler is designed to handle more complex scraping scenarios, making it a valuable asset for data analysts and researchers dealing with intricate website structures and large-scale data extraction.

Key Features of Rcrawler in Web Scraping Using R

Rcrawler is a web scraping and crawling package for R that simplifies the process of extracting data from websites. It offers several key features that make it a strong choice for web scraping tasks in R:

- Advanced Web Crawling: Rcrawler can efficiently crawl multiple pages of a website, making it suitable for scraping large datasets from complex site structures.

- JavaScript Rendering: Using a headless browser, the package can handle websites with dynamic content loaded via JavaScript, enabling data extraction from pages that rely heavily on client-side rendering.

- Parallel Crawling: Rcrawler supports simultaneous crawling of multiple URLs, speeding up tasks that involve a large number of web pages.

- Authentication and Session Management: The package provides tools to handle website authentication and session management, enabling access to data in login-required areas.

- Respectful Web Scraping: Rcrawler supports configurable delays between requests and can follow robots.txt rules, in line with web scraping etiquette and avoiding excessive traffic to websites.

- Data Storage Options: Rcrawler can save downloaded pages to disk and returns its results as R objects, which you can then write to CSV files or databases for further analysis.
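To show how several of these features map onto arguments, here is a hedged sketch of a call to Rcrawler(). The argument names (Website, no_cores, no_conn, MaxDepth, RequestsDelay, Obeyrobots) follow the package documentation as we understand it, so check ?Rcrawler for your installed version; the URL is a placeholder and crawl_politely() is a hypothetical wrapper. Because the call sits inside a function, defining it crawls nothing.

```r
# Hypothetical wrapper around Rcrawler(); nothing is crawled until it is called.
# Argument names are our reading of ?Rcrawler; verify them for your version.
crawl_politely <- function(site = "https://example.com") {
  Rcrawler::Rcrawler(
    Website       = site,
    no_cores      = 2,     # parallel crawling: number of worker processes
    no_conn       = 2,     # concurrent connections per worker
    MaxDepth      = 1,     # follow links at most one level from the start page
    RequestsDelay = 1,     # pause, in seconds, between rounds of requests
    Obeyrobots    = TRUE   # skip URLs disallowed by the site's robots.txt
  )
  # Results are written to the global environment (INDEX, and DATA when
  # extraction patterns are supplied) rather than returned
  invisible(NULL)
}
```

Calling the package with Rcrawler:: inside the function means the definition itself does not require Rcrawler to be loaded.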

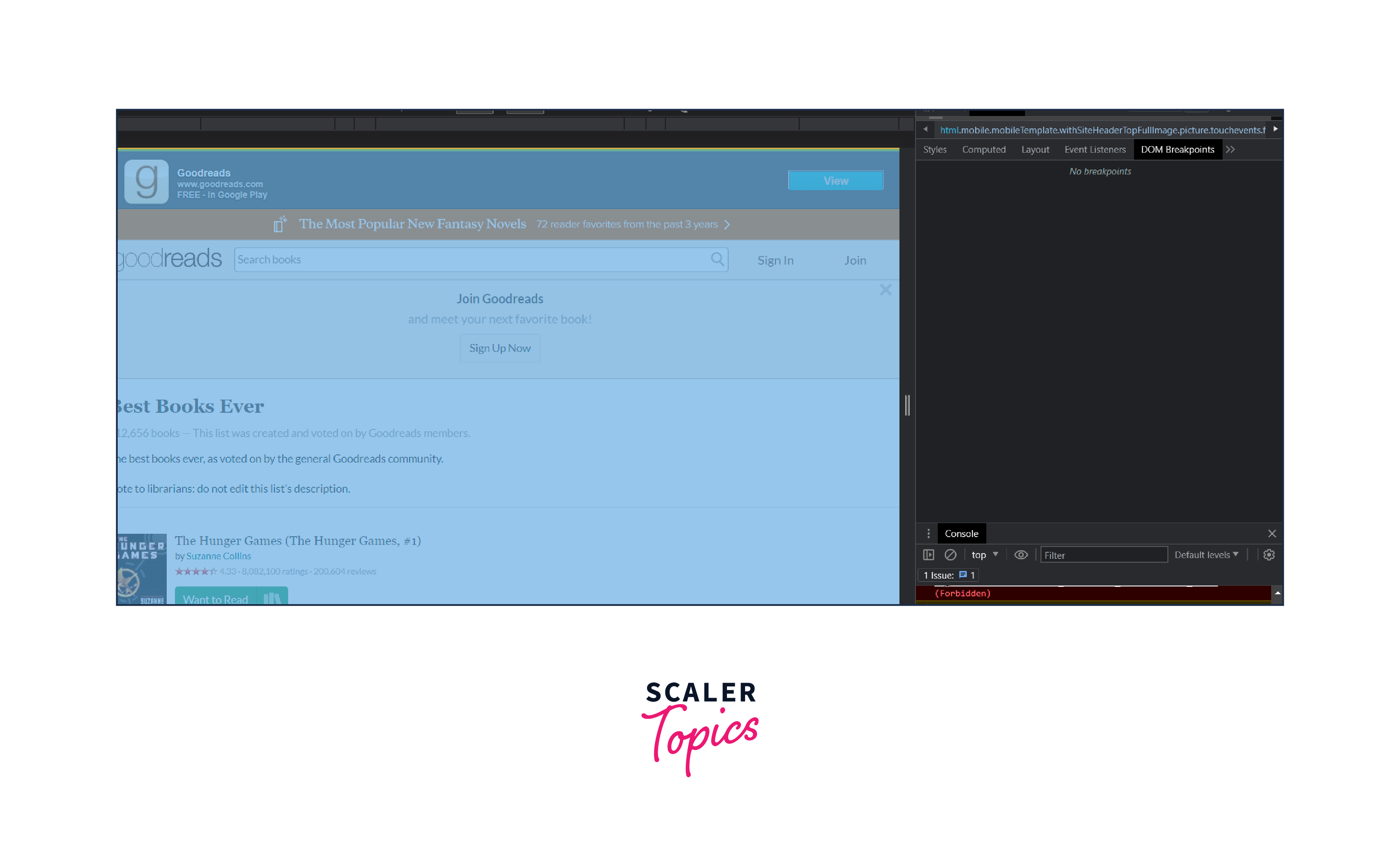

Let's walk through a real example of web scraping using the Rcrawler package in R. We will extract information about books from Goodreads (www.goodreads.com), a popular book-review and cataloguing website. Specifically, we'll scrape the title, author, and rating of the top 5 books in the "Best Books Ever" list.

Step - 1: Install and Load the Rcrawler Package

The install.packages() function downloads and installs the package, while library(Rcrawler) loads it into your R session, making its functions available for use.

Step - 2: Set Up Configuration for Web Scraping

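Next, we need to decide what to scrape: the target URL, how much of the site to visit, and the elements we want to extract. In this example, we want the top 5 books from the first page of the Best Books Ever list on Goodreads.

In Rcrawler, these settings are passed as function arguments rather than as a separate configuration object. For a crawl with Rcrawler(), MaxDepth limits how many levels of links are followed from the start page, and RequestsDelay adds a pause (in seconds) between rounds of requests so the server is not overwhelmed; a delay of 1 second is a reasonable starting point.

We define three fields to extract: title, author, and rating. Each is located with an XPath expression, and the expressions are paired with field names (the ExtractXpathPat and PatternsNames arguments of Rcrawler(), or XpathPatterns and PatternsName for the single-page ContentScraper() function).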

Step - 3: Start Web Scraping

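With the settings decided, we can start the scraping process using the Rcrawler package.

Rcrawler() takes the start URL (its Website argument) together with the other settings, downloads the pages, and applies the extraction patterns to each one. Rather than returning its results, it creates objects in the global environment: INDEX, a data frame describing the crawled pages, and DATA, a list holding the scraped content.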
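Because this example only needs the first page of a single list, a simpler route than a full crawl is ContentScraper(), which fetches one URL and applies extraction patterns to it. The sketch below assumes the list lives at https://www.goodreads.com/list/show/1.Best_Books_Ever and that the XPath expressions shown match the page's current markup; both are assumptions to verify in your browser's developer tools. scrape_best_books() is a hypothetical helper, and nothing is downloaded until you call it.

```r
# Hypothetical helper: scrape title, author and rating text from one list page
scrape_best_books <- function(
    url = "https://www.goodreads.com/list/show/1.Best_Books_Ever") {
  # These XPath patterns are assumptions about Goodreads' markup; verify first
  Rcrawler::ContentScraper(
    Url            = url,
    XpathPatterns  = c(
      "//a[contains(@class, 'bookTitle')]/span",
      "//a[contains(@class, 'authorName')]/span",
      "//span[contains(@class, 'minirating')]"
    ),
    PatternsName   = c("title", "author", "rating"),
    ManyPerPattern = TRUE   # keep every match, not just the first one
  )
}

# Usage (performs a real request):
# scraped <- scrape_best_books()
```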

Step - 4: Extract and Process the Data

Once the scraping is complete, we can process the data into a more structured format, such as a data frame.

The scraped values are held in the DATA list (or in the list returned by ContentScraper()). We take the title, author, and rating fields from it and combine them into the book_data data frame, keeping the first five rows.
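The sketch below turns a named list of character vectors (the shape we assume the scraper returns, one vector per pattern name) into the book_data data frame, and pulls the average rating out of the rating text with a regular expression. to_book_data() is a hypothetical helper, and the sample strings are invented to mimic an "x.xx avg rating" wording; adjust the pattern to whatever text your selectors actually return.

```r
# Hypothetical helper: build book_data from a named list holding
# character vectors called title, author and rating
to_book_data <- function(scraped, n = 5) {
  fields <- scraped[c("title", "author", "rating")]
  # Stop early if a selector matched a different number of items
  if (length(unique(lengths(fields))) != 1) {
    stop("title, author and rating have different lengths; check the selectors")
  }
  # Extract the first decimal number, e.g. "4.25" from "4.25 avg rating ..."
  match_pos <- regexpr("[0-9]+\\.[0-9]+", fields$rating)
  avg <- rep(NA_character_, length(fields$rating))
  avg[match_pos != -1] <- regmatches(fields$rating, match_pos)

  data.frame(
    Title  = head(fields$title, n),
    Author = head(fields$author, n),
    Rating = as.numeric(head(avg, n)),
    stringsAsFactors = FALSE
  )
}

# Invented sample input in the assumed shape
sample_scrape <- list(
  title  = c("Book A", "Book B"),
  author = c("Author A", "Author B"),
  rating = c("4.25 avg rating, 1,000 ratings", "3.90 avg rating, 500 ratings")
)

book_data <- to_book_data(sample_scrape)
print(book_data)
```

Filling unmatched ratings with NA, instead of using regmatches() directly, keeps every rating aligned with its title even when some rating text lacks a number.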

Step - 5: Display the Extracted Data

Finally, let's display the extracted data:


The book_data data frame contains the title, author, and rating of the top 5 books in the Best Books Ever list on Goodreads, scraped using the Rcrawler package in R.

Web Scraping Real-life Example

Web scraping using R is a powerful technique that can be applied to various real-life scenarios. In this example, we'll demonstrate how to scrape weather data from a website using the rvest package in R.

Step - 1: Installing and Loading Required Packages

Before we begin, make sure you have the rvest and xml2 packages installed and loaded in your R environment. (xml2 is installed automatically as a dependency of rvest.)

Step - 2: Fetching the Web Page

For this example, we'll scrape weather data from this website for a specific location. Let's say we want to extract the current temperature and weather description for New York City.

Step - 3: Inspecting the Web Page's Structure

To extract data accurately, we need to understand the web page's HTML structure. Using web browser developer tools or the html_nodes() function, we can inspect the elements we want to scrape.

Step - 4: Extracting Weather Data

Based on the inspection, we found the appropriate CSS selectors for the current temperature and weather description on the page.
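Because the selectors depend on the particular site, the sketch below uses a made-up page with the hypothetical classes .current-temp and .weather-desc. It shows the extraction pattern for Steps 4 and 5 and how to turn a temperature string such as "72°F" into a number; swap in the selectors you found in Step 3 and the document you fetched in Step 2.

```r
library(rvest)

# Made-up page; .current-temp and .weather-desc are hypothetical classes
weather_page <- minimal_html('
  <div class="now">
    <span class="current-temp">72&deg;F</span>
    <span class="weather-desc">Partly cloudy</span>
  </div>')

temp_text   <- weather_page %>% html_element(".current-temp") %>% html_text2()
description <- weather_page %>% html_element(".weather-desc") %>% html_text2()

# Keep only the (possibly negative, possibly decimal) number
temperature <- as.numeric(
  regmatches(temp_text, regexpr("-?[0-9]+(\\.[0-9]+)?", temp_text))
)

# Step 5: display the result
cat("Current conditions:", temperature, "degrees,", description, "\n")
```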

Step - 5: Displaying the Extracted Data

Now, let's display the scraped weather data for New York City.


In this real-life example, we successfully scraped weather data for New York City using the rvest package in R. By following these steps, you can apply web scraping techniques to collect valuable data from various websites for your specific use cases.

Advanced Techniques in Web Scraping in R

Web scraping in R opens up a world of possibilities for data extraction and analysis. In this section, we will explore advanced techniques to enhance your web scraping capabilities using R. Let's delve into some powerful techniques and packages that can take your web scraping projects to the next level.

Handling Dynamic Content

Many modern websites use JavaScript to dynamically load content. Traditional web scraping techniques may not capture this content directly. To overcome this limitation, we can use the RSelenium package in R. RSelenium allows you to automate web browsers and interact with dynamic elements. It provides a way to scrape content from web pages that rely on JavaScript rendering.
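A hedged sketch of the usual RSelenium workflow: start a driver, navigate to the page, hand the rendered page source to rvest, then shut everything down. It assumes RSelenium and Firefox are available (rsDriver() may download Selenium components on first use), and read_rendered_html() is a hypothetical helper. Because it is wrapped in a function, defining it launches nothing.

```r
# Hypothetical helper: return the JavaScript-rendered HTML of `url` as an
# xml_document that rvest functions can query
read_rendered_html <- function(url, wait_seconds = 3) {
  driver <- RSelenium::rsDriver(browser = "firefox", verbose = FALSE)
  client <- driver$client
  # Stop the browser and the Selenium server even if something fails
  on.exit({
    client$close()
    driver$server$stop()
  }, add = TRUE)

  client$navigate(url)
  Sys.sleep(wait_seconds)  # crude wait for scripts to finish loading content

  # getPageSource() returns a list whose first element is the HTML string
  rvest::read_html(client$getPageSource()[[1]])
}

# Usage (launches a browser):
# page <- read_rendered_html("https://example.com")
```

A fixed Sys.sleep() is the simplest option; for pages that load slowly, polling for a specific element is more reliable.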

Handling AJAX Requests

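AJAX (Asynchronous JavaScript and XML) is commonly used to update web page content dynamically. Because this data is loaded after the initial HTML, a plain read_html() call may miss it. Often the most direct fix is to find the underlying request in the Network tab of your browser's developer tools and call that endpoint directly with httr, since it typically returns JSON. The V8 package, which embeds a JavaScript engine in R, helps when the data is embedded in the page's own scripts: it can evaluate that JavaScript and return the results to R. V8 provides neither a browser DOM nor network access, however, so it cannot run a page's AJAX requests itself; for that, use a real browser through RSelenium.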
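One situation where V8 does help is a page that ships its data inside an inline script as a JavaScript object. The sketch below pulls such a script out with rvest and evaluates it in a V8 context to get an R list back. The page and the variable name payload are invented, and it assumes the script only declares data and does not touch browser objects such as window or document, which V8 does not provide.

```r
library(rvest)
library(V8)

# Made-up page embedding data in an inline script
page <- minimal_html('
  <script>var payload = {city: "Sample City", temps: [20, 21, 19]};</script>')

# html_text() keeps the script source as-is
script_src <- page %>% html_element("script") %>% html_text()

# Evaluate the script in a fresh JavaScript context and read the variable
ctx <- v8()
ctx$eval(script_src)
payload <- ctx$get("payload")

payload$city
mean(payload$temps)
```

ctx$get() converts the JavaScript value to R through JSON, so objects become named lists and arrays become vectors.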

Working with APIs

Some websites provide APIs (Application Programming Interfaces) that allow access to their data in a structured format. Instead of scraping web pages, you can directly access the data using APIs. The httr package in R allows you to interact with APIs and fetch data in JSON or XML formats.
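A hedged sketch of calling a JSON API with httr and parsing the body with jsonlite. fetch_json() is a hypothetical helper, and the endpoint in the commented usage line and the user-agent string are placeholders. The last lines run only the parsing step on a literal JSON string, so the block works without a network.

```r
library(httr)
library(jsonlite)

# Hypothetical helper: GET a JSON endpoint and return the parsed body
fetch_json <- function(url, query = list()) {
  resp <- GET(url, query = query, user_agent("my-r-scraper"))  # identify the client
  stop_for_status(resp)  # turn HTTP error codes into R errors
  body <- content(resp, as = "text", encoding = "UTF-8")
  fromJSON(body)
}

# Usage against a real API would look like:
# items <- fetch_json("https://api.example.com/items", query = list(page = 1))

# Offline demonstration of the parsing step on a literal JSON body
items <- fromJSON('[{"name": "alpha", "price": 1.5}, {"name": "beta", "price": 2}]')
items
```

fromJSON() simplifies an array of objects into a data frame, which is often exactly the shape needed for analysis.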

Session Management and Cookies

For web scraping tasks that require user authentication or session management, the httr package provides functions to handle cookies and sessions. You can log in to websites, maintain sessions, and scrape authenticated content.
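rvest, which is built on httr, wraps this cookie handling in session objects. The sketch below fills a login form with html_form() and html_form_set(); the form markup, field names, and credentials are invented. Submitting it and continuing within the same session (session_submit() and session_jump_to()) needs a live site, so that part is only a function definition; log_in_and_fetch() is a hypothetical helper.

```r
library(rvest)

# Made-up login form standing in for a real site's markup
login_page <- minimal_html('
  <form action="/login" method="post">
    <input type="text" name="username">
    <input type="password" name="password">
    <input type="submit" value="Log in">
  </form>')

form   <- html_form(html_element(login_page, "form"))
filled <- html_form_set(form, username = "alice", password = "secret")

# Hypothetical helper for a live site: cookies set at login are kept by the
# session and sent with later requests made through it
log_in_and_fetch <- function(login_url, target_url, username, password) {
  s <- session(login_url)
  login_form <- html_form(s)[[1]]
  login_form <- html_form_set(login_form, username = username, password = password)
  s <- session_submit(s, login_form)
  # The returned session can be queried with html_element()/html_elements()
  session_jump_to(s, target_url)
}
```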

Conclusion

- Web scraping in R is a powerful and versatile technique that allows data analysts and researchers to extract valuable information from websites efficiently.

- Using packages like rvest, httr, and Rcrawler, web scraping in R becomes accessible and user-friendly, enabling data extraction from diverse sources.

- The key features of Rcrawler, such as advanced web crawling, handling dynamic content, parallel crawling, and authentication support, make it a valuable asset for large-scale and complex web scraping projects.

- In real-life examples like weather data extraction or scraping top book lists, R's web scraping capabilities prove valuable for obtaining relevant insights and data-driven decision-making.

- Advanced techniques, including handling dynamic and AJAX-loaded content with RSelenium, evaluating embedded JavaScript with V8, API integration with httr, and session management, extend the possibilities of web scraping in R, allowing access to even the most modern websites.

- Responsible and ethical web scraping practices, respecting website terms of service, and adhering to legal guidelines are crucial for maintaining a positive web scraping experience and fostering trust in data analysis and research using web scraping in R.