XGBoost Algorithm in Machine Learning

Forget guessing games: XGBoost unleashes the power of teamwork through decision trees. Each tree learns from the mistakes of the ones before it, building a super-sleuth that cracks your data's mysteries and delivers rock-solid predictions. This article unlocks the secrets of XGBoost, the algorithm that takes you from "hmm, maybe?" to "boom, nailed it!" We'll explore its essentials, how it works its magic, and even see it in action.

Prerequisites:

-

Decision Trees: Imagine splitting your data with simple rules, like a choose-your-own-adventure book, to unlock predictions. To learn more about it, visit this link.

-

Bagging: Picture building diverse decision trees from random data parts, like having a team of detectives with different clues, leading to more accurate conclusions. To learn more about it, visit this link.

-

Random Forest: Think of a whole forest of randomly grown decision trees voting on the best answer, like a wise council, reducing individual biases and making predictions sturdier. To learn more about it, visit this link.

-

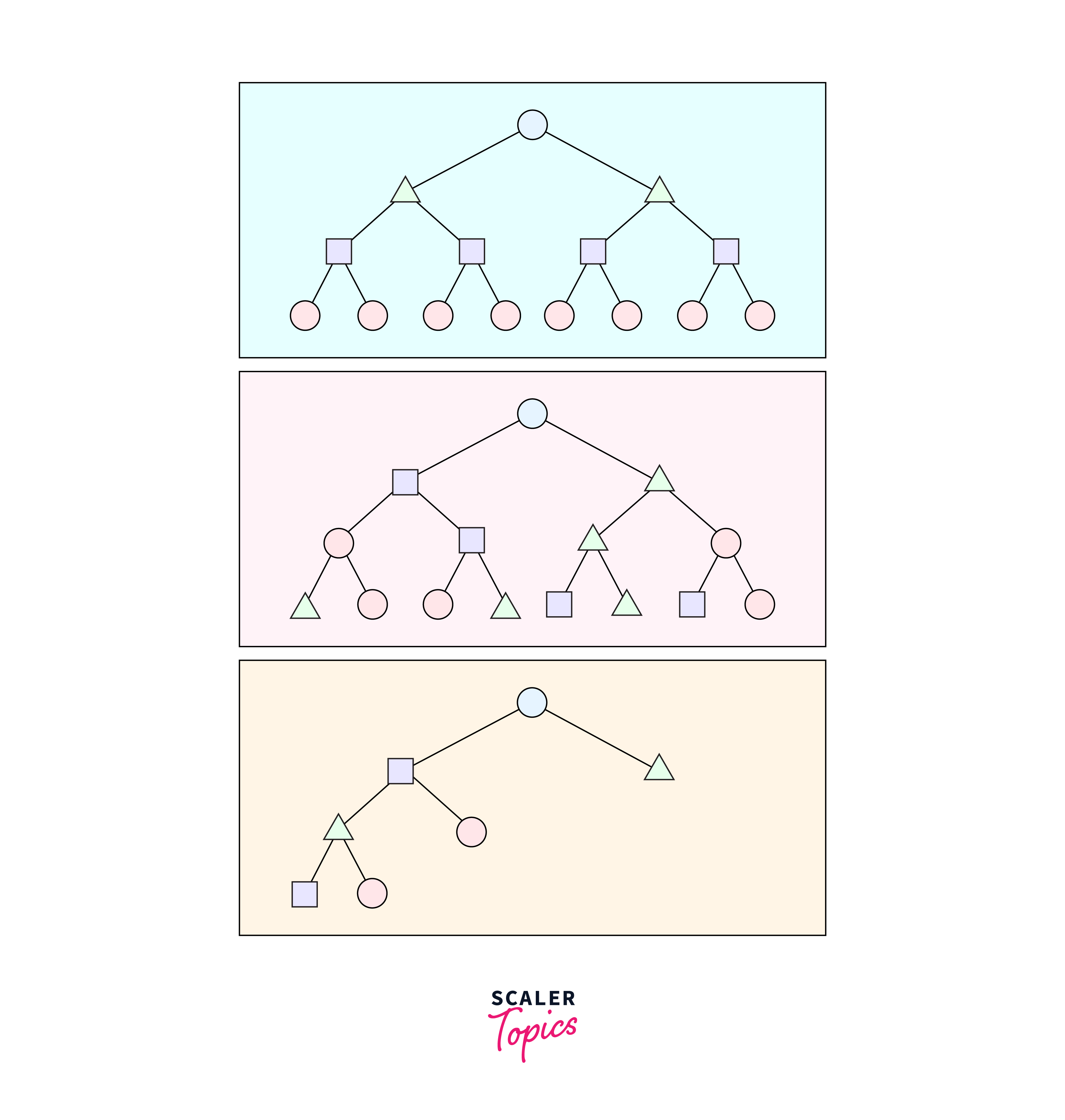

Boosting: Imagine decision trees learning like students, building on each other's mistakes to become super sleuths that crack your data's mysteries with laser-sharp predictions. To learn more about it, visit this link.

What is the XGBoost Algorithm in Machine Learning?

XGBoost (eXtreme Gradient Boosting) is an open-source library that implements gradient-boosted decision trees. Forget magic spells and crystal balls: XGBoost is the real deal when it comes to predicting the future (well, your data's future, at least). Imagine a team of super-sleuths, each armed with a magnifying glass and razor-sharp deduction skills. That's what XGBoost brings to the table, but instead of magnifying glasses, these sleuths have decision trees: small models that learn from your data, one step at a time.

Features

But what makes XGBoost stand out from the crowd? Let's dive into its superpowers:

- Teamwork makes the prediction dream work: XGBoost doesn't rely on a single sleuth to crack the case. It builds a whole team of them, adding decision trees one at a time, with each new tree trained to correct the errors the ensemble has made so far. This means XGBoost keeps getting smarter with every tree it adds during training, honing its skills to uncover hidden patterns and deliver rock-solid results.

- No more overfitting nightmares: Overfitting is like your sleuth getting stuck on a single clue and ignoring all the other evidence. XGBoost tackles this with built-in regularization: its objective penalizes the number of leaves in each tree and the size of the leaf weights (L2, and optionally L1), while shrinkage (the learning rate), depth limits, and row or column subsampling further rein in complexity. It's like having a wise mentor guiding the team, ensuring they don't get lost in the details and miss the bigger picture.

- Speed demon at your service: Time is money, and XGBoost knows it. The library parallelizes split finding across CPU cores, offers a fast histogram-based tree method, and supports GPU and out-of-core training, so it scales to large datasets. Imagine your team of data detectives working in hyper-drive, churning through information and delivering insights before you can say "abracadabra."

- Handling the unknown with grace: Missing data and sparse inputs can throw even the best sleuths off their game. But XGBoost embraces the unknown. Its sparsity-aware split finding learns, at every split, a default direction for missing values, so you can pass in data containing missing values without imputing them first. And because it is built from trees, it captures nonlinear relationships and feature interactions, keeping your predictions accurate even when things get messy.

- Customization is key: No two data mysteries are the same, and XGBoost understands that. It offers a toolbox of adjustable parameters (such as the number of trees, tree depth, learning rate, and regularization strengths) and accepts custom objectives and evaluation metrics, allowing you to tailor the algorithm to your specific needs. It's like giving your team of sleuths specialized equipment for each case, maximizing their chances of success.

With these superpowers at its disposal, XGBoost has become the go-to tool for data scientists across the globe. From predicting customer churn to identifying fraudulent transactions, XGBoost helps us unlock the secrets hidden within our data, making the future a little more predictable (and a lot more profitable).

Formula of XGBoost in Machine Learning

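Here are the key XGBoost formulas and the variables they use:

- Objective Function: $\mathcal{L} = \sum_{i=1}^{n} l(y_i, \hat{y}_i) + \sum_{k=1}^{K} \Omega(f_k)$, where $n$ is the number of training examples, $K$ is the number of trees, and $f_k$ is the $k$-th tree. The first sum measures how well the model fits the data; the second penalizes model complexity.

- Loss Function: $l(y_i, \hat{y}_i)$ measures how far the prediction $\hat{y}_i$ is from the true label $y_i$. A common choice for regression is the squared error $l(y_i, \hat{y}_i) = \tfrac{1}{2}(y_i - \hat{y}_i)^2$; for binary classification, the logistic loss is typical.

- Regularization Term: $\Omega(f) = \gamma T + \tfrac{1}{2}\lambda \sum_{j=1}^{T} w_j^2$, where $T$ is the number of leaves in tree $f$, $w_j$ is the output value (weight) of leaf $j$, $\gamma$ is the penalty for each additional leaf, and $\lambda$ is the L2 penalty on the leaf weights.

- Predicted Value: $\hat{y}_i = \hat{y}_i^{(0)} + \eta \sum_{k=1}^{K} f_k(x_i)$, where $x_i$ is the feature vector of example $i$, $\hat{y}_i^{(0)}$ is a constant initial prediction (the base score), and $\eta$ is the learning rate. For classification, $\hat{y}_i$ is a raw score that is then passed through a sigmoid or softmax.

- Update Rule: $\hat{y}_i^{(t)} = \hat{y}_i^{(t-1)} + \eta f_t(x_i)$, where $t$ is the boosting round and $f_t$ is the tree added in that round. Repeating this update for $t = 1, \dots, K$ yields the predicted value above.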

XGBoost minimizes this objective function during training, but not by optimizing all trees at once. Instead it works greedily: each boosting round adds the one new tree that most reduces the objective, choosing its splits and leaf weights from the first- and second-order derivatives (gradients and Hessians) of the loss. This is gradient boosting: each weak learner is added sequentially to correct the errors made by the existing ensemble.
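
To make the update rule concrete, here is a from-scratch sketch of one boosting round for the squared-error loss $l = \tfrac{1}{2}(y_i - \hat{y}_i)^2$, whose first and second derivatives are $g_i = \hat{y}_i - y_i$ and $h_i = 1$. It relies on two results from XGBoost's second-order approximation of the objective: the optimal weight of a leaf is $w^* = -G/(H + \lambda)$, and the gain of a split is $\tfrac{1}{2}\left[\frac{G_L^2}{H_L+\lambda} + \frac{G_R^2}{H_R+\lambda} - \frac{(G_L+G_R)^2}{H_L+H_R+\lambda}\right] - \gamma$, where $G$ and $H$ sum the $g_i$ and $h_i$ of the examples in a leaf. The helper names are hypothetical, and the sketch simplifies heavily (one numeric feature, depth-1 trees, exhaustive threshold search); it illustrates the math rather than reproducing the library's implementation.

```python
import numpy as np


def leaf_weight(g, h, reg_lambda):
    """Optimal leaf weight w* = -G / (H + lambda)."""
    return -g.sum() / (h.sum() + reg_lambda)


def leaf_score(g, h, reg_lambda):
    """Structure score G^2 / (H + lambda) used when scoring a split."""
    return g.sum() ** 2 / (h.sum() + reg_lambda)


def fit_stump(x, g, h, reg_lambda, gamma):
    """Try every threshold on one feature; return (gain, threshold, w_left, w_right)."""
    parent = leaf_score(g, h, reg_lambda)
    best = (-np.inf, None, 0.0, 0.0)
    for threshold in np.unique(x)[:-1]:
        left = x <= threshold
        right = ~left
        gain = 0.5 * (leaf_score(g[left], h[left], reg_lambda)
                      + leaf_score(g[right], h[right], reg_lambda)
                      - parent) - gamma
        if gain > best[0]:
            best = (gain, threshold,
                    leaf_weight(g[left], h[left], reg_lambda),
                    leaf_weight(g[right], h[right], reg_lambda))
    return best


def boosting_round(x, y, pred, eta=0.3, reg_lambda=1.0, gamma=0.0):
    """One round of y_hat(t) = y_hat(t-1) + eta * f_t(x) for squared-error loss."""
    g = pred - y           # first derivative of 0.5 * (y - pred)^2
    h = np.ones_like(y)    # second derivative of the same loss
    gain, threshold, w_left, w_right = fit_stump(x, g, h, reg_lambda, gamma)
    if gain <= 0:
        # No split is worth its gamma penalty, so the new tree is a single leaf.
        tree_output = np.full_like(y, leaf_weight(g, h, reg_lambda))
    else:
        tree_output = np.where(x <= threshold, w_left, w_right)
    return pred + eta * tree_output, gain


x = np.array([1.0, 2.0, 3.0, 4.0])
y = np.array([1.0, 1.0, 3.0, 3.0])
pred, gain = boosting_round(x, y, np.zeros_like(y))
print(pred, gain)
```

On this toy data, starting from predictions of 0, the best split is x <= 2 with leaf weights 2/3 and 2, so after shrinkage by eta = 0.3 the predictions move to [0.2, 0.2, 0.6, 0.6]. Raising gamma to 1.0 makes that split's gain negative, and the round falls back to a single leaf.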

Implementation of XGBoost in Machine Learning

Implementing XGBoost in machine learning typically involves the following steps:

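- Install XGBoost Library: Ensure that you have the XGBoost library installed. You can install it with a package manager such as pip (pip install xgboost).

- Import Libraries: Import the necessary libraries (xgboost, plus helpers for data handling and evaluation such as pandas and scikit-learn) in your Python script or Jupyter notebook.

- Load and Preprocess Data: Load your dataset and preprocess it. XGBoost works with numerical features, so encode categorical columns (for example, with one-hot encoding) before training; missing values can be left as NaN, since XGBoost handles them natively.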

- Split Data into Training and Testing Sets: Split your dataset into training and testing sets to evaluate the model's performance.

- Define and Train the XGBoost Model: Define an XGBoost model and train it on the training data.

Note: For regression tasks, you might use objective='reg:squarederror'.

- Make Predictions: Use the trained model to make predictions on the testing set.

- Evaluate Model Performance: Evaluate the model's performance using appropriate metrics.

Examples of XGBoost in Machine Learning

In this section, we walk through three classic examples that demonstrate the use of XGBoost in machine learning:

Example 1: Binary Classification

Example 2: Multiclass Classification

Example 3: Regression

These examples cover binary classification, multiclass classification, and regression tasks using XGBoost. Adjust the datasets and parameters based on your specific use case and data.

Advantages of XGBoost in Machine Learning

- XGBoost excels in high-performance machine learning, parallelizing split finding within each tree across CPU cores for faster training on large datasets. It also incorporates regularization (penalties on tree size and leaf weights) for better model generalization.

- XGBoost handles missing data natively: rather than imputing values, it learns a default branch direction for missing entries at each split. This simplifies data preparation and makes it versatile across diverse datasets.

- XGBoost reports feature importance scores, which support informed decisions about feature selection. Combined with a selection step, these scores can be used to identify and prune less important features, streamlining the modeling process.

- XGBoost's versatility covers classification, regression, and ranking tasks, and its customizable hyperparameters let practitioners tailor models to each dataset's characteristics.

- Widely adopted in the machine learning community, XGBoost benefits from active maintenance, extensive documentation, and community support, which make it easier to use effectively across diverse projects.

FAQs

Q. What sets XGBoost apart in machine learning?

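A. XGBoost is a gradient boosting library: instead of relying on a single model, it builds a team of decision trees sequentially, each one trained to correct the mistakes of the trees before it. What sets it apart from plain gradient boosting is its regularized objective, native handling of missing values, and heavily optimized, parallel training. This teamwork enhances predictive accuracy and helps uncover hidden patterns.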

Q. How does XGBoost handle overfitting?

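A. XGBoost fights overfitting with built-in penalties: its objective charges a cost for every extra leaf (the gamma parameter) and for large leaf weights (L2 and optional L1 penalties), while shrinkage, depth limits, subsampling, and early stopping give further control. These keep the model from fixating on specific clues, much like a wise mentor keeping the team focused on the bigger picture.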

Q. What makes XGBoost a speed demon in machine learning?

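A. XGBoost is engineered for fast training, even on large datasets: it parallelizes split finding across CPU cores, offers histogram-based split finding, and supports GPU and out-of-core training. This efficiency matters in time-sensitive scenarios, letting data detectives (models) work in hyper-drive and deliver insights rapidly.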

Conclusion

In conclusion, the XGBoost algorithm stands as a powerhouse in the realm of machine learning, offering a formidable arsenal of capabilities that set it apart. Here are three key takeaways:

1. Collaborative Predictions Through Teamwork: XGBoost's core strength lies in its collaborative approach. By employing a team of decision trees, each learning from the mistakes of its predecessors, the algorithm evolves into a master investigator. This teamwork enhances predictive accuracy, unlocking hidden patterns and delivering robust results.

2. Efficiency, Versatility, and Customization: XGBoost doesn't just stop at predictive prowess; it excels in efficiency, versatility, and customization. Optimized for rapid training, even with extensive datasets, the algorithm proves itself as a speed demon. Its robustness in handling missing data, coupled with feature importance insights, simplifies the modeling process. Customization, facilitated by a toolbox of adjustable parameters, ensures adaptability to diverse data mysteries.

3. Widespread Adoption and Community Support: XGBoost has earned its reputation as a go-to tool for data scientists globally. Its high performance, handling of missing values, feature selection capabilities, and community support contribute to its wide adoption. Actively maintained and backed by a vibrant user community, XGBoost remains a reliable choice for various machine learning tasks.